Generative AI is a form of artificial intelligence (AI) that is designed to generate content, including text, images, video, and music. In today’s business landscape, harnessing the potential of generative AI has become essential to remain competitive.

Foundation models are a form of generative AI. They generate output from one or more inputs (prompts) in the form of human language instructions. Foundation models are designed to learn the underlying patterns and relationships in language and use this knowledge to perform several tasks, such as generating text, translating languages, and answering questions. Large language models (LLMs) are large deep learning models that are pre-trained on vast amounts of data. LLMs are flexible and can be used for a variety of tasks, including generating text, chatbots, text summarization, image generation, and natural language processing capabilities, such as answering questions. LLMs have the potential to disrupt content creation and the way people use search engines and virtual assistants.

Because organizations store a large percentage of their data in relational databases, there is a clear impetus to augment these datasets using generative AI foundation models to elevate end-user experiences and create novel products. In this post, we cover how to build a generative AI-powered agent assistance app to summarize case notes using Amazon Aurora MySQL-Compatible Edition and foundation models in Amazon SageMaker JumpStart.

Case summarization is a natural language processing (NLP) task that involves condensing large amounts of text data, such as customer support conversations or service tickets, into concise and meaningful summaries. Customer support agents spend considerable time reviewing the case notes before they can service a customer, and the wait time is longer when the support case is handed from one agent to another. In this post, we discuss how Amazon Aurora can assist customer support agents by providing a summary of case notes and identify customer sentiment based on previous conversations. The proposed solution helps customer support agents respond faster to customer queries and improve customer experience.

We showcase two scenarios showing how Aurora integrates with other AWS ML services to help you build generative AI applications to augment the data stored in your relational database:

First, we explain how to summarize the case notes stored in the Aurora database using the machine learning (ML) integration capabilities of Aurora to invoke foundation models hosted in SageMaker Jumpstart.

Next, we explain how to analyze sentiment based on the case notes and the case feedback stored in Aurora. We use the Amazon Aurora machine learning (Aurora ML) integration with Amazon Comprehend, which uses natural language processing (NLP) to gather nuanced sentiment insights from textual data.

This post provides insights on how you can integrate Aurora with SageMaker Jumpstart and Amazon Comprehend to produce actionable insights, enhance data quality, and provide a better experience to users.

Solution overview

The proposed solution is based on the following key components:

Aurora – Aurora is a relational database management system (RDBMS) built for the cloud with MySQL and PostgreSQL compatibility. Aurora gives you the performance and availability of commercial-grade databases at one-tenth the cost. Aurora ML enables you to call a wide variety of ML algorithms, including ML-based predictions, generative AI, and sentiment analysis, via the familiar SQL programming language. You do not need to have prior ML experience to use Aurora ML. Aurora ML provides simple, optimized, and secure integration between Aurora and AWS ML services without having to build custom integrations or move data around. Aurora calls SageMaker for a wide variety of ML algorithms, including generative AI or Amazon Comprehend for sentiment analysis, so your application doesn’t need to call these services directly.

Amazon Comprehend – Amazon Comprehend is an NLP service that uses ML to find insights in text.

SageMaker – Amazon SageMaker provides you with the ability to build, train, and deploy customer ML models quickly.

SageMaker JumpStart – SageMaker JumpStart offers an ML hub to explore, train, and deploy a wide selection of publicly available foundation models.

Our case summarization use case focuses on efficiently summarizing key insights, issues, or resolutions present in case notes, making it easier for support teams, managers, and stakeholders to quickly understand and act upon the information. This post explains how to generate case summaries one at a time or in batches. Let’s look into the pros and cons of both approaches:

On-demand summarization – This solution summarizes prior customer support conversations when the same customer opens a new case or interacts with support. The customer support agent can request the summary of the case notes in real time and a concise summary is generated in a few seconds. This enables the agent to respond quickly and more appropriately with a better understanding of the customer sentiment.

Batch summarization – This solution summarizes large volumes of historical data or pre-recorded conversations in batches from the Aurora database on a scheduled basis. The summary results are stored back in the Aurora database so that query results are available when needed with the fastest possible response time. The batch processing capability offers additional benefits like higher performance and lower cost because it will process a large volume of information in a single call to the LLM rather than multiple calls done as part of real-time invocation. Because the responses are pre-computed during off-peak hours and are readily available in the database, this approach removes runtime dependency on the ML APIs and provides faster response times to customer support agents requesting a case summary.

You can choose the design pattern based on your business requirements and operational needs. These techniques can be adapted to various scenarios in customer support, service ticket management, and other text-based summarization use cases.

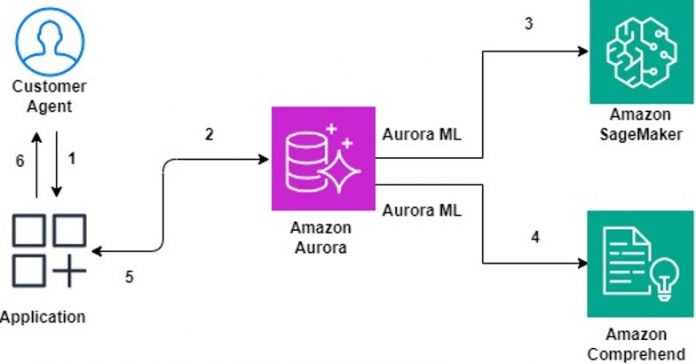

The following solution architecture shows the on-demand (real-time) technique for generating a summary of the case notes.

Let’s explore how each component contributes to the solution:

The customer support agent (referred to as the user) wants to view a summary of the incoming support case and generate a sentiment based on the case notes and the feedback provided by the user. In the front-end application, the user selects or enters the relevant case ID and chooses SageMaker JumpStart Summary.

The application runs a SQL query on Aurora that invokes an Aurora ML function by providing the case notes and additional details for the selected case ID stored in Aurora.

Aurora calls the SageMaker endpoint to generate a summary of the case notes using the AI21 Summarize third-party model to perform case summarization.

The user then requests a sentiment analysis. Aurora ML generates the sentiment based on the case notes and feedback by calling Amazon Comprehend.

In Steps 5 and 6, Aurora returns the summarized case information and sentiment analysis as an appropriate response to the user via the front-end application.

You can find the solution in the GitHub repository.

Prerequisites

Complete the following prerequisite steps:

Set up an Aurora cluster in a private subnet. Aurora ML is supported for Amazon Aurora MySQL 2.07 and higher and PostgreSQL 11.9 and higher. Refer to Aurora machine learning for a consolidated list of Aurora ML support for Amazon Aurora MySQL and Amazon Aurora PostgreSQL in each Region.

Set up an Amazon Elastic Compute Cloud (Amazon EC2) instance in a public subnet, which will act as a front end and can access for database. For instructions, refer to Tutorial: Get started with Amazon EC2 Linux instances.

Create an AWS Secrets Manager database secret for the application to access Aurora. For instructions, see Create an AWS Secrets Manager database secret.

The EC2 instance will need access to the Aurora cluster and Secrets Manager. Refer to Creating a role to delegate permissions to an AWS service for the steps to create an AWS Identity and Access Management (IAM) role and attach specific policies granting access to your Aurora cluster and Secrets Manager.

Create a SageMaker domain.

To run this sample application, you need access to the SageMaker JumpStart AI21 Summarize foundation model. Request access to the foundation model via the SageMaker console.

This solution incurs costs. Refer to Amazon Aurora pricing, Amazon Comprehend pricing, and Amazon SageMaker pricing to learn more.

Set up SageMaker Jumpstart

Aurora ML supports invoking a SageMaker endpoint with the text or CSV content type. Because certain LLMs use other content types like application or JSON as input, we have to edit the default deployment during the setup to the format expected by the ML model. If we use a model from AWS Marketplace or a third-party model from AI21, Anthropic, or others, then we need to deploy a broker instance that converts the content type to the appropriate format expected by the model.

Deploy the AI21 Summarize endpoint

To deploy the AI21 Summarize endpoint using Amazon SageMaker Studio, complete the following steps:

On the SageMaker console, navigate to Jumpstart Foundation models.

Search for “AI21 Summarize” and choose View Model.

Choose Subscribe in the Model Details page.

Choose Open Notebook in Studio.

Specify the SageMaker Studio details and choose Open.

Run all the cells in the notebook to deploy a model endpoint named summarize.

Deploy the broker endpoint

Deploy the broker endpoint with the following steps:

Open thenotebookin SageMaker Studio.

Run the cell in the notebook.

This will create an endpoint named auroramlsum.

Note the endpoint Amazon Resource Name (ARN).

Set up Aurora ML

Complete the following steps to set up Aurora ML:

Create two IAM roles to provide Aurora access to the SageMaker endpoint and Amazon Comprehend.

On the Connectivity & security tab of the Aurora cluster, choose Manage IAM Roles to assign the IAM roles to the Aurora cluster.

Update the parameters aws_default_sagemaker_role for SageMaker and aws_default_comprehend_role for Amazon Comprehend using the DB cluster parameter group in the Configuration section of the Aurora cluster.

Grant your database users access to Aurora ML. For instructions, refer to Granting database users access to Aurora machine learning.

Follow the steps in Setting up your Aurora MySQL DB cluster to use SageMaker and Setting up your Aurora MySQL DB cluster to use Amazon Comprehend to access the SageMaker ARN endpoint and Amazon Comprehend, respectively.

Set up the database

We have generated some sample case summarization data. You can use your own dataset as required. If you are using your own dataset with different table names, make sure to change the table names in the SQL queries accordingly. In this section, we detail the steps to grant Amazon Aurora MySQL access to Amazon Simple Storage Service (Amazon S3), import the data, create an Aurora ML function, and run database queries to verify the Aurora ML integration.

Enable Amazon S3 access

Complete the following steps to grant Amazon Aurora MySQL access to Amazon S3:

Create an S3 bucket and upload the following folder to that bucket.

This folder contains the sample dataset.

Note the ARN of the S3 bucket.

Provide Amazon Aurora MySQL access to the S3 bucket using the ARN. For instructions, refer to Loading data into an Amazon Aurora MySQL DB cluster from text files in an Amazon S3 bucket.

Import data from Amazon S3

Complete the following steps to import your data:

Log in to your Amazon Aurora MySQL database.

Run SQL queries in your database to create the tables. The table definitions are provided in the GitHub file.

Use the following command to load the data from the S3 bucket (provide the bucket name and table name in the S3 endpoint):

You run the preceding command five times, once for each of the five tables (CaseSummarization, CaseOwnerDetails, CategoryTypeDetails, RequestorDetails, ServiceNameDetails) mentioned in the table definitions in Amazon Aurora MySQL.

To verify the data has been successfully loaded into the table, use the following command:

Create an Aurora ML function

Next, we create an Aurora function to call the SageMaker endpoint. In the following definition, we create an Aurora ML function and configure it with the SageMaker endpoint hosting the AI21 foundation model:

Verify the Aurora ML integrations

Use the following queries to verify that the Aurora ML integrations are set up correctly:

Summarize Case Notes in real time using AI21 Summarize Foundation Model deployed in Amazon SageMaker

Summarize using AI21 and also detect sentiment using Amazon Comprehend

Store summarized Case Notes using AI21 Summarize Foundation Model deployed in Amazon SageMaker

Deploy the application

The following GitHub folder creates a Streamlit application, which you can use as a front end to test the application. Complete the following steps to deploy the app:

Download the folder in your front-end EC2 instance.

On the Amazon EC2 console, attach the role created in the prerequisites that provides the EC2 access to Aurora and Secrets Manager.

Replace the database secret (created as prerequisite) in the code so that your application can fetch the database endpoint and credentials for your Aurora database.

Make sure Python 3.10 is installed on Amazon EC2. This Python version comes with pip3 installed by default.

Navigate to the folder and install the requirements by running the following command:

To run the application, run the following command:

Test real-time queries and batch queries using the Streamlit portal

Open the Streamlit portal URL from the output of the preceding command in a browser and complete the following steps to test the application on the portal:

Choose case ID CAS02 on the Select a Case ID drop-down menu.

Choose Fetch CaseSummarization Table Data to see the case details.

The Fetch All Data option joins the tables and displays the data for the same case.

Choose SagemakerJumpstart(AI21) Summary to see the AI-generated summary from the AI21 foundation model from SageMaker Jumpstart.

Choose Sentiment to see the overall sentiment for the case.

Choose All on the Select a Case ID menu and choose SagemakerJumpstart(AI21) Summary to see the AI-generated summary for all the cases generated by the AI21 foundation model.

Similarly, choose All on the Select a Case ID menu and choose Sentiment to see the overall sentiment for all the cases.

Key design considerations

The key design considerations for using Aurora ML are as follows:

Data residing in Aurora – Aurora ML is a suitable choice if your inference data is primarily stored within an Aurora database. Aurora ML integrates seamlessly with SageMaker, Amazon S3, and AWS Lambda to help augment data in Aurora with ML services.

Cost implications with SageMaker inference endpoints – Unlike invoking API-based LLMs, which are charged based on usage, the SageMaker inference endpoint configured in the Aurora ML function is always accessible when invoked and therefore is charged based on running inference instance hours. To cost-effectively manage your SageMaker inference endpoint, it’s recommended to create and tear down the SageMaker endpoint programmatically on a scheduled basis for batch summarization.

Clean up

Complete the following steps to clean up your resources:

Delete the Aurora database created as part of the prerequisites.

Terminate the EC2 instance that was hosting the Streamlit application.

Delete the SageMaker and broker endpoint created as part of earlier steps.

Delete the S3 bucket and the sample dataset copied as part of the database setup.

Conclusion

Businesses today want to enhance the data stored in their relational databases with generative AI foundation models to improve the end-user experience or build new products. In this post, we demonstrated how you can summarize the case notes stored in an Aurora database by using Aurora ML integration capabilities with SageMaker Jumpstart. We also showed how to detect customer sentiment by integrating Aurora with Amazon Comprehend.

The ability to invoke LLMs hosted on SageMaker as SQL functions using Aurora ML simplifies the learning curve in using LLMs while building generative AI applications. It provides straightforward, optimized, and secure integration between Aurora and AWS ML services without having to build custom integrations or move data around.

For more information about Aurora ML, see Amazon Aurora Machine Learning. To explore the latest foundation models provided as part of SageMaker JumpStart, refer to JumpStart Foundation Models.

About the Author

David John Chakram is a Principal Solutions Architect at AWS. He specializes in building data platforms and architecting seamless data ecosystems. With a profound passion for databases, data analytics, and machine learning, he excels at transforming complex data challenges into innovative solutions and driving businesses forward with data-driven insights.

Jeeri Deka is a Solution Architect II at AWS, dedicated to assisting businesses especially independent software vendors (ISVs) in their transformative cloud journeys. Specialising in modernising applications through Serverless and Container technologies, her developer background enables close collaboration with customers. She excels in crafting tailored MVPs and POCs, ensuring solutions align seamlessly with their unique needs.

Dhinesh Raja M is an Associate Solutions Architect at AWS, committed to collaborating closely with customers to offer guidance and technical support for their large-scale cloud initiatives on AWS. His current focus lies in guiding independent software vendors (ISVs) through their cloud adoption journey, with his area of depth being AI/ML, aiming to drive strategic business outcomes.

Read MoreAWS Database Blog