Generative AI applications driven by foundation models (FMs) are delivering significant business value to organizations in customer experience, productivity, process optimization, and innovation. However, adopting these FMs means addressing some key challenges, including output quality, data privacy, security, integration with organization data, cost, and the skills needed to deliver.

In this post, we explore different approaches you can take when building applications that use generative AI. With the rapid advancement of FMs, it’s an exciting time to harness their power, but also crucial to understand how to properly use them to achieve business outcomes. We provide an overview of key generative AI approaches, including prompt engineering, Retrieval Augmented Generation (RAG), and model customization. When applying these approaches, we discuss key considerations around potential hallucination, integration with enterprise data, output quality, and cost. By the end, you will have solid guidelines and a helpful flow chart for determining the best method to develop your own FM-powered applications, grounded in real-life examples. Whether creating a chatbot or summarization tool, you can shape powerful FMs to suit your needs.

Generative AI with AWS

The emergence of FMs is creating both opportunities and challenges for organizations looking to use these technologies. A key challenge is ensuring high-quality, coherent outputs that align with business needs, rather than hallucinations or false information. Organizations must also carefully manage data privacy and security risks that arise from processing proprietary data with FMs. The skills needed to properly integrate, customize, and validate FMs within existing systems and data are in short supply. Building large language models (LLMs) from scratch or customizing pre-trained models requires substantial compute resources, expert data scientists, and months of engineering work. The computational cost alone can easily run into the millions of dollars to train models with hundreds of billions of parameters on massive datasets using thousands of GPUs or TPUs. Beyond hardware, data cleaning and processing, model architecture design, hyperparameter tuning, and training pipeline development demand specialized machine learning (ML) skills. The end-to-end process is complex, time-consuming, and prohibitively expensive for most organizations without the requisite infrastructure and talent investment. Organizations that fail to adequately address these risks can face negative impacts to their brand reputation, customer trust, operations, and revenues.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon via a single API. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using AWS tools without having to manage any infrastructure. Amazon Bedrock is HIPAA eligible, and you can use Amazon Bedrock in compliance with the GDPR. With Amazon Bedrock, your content is not used to improve the base models and is not shared with third-party model providers. Your data in Amazon Bedrock is always encrypted in transit and at rest, and you can optionally encrypt resources using your own keys. You can use AWS PrivateLink with Amazon Bedrock to establish private connectivity between your FMs and your VPC without exposing your traffic to the internet. With Knowledge Bases for Amazon Bedrock, you can give FMs and agents contextual information from your company’s private data sources for RAG to deliver more relevant, accurate, and customized responses. You can privately customize FMs with your own data through a visual interface without writing any code. As a fully managed service, Amazon Bedrock offers a straightforward developer experience to work with a broad range of high-performing FMs.

Launched in 2017, Amazon SageMaker is a fully managed service that makes it straightforward to build, train, and deploy ML models. More and more customers are building their own FMs using SageMaker, including Stability AI, AI21 Labs, Hugging Face, Perplexity AI, Hippocratic AI, LG AI Research, and Technology Innovation Institute. To help you get started quickly, Amazon SageMaker JumpStart offers an ML hub where you can explore, train, and deploy a wide selection of public FMs, such as Mistral models, LightOn models, RedPajama, Mosaic MPT-7B, FLAN-T5/UL2, GPT-J-6B/Neox-20B, and Bloom/BloomZ, using purpose-built SageMaker tools such as experiments and pipelines.

Common generative AI approaches

In this section, we discuss common approaches to implement effective generative AI solutions. We explore popular prompt engineering techniques that allow you to achieve more complex and interesting tasks with FMs. We also discuss how techniques like RAG and model customization can further enhance FMs’ capabilities and overcome challenges like limited data and computational constraints. With the right technique, you can build powerful and impactful generative AI solutions.

Prompt engineering

Prompt engineering is the practice of carefully designing prompts to efficiently tap into the capabilities of FMs. It involves the use of prompts, which are short pieces of text that guide the model to generate more accurate and relevant responses. With prompt engineering, you can improve the performance of FMs and make them more effective for a variety of applications. In this section, we explore techniques like zero-shot and few-shot prompting, which rapidly adapt FMs to new tasks with no examples or just a few, and chain-of-thought prompting, which breaks down complex reasoning into intermediate steps. These methods demonstrate how prompt engineering can make FMs more effective on complex tasks without requiring model retraining.

Zero-shot prompting

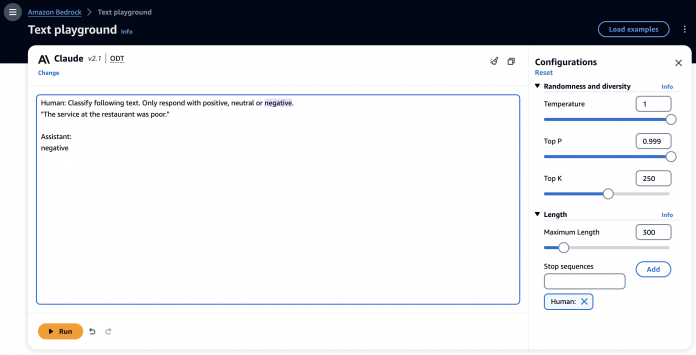

A zero-shot prompt technique requires FMs to generate an answer without providing any explicit examples of the desired behavior, relying solely on its pre-training. The following screenshot shows an example of a zero-shot prompt with the Anthropic Claude 2.1 model on the Amazon Bedrock console.

In these instructions, we didn’t provide any examples. However, the model can understand the task and generate appropriate output. Zero-shot prompts are the most straightforward prompt technique to begin with when evaluating an FM for your use case. However, although FMs are remarkable with zero-shot prompts, they may not always yield accurate or desired results for more complex tasks. When zero-shot prompts fall short, provide a few examples in the prompt (few-shot prompts).
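As a minimal sketch of the idea, the following Python builds a zero-shot prompt: an instruction plus the input, with no worked examples. The function name, the instruction wording, and the sample text are illustrative assumptions and are not taken from the screenshot. Sending the prompt to a model is intentionally left out.

```python
def build_zero_shot_prompt(instruction: str, text: str) -> str:
    """Return a prompt that states the task and the input, with no examples."""
    return f"{instruction}\n\nText: {text}\n\nAnswer:"


# Hypothetical task and input, for illustration only.
prompt = build_zero_shot_prompt(
    "Classify the sentiment of the following text as Positive, Negative, or Neutral.",
    "The checkout process was quick and the delivery arrived a day early.",
)
print(prompt)
```

The prompt ends with an open "Answer:" so the model's completion is the label itself.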

Few-shot prompting

The few-shot prompt technique allows FMs to do in-context learning from the examples in the prompts and perform the task more accurately. With just a few examples, you can rapidly adapt FMs to new tasks without large training sets and guide them towards the desired behavior. The following is an example of a few-shot prompt with the Cohere Command model on the Amazon Bedrock console.

In the preceding example, the FM was able to identify entities from the input text (reviews) and extract the associated sentiments. Few-shot prompts are an effective way to tackle complex tasks by providing a few examples of input-output pairs. For straightforward tasks, you can give one example (1-shot), whereas for more difficult tasks, you should provide three (3-shot) to five (5-shot) examples. Min et al. (2022) published findings about in-context learning that can enhance the performance of the few-shot prompting technique. You can use few-shot prompting for a variety of tasks, such as sentiment analysis, entity recognition, question answering, translation, and code generation.
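A few-shot prompt of this kind can also be assembled programmatically. The sketch below places a handful of input-output pairs ahead of the new input so the model can learn the pattern in context. The helper name, the "Review"/"Sentiment" labels, and the sample reviews are assumptions made for illustration.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Place labeled input-output pairs ahead of the new input."""
    parts = [instruction, ""]
    for review, label in examples:
        parts.append(f"Review: {review}")
        parts.append(f"Sentiment: {label}")
        parts.append("")
    # The final block is left open so the model completes the label.
    parts.append(f"Review: {query}")
    parts.append("Sentiment:")
    return "\n".join(parts)


# Hypothetical examples (3-shot), for illustration only.
examples = [
    ("The battery lasts all day.", "Positive"),
    ("The screen cracked within a week.", "Negative"),
    ("It arrived on Tuesday.", "Neutral"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review.",
    examples,
    "Setup was painless and support answered right away.",
)
print(prompt)
```

Keeping the examples in a list makes it easy to move between 1-shot, 3-shot, and 5-shot variants when you evaluate a task.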

Chain-of-thought prompting

Despite its potential, few-shot prompting has limitations, especially when dealing with complex reasoning tasks (such as arithmetic or logical tasks). These tasks require breaking the problem down into steps and then solving it. Wei et al. (2022) introduced the chain-of-thought (CoT) prompting technique to solve complex reasoning problems through intermediate reasoning steps. You can combine CoT with few-shot prompting to improve results on complex tasks. The following is an example of a reasoning task using few-shot CoT prompting with the Anthropic Claude 2 model on the Amazon Bedrock console.

Kojima et al. (2022) introduced the idea of zero-shot CoT, which uses FMs’ untapped zero-shot capabilities. Their research indicates that zero-shot CoT, using the same single-prompt template, significantly outperforms standard zero-shot prompting on diverse benchmark reasoning tasks. You can use zero-shot CoT prompting for simple reasoning tasks by adding “Let’s think step by step” to the original prompt.
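The two CoT variants differ only in how the prompt is built, which the following sketch illustrates. The "Q:"/"A:" layout, the function names, and the worked example are assumptions for illustration, not the exact templates used in the cited papers.

```python
from typing import List, Tuple

COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Append the zero-shot CoT trigger phrase to a plain question."""
    return f"Q: {question}\nA: {COT_TRIGGER}"


def few_shot_cot(worked_examples: List[Tuple[str, str, str]], question: str) -> str:
    """Prefix the question with examples whose answers show their reasoning."""
    blocks = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in worked_examples
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Hypothetical worked example; the arithmetic is 5 + 2 * 3 = 11.
worked = [
    (
        "A shelf holds 5 books. Two boxes of 3 books each are added. "
        "How many books are on the shelf?",
        "The shelf starts with 5 books. Two boxes of 3 books is 2 * 3 = 6 books. 5 + 6 = 11.",
        "11",
    )
]
question = (
    "A team has 4 laptops and receives 3 crates of 2 laptops each. "
    "How many laptops does it have?"
)
print(zero_shot_cot(question))
print()
print(few_shot_cot(worked, question))
```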

ReAct

CoT prompting can enhance FMs’ reasoning capabilities, but it still depends on the model’s internal knowledge and doesn’t consider any external knowledge base or environment to gather more information, which can lead to issues like hallucination. The ReAct (reasoning and acting) approach addresses this gap by extending CoT and allowing dynamic reasoning using an external environment (such as Wikipedia).
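The control flow behind ReAct can be sketched as a loop that alternates model output with tool calls. In the sketch below, the FM is replaced by a scripted stand-in and the external environment by a small dictionary, so the "Action: Name[argument]" format, the function names, and the data are all assumptions for illustration rather than a specific library's API.

```python
import re
from typing import Callable, Dict

# Toy stand-in for an external environment such as Wikipedia (hypothetical data).
KNOWLEDGE: Dict[str, str] = {
    "Amazon Bedrock": "Amazon Bedrock is a fully managed service that offers FMs via a single API.",
}


def search(query: str) -> str:
    return KNOWLEDGE.get(query, "No result found.")


def scripted_model(transcript: str) -> str:
    """Stand-in for an FM call: acts first, then answers once it has an observation."""
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAction: Search[Amazon Bedrock]"
    return "Thought: The observation answers the question.\nAction: Finish[A fully managed service for FMs]"


def react(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model reasoning with tool calls until the model finishes."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += "\n" + step
        match = re.search(r"Action: (\w+)\[(.*)\]", step)
        if match is None:
            break
        action, argument = match.groups()
        if action == "Finish":
            return argument
        if action == "Search":
            # Feed the tool result back so the next reasoning step can use it.
            transcript += f"\nObservation: {search(argument)}"
    return "No answer within the step limit."


answer = react("What is Amazon Bedrock?", scripted_model)
print(answer)
```

The step limit keeps the loop from running indefinitely if the model never emits a finishing action.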

Integration

FMs have the ability to comprehend questions and provide answers using their pre-trained knowledge. However, they lack the capacity to respond to queries requiring access to an organization’s private data or the ability to autonomously carry out tasks. RAG and agents are methods to connect these generative AI-powered applications to enterprise datasets, empowering them to give responses that account for organizational information and enable running actions based on requests.

Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) allows you to customize a model’s responses when you want the model to consider new knowledge or up-to-date information. When your data changes frequently, like inventory or pricing, it’s not practical to fine-tune and update the model while it’s serving user queries. To equip the FM with up-to-date proprietary information, organizations turn to RAG, a technique that involves fetching data from company data sources and enriching the prompt with that data to deliver more relevant and accurate responses.
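The fetch-then-enrich flow can be illustrated with a small, self-contained sketch. It uses word counts and cosine similarity as a toy substitute for an embedding model and vector store, and the documents, function names, and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical company documents, for illustration only.
DOCUMENTS = [
    "Employees accrue 1.5 days of paid leave per month.",
    "The cafeteria is open from 8 AM to 3 PM on weekdays.",
    "Laptops are refreshed every three years.",
]


def vectorize(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def augment(query: str, passages: List[str]) -> str:
    """Enrich the prompt with retrieved passages before sending it to the FM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}\nAnswer:"


query = "How many days of paid leave do employees accrue?"
passages = retrieve(query, DOCUMENTS)
print(augment(query, passages))
```

Because the documents are read at query time, updating them changes the answers without retraining the model.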

There are several use cases where RAG can help improve FM performance:

Question answering – RAG models help question answering applications locate and integrate information from documents or knowledge sources to generate high-quality answers. For example, a question answering application could retrieve passages about a topic before generating a summarizing answer.

Chatbots and conversational agents – RAG allows chatbots to access relevant information from large external knowledge sources. This makes the chatbot’s responses more knowledgeable and natural.

Writing assistance – RAG can suggest relevant content, facts, and talking points to help you write documents such as articles, reports, and emails more efficiently. The retrieved information provides useful context and ideas.

Summarization – RAG can find relevant source documents, passages, or facts to augment a summarization model’s understanding of a topic, allowing it to generate better summaries.

Creative writing and storytelling – RAG can pull plot ideas, characters, settings, and creative elements from existing stories to inspire AI story generation models. This makes the output more interesting and grounded.

Translation – RAG can find examples of how certain phrases are translated between languages. This provides context to the translation model, improving translation of ambiguous phrases.

Personalization – In chatbots and recommendation applications, RAG can pull personal context like past conversations, profile information, and preferences to make responses more personalized and relevant.

There are several advantages in using a RAG framework:

Reduced hallucinations – Retrieving relevant information helps ground the generated text in facts and real-world knowledge, rather than hallucinating text. This promotes more accurate, factual, and trustworthy responses.

Coverage – Retrieval allows an FM to cover a broader range of topics and scenarios beyond its training data by pulling in external information. This helps address limited coverage issues.

Efficiency – Retrieval lets the model focus its generation on the most relevant information, rather than generating everything from scratch. This improves efficiency and allows larger contexts to be used.

Safety – Retrieving the information from required and permitted data sources can improve governance and control over harmful and inaccurate content generation. This supports safer adoption.

Scalability – Indexing and retrieving from large corpora allows the approach to scale better compared to using the full corpus during generation. This enables you to adopt FMs in more resource-constrained environments.

RAG produces quality results because it augments the prompt with use case-specific context drawn directly from vectorized data stores. Compared to prompt engineering alone, it produces much better results with a much lower chance of hallucinations. You can build RAG-powered applications on your enterprise data using Amazon Kendra. RAG has higher complexity than prompt engineering because you need to have coding and architecture skills to implement this solution. However, Knowledge Bases for Amazon Bedrock provides a fully managed RAG experience and the most straightforward way to get started with RAG in Amazon Bedrock. Knowledge Bases for Amazon Bedrock automates the end-to-end RAG workflow, including ingestion, retrieval, and prompt augmentation, eliminating the need for you to write custom code to integrate data sources and manage queries. Session context management is built in so your app can support multi-turn conversations. Knowledge base responses come with source citations to improve transparency and minimize hallucinations. The most straightforward way to build a generative AI-powered assistant is by using Amazon Q, which has a built-in RAG system.

RAG has the highest degree of flexibility when it comes to changes in the architecture. You can change the embedding model, vector store, and FM independently with minimal-to-moderate impact on other components. To learn more about the RAG approach with Amazon OpenSearch Service and Amazon Bedrock, refer to Build scalable and serverless RAG workflows with a vector engine for Amazon OpenSearch Serverless and Amazon Bedrock Claude models. To learn about how to implement RAG with Amazon Kendra, refer to Harnessing the power of enterprise data with generative AI: Insights from Amazon Kendra, LangChain, and large language models.
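One way to picture this independence is to treat the embedding model, vector store, and FM as three interchangeable functions behind a small pipeline object. The sketch below is an assumption about how such a design could look, with stub components and hypothetical names, and it doesn't represent the interface of any AWS service.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class RagPipeline:
    """Three independent components; names and signatures are hypothetical."""
    embed: Callable[[str], List[float]]
    search: Callable[[List[float]], List[str]]
    generate: Callable[[str], str]

    def answer(self, question: str) -> str:
        passages = self.search(self.embed(question))
        prompt = "Context:\n" + "\n".join(passages) + f"\n\nQuestion: {question}"
        return self.generate(prompt)


# Stub components standing in for a real embedding model, vector store, and FM.
def length_embed(text: str) -> List[float]:
    return [float(len(text))]


def fixed_store(vector: List[float]) -> List[str]:
    return ["Leave requests are submitted through the HR portal."]


def echo_fm(prompt: str) -> str:
    return f"[model A] {prompt.splitlines()[1]}"


def other_fm(prompt: str) -> str:
    return f"[model B] {prompt.splitlines()[1]}"


pipeline = RagPipeline(embed=length_embed, search=fixed_store, generate=echo_fm)
swapped = replace(pipeline, generate=other_fm)  # only the FM changes

print(pipeline.answer("How do I request leave?"))
print(swapped.answer("How do I request leave?"))
```

In practice, changing the embedding model usually also means re-indexing the vector store, which is one reason the impact on other components is described as minimal to moderate rather than zero.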

Agents

FMs can understand and respond to queries based on their pre-trained knowledge. However, they are unable to complete any real-world tasks, like booking a flight or processing a purchase order, on their own. This is because such tasks require organization-specific data and workflows that typically need custom programming. Frameworks like LangChain and certain FMs such as Claude models provide function-calling capabilities to interact with APIs and tools. However, Agents for Amazon Bedrock, a new and fully managed AI capability from AWS, aims to make it more straightforward for developers to build applications using next-generation FMs. With just a few clicks, it can automatically break down tasks and generate the required orchestration logic, without needing manual coding. Agents can securely connect to company databases via APIs, ingest and structure the data for machine consumption, and augment it with contextual details to produce more accurate responses and fulfill requests. Because it handles integration and infrastructure, Agents for Amazon Bedrock allows you to fully harness generative AI for business use cases. Developers can now focus on their core applications rather than routine plumbing. The automated data processing and API calling also enable the FM to deliver updated, tailored answers and perform actual tasks by using proprietary knowledge.
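To make the function-calling idea concrete, the following sketch shows the kind of plumbing that such frameworks and managed agents take care of: the model emits a structured request, and application code dispatches it to a real function. The JSON shape, the tool name, and the order data are assumptions for illustration, and this is not the Agents for Amazon Bedrock API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical in-memory order data standing in for a company API.
ORDERS = {"PO-1001": "shipped", "PO-1002": "processing"}


def get_order_status(order_id: str) -> str:
    return ORDERS.get(order_id, "unknown")


# Registry of functions the model is allowed to call.
TOOLS: Dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def run_tool_call(model_output: str) -> Any:
    """Parse a JSON tool request emitted by the model and invoke the matching function."""
    request = json.loads(model_output)
    name = request["tool"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**request.get("arguments", {}))


# Stand-in for what an FM with function calling might emit for
# "What is the status of purchase order PO-1001?"
model_output = '{"tool": "get_order_status", "arguments": {"order_id": "PO-1001"}}'
result = run_tool_call(model_output)
print(result)
```

Restricting calls to an explicit registry means the model can only trigger actions you have chosen to expose.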

Model customization

Foundation models are extremely capable and enable some great applications, but what will help drive your business is generative AI that knows what’s important to your customers, your products, and your company. And that’s only possible when you supercharge models with your data. Data is the key to moving from generic applications to customized generative AI applications that create real value for your customers and your business.

In this section, we discuss different techniques and benefits of customizing your FMs. We cover how model customization involves further training and changing the weights of the model to enhance its performance.

Fine-tuning

Fine-tuning is the process of taking a pre-trained FM, such as Llama 2, and further training it on a downstream task with a dataset specific to that task. The pre-trained model provides general linguistic knowledge, and fine-tuning allows it to specialize and improve performance on a particular task like text classification, question answering, or text generation. With fine-tuning, you provide labeled datasets—which are annotated with additional context—to train the model on specific tasks. You can then adapt the model parameters for the specific task based on your business context.
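Labeled fine-tuning data is commonly stored as JSON Lines, with one input-output pair per line. The sketch below writes such a file body. The "prompt" and "completion" keys and the sample records are assumptions for illustration, so check the documentation of the service and model you use for the exact schema it expects.

```python
import json
from typing import Dict, List

# Hypothetical labeled examples; the "prompt"/"completion" keys are an assumed schema.
labeled = [
    {"prompt": "Classify the ticket: 'My invoice total is wrong.'", "completion": "Billing"},
    {"prompt": "Classify the ticket: 'The app crashes on login.'", "completion": "Technical"},
]


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize one JSON object per line, rejecting records with missing fields."""
    lines = []
    for record in records:
        if not record.get("prompt") or not record.get("completion"):
            raise ValueError(f"Incomplete record: {record}")
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


jsonl = to_jsonl(labeled)
print(jsonl, end="")
```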

You can implement fine-tuning on FMs with Amazon SageMaker JumpStart and Amazon Bedrock. For more details, refer to Deploy and fine-tune foundation models in Amazon SageMaker JumpStart with two lines of code and Customize models in Amazon Bedrock with your own data using fine-tuning and continued pre-training.

Continued pre-training

Continued pre-training in Amazon Bedrock enables you to train a previously trained model on additional data similar to its original data. It enables the model to gain more general linguistic knowledge rather than focus on a single application. With continued pre-training, you can use your unlabeled datasets, or raw data, to improve the accuracy of the foundation model for your domain by adjusting model parameters. For example, a healthcare company can continue to pre-train its model using medical journals, articles, and research papers to make it more knowledgeable on industry terminology. For more details, refer to Amazon Bedrock Developer Experience.
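In contrast to fine-tuning, the data here has no labels, so preparation is mostly a matter of splitting raw text into records. The following sketch chunks a passage and writes one record per line. The "input" key, the chunk size, and the sample sentence are assumptions for illustration, and the exact schema and size limits depend on the service and model.

```python
import json
from typing import List


def chunk_text(text: str, max_words: int) -> List[str]:
    """Split raw text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def to_unlabeled_jsonl(chunks: List[str]) -> str:
    """One JSON object per line; the "input" key is an assumed schema."""
    return "".join(json.dumps({"input": c}) + "\n" for c in chunks)


# Hypothetical raw domain text, with no labels attached.
raw = "Hypertension is a chronic condition in which arterial blood pressure is persistently elevated."
chunks = chunk_text(raw, max_words=6)
print(to_unlabeled_jsonl(chunks), end="")
```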

Benefits of model customization

Model customization has several advantages and can help organizations with the following:

Domain-specific adaptation – You can use a general-purpose FM, and then further train it on data from a specific domain (such as biomedical, legal, or financial). This adapts the model to that domain’s vocabulary, style, and so on.

Task-specific fine-tuning – You can take a pre-trained FM and fine-tune it on data for a specific task (such as sentiment analysis or question answering). This specializes the model for that particular task.

Personalization – You can customize an FM on an individual’s data (emails, texts, documents they’ve written) to adapt the model to their unique style. This can enable more personalized applications.

Low-resource language tuning – You can retrain only the top layers of a multilingual FM on a low-resource language to better adapt it to that language.

Fixing flaws – If certain unintended behaviors are discovered in a model, customizing on appropriate data can help update the model to reduce those flaws.

Model customization helps overcome the following FM adoption challenges:

Adaptation to new domains and tasks – FMs pre-trained on general text corpora often need to be fine-tuned on task-specific data to work well for downstream applications. Fine-tuning adapts the model to new domains or tasks it wasn’t originally trained on.

Overcoming bias – FMs may exhibit biases from their original training data. Customizing a model on new data can reduce unwanted biases in the model’s outputs.

Improving computational efficiency – Pre-trained FMs are often very large and computationally expensive. Model customization can allow downsizing the model by pruning unimportant parameters, making deployment more feasible.

Dealing with limited target data – In some cases, there is limited real-world data available for the target task. Model customization uses the pre-trained weights learned on larger datasets to overcome this data scarcity.

Improving task performance – Fine-tuning almost always improves performance on target tasks compared to using the original pre-trained weights. This optimization of the model for its intended use allows you to deploy FMs successfully in real applications.

Model customization has higher complexity than prompt engineering and RAG because the model’s weights and parameters are changed via tuning scripts, which requires data science and ML expertise. However, Amazon Bedrock makes it straightforward by providing you a managed experience to customize models with fine-tuning or continued pre-training. Model customization provides highly accurate results, with output quality comparable to RAG. Because you’re updating model weights on domain-specific data, the model produces more contextual responses. Compared to RAG, the quality might be marginally better depending on the use case. Therefore, it’s important to conduct a trade-off analysis between the two techniques. You can potentially implement RAG with a customized model.

Retraining or training from scratch

Building your own foundation AI model rather than solely using pre-trained public models allows for greater control, improved performance, and customization to your organization’s specific use cases and data. Investing in creating a tailored FM can provide better adaptability, upgrades, and control over capabilities. Distributed training enables the scalability needed to train very large FMs on massive datasets across many machines. This parallelization makes models with hundreds of billions of parameters trained on trillions of tokens feasible. Larger models have greater capacity to learn and generalize.

Training from scratch can produce high-quality results. Because the model is trained on use case-specific data from the start, hallucinations are rare and the accuracy of the output can be among the highest. However, if your dataset is constantly evolving, you can still run into hallucination issues. Training from scratch has the highest implementation complexity and cost. It requires the most effort because it involves collecting a vast amount of data, curating and processing it, and training a fairly large FM, which requires deep data science and ML expertise. This approach is time-consuming (it can typically take weeks to months).

You should consider training an FM from scratch when none of the other approaches work for you, and you have the ability to build an FM with a large amount of well-curated tokenized data, a substantial budget, and a team of highly skilled ML experts. AWS provides the most advanced cloud infrastructure to train and run LLMs and other FMs powered by GPUs and the purpose-built ML training chip, AWS Trainium, and ML inference accelerator, AWS Inferentia. For more details about training LLMs on SageMaker, refer to Training large language models on Amazon SageMaker: Best practices and SageMaker HyperPod.

Selecting the right approach for developing generative AI applications

When developing generative AI applications, organizations must carefully consider several key factors before selecting the most suitable model to meet their needs. A variety of aspects should be considered, such as cost (to ensure the selected model aligns with budget constraints), quality (to deliver coherent and factually accurate output), seamless integration with current enterprise platforms and workflows, and the risk of hallucinations (generating false information). With many options available, taking the time to thoroughly evaluate these aspects will help organizations choose the generative AI model that best serves their specific requirements and priorities. You should examine the following factors closely:

Integration with enterprise systems – For FMs to be truly useful in an enterprise context, they need to integrate and interoperate with existing business systems and workflows. This could involve accessing data from databases, enterprise resource planning (ERP), and customer relationship management (CRM), as well as triggering actions and workflows. Without proper integration, the FM risks being an isolated tool. Enterprise systems like ERP contain key business data (customers, products, orders). The FM needs to be connected to these systems to use enterprise data rather than rely on its own pre-trained knowledge, which may be inaccurate or outdated. This ensures accuracy and a single source of truth.

Hallucinations – Hallucinations are when an AI application generates false information that appears factual. These need to be carefully addressed before FMs are widely adopted. For example, a medical chatbot designed to provide diagnosis suggestions could hallucinate details about a patient’s symptoms or medical history, leading it to propose an inaccurate diagnosis. Preventing harmful hallucinations like these through technical solutions and dataset curation will be critical to making sure these FMs can be trusted for sensitive applications like healthcare, finance, and legal. Thorough testing and transparency about an FM’s training data and remaining flaws will need to accompany deployments.

Skills and resources – The successful adoption of FMs will depend heavily on having the proper skills and resources to use the technology effectively. Organizations need employees with strong technical skills to properly implement, customize, and maintain FMs to suit their specific needs. They also require ample computational resources like advanced hardware and cloud computing capabilities to run complex FMs. For example, a marketing team wanting to use an FM to generate advertising copy and social media posts needs skilled engineers to integrate the system, creatives to provide prompts and assess output quality, and sufficient cloud computing power to deploy the model cost-effectively. Investing in developing expertise and technical infrastructure will enable organizations to gain real business value from applying FMs.

Output quality – The quality of the output produced by FMs will be critical in determining their adoption and use, particularly in consumer-facing applications like chatbots. If chatbots powered by FMs provide responses that are inaccurate, nonsensical, or inappropriate, users will quickly become frustrated and stop engaging with them. Therefore, companies looking to deploy chatbots need to rigorously test the FMs that drive them to ensure they consistently generate high-quality responses that are helpful, relevant, and appropriate to provide a good user experience. Output quality encompasses factors like relevance, accuracy, coherence, and appropriateness, which all contribute to overall user satisfaction and will make or break the adoption of FMs like those used for chatbots.

Cost – The high computational power required to train and run large AI models like FMs can incur substantial costs. Many organizations may lack the financial resources or cloud infrastructure necessary to use such massive models. Additionally, integrating and customizing FMs for specific use cases adds engineering costs. The considerable expenses required to use FMs could deter widespread adoption, especially among smaller companies and startups with limited budgets. Evaluating potential return on investment and weighing the costs vs. benefits of FMs is critical for organizations considering their application and utility. Cost-efficiency will likely be a deciding factor in determining if and how these powerful but resource-intensive models can be feasibly deployed.

Design decision

As we covered in this post, many different AI techniques are currently available, such as prompt engineering, RAG, and model customization. This wide range of choices makes it challenging for companies to determine the optimal approach for their particular use case. Selecting the right set of techniques depends on various factors, including access to external data sources, real-time data feeds, and the domain specificity of the intended application. To aid in identifying the most suitable technique based on the use case and considerations involved, we walk through the following flow chart, which outlines recommendations for matching specific needs and constraints with appropriate methods.

To gain a clear understanding, let’s go through the design decision flow chart using a few illustrative examples:

Enterprise search – An employee is looking to request leave from their organization. To provide a response aligned with the organization’s HR policies, the FM needs more context beyond its own knowledge and capabilities. Specifically, the FM requires access to external data sources that provide relevant HR guidelines and policies. Given this scenario of an employee request that requires referring to external domain-specific data, the recommended approach according to the flow chart is prompt engineering with RAG. RAG will help in providing the relevant data from the external data sources as context to the FM.

Enterprise search with organization-specific output – Suppose you have engineering drawings and you want to extract the bill of materials from them, formatting the output according to industry standards. To do this, you can use a technique that combines prompt engineering with RAG and a fine-tuned language model. The fine-tuned model would be trained to produce bills of materials when given engineering drawings as input. RAG helps find the most relevant engineering drawings from the organization’s data sources to feed in the context for the FM. Overall, this approach extracts bills of materials from engineering drawings and structures the output appropriately for the engineering domain.

General search – Imagine you want to find the identity of the 30th President of the United States. You could use prompt engineering to get the answer from an FM. Because these models are trained on many data sources, they can often provide accurate responses to factual questions like this.

General search with recent events – If you want to determine the current stock price for Amazon, you can use the approach of prompt engineering with an agent. The agent will provide the FM with the most recent stock price so it can generate the factual response.
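The four examples above can be condensed into a small decision function. This is a sketch derived only from those examples, not a reproduction of the flow chart, and the field names and the order of the checks are assumptions.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    needs_enterprise_data: bool = False  # answer depends on the organization's documents
    needs_custom_output: bool = False    # output must follow a domain-specific format
    needs_live_data: bool = False        # answer depends on recent or real-time information


def recommend(use_case: UseCase) -> str:
    """Map use case needs to an approach, following the four examples in the text."""
    if use_case.needs_enterprise_data and use_case.needs_custom_output:
        return "prompt engineering + RAG + fine-tuned model"
    if use_case.needs_enterprise_data:
        return "prompt engineering + RAG"
    if use_case.needs_live_data:
        return "prompt engineering + agent"
    return "prompt engineering"


print(recommend(UseCase(needs_enterprise_data=True)))                            # HR leave policy
print(recommend(UseCase(needs_enterprise_data=True, needs_custom_output=True)))  # bill of materials
print(recommend(UseCase()))                                                      # 30th President
print(recommend(UseCase(needs_live_data=True)))                                  # current stock price
```

Real use cases often combine several needs, so treat the result as a starting point for evaluation rather than a final answer.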

Conclusion

Generative AI offers tremendous potential for organizations to drive innovation and boost productivity across a variety of applications. However, successfully adopting these emerging AI technologies requires addressing key considerations around integration, output quality, skills, costs, and potential risks like harmful hallucinations or security vulnerabilities. Organizations need to take a systematic approach to evaluating their use case requirements and constraints to determine the most appropriate techniques for adapting and applying FMs. As highlighted in this post, prompt engineering, RAG, and efficient model customization methods each have their own strengths and weaknesses that suit different scenarios. By mapping business needs to AI capabilities using a structured framework, organizations can overcome hurdles to implementation and start realizing benefits from FMs while also building guardrails to manage risks. With thoughtful planning grounded in real-world examples, businesses in every industry stand to unlock immense value from this new wave of generative AI. Learn about generative AI on AWS.

About the Authors

Jay Rao is a Principal Solutions Architect at AWS. He focuses on AI/ML technologies with a keen interest in Generative AI and Computer Vision. At AWS, he enjoys providing technical and strategic guidance to customers and helping them design and implement solutions that drive business outcomes. He is a book author (Computer Vision on AWS), regularly publishes blogs and code samples, and has delivered talks at tech conferences such as AWS re:Invent.

Babu Kariyaden Parambath is a Senior AI/ML Specialist at AWS. At AWS, he enjoys working with customers in helping them identify the right business use case with business value and solve it using AWS AI/ML solutions and services. Prior to joining AWS, Babu was an AI evangelist with 20 years of diverse industry experience delivering AI driven business value for customers.
