Amazon Relational Database Service (Amazon RDS) Custom for SQL Server is a managed database service for legacy, custom, and packaged applications that require access to the underlying operating system and database (DB) environment. It helps automate the setup, operation, and scaling of databases in the cloud while granting access to the database and underlying operating system to configure settings, install drivers, and enable native features to meet the dependent application’s requirements.

Typically, the default configurations and parameter settings for Amazon RDS Custom for SQL Server are ideal and optimal for running most workloads. However, you may want to consider implementing certain instance and database level parameters along with best practices for the underlying SQL server depending on your workload.

In this post, we discuss Microsoft SQL Server parameters and best practices to be implemented for improving the performance of mission critical workloads running on Amazon RDS Custom for SQL Server.

Database level parameters are settings that apply to a specific database. We review the following database level parameters:

Auto Growth

Auto Shrink

Auto Close

Page Verify

Virtual Log Files

Instance level parameters are settings that apply to the entire SQL server instance. We review the following instance level parameters:

Optimize for Ad hoc workloads

Backup Compression

Database Integrity

Index Defragmentation and Update Statistics

You should always baseline your database performance based on your own workload prior to implementing these recommendations in order to get accurate performance results.

Database level parameters and best practices

The following parameters and best practices are configured at the database level. They help in enhancing the performance of individual databases.

1. Auto Growth

The database level parameter enable autogrowth allows SQL Server engine to expand the size of the database file when it runs out of space. The auto-growth setting is configured for every database file associated with the database.

Too many auto growth events in the database can degrade the overall performance of the database by causing disk level fragmentation. Additionally, for every auto growth event, all activity on the database file is suspended until the growth operation is complete. It is always recommended to initialize the database files with the appropriate size when it is created.

The enable autogrowth parameter is not a replacement for adequate pre-sizing and proactive maintenance. Nonetheless, enable autogrowth is an option that should only be used for emergencies to avoid unexpected outages due to out-of-space errors as a result of huge database growth.

Furthermore, the auto growth value should be set appropriately. Smaller growth values tend to make the database fragmented, and will cause large inserts to take longer because the database must grow several times. For example, setting a database to auto grow in 64 MB increments isn’t appropriate for a database that grows by 1 GB per day.

Here are some growth rate recommendations for database files for typical database workloads.

64 MB for databases smaller than 1 GB

256 MB for databases between 1–5 GB

512 MB for databases larger than 5 GB

Before implementing these values for your databases, we recommend that you test the performance of your specific database workload to achieve optimal performance.

You can modify this parameter using T-SQL or SSMS.

The following T-SQL generates SQL statements to adjust file growth settings for databases in an instance based on their size, using previously recommended auto-growth increments. It first creates a temporary table, inserts data into it using the sp_helpdb stored procedure. Then, it selects database information from system view and generates the SQL statements. Finally, it drops the temporary table.

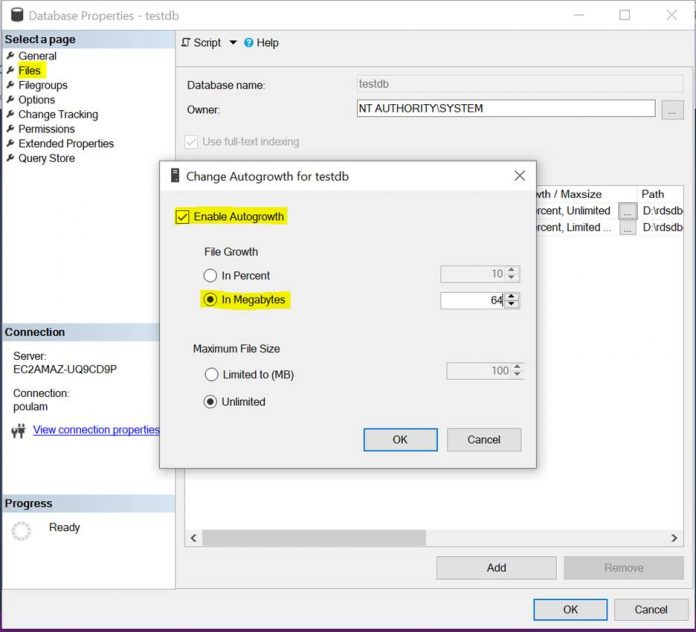

To change the setting using SSMS

Connect to SQL Server Instance

Select the database

Open the context menu (right-click), then select Properties

Select Files, then select the ellipsis (…) to open the Change Autogrowth dialog box

Under File Growth, select “In Megabytes” and input the appropriate value

2. Auto Shrink

The database level parameter auto_shrink helps to automatically shrink the database files and is used to conserve disk space.

By default, the auto_shrink parameter is not enabled. When this parameter is enabled, the database engine performs file-shrink operations on database files to reduce the total free space to 25%. Database shrinking operations can cause extensive waits and blocks; consume a lot of CPU, memory, and I/O resources; and increase fragmentation. In addition, you have no control over when it runs; it’s done automatically. More than likely, the freed disk space will be needed by the database again.

We recommend to keep the default setting and never turning on the auto_shrink parameter. If you have auto_shrink enabled on your database to conserve disk space, consider scaling up the storage appropriately to accommodate the growth of the database.

You can modify this parameter using T-SQL or SSMS.

The following T-SQL generates SQL statements to change the auto shrink setting for the database.

To change the default setting using SSMS

Connect to SQL Server Instance

Select the database

Open the context menu (right-click), then select Properties

Select Options

Under Automatic, select appropriate value for Auto Shrink

3. Auto Close

The database level parameter auto_close allows SQL Server engine to open and lock all files that are associated with the database when the database is first accessed.

By default, the auto_close parameter is not enabled. When this parameter is enabled and the last user connected to it closes the connection, the database is shutdown along with releasing file locks. The act of opening and closing the databases causes unnecessary overhead and performance degradation. Furthermore, auto_close also flushes the buffer and procedure cache for that database.

We recommend to keep the default setting and never turning on the auto_close parameter.

You can modify this parameter using T-SQL or SSMS.

The following T-SQL generates SQL statements to change the auto close setting for the database.

To change the default setting using SSMS

Connect to SQL Server Instance

Select the database

Open the context menu (right-click), then select Properties

Select Options

Under Automatic, select appropriate value for Auto Close

4. Page Verify

The database level parameter page_verify defines the SQL Server mechanism of verifying page consistency when the page is written to disk and when it is read again from disk. Based on the page verification method chosen, SQL Server discovers whether or not the data written on disk is good or corrupt. The page verify option has the following three configurable values:

CHECKSUM

TORN_PAGE_DETECTION

NONE

By default, the page_verify parameter is set to CHECKSUM. However, if databases have the page_verify parameter set to NONE or TORN_PAGE_DETECTION, recovering from storage corruption may not be an easy task.

We recommend to keep the default setting of the page_verify parameter to CHECKSUM. Enabling this option can incur a 1-2% CPU overhead for typical database workloads, but it makes it easier to recover from corruption.

You can modify this parameter using T-SQL or SSMS.

The following T-SQL generates SQL statements to change the page verify setting for the database.

To change the default setting using SSMS

Connect to SQL Server Instance

Select the database

Open the context menu (right-click), then select Properties

Select Options

Under Recovery, select appropriate value for Page Verify

5. VLF count

SQL Server internally divides every physical transaction log file into smaller sections called virtual log files (VLFs). SQL Server uses virtual log files as a unit of management, and they can be either active or inactive. A VLF is active when it stores the active portion of the transaction log, which contains the stream of log records required to keep the database consistent in the event of a transaction rollback or unexpected SQL Server shutdown. An inactive VLF contains the truncated (inactive) and unused parts of the transaction log.

The number of VLFs within the log files increases transaction log throughput, database startup recovery and restore times. The appropriate number of VLFs depends on the database size and needs to be evaluated after considering the acceptable growth and shrinkage of the transaction logfiles. You should avoid situations where the transaction log becomes overly fragmented and has a large number of small VLFs. Similarly, you should avoid situations where the log has too few but very large VLFs.

You can examine virtual log files with the sys.dm_db_log_info dynamic management view.

The following query lists the databases and their VLF count:

Having an excessive number of VLFs can negatively impact transaction log activities such as transaction log backup, database crash recovery and DML performance. We recommend fixing it by shrinking the transaction log to as small as possible and growing it back to an appropriate size:

Instance level parameters and best practices

The following parameters and best practices are configured at the instance level. They help in enhancing the performance at the instance level.

Amazon RDS Custom for SQL Server provides you with complete access to the host, enabling you to modify instance-level parameters directly, just like you would in a self-managed environment.

1. Optimize for Ad hoc workloads

The SQL Server database engine generates a query execution plan during processing and stores it in the plan cache for reuse. However, caching all plans by default can lead to unused plans occupying memory inefficiently. Those single use plans will exist in the plan cache, inefficiently consuming a portion of the server’s memory.

The instance-level parameter optimize for ad hoc workloads helps improve plan cache efficiency. By default, optimize for ad hoc workloads is not enabled at the instance level.

When enabled, this setting instructs SQL Server to only store a small compiled plan stub each time a query is run for the first time. SQL Server only stores the full compiled plan when that small compiled plan stub is referenced a second time on a subsequent run. It is recommended to enable this parameter to relieve memory pressure and improve overall performance when there are large number of ad hoc queries.

You can modify this parameter using T-SQL or SSMS.

To find the number of single-use cached plans using T-SQL, run the following query:

To view or change the optimize for ad hoc workloads parameter value for the instance, you can run the following queries:

To change the default setting using SSMS

Connect to SQL Server Instance

Select the instance

Open the context menu (right-click), then select Properties

Select Advanced

Under Miscellaneous, select appropriate value for Optimize for Ad hoc Workloads

2. Backup Compression

The instance level parameter backup compression default helps reduce the time and space required for database backups, thereby increasing the speed of database restore. By default, backup compression is not enabled.

The following factors should be taken into consideration while enabling this parameter:

There is some CPU overhead involved in backup compression. It varies based on several factors such as database size, backup type, database workload on the instance during backup process. In case of CPU contention, a compressed backup with low priority can be created in a session that has CPU usage limited by Resource Governor.

The actual compression rates achieved depend largely on the makeup of data within the database.

You can modify this parameter using T-SQL or SSMS.

You can use the following T-SQL to change or view or change the backup compression default parameter value for the instance:

Alternatively, you can override the default instance-level backup compression default parameter by using either WITH COMPRESSION or WITH NO_COMPRESSION in your T-SQL BACKUP statement. See the following code:

To change the default setting using SSMS

Connect to SQL Server Instance

Select the instance

Open the context menu (right-click), then select Properties

Select Database Settings

Configure the Compress Backup option

3. Database Integrity

DBCC CHECKDB is a utility for checking the logical and physical integrity of the objects in a database and should be run on a regular basis. The command checks database consistency to make sure that objects are stored correctly and don’t contain invalid values. We recommend checking the integrity of your databases at least once a week, preferably daily and before starting database backups. This helps ensure that there is no corruption within the database and a valid restoration can be done.

You can utilize the SQL Server native maintenance plans to create SQL Server Agent jobs that check the database integrity periodically.

4. Index Defragmentation and Update Statistics

Indexes in databases are special data structures associated with tables or views that help speed up database queries. Indexes, like any other storage objects, become fragmented over time through the course of normal insert, update, and delete activities. Identifying the level of fragmentation is a crucial component of a targeted maintenance plan.

A common database maintenance strategy involves an automated script that uses the sys.dm_db_index_physical_stats function to analyze index fragmentation levels and perform the appropriate action. For example, REORGANIZE if fragmentation is between 5–30% and REBUILD if greater than 30%.

SQL Server statistics are system objects that contain information aboutthe data distribution, such as uniqueness or selectivity of the data stored within columns and indexes. The Query Optimizer uses statistics to create query plans that improve query performance. SQL Server relies heavily on cost-based optimization, so accurate data distribution statistics are extremely important for the effective use of indexes. The optimizer’s reliance on statistics means that these need to be as accurate as possible or the optimizer could make poor choices for the run plans.

We recommend adopting a targeted approach using the native maintenance plans to create SQL Server Agent jobs that defragment the indexes and update statistics periodically. This approach looks at index fragmentation on an index-by-index basis and, depending on the severity of the fragmentation, rebuilds or reorganizes an index. It also only updates statistics that need updating. In the long run, this will use fewer resources than an approach where all indexes and statistics are rebuilt for databases.

Summary

In this post, we discussed how to configure both instance-level and database-level parameters along with best practices in Amazon RDS Custom for SQL Server to enhance the performance of your mission-critical database workloads.

If you have any comments or questions, share them in the comments section.

About the Authors

Poulami Maity is a Database Specialist Solutions Architect at Amazon Web Services. She works with AWS customers to help them migrate and modernize their existing databases to AWS cloud.

Sid Vantair is a Solutions Architect with AWS covering Strategic accounts. With over a decade of experience in working with relational databases, he thrives on resolving complex technical issues to overcome customer hurdles. Outside of work, he cherishes spending time with his family and fostering inquisitiveness in his children.

Read MoreAWS Database Blog