Many of our customers use Amazon Relational Database Service (Amazon RDS) for SQL Server for their large mission-critical SQL Server databases. Customers have asked for the optimal solution to safeguard their large databases on Amazon RDS for SQL Server to help them meet their requirements for Recovery Point Objective (RPO), which is the maximum acceptable data loss, and Recovery Time Objective (RTO), which is the maximum acceptable delay between the interruption of service and restoration of service.

One of the responsibilities of a database administrator is to protect your company’s data. Amazon RDS for SQL Server has multiple options for helping you back up and restore data. When putting together a backup strategy for large databases using Amazon RDS for SQL Server, you should consider the following:

What is the size of the database?

Do you want to restore individual databases or multiple databases on an RDS DB instance?

Does your backup and recovery strategy require in-region and cross-region protection?

Typically, you can choose from multiple strategies for safeguarding data on Amazon RDS for SQL Server, including using automated snapshots or manual snapshots, AWS Backup, native backup and restore, or a combination. However, managing large SQL Server databases (over 5 TB) can include many terabytes of information and can be cumbersome.

In this post, we walk you through two backup and restore options for large SQL Server databases hosted on Amazon RDS for SQL Server.

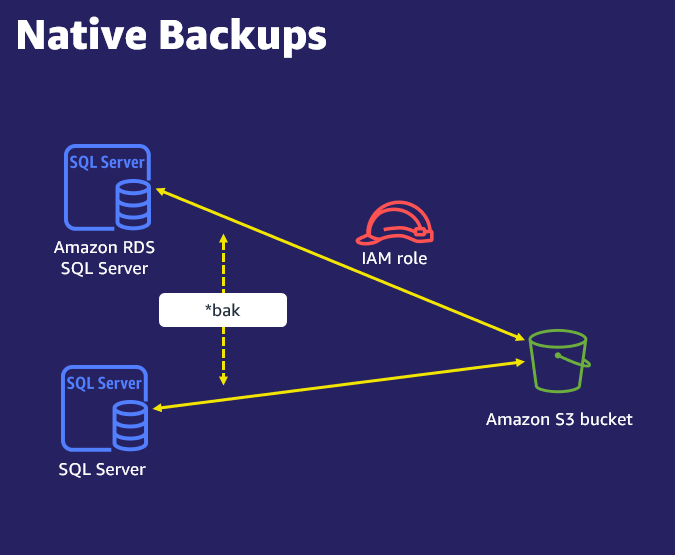

Native backup and restore approach

Amazon RDS supports native backup and restore for SQL Server databases. You can create a full backup of your RDS for SQL Server database and store the file in Amazon Simple Storage Service (Amazon S3). You can then restore the backup file to an existing RDS for SQL Server DB instance. You can also restore this backup file to an on-premises server or a different RDS for SQL Server DB instance. You can enable native SQL backup and restore for Amazon RDS for SQL Server by adding the SQL_Server_Backup_Restore option group.

The following diagram illustrates the architecture of a native backup and restore solution.

Prerequisites

Before you get started, you will need the following prerequisites:

An RDS for SQL Server instance

An S3 bucket with permissions to Amazon RDS for SQL Server

Back up your database to multiple backup files

Use the following command to perform a native backup for your RDS for SQL Server database:

@number_of_files indicate the number of files into which the backup is divided (chunked). The maximum number is 10.

Multifile backup is supported for both full and differential backups. If you enter a value of 1 or omit the parameter, a single backup file is created.

When you generate a backup of a large database, it’s advisable to generate the backup in multiple files. This process reduces the time to generate the backup. If your business requirement is to download the backup of a large database from Amazon S3, then downloading multiple backup files is faster than downloading one large backup file.

The following script backs up our sample RDS for SQL Server database (10 TB) using gp2 storage and r5b.4xlarge instance to a single backup file:

As we are using a single backup file, the backup failed with the following error because an individual Amazon S3 object can range in size from a minimum of 0 bytes to a maximum of 5TB.

“[2022-09-09 13:26:22.083] Task execution has started. [2022-09-09 13:26:22.300] Aborted the task because of a task failure or an overlap with your preferred backup window for RDS automated backup. [2022-09-09 13:26:22.303] Task has been aborted [2022-09-09 13:26:22.310] Cannot generate S3 chunks that are greater than 524288000 bytes to upload to S3.”

To overcome this limitation, we provided a different approach of backing up a database into multiple files. This method is also preferred to help reduce RPO and RTO.

We can run the same script with four backup files:

As shown in the following screenshot, the backup completed successfully.

We performed similar tests on different database sizes.

For example, use the following command to take a single file backup for a 5 TB database.

The following screenshot shows our results. The backup of 5TB database using one file completed in 602 mins.

Restore your database from multiple backup files

You can create and compile a simple script as a procedure and use it as a T-SQL tool for splitting large database backup files into several small files.

Note that in order to restore the database from the backup files, you need all the backup files for the restore database command.

The procedure works for SQL Server version 2014 and later.

Use the following restore command to restore a 10 TB database using multiple file backups. The asterisk (*) can be anywhere in the file_name part of the S3 ARN. The asterisk will be replaced by a series of alphanumeric strings in the generated files.

The following screenshot shows that restore completed successfully in 470 mins.

Use the following restore command to restore a 5 TB database using a single database file backup:

The following screenshot shows that restore completed successfully in 1008 mins.

Test results

The following table summarizes the testing that was performed with different database sizes on Amazon RDS for SQL Server with an r5b.4xlarge instance. We have observed that the time it takes to backup and restore a database size with 1TB and 5TB improves by using four multi-files compared to single file. Because the largest object that can be uploaded to S3 in a single file is 5TB, we cannot backup and restore a database size larger than 5TB (e.g. 10TB) in a single file, at this time.

Test Instance

Activity Type

Single File (minutes)

Four Files (minutes)

Performance Improvement (%)

1 TB (gp2, 3 TB storage allocated)

Backup

151

56

62.91

1 TB (gp2, 3 TB storage allocated)

Restore

200

57

71.50

1 TB (i01-40000 IOPS)

Backup

140

52

62.86

1 TB (i01-40000 IOPS)

Restore

154

54

64.93

5TB (gp2, 10 TB storage allocated)

Backup

602

235

60.96

5TB (gp2, 10 TB storage allocated)

Restore

1008

291

71.23

5 TB (io1-40000 IOPS)

Backup

601

212

64.73

5 TB (io1-40000 IOPS)

Restore

991

208

79.01

10TB (gp2,16TB storage allocated)

Backup

N/A

663

N/A

10TB (gp2,16TB storage allocated)

Restore

N/A

681

N/A

10 TB (io1-40000 IOPS)

Backup

N/A

590

N/A

10 TB (io1-40000 IOPS)

Restore

N/A

470

N/A

We performed a similar test by increasing the number of backup files to eight to see the impact:

For this specific instance, the results showed no difference in the timings compared to using four backup files with the instance size that we tested.

The optimal number of files depends on the instance size. For example, if the instance has 32vCPUs, splitting it into eight files would be faster than four files.

Snapshot approach

Amazon RDS for SQL Server allows you to take manual snapshots in addition to automated backups. These are storage volume snapshots of your DB instance, backing up the entire DB instance, not just individual databases. The time it takes for a snapshot backup depends on the size and class of your DB instance. In this test, we used an r5b.4xlarge instance.

One interesting observation we noticed is that an initial manual snapshot (backup) took around 11 hours for a 10 TB database with 16 TB storage allocated. But the restore from a snapshot took only 23 minutes. This can help reduce the RTO for your databases.

The first snapshot of a database instance contains the data for the full database instance. Subsequent snapshots of the same database instance are incremental, which means that only the data that has changed after your most recent snapshot is saved.

After the initial manual snapshot, the next snapshot took only 5 minutes and a restore time of 20 minutes. The snapshot restore approach can help you to reduce RPO and RTO for your other databases as well.

You can use the restored database instance as soon as its status is available. The DB instance continues to load data in the background. This is known as lazy loading. To help mitigate the effects of lazy loading on tables to which you require quick access, you can perform operations that involve full table scans, such as SELECT *. This allows the RDS for SQL Server instance to download the backed-up table data from Amazon S3.

The following table summarizes our findings for the Initial snapshot backup and Restores.

Test Instance

Activity Type

Duration (Minutes)

1 TB (gp2, 3 TB storage allocated)

Snapshot_Backup

109

1 TB (gp2, 3 TB storage allocated)

Snapshot_Restore

16

5 TB (gp2, 10 TB storage allocated)

Snapshot_Backup

480

5 TB (gp2, 10 TB storage allocated)

Snapshot_Restore

20

10 TB (gp2, 16 TB storage allocated)

Snapshot_Backup

635

10 TB (gp2, 16 TB storage allocated)

Snapshot_Restore

23

Clean up

When you’re finished using the resources in this post, clean up the AWS resources to avoid incurring unwanted charges. Specifically, delete the RDS for SQL Server instance and all objects from the S3 bucket used in this testing.

Conclusion

In this post, we covered two approaches on how to backup and restore a large SQL Server database hosted on Amazon RDS for SQL Server with minimal RTO and RPO. First, we demonstrated how you can optimize your backup and restore using SQL Server native backup to multiple files. This method can help you accelerate backup time and restore time when comparing to backing up to a single file. Then we showed you the manual snapshot approach and our findings from our performance testing results.

If you have any comments or questions, leave them in the comments section.

About the authors

Yogi Barot is Microsoft Specialist Principal Solutions Architect at AWS, she has 22 years of experience working with different Microsoft technologies, her specialty is in SQL Server and different database technologies. Yogi has in depth AWS knowledge and expertise in running Microsoft workload on AWS.

Vikas Babu Gali is a Sr. Specialist Solutions Architect, focusing on Microsoft Workloads at Amazon Web Services. As a native of India, Vikas enjoys playing Cricket and spending time with his family and friends outdoors.

Read MoreAWS Database Blog