Amazon SageMaker Pipelines is a continuous integration and continuous delivery (CI/CD) service designed for machine learning (ML) use cases. You can use it to create, automate, and manage end-to-end ML workflows. It addresses the challenge of orchestrating each step of an ML process, which otherwise requires considerable time, effort, and resources. To make it easier to get started, multiple templates are available that you can customize to your needs.

Fully managed image and video analysis services have also accelerated the adoption of computer vision solutions. AWS offers a pre-trained, fully managed AI service called Amazon Rekognition that you can integrate into computer vision applications through API calls, with no ML experience required. You provide an image to the Amazon Rekognition API, and it identifies objects according to pre-defined labels. You can also provide custom labels specific to your use case and build a customized computer vision model with little to no ML expertise.

In this post, we address a specific computer vision problem, skin lesion classification, and use Pipelines by customizing an existing template and tailoring it to this task. Accurate skin lesion classification can help with the early diagnosis of skin cancer. However, it’s a challenging task in the medical field, because different kinds of skin lesions look very similar. Pipelines lets us take advantage of a variety of existing models and algorithms and establish an end-to-end, production-ready pipeline with minimal time and effort.

Solution overview

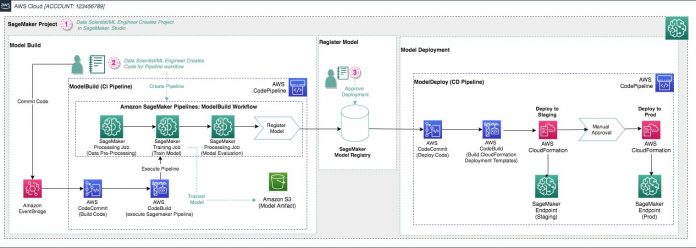

In this post, we build an end-to-end pipeline with Pipelines to classify dermatoscopic images of common pigmented skin lesions. We use the Amazon SageMaker Studio project template MLOps template for model building, training, and deployment, together with the code in the GitHub repository that accompanies this post. The preceding figure shows the resulting architecture.

For this pipeline, we use the HAM10000 (“Human Against Machine with 10000 training images”) dataset, which consists of 10,015 dermatoscopic images. The task at hand is multi-class image classification. The dataset covers seven of the most important diagnostic categories of pigmented lesions: actinic keratoses and intraepithelial carcinoma or Bowen’s disease (akiec), basal cell carcinoma (bcc), benign keratosis-like lesions (solar lentigines or seborrheic keratoses and lichen planus-like keratoses, bkl), dermatofibroma (df), melanoma (mel), melanocytic nevi (nv), and vascular lesions (angiomas, angiokeratomas, pyogenic granulomas, and hemorrhage, vasc).

For the model’s input, we use the RecordIO format. RecordIO is a compact format that packs image data together into a single file so it can be read sequentially, which makes training faster and more efficient. One of the challenges of using the HAM10000 dataset is class imbalance. The following table shows the class distribution.

| Class | Number of images |
|---|---|
| akiec | 327 |
| bcc | 514 |
| bkl | 1,099 |
| df | 115 |
| mel | 1,113 |
| nv | 6,705 |
| vasc | 142 |
| Total | 10,015 |
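To make the imbalance concrete, the following sketch uses the counts from the table to compute how many additional images each class would need to reach the size of the largest class (nv), and how many augmented variants per original image that implies. Using the majority-class size as the target is an assumption for illustration; the post only states that the classes end up with approximately the same number of images, and the helper name is hypothetical.

```python
import math

# Image counts per class, taken from the HAM10000 class-distribution table.
COUNTS = {"akiec": 327, "bcc": 514, "bkl": 1099, "df": 115,
          "mel": 1113, "nv": 6705, "vasc": 142}


def augmentation_plan(counts):
    """For each class, return (extra images needed, augmented variants per original)
    to bring it up to the size of the largest class (an illustrative target)."""
    target = max(counts.values())
    plan = {}
    for name, n in counts.items():
        extra = target - n
        # Variants to generate per original image, rounded up so the target is reached.
        variants_per_image = math.ceil(extra / n)
        plan[name] = (extra, variants_per_image)
    return plan
```

With these counts, df needs 6,590 extra images (up to 58 variants per original, because 6,590 / 115 ≈ 57.3), whereas nv needs none; nv is roughly 58 times larger than df.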

To address this imbalance, we augment the dataset with random transformations (such as cropping, flipping, mirroring, and rotating) so that all classes have approximately the same number of images.
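The post’s preprocessing implements these transformations with MXNet and OpenCV. Purely as a library-agnostic illustration, the following NumPy sketch applies a random horizontal flip (mirror), a random vertical flip, a random 90-degree rotation, and a random crop to an image array of shape (height, width, channels). The function name and the crop_fraction parameter are hypothetical, and this is not the code in the repository.

```python
import numpy as np


def random_augment(image, rng, crop_fraction=0.9):
    """Return a randomly transformed copy of an (H, W, C) image array.

    Illustrative NumPy stand-in for the MXNet/OpenCV transformations used in
    the post; crop_fraction is a hypothetical parameter.
    """
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip (mirror)
    if rng.random() < 0.5:
        out = out[::-1, :]  # vertical flip
    # Rotate by 0, 90, 180, or 270 degrees in the height/width plane.
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Random crop that keeps crop_fraction of each spatial dimension.
    h, w = out.shape[:2]
    ch, cw = max(1, int(h * crop_fraction)), max(1, int(w * crop_fraction))
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    return np.ascontiguousarray(out[top:top + ch, left:left + cw])
```

Because 90-degree rotations swap height and width for non-square images (HAM10000 images are not square), a real pipeline would typically resize each augmented image to a fixed shape afterward.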

Because this preprocessing step uses MXNet and OpenCV, it runs in a pre-built MXNet container image, and the remaining dependencies are installed from a requirements.txt file. If you want to create and use a custom image instead, refer to Create Amazon SageMaker projects with image building CI/CD pipelines.
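The preprocessing step also has to produce the RecordIO input described earlier. RecordIO files for the built-in image classification algorithm are commonly created with MXNet’s im2rec tool from a tab-separated .lst file whose three columns are the image index, the class label index, and the relative image path. The following sketch shows how such lines could be generated from (image_id, diagnosis code) pairs, for example taken from the HAM10000 metadata. The file naming (<image_id>.jpg), the label order, and the helper name are assumptions, not the code in the post’s preprocess.py.

```python
# Hypothetical sketch: build the tab-separated .lst lines that MXNet's im2rec
# tool reads (image index, class label index, relative image path).
# Assumes each image is stored as "<image_id>.jpg" and each record carries one
# of the seven HAM10000 diagnosis codes.
CLASSES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]
LABEL_INDEX = {name: index for index, name in enumerate(CLASSES)}


def make_lst_lines(records):
    """Return .lst lines for an iterable of (image_id, diagnosis_code) pairs."""
    lines = []
    for index, (image_id, dx) in enumerate(records):
        label = LABEL_INDEX[dx]  # raises KeyError for an unknown diagnosis code
        lines.append(f"{index}\t{label}\t{image_id}.jpg")
    return lines
```

For example, passing [("img_a", "bkl"), ("img_b", "vasc")] yields one line per image with labels 2 and 6; written to a file such as train.lst, these lines are the input im2rec packs into a .rec file. The alphabetical label order is an arbitrary choice in this sketch.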

For the training step, we use an estimator based on the Docker image of the SageMaker built-in image classification algorithm and set its parameters as follows:

For further details about the container image, refer to Image Classification Algorithm.
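The parameter values themselves are not reproduced in this section. As a hedged sketch only, the following Python snippet assembles a hyperparameter dictionary of the kind the built-in image classification algorithm accepts and checks that the two hyperparameters the algorithm requires, num_classes and num_training_samples, are set. Apart from num_classes = 7 for the seven HAM10000 categories, every value is a hypothetical placeholder rather than the authors’ setting, and the helper name is hypothetical too.

```python
# Hypothetical hyperparameters for the SageMaker built-in image classification
# algorithm. Names follow the algorithm's documented hyperparameters; values
# other than num_classes are illustrative placeholders, not the post's settings.
REQUIRED = ("num_classes", "num_training_samples")


def build_hyperparameters(num_training_samples, **overrides):
    params = {
        "num_classes": 7,                 # the seven HAM10000 diagnostic categories
        "num_training_samples": num_training_samples,
        "use_pretrained_model": 1,        # placeholder: start from pretrained weights
        "image_shape": "3,224,224",       # placeholder: channels,height,width
        "epochs": 10,                     # placeholder
        "mini_batch_size": 32,            # placeholder
    }
    params.update(overrides)
    missing = [key for key in REQUIRED if params.get(key) is None]
    if missing:
        raise ValueError(f"missing required hyperparameters: {missing}")
    # Training jobs receive hyperparameter values as strings.
    return {key: str(value) for key, value in params.items()}
```

With the SageMaker Python SDK, such a dictionary could be passed to an Estimator through set_hyperparameters(**hyperparameters).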

Create a Studio project

For detailed instructions on how to set up Studio, refer to Onboard to Amazon SageMaker Domain Using Quick setup. To create your project, complete the following steps:

In Studio, choose SageMaker resources, then choose Projects from the drop-down menu.

On the projects page, you can launch a pre-configured SageMaker MLOps template.

Choose MLOps template for model building, training, and deployment.

Choose Select project template.

Enter a project name and short description.

Choose Create project.

The project takes a few minutes to be created.

Prepare the dataset

To prepare the dataset, complete the following steps:

Go to Harvard Dataverse.

Choose Access Dataset and review the license (Creative Commons Attribution-NonCommercial 4.0 International Public License).

If you accept the license, choose Original Format Zip and download the ZIP file.

Create an Amazon Simple Storage Service (Amazon S3) bucket and choose a name starting with sagemaker (this allows SageMaker to access the bucket without any extra permissions).

As a security best practice, you can enable access logging and encryption on the bucket.

Upload dataverse_files.zip to the bucket.

Make a note of the bucket name and of the folder (prefix) where you stored dataverse_files.zip; you need them later.
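Before creating the bucket, you can check that the name you chose satisfies both the S3 naming rules and the sagemaker prefix convention described above. The following Python sketch checks only a subset of the S3 general purpose bucket naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit; no adjacent dots), so treat it as a quick sanity check, not a complete validator. The function name is hypothetical.

```python
import re

# Subset of the S3 general purpose bucket naming rules: 3-63 characters made of
# lowercase letters, digits, dots, and hyphens, starting and ending with a
# letter or digit.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_sagemaker_bucket_name(name):
    """Return True if name passes the basic checks above, has no adjacent dots,
    and starts with "sagemaker" as recommended in this post."""
    return (
        _BUCKET_NAME.fullmatch(name) is not None
        and ".." not in name
        and name.startswith("sagemaker")
    )
```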

Prepare for data preprocessing

Because we use MXNet and OpenCV in the preprocessing step, we use a pre-built MXNet Docker image and install the remaining dependencies from a requirements.txt file. To do so, copy requirements.txt to pipelines/skin in the sagemaker-<pipeline-name>-modelbuild repository. In addition, add the MANIFEST.in file at the same level as setup.py so that requirements.txt is included in the Python source distribution. For more information about MANIFEST.in, refer to Including files in source distributions with MANIFEST.in. Both files are available in the GitHub repository.

Change the Pipelines template

To update the Pipelines template, complete the following steps:

Create a folder inside the SageMaker default bucket (sagemaker-<region>-<account-id>).

Make sure the Studio execution role has access to the default bucket as well as the bucket containing the dataset.

From the list of projects, choose the one that you just created.

On the Repositories tab, choose the hyperlinks to clone the AWS CodeCommit repositories to your Studio instance.

Navigate to the pipelines directory inside the sagemaker-<pipeline-name>-modelbuild directory and rename the abalone directory to skin.

Open the codebuild-buildspec.yml file in the sagemaker-<pipeline-name>-modelbuild directory and modify the module path in the run-pipeline command (line 15) from run-pipeline --module-name pipelines.abalone.pipeline to the following:

Save the file.

Replace the files pipelines.py, preprocess.py, and evaluate.py in the pipelines directory with the files from the GitHub repository.

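Update the preprocess.py file (lines 183-186) with the name of the S3 bucket (skin_cancer_bucket) and the folder (skin_cancer_bucket_path) where you uploaded the dataverse_files.zip archive, as well as the archive’s file name without and with its extension (skin_cancer_files and skin_cancer_files_ext):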

```python
skin_cancer_bucket = "<bucket-name-containing-dataset>"
skin_cancer_bucket_path = "<prefix-to-dataset-inside-bucket>"
skin_cancer_files = "<dataset-file-name-without-extension>"
skin_cancer_files_ext = "<dataset-file-name-with-extension>"
```

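For example, if the dataset is stored under s3://monai-bucket-skin-cancer/skin_cancer_bucket_prefix/dataverse_files.zip, set skin_cancer_bucket to monai-bucket-skin-cancer, skin_cancer_bucket_path to skin_cancer_bucket_prefix, skin_cancer_files to dataverse_files, and skin_cancer_files_ext to dataverse_files.zip.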
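As an illustration of how these four values fit together, the following hypothetical sketch builds the object key and S3 URI from them, and downloads and extracts the archive with an S3 client such as boto3.client("s3"), whose download_file(Bucket, Key, Filename) method it relies on. The helper names and the extraction layout are assumptions; this is not the code in the repository’s preprocess.py.

```python
import os
import zipfile

# Example values matching the S3 path in this section; replace them with your own.
skin_cancer_bucket = "monai-bucket-skin-cancer"
skin_cancer_bucket_path = "skin_cancer_bucket_prefix"
skin_cancer_files = "dataverse_files"
skin_cancer_files_ext = "dataverse_files.zip"


def dataset_key(prefix, file_with_ext):
    """Return the S3 object key of the dataset archive."""
    prefix = prefix.strip("/")
    return f"{prefix}/{file_with_ext}" if prefix else file_with_ext


def dataset_uri(bucket, prefix, file_with_ext):
    """Return the full s3:// URI of the dataset archive."""
    return f"s3://{bucket}/{dataset_key(prefix, file_with_ext)}"


def fetch_and_extract(s3_client, bucket, prefix, file_with_ext, out_dir, folder):
    """Download the archive into out_dir and extract it into out_dir/folder.

    s3_client is expected to behave like boto3.client("s3")."""
    local_zip = os.path.join(out_dir, file_with_ext)
    s3_client.download_file(bucket, dataset_key(prefix, file_with_ext), local_zip)
    target = os.path.join(out_dir, folder)
    with zipfile.ZipFile(local_zip) as archive:
        archive.extractall(target)
    return target
```

A call such as fetch_and_extract(boto3.client("s3"), skin_cancer_bucket, skin_cancer_bucket_path, skin_cancer_files_ext, out_dir, skin_cancer_files) would place the extracted files under out_dir/dataverse_files.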

Trigger a pipeline run

Pushing committed changes to the CodeCommit repository (done on the Studio source control tab) triggers a new pipeline run, because an Amazon EventBridge rule monitors the repository for commits. You can monitor the run by choosing the pipeline inside the SageMaker project. The following screenshot shows an example of a pipeline that ran successfully.

To commit the changes, navigate to the Git section on the left pane.

Stage all relevant changes. You don’t need to track the -checkpoint files; to ignore them, add an entry with *checkpoint.* to the .gitignore file.

Commit the changes by providing a summary as well as your name and an email address.

Push the changes.

Navigate back to the project and choose the Pipelines section.

If you choose the pipeline run in progress, the steps of the pipeline appear.

This allows you to monitor the step that is currently running. It may take a couple of minutes for the pipeline to appear, because the steps defined in the CI/CD file codebuild-buildspec.yml have to run successfully before the pipeline starts running. To check the status of these steps, you can use AWS CodeBuild. For more information, refer to AWS CodeBuild (AMS SSPS).
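To make the commit trigger described above concrete, the following sketch builds an event pattern of the kind an EventBridge rule uses to react to pushes to a CodeCommit branch, and checks sample events against it with a simplified matcher. The branch name main, the repository ARN, and the helper names are assumptions; the rule that the project template actually creates may differ, and real EventBridge matching supports more operators than this sketch.

```python
# Hypothetical sketch of an EventBridge-style pattern for pushes to a CodeCommit
# branch, plus a simplified matcher (exact-value lists and nested objects only).


def make_commit_pattern(repository_arn, branch="main"):
    return {
        "source": ["aws.codecommit"],
        "detail-type": ["CodeCommit Repository State Change"],
        "resources": [repository_arn],
        "detail": {
            "event": ["referenceCreated", "referenceUpdated"],
            "referenceType": ["branch"],
            "referenceName": [branch],
        },
    }


def matches(pattern, event):
    """Every pattern key must be present in the event. A list of values matches
    if the event value (or any element of an event list) is in it; nested
    dictionaries are matched recursively. Extra event fields are ignored."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            values = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in values):
                return False
    return True
```

In this model, a push to main produces a matching event and starts a run, while a push to any other branch does not.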

When the pipeline is complete, go back to the project page and choose the Model groups tab to inspect the metadata attached to the model artifacts.

If everything looks good, choose the Update Status tab and manually approve the model. The default ModelApprovalStatus is PendingManualApproval: if the model has greater than 60% accuracy, it’s added to the model registry, but it isn’t deployed until manual approval is complete.

Navigate to the Endpoints page on the SageMaker console, where you can see a staging endpoint being created. After a few minutes, the endpoint is listed with the InService status.

To deploy the endpoint into production, on the CodePipeline console, choose the sagemaker-<pipeline-name>-modeldeploy pipeline that is currently in progress.

At the end of the DeployStaging phase, you need to manually approve the deployment.

After this step, you can see the production endpoint being deployed on the SageMaker Endpoints page. After a while, the endpoint shows as InService.
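Parts of this review flow can also be scripted. The following sketch assumes a client that behaves like boto3.client("sagemaker") and shows three things: the 60% accuracy gate as plain Python, using a hypothetical evaluation-report layout; approving a model package through UpdateModelPackage, the programmatic counterpart of the Update Status tab; and polling DescribeEndpoint until an endpoint reaches InService. The report layout, helper names, and timeouts are hypothetical, and the manual approval of the production deployment in CodePipeline is not covered.

```python
import time

ACCURACY_THRESHOLD = 0.6  # models with more than 60% accuracy are registered


def should_register(evaluation_report, threshold=ACCURACY_THRESHOLD):
    """Plain-Python version of the registration condition. The report layout
    {"multiclass_classification_metrics": {"accuracy": {"value": ...}}}
    is a hypothetical assumption about the evaluation step's output."""
    accuracy = evaluation_report["multiclass_classification_metrics"]["accuracy"]["value"]
    return accuracy > threshold


def approve_model_package(sm_client, model_package_arn, note="Approved after review"):
    """Approve a registered model package (sm_client: boto3 SageMaker client)."""
    return sm_client.update_model_package(
        ModelPackageArn=model_package_arn,
        ModelApprovalStatus="Approved",
        ApprovalDescription=note,
    )


def wait_in_service(sm_client, endpoint_name, poll_seconds=30, timeout_seconds=3600):
    """Poll DescribeEndpoint until the endpoint is InService, failing fast on Failed."""
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        status = sm_client.describe_endpoint(EndpointName=endpoint_name)["EndpointStatus"]
        if status == "InService":
            return status
        if status == "Failed":
            raise RuntimeError(f"endpoint {endpoint_name} failed to deploy")
        time.sleep(poll_seconds)
    raise TimeoutError(f"endpoint {endpoint_name} not InService after {timeout_seconds}s")
```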

Clean up

You can easily clean up all the resources created by the SageMaker project.

In the navigation pane in Studio, choose SageMaker resources.

Choose Projects from the drop-down menu and choose your project.

On the Actions menu, choose Delete to delete all related resources.

Results and next steps

We successfully used Pipelines to create an end-to-end MLOps framework for skin lesion classification using a built-in model on the HAM10000 dataset. For the parameters provided in the repository, we obtained the following results on the test set.

| Metric | Value |
|---|---|
| Precision | 0.643 |
| Recall | 0.8 |
| F1 score | 0.713 |
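As a quick consistency check, the F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Plugging in the reported values gives 2 × 0.643 × 0.8 / 1.443 ≈ 0.713, which matches the reported F1 score. The results don’t state how the metrics are averaged across the seven classes, so treat this purely as an arithmetic check.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall: 2PR / (P + R)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Reported precision and recall from the results table.
reported_f1 = round(f1_score(0.643, 0.8), 3)
```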

You can further improve the model’s performance by tuning its hyperparameters, adding more transformations for data augmentation, or using other methods, such as Synthetic Minority Oversampling Technique (SMOTE) or Generative Adversarial Networks (GANs). You can also train your own model or algorithm by using built-in SageMaker Docker images or by adapting your own container to work on SageMaker. For further details, refer to Using Docker containers with SageMaker.
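SMOTE creates synthetic minority-class samples by interpolating between a sample and one of its k nearest neighbors within the same class. The following NumPy sketch is a minimal, brute-force version for feature vectors; for images, the rows would be flattened pixels or, more usefully, embeddings. It is an illustration only and not part of the post’s pipeline; libraries such as imbalanced-learn provide a production implementation (imblearn.over_sampling.SMOTE).

```python
import numpy as np


def smote(X, n_new, k=5, rng=None):
    """Minimal SMOTE: create n_new synthetic samples from minority-class rows X.

    Each synthetic sample lies on the segment between a random row of X and one
    of its k nearest neighbors (Euclidean distance, brute force).
    """
    rng = np.random.default_rng(rng)
    X = np.asarray(X, dtype=float)
    n = len(X)
    if n < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, n - 1)
    # Pairwise distances; a sample is never its own neighbor.
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)
    neighbors = np.argsort(dists, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)                     # samples to start from
    chosen = neighbors[base, rng.integers(0, k, size=n_new)]  # one neighbor per sample
    gap = rng.random((n_new, 1))                              # interpolation factor in [0, 1)
    return X[base] + gap * (X[chosen] - X[base])
```

Because every synthetic point is a convex combination of two real minority samples, SMOTE never leaves the range spanned by the minority class, which is also why it cannot add genuinely new visual variation the way augmentation or GANs can.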

You can also add features to your pipeline. If you want to include monitoring, choose the MLOps template for model building, training, deployment and monitoring when creating the SageMaker project; the resulting architecture has an additional monitoring step. If you have an existing third-party Git repository, you can use it by choosing the MLOps template for model building, training, and deployment with third-party Git repositories using Jenkins and providing information for both the model building and model deployment repositories. This lets you reuse existing code and saves the time and effort of integrating SageMaker with Git. However, this option requires an AWS CodeStar connection.

Conclusion

In this post, we showed how to create an end-to-end ML workflow using Studio and Pipelines. The workflow includes getting the dataset, storing it in a location accessible to the ML model, configuring a container image for preprocessing, and modifying the boilerplate code to accommodate that image. We then showed how to trigger the pipeline, the steps that the pipeline follows, and how they work. We also discussed how to monitor model performance and deploy the model to an endpoint.

We performed most of these tasks within Studio, which acts as an all-encompassing ML IDE, and accelerates the development and deployment of such models.

This solution isn’t limited to skin lesion classification. You can extend it to any classification or regression task using any of the SageMaker built-in algorithms or pre-trained models.

About the authors

Mariem Kthiri is an AI/ML consultant at AWS Professional Services Globals and is part of the Health Care and Life Science (HCLS) team. She is passionate about building ML solutions for various problems and always eager to jump on new opportunities and initiatives. She lives in Munich, Germany and is keen on traveling and discovering other parts of the world.

Yassine Zaafouri is an AI/ML consultant within Professional Services at AWS. He enables global enterprise customers to build and deploy AI/ML solutions in the cloud to overcome their business challenges. In his spare time, he enjoys playing and watching sports and traveling around the world.

Fotinos Kyriakides is an AI/ML Engineer within Professional Services in AWS. He is passionate about using technology to provide value to customers and achieve business outcomes. Based in London, in his spare time he enjoys running and exploring.

Anna Zapaishchykova was a ProServe Consultant in AI/ML and a member of Amazon Healthcare TFC. She is passionate about technology and the impact it can make on healthcare. Her background is in building MLOps and AI-powered solutions to customer problems in a variety of domains such as insurance, automotive, and healthcare.

AWS Machine Learning Blog