In the post, Validate database objects post-migration from Microsoft SQL Server to Amazon RDS for PostgreSQL and Amazon Aurora PostgreSQL, we explained the manual process to run relevant queries at source and target and match the output to identify the gaps post-migration. Because it’s a tedious job to validate all the available database objects, table level counts, data type mismatches, and other valuable checks post-migration, we automated this manual validation process using Python. We took advantage of its capabilities to query, validate, and generate a report with the object-level mismatches in the target database with regards to the source database.

In this post, we explain how to validate the database objects post-migration for multiple databases like SQL Server, Oracle, PostgreSQL, and MySQL databases using Python. This provides a much simpler way to assess the success of a database migration for different database types where migration summary report can be created by just providing the database credentials.

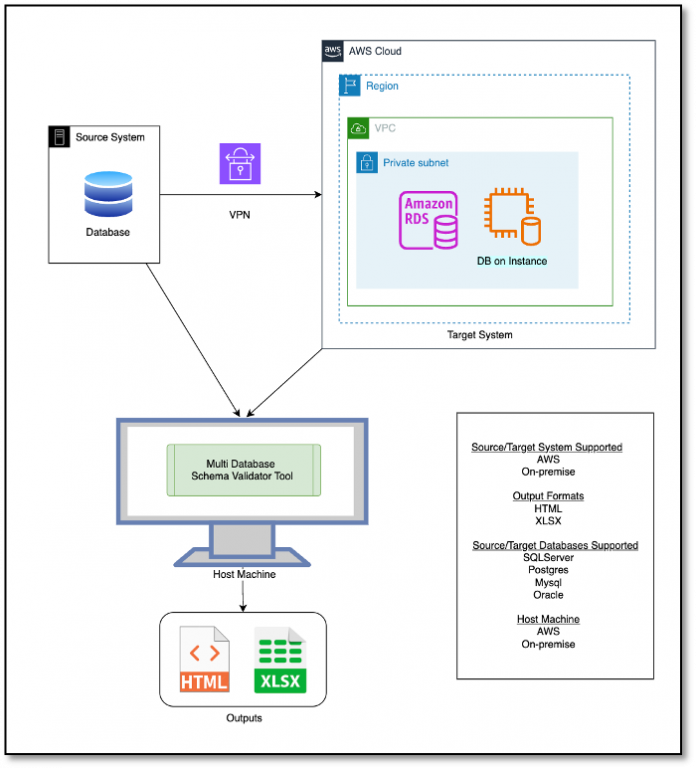

Solution Overview

We use Python for generating a report with a summary and detail-level schema validations for a given database, with encrypted connection and AWS Secrets Manager service to securely store the connection details (host, username, password, port and database) of source and target databases. The report is generated in user requested output format, such as an Excel file (xlsx) or HTML. This solution is currently supported on Windows operating system and SQL Server, Oracle, PostgreSQL, and MySQL databases.

Sample Flow to use Multi Database Schema Validator tool (MDSV) for on-premise to AWS cloud migration

Pre-requisites

Python 3.10.x is required. Download the latest version before setting up the report generation tool.

ODBC Driver for SQL Server

Connectivity to the source and target databases from the host machine.

SELECT permissions on metadata tables or views for the user executing queries on source and target databases.

AWS CLI configured in host machine.

Operating Systems

Currently supported on Windows and macOs.

Note: If you’re using macOS, you might encounter a few installation errors, review the following steps:

To fix the error – ERROR: Failed building wheel for pymssql, re-install pymssql using the following commands. pip3 uninstall pymssql

pip3 install pymssql

To fix the error – Error occurred while executing the MsSQL query: (pyodbc.Error) (‘01000’, “[01000] [unixODBC][Driver Manager]Can’t open lib ‘ODBC Driver 18 for SQL Server’ : file not found (0) (SQLDriverConnect)”) , install the ODBC driver for macOs.

Report generation tool setup

To set up the tool, complete the following steps:

Download the code repository to host machine.

Open the command prompt, navigate to the root directory, and run one of the following commands to check the version of Python installed on your host machine: python –version

python3 –version

Based on the Python version in your host machine, run one of the following commands with appropriate version requirements file: python -m pip install -r requirements3.10.txt

python3 -m pip install -r requirements3.10.txt

Setting up AWS Secret Manager secrets for Source and Target database details

The details for the databases such as host names, username, password and more need to be specified in separate AWS Secret Manager secrets which provide more security for your credentials.

The following steps explain how to create a secret:

Open the Secrets Manager Console

Ensure that you are in the correct Region

Choose Store a new secret

Select Other type of secret

Under Plaintext specify your database details in the following manner:

Add AWS Secret content {

“username”: “<username>”,

“password”: “<password>”,

“host”: “<database_hostname>”,

“port”: “<port>”,

“database_name”: “<database_name>”

}

Select the Encryption key to be used for secret encryption. Ensure that the role/user used on the machine running the tool has appropriate permissions to access the key and the secret. You may refer to this document.

Specify name and other details for the secret and other details and save the secret and note the name for it as it will be used in the next step.

Provide the details for the source, target, file-format, Secrets manager Region, and logging level in the configuration file “configurations.ini” in conf folder of tool source directory. Specify the details of the Secrets Manager secret for source and target, file format and logging details in the following format.

[source]

SOURCE_DATABASE_TYPE = mssql

SOURCE_SECRET_ID = <source_database_secrets_id>

[target]

TARGET_DATABASE_TYPE = postgres

TARGET_SECRET_ID = <target_database_secrets_id>

[region]

SECRET_REGION = <secrets_manager_secret_region>

[file-format]

FILE_FORMAT = html

[logging]

DEBUG_LEVEL = INFO

The allowed inputs for SOURCE_DATABASE_TYPE and TARGET_DATABASE_TYPE are as follows:

SQL Server = mssql

MySQL = mysql

Oracle = oracle

PostgreSQL = postgres

The allowed inputs for the file_format are xlsx and HTML (the default option is HTML).

You can also set these details using environment variables with the same names, which would override the details in this file. We recommend using environment variables for database passwords.

Generate Reports

To generate a database migration report, run one of the following commands :

python src/main.py

python3 src/main.py

Output

The tool generates the report under the output folder. The job run logs are in the logs folder.

In the following screenshots, we show the sample output for the migration_summary_mssql_to_postgres_20230404_064829.html.

The first screenshot is the database migration summary and table of contents.

The following screenshot shows the source and target database details.

The following screenshot is an overall summary.

The following screenshot shows a schema summary.

The following screenshot shows an object summary.

Cleanup

Delete the AWS Secret Managers secrets that are created in “Setting up AWS Secret Manager secrets for Source and Target database details” section, to store the database details.

Conclusion

Validating database objects is essential to ensure database migration accuracy and confirm that all objects have been migrated successfully with proper attributes and dependencies. Validating all the database objects helps you ensure target database integrity, thereby allowing your application to function seamlessly as before on the source.

In this post, we discussed automating the manual validation process of the database objects post-migration using Python and its libraries. The output includes a summary and detail-level mismatches for each schema with a match percentage for the given database.

Refer to the following related resources to learn more about database migration:

AWS Schema Conversion Tool

Database Migration – What Do You Need to Know Before You Start ?

Microsoft SQL Server To Amazon Aurora with PostgreSQL Compatibility Migration Playbook

CREATE PROCEDURE SQL command

CREATE FUNCTION SQL command

Indexes: The SQL Language

If you have any questions or suggestions about this post, leave a comment.

About the authors

Sai Krishna Namburu is a Lead Database Consultant with AWS Professional Services based out of Hyderabad, India. With good knowledge on relational databases adding hands-on in homogenous and heterogenous database migrations, he helps the customer’s migration to AWS cloud and their optimisations.

Pratik Sharma is a consultant with the AWS Professional Services based out of Pune, India. He likes combining code and AWS services in new ways to build the solutions and automations that empower customers in their cloud journey.

Ramesh Babu Donti is a Lead Database Consultant with AWS Professional Services based out of Hyderabad, India. Ramesh focuses on helping customers to build high-available, cost-effective database solutions and migrate their large scale databases to AWS.

Read MoreAWS Database Blog