As an organization, you run critical applications on AWS, and the infrastructure that runs those applications can be spread across different accounts and have complex relationships. When you want to understand the landscape of your existing setup, it can seem daunting to go through lists of resources and try to work out how they are connected. An easier way to visualize everything would help. As customers continue to migrate mission-critical workloads to AWS, their cloud assets continue to grow, and getting a holistic, contextual view of your cloud inventory is becoming critical to achieving operational excellence with your workloads.

A good understanding of, and visibility into, your cloud assets allows you to plan for, predict, and mitigate risks associated with your infrastructure. For example, you should have visibility into all the workloads running on a particular instance family. If you decide to migrate to a different instance family, a knowledge graph of all the workloads that would be affected can help you plan for this change and make the migration smoother. To address this growing need to manage and report on asset details, you can use AWS Config. AWS Config discovers AWS resources in your account and creates a map of relationships between them (as in the following screenshot).

In this post, we use Amazon Neptune with AWS Config to gain insight into our AWS landscape and map out relationships. We complement that with an open-source tool to visualize the data stored in Neptune.

Neptune is a fully managed graph database service that can store billions of relationships within highly connected datasets and query the graph with millisecond latency. AWS Config enables you to assess, audit, and evaluate the configurations of your AWS resources. With AWS Config, you can review changes in configurations and relationships between AWS resources, dive into detailed resource configuration histories, and determine your overall compliance against the configurations specified in your internal guidelines.

Prerequisites

Before you get started, you need to have AWS Config enabled in your account, with the AWS Config stream turned on so that whenever a new resource is created, you get a notification about the resource and its relationships.

Solution overview

The workflow includes the following steps:

Enable AWS Config in your AWS account and set up an Amazon Simple Storage Service (Amazon S3) bucket where AWS Config stores its configuration files.

Amazon S3 Batch Operations invokes an AWS Lambda function on the objects already in the S3 bucket to populate the Neptune graph with the existing AWS Config inventory and build out the relationship map. The same Lambda function is also triggered whenever a new AWS Config file is delivered to the S3 bucket, and it updates the Neptune database with the changes.

A user authenticates with Amazon Cognito and makes a call to an Amazon API Gateway endpoint.

The static website calls an AWS Lambda function, which is exposed to the internet through an Amazon API Gateway proxy.

The Lambda function queries the graph in Amazon Neptune and passes the data back to the app to render the visualization.

The resources referred to in this post, including the code samples and HTML files, are available in the amazon-neptune-aws-config-visualization GitHub repository.

Enable AWS Config in an AWS account

If you haven’t enabled AWS Config yet, you can set up AWS Config through the AWS Management Console.

If you have already enabled AWS Config, make note of the S3 bucket where all the configuration history and snapshot files are stored.

Set up a Neptune cluster

Your next step is to provision a new Neptune instance inside a VPC. For more information, see the Neptune user guide.

After you set up the cluster, note the cluster endpoint and port. You need them when inserting data into the cluster and when querying the endpoint to display the data with the open-source library VIS.js. VIS.js is a JavaScript library for visualizing graph data. It has different components, such as DataSet, Timeline, Graph2D, Graph3D, and Network, for displaying data in various ways.
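The following Python sketch shows how the endpoint and port are used. It assembles the WebSocket URL that Gremlin clients typically connect to, `wss://<endpoint>:<port>/gremlin`. The helper name and the example endpoint are hypothetical. 8182 is Neptune's default port.

```python
# Hypothetical helper: build the Gremlin WebSocket URL for a Neptune cluster.
# 8182 is Neptune's default port; pass a different value if you changed it.
DEFAULT_NEPTUNE_PORT = 8182


def gremlin_url(cluster_endpoint: str, port: int = DEFAULT_NEPTUNE_PORT) -> str:
    """Return the wss:// URL that Gremlin clients use to reach the cluster."""
    host = cluster_endpoint.strip().rstrip("/")
    if "://" in host:
        raise ValueError("pass the bare cluster endpoint, without a scheme")
    return f"wss://{host}:{port}/gremlin"


# Example endpoint (made up); copy yours from the Neptune console.
print(gremlin_url("my-cluster.cluster-abc123.us-east-1.neptune.amazonaws.com"))
```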

Configure a Lambda function to trigger when AWS Config delivers a file to an S3 bucket

After you set up the cluster, you can create a Lambda function to be invoked when AWS Config sends a file to Amazon S3.
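Before you build the package, it helps to see what the function has to do with each file. The following minimal Python sketch is not the repository's code. It assumes the delivered file is gzip-compressed JSON with a `configurationItems` list, which is the shape AWS Config uses for its history and snapshot files. The function names and the `(source, relationship name, target)` edge tuple are hypothetical choices made for illustration.

```python
import gzip
import json
import os
import tempfile


def load_config_items(path):
    """Read a gzip-compressed AWS Config file and return its configuration items."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        document = json.load(f)
    # Snapshot and history files list resources under "configurationItems".
    return document.get("configurationItems", [])


def to_vertices_and_edges(items):
    """Map resources to vertices (id -> type) and relationships to edges."""
    vertices, edges = {}, []
    for item in items:
        source = item["resourceId"]
        vertices[source] = item["resourceType"]
        for rel in item.get("relationships", []):
            # Some relationships identify the target by name instead of ID.
            target = rel.get("resourceId") or rel.get("resourceName")
            if not target:
                continue
            vertices.setdefault(target, rel["resourceType"])
            edges.append((source, rel.get("name", "related"), target))
    return vertices, edges


# Round-trip a tiny sample file (made-up IDs) through both functions.
sample = {"configurationItems": [{
    "resourceId": "i-0abc", "resourceType": "AWS::EC2::Instance",
    "relationships": [{"resourceId": "sg-0123",
                       "resourceType": "AWS::EC2::SecurityGroup",
                       "name": "Is associated with SecurityGroup"}]}]}
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.json.gz")
    with gzip.open(path, "wt", encoding="utf-8") as f:
        json.dump(sample, f)
    vertices, edges = to_vertices_and_edges(load_config_items(path))
print(vertices)
print(edges)
```

A real loader would then write these vertices and edges to Neptune, for example as Gremlin upserts, so that repeated deliveries of the same resource do not create duplicates.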

Create a directory with the name configparser_lambda and run the following commands to install the packages for our function to use:

Create a file configparser_lambdafunction.py in the directory and open it in a text editor.

Enter the following into the configparser_lambdafunction.py file:

Create a .zip archive of the dependencies:

Add your function code to the archive:
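To script the packaging instead, the following Python sketch builds an equivalent archive with the standard `zipfile` module. It assumes the dependencies were installed into a `package` directory and that the handler module is `configparser_lambdafunction.py`. The directory layout and the `lambda_handler` name in the demo are hypothetical.

```python
import os
import tempfile
import zipfile


def build_package(zip_path, dependency_dir, handler_file):
    """Zip the installed dependencies at the archive root, then add the handler module."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(dependency_dir):
            for name in files:
                full_path = os.path.join(root, name)
                # Store paths relative to dependency_dir so they sit at the archive root.
                zf.write(full_path, os.path.relpath(full_path, dependency_dir))
        # The handler module also goes at the root of the archive.
        zf.write(handler_file, os.path.basename(handler_file))
    with zipfile.ZipFile(zip_path) as zf:
        return sorted(zf.namelist())


# Demo with a throwaway layout: package/ holds dependencies, the handler sits beside it.
with tempfile.TemporaryDirectory() as tmp:
    deps = os.path.join(tmp, "package", "somelib")
    os.makedirs(deps)
    open(os.path.join(deps, "__init__.py"), "w").close()
    handler = os.path.join(tmp, "configparser_lambdafunction.py")
    with open(handler, "w") as f:
        f.write("def lambda_handler(event, context):\n    return None\n")
    names = build_package(os.path.join(tmp, "function.zip"),
                          os.path.join(tmp, "package"), handler)
print(names)
```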

When the Lambda deployment package (.zip file) is ready, create the Lambda function using the AWS Command Line Interface (AWS CLI). For instructions to install and configure the AWS CLI on your operating system, see Installing, updating, and uninstalling the AWS CLI.

After you install the AWS CLI, run aws configure to set your access key ID, secret access key, and default AWS Region.

Run the following commands to create a Lambda function within the same VPC as the Neptune cluster. (The Lambda function needs an AWS Identity and Access Management (IAM) execution role that allows it to create elastic network interfaces (ENIs) in the VPC so it can reach the Neptune instance.)

Attach the following policy to the role:
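The following Python sketch shows what such a policy can contain. It is an assumption, not necessarily the repository's policy. It generates a trust policy for the Lambda service and a permissions policy that covers three things: the ENI actions a VPC-attached function needs, CloudWatch Logs, and read access to the AWS Config bucket. The bucket name is a placeholder. The AWS managed policy AWSLambdaVPCAccessExecutionRole covers the ENI and logging actions. If you enable IAM database authentication on Neptune, the role also needs the corresponding neptune-db permissions.

```python
import json


def lambda_trust_policy():
    """Trust policy that lets the Lambda service assume the execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def config_loader_policy(config_bucket):
    """Permissions for a VPC-attached function that reads AWS Config files from S3."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Create, describe, and delete ENIs in the Neptune VPC.
                "Effect": "Allow",
                "Action": ["ec2:CreateNetworkInterface",
                           "ec2:DescribeNetworkInterfaces",
                           "ec2:DeleteNetworkInterface"],
                "Resource": "*",
            },
            {   # Write function logs to CloudWatch Logs.
                "Effect": "Allow",
                "Action": ["logs:CreateLogGroup", "logs:CreateLogStream",
                           "logs:PutLogEvents"],
                "Resource": "*",
            },
            {   # Read the delivered AWS Config files.
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{config_bucket}/*",
            },
        ],
    }


# "my-config-bucket" is a placeholder for your AWS Config bucket.
print(json.dumps(config_loader_policy("my-config-bucket"), indent=2))
```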

Create the Lambda function using the deployment package and IAM role created in previous steps. (Use subnet-ids from the VPC in which the Neptune cluster is provisioned.)

After you create the function, add the S3 bucket as the trigger for the Lambda function.

Use the S3 bucket where AWS Config delivers its files, and limit the trigger to objects with the .gz suffix.
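When Amazon S3 invokes the function, the event lists the delivered objects with URL-encoded keys. The following Python sketch shows one way a handler could read that event. It is an assumption, not the repository's implementation. It decodes each key and keeps only `.gz` objects, a defensive repeat of the trigger's suffix filter. The bucket name and keys in the sample are made up.

```python
from urllib.parse import unquote_plus


def gz_objects(event):
    """Return (bucket, key) pairs for the .gz objects in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Keys arrive URL-encoded in the event (for example, ":" becomes "%3A").
        key = unquote_plus(s3["object"]["key"])
        if key.endswith(".gz"):
            objects.append((s3["bucket"]["name"], key))
    return objects


# Trimmed-down sample event with a made-up bucket and keys.
sample_event = {"Records": [
    {"s3": {"bucket": {"name": "my-config-bucket"},
            "object": {"key": "AWSLogs/123456789012/Config/us-east-1/2020/10/20/"
                              "ConfigHistory/AWS%3A%3AEC2%3A%3AInstance_1.json.gz"}}},
    {"s3": {"bucket": {"name": "my-config-bucket"},
            "object": {"key": "AWSLogs/123456789012/Config/ConfigWritabilityCheckFile"}}},
]}
print(gz_objects(sample_event))
```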

Use Lambda with S3 Batch Operations

If AWS Config was already enabled before you set up this solution, you need to ingest all the existing resource data into the Neptune cluster that you created. To do so, set up S3 Batch Operations. S3 Batch Operations lets you invoke Lambda functions to perform custom actions on objects. In this use case, the function reads all the existing files in your AWS Config S3 bucket and inserts the data into the Neptune cluster. For more information, see Performing S3 Batch Operations.
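With invocation schema version 1.0, S3 Batch Operations passes the function a list of `tasks`, each with `taskId`, `s3Key`, and `s3BucketArn`. It expects one `results` entry per task, with a `resultCode` of `Succeeded`, `TemporaryFailure`, or `PermanentFailure`. The following Python sketch shows that contract. `process_object` is a hypothetical stand-in for the code that loads one file into Neptune. Skipping non-`.gz` keys is an assumption about what the inventory may list.

```python
from urllib.parse import unquote


def handle_batch_event(event, process_object):
    """Call process_object(bucket, key) per task and build the Batch Operations response."""
    results = []
    for task in event["tasks"]:
        bucket = task["s3BucketArn"].split(":::", 1)[1]  # arn:aws:s3:::bucket -> bucket
        key = unquote(task["s3Key"])
        try:
            if key.endswith(".gz"):
                process_object(bucket, key)
                code, message = "Succeeded", "loaded"
            else:
                code, message = "Succeeded", "skipped (not a .gz file)"
        except Exception as exc:  # report this task as failed instead of raising
            code, message = "PermanentFailure", str(exc)
        results.append({"taskId": task["taskId"], "resultCode": code,
                        "resultString": message})
    return {
        "invocationSchemaVersion": "1.0",
        "treatMissingKeysAs": "PermanentFailure",
        "invocationId": event["invocationId"],
        "results": results,
    }


# Sample invocation with made-up IDs; the loader just records what it was asked to load.
loaded = []
sample = {
    "invocationSchemaVersion": "1.0",
    "invocationId": "example-invocation",
    "job": {"id": "example-job"},
    "tasks": [{"taskId": "t1", "s3Key": "AWSLogs/snap.json.gz",
               "s3VersionId": None,
               "s3BucketArn": "arn:aws:s3:::my-config-bucket"}],
}
response = handle_batch_event(sample, lambda b, k: loaded.append((b, k)))
print(response["results"])
```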

You need to specify a manifest for the S3 Batch Operations job. In this setup, the manifest is an Amazon S3 inventory report, so you must enable an inventory report for the bucket where AWS Config sends its files. For instructions, see Configuring Amazon S3 inventory. Make sure to use the CSV output format for the inventory.

After the inventory is set up and the first report has been delivered (which can take up to 48 hours), you can create an S3 Batch Operations job to invoke the Lambda function.

On the S3 Batch Operations console, choose Create job.

For Region, choose your Region.

For Manifest format, choose S3 inventory report.

For Manifest object, enter the location of the inventory report's manifest.json file.

Choose Next.

In the Choose operation section, for Operation type, select Invoke AWS Lambda function.

For Invoke Lambda function, select Choose from function in your account.

For Lambda function, choose the function you created.

Choose Next.

In the Configure additional options section, for Description, enter a description of your job.

For Priority, choose your priority.

For Completion report, select Generate completion report.

Select All tasks.

Enter the bucket for the report.

Under Permissions, select Choose from existing IAM roles.

Choose the IAM role that grants the necessary permissions (a role policy and trust policy that you can use are also displayed).

Choose Next.

Review your job details and choose Create job.

The job enters the Preparing state. S3 Batch Operations checks the manifest and runs other verifications, and then the job enters the Awaiting your confirmation state. Select the job and choose Confirm and run to run it.

Create a Lambda function to access data in the Neptune cluster for visualization

After the data is loaded into Neptune, you need to create another Lambda function to access the data and expose it through a RESTful interface with API Gateway.

Run the following commands:

Save the file as visualizeneptune.js in the preceding directory.

Create a file package.json in the directory where you saved the preceding file and add the following dependencies, which are required by the Lambda function in the file:

Run the following in the directory that you saved the file in:

When the Lambda deployment package (.zip file) is ready, we can create the Lambda function using the AWS CLI.

Run the following commands to create a Lambda function within the same VPC as the Neptune cluster. (We create the Lambda function using the deployment package and IAM role created earlier, and use subnet-ids from the VPC in which the Neptune cluster is provisioned.)

We recommend you go through the Lambda function source code at this point to understand how to query data using Gremlin APIs and how to parse and reformat the data to send to clients.
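The repository's function is written in JavaScript. The following Python sketch only illustrates the data shape. It reshapes resources and relationships into the `{nodes, edges}` structure that the VIS.js Network component renders, with nodes that carry `id` and `label` and edges that carry `from` and `to`. The input format and the use of `group` are assumptions.

```python
import json


def to_vis_network(vertices, edges):
    """Reshape resources and relationships into VIS.js Network's {nodes, edges} format."""
    nodes = [
        # Show the short type plus the ID; "group" lets VIS.js style nodes by type.
        {"id": vid, "label": f"{vtype.split('::')[-1]}\n{vid}", "group": vtype}
        for vid, vtype in sorted(vertices.items())
    ]
    links = [{"from": src, "to": dst, "label": name} for src, name, dst in edges]
    return {"nodes": nodes, "edges": links}


payload = to_vis_network(
    {"i-0abc": "AWS::EC2::Instance", "sg-0123": "AWS::EC2::SecurityGroup"},
    [("i-0abc", "Is associated with SecurityGroup", "sg-0123")],
)
print(json.dumps(payload, indent=2))
```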

Create and configure API Gateway with a proxy API

We expose the Lambda function created in the earlier step through an API Gateway proxy API. For more information, see Set up Lambda proxy integrations in API Gateway.
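With a Lambda proxy integration, API Gateway passes the whole request to the function. It expects back a response object with `statusCode`, `headers`, and a string `body`. The following Python sketch shows that contract. The `id` query parameter and the response payload are hypothetical, and the wildcard CORS header is a simplification.

```python
import json

# Wildcard CORS keeps the sketch short; restrict the origin to your site in practice.
CORS_HEADERS = {"Access-Control-Allow-Origin": "*",
                "Content-Type": "application/json"}


def proxy_response(status_code, payload):
    """Build the response shape that a Lambda proxy integration expects."""
    return {"statusCode": status_code, "headers": dict(CORS_HEADERS),
            "body": json.dumps(payload)}


def lambda_handler(event, context):
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    resource_id = params.get("id")  # hypothetical parameter name
    if not resource_id:
        return proxy_response(400, {"error": "missing 'id' query parameter"})
    # A real function would run a Gremlin query against Neptune here.
    return proxy_response(200, {"id": resource_id, "neighbors": []})


print(lambda_handler({"queryStringParameters": {"id": "i-0abc"}}, None))
```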

Create the RESTful API using the following command from the AWS CLI:

Note the value of the id field from the earlier output and use it as the <rest-api-id> value in the following code:

Note the value of the id field from the earlier output and use it as the <parent-id> value in the following command, which creates a resource under the root structure of the API:

Note the value of the id field from the output and use it as the <resource-id> in the following command:

So far, we created an API, API resource, and methods for that resource (GET, PUT, POST, DELETE, or ANY for all methods). We now create the API method integration that identifies the Lambda function for which this resource acts as a proxy.
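For a Lambda integration, the integration URI follows a fixed pattern that embeds the function ARN. A proxy integration uses the type AWS_PROXY with POST as the integration HTTP method. The following Python sketch builds that URI from its parts. The Region, account ID, and function name are placeholders.

```python
def lambda_function_arn(region, account_id, function_name):
    """ARN of a Lambda function (unqualified, so it targets $LATEST)."""
    return f"arn:aws:lambda:{region}:{account_id}:function:{function_name}"


def lambda_integration_uri(region, function_arn):
    """URI that API Gateway uses to invoke a Lambda function."""
    return (f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/"
            f"functions/{function_arn}/invocations")


# Placeholder account ID and function name.
fn_arn = lambda_function_arn("us-east-1", "123456789012", "visualizeneptune")
print(lambda_integration_uri("us-east-1", fn_arn))
```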

Use the appropriate values obtained from the previous commands in the following code:

Deploy the API using the following command:

For API Gateway to invoke the Lambda function, we either provide an execution role to the API integration or explicitly add a permission to the Lambda function that allows the API to invoke it. This permission is also reflected on the Lambda console.
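With the permission approach, you run `aws lambda add-permission` with `apigateway.amazonaws.com` as the principal. Its `--source-arn` value limits which API, stage, method, and path can invoke the function. The following Python sketch assembles such an ARN. By default it uses wildcards, which allow any stage, method, and path of the API. The IDs are placeholders.

```python
def execute_api_source_arn(region, account_id, rest_api_id,
                           stage="*", method="*", resource_path="*"):
    """Source ARN for lambda add-permission; "*" matches any stage, method, or path."""
    return (f"arn:aws:execute-api:{region}:{account_id}:{rest_api_id}/"
            f"{stage}/{method}/{resource_path}")


print(execute_api_source_arn("us-east-1", "123456789012", "a1b2c3d4e5"))
```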

Run the following command to grant API Gateway permission to invoke the Lambda function:

We have now created an API Gateway proxy for the Lambda function. To configure authentication and authorization with the API Gateway, we can use Amazon Cognito as described in the documentation.

Configure an S3 bucket to host a static website and upload the HTML file

Now that we have all the backend infrastructure ready to handle the API requests getting data from Neptune, let’s create an S3 bucket to host a static website.

Run the following commands to create an S3 bucket as a static website and upload visualize-graph.html into it:

Upload the HTML file to Amazon S3

The main.js file has to be updated to reflect the API Gateway endpoint that we created in the previous steps.

Run the following commands to replace the value of PROXY_API_URL with the API Gateway endpoint. You can obtain the value of the URL on the API Gateway console—navigate to the API and find it listed as Invoke URL in the Stages section. You can also construct this URL using the following template.
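The default invoke URL of a deployed REST API combines the API ID, Region, and stage name. The following Python sketch builds it. The values shown are placeholders.

```python
def invoke_url(rest_api_id, region, stage):
    """Default invoke URL of a deployed REST API stage."""
    return f"https://{rest_api_id}.execute-api.{region}.amazonaws.com/{stage}"


print(invoke_url("a1b2c3d4e5", "us-east-1", "prod"))
```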

When you run the following commands, make sure to escape special characters in the URL, such as forward slashes.
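As an alternative to `sed`, which needs the slashes escaped, the following Python sketch substitutes the URL with a literal string replacement, so no escaping is needed. It assumes the file contains `PROXY_API_URL` as a literal placeholder token, so check the file before you rely on it. The sample HTML in the demo is made up.

```python
import os
import tempfile


def set_proxy_url(html_path, api_url, placeholder="PROXY_API_URL"):
    """Replace the placeholder token in the file with the API Gateway URL."""
    with open(html_path, encoding="utf-8") as f:
        text = f.read()
    if placeholder not in text:
        raise ValueError(f"{placeholder} not found in {html_path}")
    # str.replace is a literal substitution, so slashes in the URL need no escaping.
    with open(html_path, "w", encoding="utf-8") as f:
        f.write(text.replace(placeholder, api_url))


with tempfile.TemporaryDirectory() as tmp:
    page = os.path.join(tmp, "visualize-graph.html")
    with open(page, "w", encoding="utf-8") as f:
        f.write('<script>const apiUrl = "PROXY_API_URL";</script>\n')
    set_proxy_url(page, "https://a1b2c3d4e5.execute-api.us-east-1.amazonaws.com/prod")
    with open(page, encoding="utf-8") as f:
        result = f.read()
print(result)
```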

For Linux, use the following code:

The following is an example of the completed code:

For macOS, use the following code:

The following is an example of the completed code:

After you replace the value of PROXY_API_URL in the visualize-graph.html file, upload the file to Amazon S3 using the following command:

You’re all set! You can visualize the graph data through this application from the following URL:

Visualize the resources on the dashboard

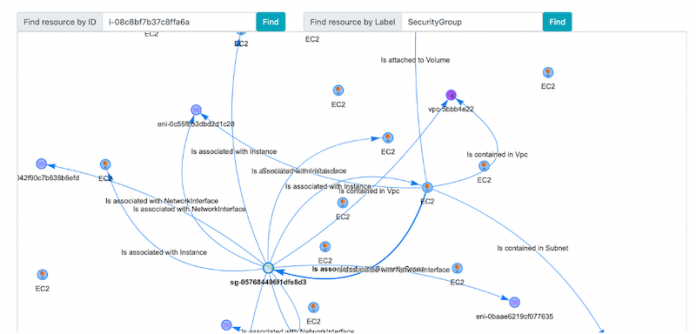

You can now search for resources by a specific ID in the account or search by label. For more information about the resources that are supported and indexed by AWS Config, see Supported Resource Types.

Landing page

The landing page lets you search by resource ID or by resource label.

Search by ID

You can enter the ID of a specific resource in the Find Resource by ID field and choose Find to populate the dashboard with that resource.

To find resources related to this instance (such as VPC, subnet, or security groups), choose the resource. A visualization appears that shows all the related resources based on the AWS Config relationships.

You can see more relationships by choosing a specific resource and pulling up its relationships.
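Conceptually, expanding a resource's relationships is a breadth-first walk over the graph, similar to repeatedly following a Gremlin `both()` step. The following in-memory Python sketch illustrates only the idea. It is not how the application queries Neptune, and the edge tuples and resource IDs are hypothetical.

```python
from collections import deque


def related_resources(edges, start, max_hops=1):
    """Return {resource_id: hop_count} for resources within max_hops of start."""
    # Treat relationships as undirected, like following both() in Gremlin.
    adjacency = {}
    for source, _name, target in edges:
        adjacency.setdefault(source, set()).add(target)
        adjacency.setdefault(target, set()).add(source)
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # do not expand past the hop limit
        for neighbor in sorted(adjacency.get(node, ())):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    seen.pop(start)
    return seen


edges = [("i-1", "Is associated with SecurityGroup", "sg-1"),
         ("i-1", "Is contained in Subnet", "subnet-1"),
         ("subnet-1", "Is contained in Vpc", "vpc-1")]
print(related_resources(edges, "i-1"))
print(related_resources(edges, "i-1", max_hops=2))
```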

Search by label

In certain use cases, you might want to view all resources of a given type without looking up individual resources. For example, you may want to see all the Amazon Elastic Compute Cloud (Amazon EC2) instances without searching for a specific instance. To do so, search by label. Neptune stores the label of a resource based on its resource type value in AWS Config. For example, for EC2 resources, you can choose the value Instance (AWS::EC2::Instance), SecurityGroup (AWS::EC2::SecurityGroup), or NetworkInterface (AWS::EC2::NetworkInterface).

In this case, you can search for all the SecurityGroup values, and the dashboard is populated with all the security groups that exist in your account.

You can then drill down into the specific security group and start mapping out the relationships (for example, which EC2 instance it’s attached to, if any).
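The label examples for EC2 resources suggest that the label is the last segment of the AWS Config resource type. Assuming that mapping, the following Python sketch derives labels and groups resources by label, which is roughly what a search by label returns. The function names and IDs are hypothetical.

```python
from collections import defaultdict


def label_for(resource_type):
    """Map an AWS Config resource type such as AWS::EC2::Instance to its last segment."""
    parts = resource_type.split("::")
    if len(parts) != 3 or parts[0] != "AWS":
        raise ValueError(f"unexpected resource type: {resource_type}")
    return parts[2]


def group_by_label(vertices):
    """Group resource IDs by label (vertices maps resource ID -> resource type)."""
    groups = defaultdict(list)
    for resource_id, resource_type in sorted(vertices.items()):
        groups[label_for(resource_type)].append(resource_id)
    return dict(groups)


print(group_by_label({"sg-0123": "AWS::EC2::SecurityGroup",
                      "sg-0456": "AWS::EC2::SecurityGroup",
                      "i-0abc": "AWS::EC2::Instance"}))
```

Under this assumed mapping, different services can share a label. For example, AWS::ElasticLoadBalancing::LoadBalancer and AWS::ElasticLoadBalancingV2::LoadBalancer would both map to LoadBalancer.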

Conclusion

In this post, you saw how you can use Neptune to visualize all the resources in your AWS account. This can help you better understand all the existing relationships through easy-to-use visualizations.

Amazon Neptune now supports graph visualization in the Neptune workbench. In our next blog post, we will demonstrate how you can use the Neptune workbench to visualize your data.

For more details on the Neptune workbench, see this post.

About the author

Rohan Raizada is a Solutions Architect for Amazon Web Services. He works with enterprises of all sizes on their cloud adoption, helping them build scalable and secure solutions on AWS. In his free time, he likes to spend time with family and go cycling outdoors.

Amey Dhavle is a Senior Technical Account Manager at AWS. He helps customers build solutions to solve business problems, evangelize new technologies, and adopt AWS services. Outside of work, he enjoys watching cricket and diving deep on advancements in automotive technologies. You can find him on Twitter at @amdhavle.
