In some cases, Database Administrators (DBAs) need to run post-database creation steps such as running SQL statements for creating users and database objects, resetting passwords, or standardizing Oracle database builds. This is mainly done during the database creation phase of new application deployment or during database refreshes that occur in non-production environments. AWS CloudFormation gives you an easy way to model a collection of related AWS and third-party resources, provision them quickly and consistently, and manage them throughout their lifecycles, by treating infrastructure as code. As of this writing, AWS CloudFormation doesn’t directly support running SQL scripts right after creating an Amazon RDS for Oracle database, but you can solve this problem two different ways:

Use an AWS CloudFormation custom resource to call an AWS Lambda function to run post-database creation steps on the RDS for Oracle DB instance right after creation or restoration from a snapshot

Use Amazon Elastic Compute Cloud (Amazon EC2) as a temporary instance to connect with the RDS for Oracle database to run post-database creation steps on the RDS for Oracle DB instance right after creation or restoration from a snapshot

In this post, we will demonstrate couple of methods mentioned above when using Amazon RDS for Oracle for our solution, but you can use this solution for other Amazon RDS engine types (MS SQL Server, MySQL, PostgreSQL and Amazon Aurora) by using their respective client tools or drivers.

Solution overview

In this section, we discuss the two solution options in more detail.

Option 1: Connect to your DB instance with a Lambda function

You can use different options such as JDBC and ODBC drivers or Oracle Client libraries connect to your RDS for Oracle DB instance from a Lambda function and run SQL scripts. The AWS CloudFormation custom resource can call a Lambda function, which runs SQL scripts as soon as the RDS for Oracle database creation is complete. To connect with an RDS for Oracle DB instance from Lambda, you can create a Lambda function using a Lambda layer, which has the following executables:

Oracle client tools such as SQL*Plus and Oracle client libraries

The cx_Oracle Python module that enables access to Oracle Database

You can prepare a .zip file or package for your Lambda layer and function after you determine which method you want to use to connect with the RDS for Oracle database.

To implement this solution, you create the following:

A .zip or archive file including the Oracle client libraries, cx_Oracle, libaio package, and SQL scripts

An Amazon Simple Storage Service (Amazon S3) gateway endpoint in the Amazon Virtual Private Cloud (Amazon VPC) where you deploy the Lambda function and RDS for Oracle DB instance

An S3 bucket with the .zip file loaded

A security group and subnets to use in the RDS for Oracle database and Lambda function, which enable the needed port to connect to the database using SQL*Plus or cx_Oracle from the function

A Lambda execution role

A Lambda layer using the .zip package created

A Lambda function using the Lambda layer and execution role

An RDS for Oracle database or database restore from a snapshot

An AWS CloudFormation custom resource to call the Lambda function to run post-database creation scripts after the database build is complete

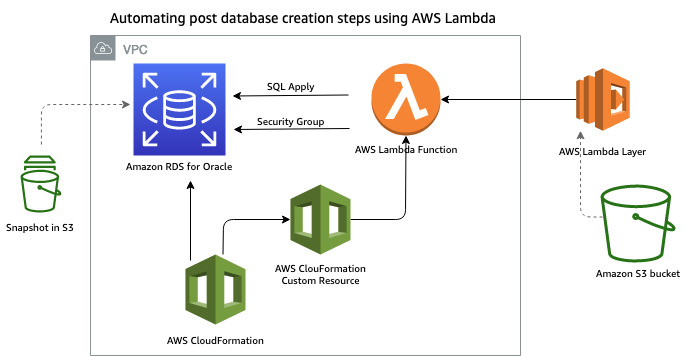

The following diagram illustrates this architecture.

Option 2: Connect to your DB instance with an EC2 instance

Use this option if you have post-database creation steps, scripts, or restore processes that need to run more than the currently allowed Lambda quota limits.

For this solution, you create the following:

SQL scripts to run after database deployment

A GitHub repo or S3 bucket to store the SQL scripts

Security groups for Amazon EC2 and the RDS for Oracle DB instance and an inbound rule to allow Amazon EC2 to connect with the RDS for Oracle DB instance

A CloudFormation template to create or restore the RDS for Oracle DB instance

A CloudFormation template to create an EC2 instance (Free Tier)

Amazon EC2 user data to install Oracle client tools, download SQL scripts, and run the scripts by connecting to the RDS for Oracle DB instance

The following diagram illustrates this architecture.

The CloudFormation templates and the sample code provided in this post are for evaluation purposes only. Don’t use these for production without thorough testing.

Connect to your DB instance with a Lambda function (Option 1)

To perform this solution, first complete the following:

Create and activate an AWS account.

Create a new VPC or use existing an VPC with the required subnets in appropriate Availability Zones.

Create a new EC2 instance or use an existing instance using an Amazon Linux image.

Create a new S3 bucket or use an existing bucket ensure the S3 bucket can be accessed by the Lambda function when creating the Lambda layer.

Configure a gateway VPC endpoint for amazonaws.<region>.s3 with private route tables that include the required subnets and Amazon VPC ID.

To deploy the Lambda function using cx_Oracle and Oracle client tools to run the post-database creation scripts on the RDS for Oracle database, complete the steps in the following sections.

Create the .zip file for the Lambda function

First, let’s download the files to create the.zip file for Lambda layer.

Download the appropriate Linux and Python version of the cx_Oracle package.

The Lambda runtime environment is based on the Linux and Python version. For example, on the cx_Oracle download page, manylinux states the Linux version and cp37 denotes the Python 3.7 environment if you download a cx_Oracle package (version 8.1.0) named cx_Oracle-8.1.0-cp37-cp37m-manylinux1_x86_64.whl. You use the same version of Python to write scripts and the same Python version runtime in the Lambda function. You can also download older versions of cx_Oracle.

Download the Oracle instant client and tools in .zip format for your Linux-based operating system.

You must download the instant client Basic Light package, which includes libraries for cx_Oracle and SQL*Plus.

You may download the SQL*Plus package if you want to use the SQL*Plus utility to run SQL scripts against RDS for Oracle Instance.

You may optionally download the instant client tools package, which includes utilities like SQL*Loader and Data Pump, if you want to use them to load data into Oracle database.

Download the appropriate Libaio package.

You can choose the latest version under the PCLinuxOS category. For this post, we use a PCLinuxOS version because it needs to be extracted in Amazon Linux while packaging the libraries for the Lambda layer.

The following screenshot shows the page with the with binary package download link after you choose the .rpm link under PCLinux.

Following code will download the Libaio Package,

Next, let’s create the .zip file from the files we downloaded. You need a Linux environment to build the .zip file files needed for Lambda. You can create an EC2 instance using an Amazon Linux image.

Copy the downloaded files such as cx_Oracle, the Oracle instant client, SQL*Plus, and the tools and Libaio packages to the Amazon Linux running on Amazon EC2.

SSH into the EC2 instance to perform the following steps to create the .zip file for the Lambda function.

Determine the version and install Python 3.7 (because we downloaded cx_Oracle with cp37 version in this walkthrough):

Install pip3 (Amazon Linux 2 already has pip3 installed and you can update or install it if pip3 doesn’t exist):

Install the virtual environment and activate it:

The preceding command creates a directory called awslambda under /tmp or the present working directory.

Extract the cx_Oracle package and the copy necessary files from it to the /tmp/archive_oracle_lambda directory:

The following screenshot shows the files being copied into our /tmp/archive_aws_lambda folder.

Extract the Oracle client and SQL*Plus package:

Optionally, you can extract the tools package, which contains utilities like SQL*Loader and Data Pump, if you want to use them to load data into Oracle database:

Copy all the extracted files from the extracted folder (/tmp/instantclient_21_1 in this case) to the archive_aws_lambda directory:

Optionally, you can add tnsnames.ora file and entries in the /tmp/archive_aws_lambda/network/admin/ directory if your Python script wants to use tnsnames to resolve the database connection when run from Lambda. This might be helpful if you are connecting to an existing RDS for Oracle instance.

Extract the Libaio package and copy the necessary files from it to the /tmp/archive_aws_lambda directory:

Write your SQL scripts

You can write your own SQL scripts that run the post-database creation steps in the RDS for Oracle database from Lambda. You can also review the cx_Oracle documentation and sample scripts to write a Python script to run SQLs against the RDS for Oracle database as per your requirement. You can also use SQL*Plus , expdp , impdp, and SQL*Loader utilities in your Python script as needed. You need to include the related SQL script files in the /tmp/archive_aws_lambda/ directory to have your main Python script call them during the run. In this post, we created a sample SQL script called SqlScript.sql and included it in this package:

Make sure your Lambda function doesn’t exceed the maximum allowed Lambda quotas. The size of the .zip file and the unzipped file must be smaller than 50 MB and 250 MB, respectively. You can create multiple Lambda functions for each post-creation script or task and run them separately from the CloudFormation template if you exceed the function limit.

Package files for the Lambda function

Now you have all the required libraries, executables, and corresponding SQL scripts for Lambda to connect with the RDS for Oracle database and run the required post-creation steps. Make a .zip file of the /tmp/archive_aws_lambda/ directory and upload that to the Amazon S3 location. You must upload it to Amazon S3 because the file size exceeds the size limit defined by Lambda for direct upload. See the following code:

Make sure you follow the preceding commands when you make a .zip file because the directory structure inside the .zip file is very important during the Lambda extraction process, and the environment variables in the function should match with that path. In this example, we don’t make any subdirectories to separate the libraries, executables, or SQL files, and all files are extracted into /opt by default. Also, we’re inside the /tmp/archive_aws_lambda/ directory and use * while making the .zip file.

Create an S3 bucket and upload the .zip file

Now we have the /tmp/awslambdapkg_rds_oracle_post.zip file, which we upload to the S3 bucket. Make sure the bucket is located in the same Region as your Lambda function and RDS for Oracle DB instance. If you don’t have a bucket, you can create one.

After you upload the file to Amazon S3, note the path of that file. You can choose the file name and then choose Copy path.

Use AWS CloudFormation to create the Lambda layer, security group, IAM role, and Lambda function

Now that you have the package to use in the Lambda layer, you can use to create the CloudFormation stack using the sample template from here. This will create a Lambda layer, security group, AWS Identity and Access Management (IAM) role, and Lambda function, including a sample Python code to run the SQL scripts.

The following screenshot shows the possible stack parameters to input.

The following screenshot shows the resources that are created.

You can see the exported Lambda function name (ARN) and security group ID created for the Lambda function on the Outputs tab of the CloudFormation stack.

We use these exported names in the next step in our RDS for Oracle DB instance creation template.

Use AWS CloudFormation to create the RDS for Oracle instance and AWS CloudFormation custom resources

Now that Lambda function is set up and ready for use, we can create an RDS for Oracle DB instance and use a custom Lambda resource to call the Lambda function we created. You can Launch the Stack using by using the sample CloudFormation template from here to create these resources.

Make sure to follow best practices such as enabling delete protection and storing passwords in AWS Secret Manager when you create an RDS for Oracle database.

The following screenshots show the possible parameters you can input for the CloudFormation template.

The following screenshot shows the resources we created, including the Amazon RDS subnet, security group, database secret, RDS for Oracle instance, and Lambda custom resources.

When the stack is complete, you see an output message to confirm that the post-database creation scripts ran after creating the RDS for Oracle DB instance.

You can also view the Amazon CloudWatch logs of the Lambda function to review the output of the SQL scripts.

Connect to your DB instance with an EC2 instance (Option 2)

Traditionally, database administrators and DevOps teams use a client computer on premises or an EC2 instance in AWS to connect to managed database services like Amazon RDS for Oracle to run SQL scripts. This approach brings a similar experience by launching an EC2 instance along with Amazon RDS for Oracle. As part of this CloudFormation template, we create an EC2 instance using an Amazon Linux image (we recommend creating it in a private subnet) to run the post-database creation or restore scripts on the RDS for Oracle database automatically. You can also connect to this EC2 instance to perform additional checks or run additional scripts.

After the CloudFormation stack is complete, the following steps are performed on the EC2 instance automatically through the Amazon EC2 user data functionality:

Update yum.

Download SQL scripts from the GitHub repo.

Download the Oracle client libraries of the Linux version.

Install the Oracle SQL*Plus client and a few other utilities. You also can use the Data Pump and SQL*Loader utilities in your user data script as needed.

Update the environment variables.

Create shell scripts under the /home/ec2-user directory to run the downloaded SQL scripts.

Run the shell script with the SQL scripts by connecting to the RDS for Oracle DB instance.

When you’re done running your script, make sure to stop the EC2 instance to avoid any additional charges.

Use AWS CloudFormation to create the security group, RDS for Oracle DB instance, and EC2 instance

To create a security group, RDS for Oracle DB instance, and EC2 instance, including a sample user data code to run the post-database creation SQL scripts, you can to create the stack by using sample CloudFormation template from here

Make sure to follow best practices such as enabling delete protection and storing passwords in AWS Secrets Manager when you create the RDS for Oracle DB instance.

The following screenshots show the possible parameters you can input for the CloudFormation template.

The following screenshot shows the resources you created, including the security group, EC2 instance, subnet group DBSubnetGroup, RDS for Oracle DB instance, and Amazon RDS primary user account secret in Secrets Manager.

When the stack is complete, you can see an output message in the stack to confirm that the post-database creation scripts ran.

You can also view the logs by logging in to the Amazon EC2 instance created by this stack to review the output of the SQL scripts.

Additional customization for Option 2

You can make the following customizations to this solution:

EC2 instance AMI ID – Depending on the Region you create the EC2 instance in, you have to change the AMI of the EC2 instance. Validate the available Amazon Linux image based on the Region you use and provide the appropriate AMI ID.

SQL script – You can have your SQL script in GitHub or an S3 bucket and provide the appropriate URL in the template to download them. You can do this by modifying the UserData section of the EC2 instance. Make sure the GitHub URL or the Amazon S3 URL where you have the SQL script is accessible by the EC2 instance when the EC2 instance is created.

New database creation vs. restoration from snapshot – The CloudFormation template is written to create a new RDS for Oracle. You can modify the template to include a snapshot identifier parameter to restore the RDS for Oracle database from an existing snapshot. This may be helpful when you want to refresh the environments from a snapshot copy.

Conclusion

In this post, we demonstrated how to automate post-database creation steps using AWS CloudFormation, Amazon EC2, Lambda, and Python. These solutions are helpful to any RDS DB instance creation that requires you to run custom scripts as part of the creation process. Try out the solution for yourself, and leave your thoughts in the comments.

About the Authors

Siva Subramaniam is a Database Consultant with AWS Professional Services, US West Application Practice, and has two decades of IT experience in technical leadership and application and database design. He also has hands-on experience as an infrastructure and application support DBA, including database architecture, design, build, data modeling, infrastructure automation, migrations and upgrades, performance tuning, monitoring, backup and recovery, and disaster recovery. Siva holds a master’s degree in Computer Applications and is a certified professional in various database and cloud technologies including AWS, Azure, OCI, Oracle, MySQL, Cassandra, and VMware. Siva enjoys playing cricket with his friends and son, doing farming and agriculture-related activities, and learning to cook from his wife.

Arumugam Petchimuthu is a Senior Solutions Architect with AWS World Wide Specialist Organization, specializing in databases. Aru has 25 years of IT experience in technical leadership, application and database design, DevOps, infrastructure, and application support, including database administration, database and application architecture, building, data modeling, data migrations, and performance tuning. Aru holds a master’s degree in Computer and Software Applications and is a certified professional in relational and NoSQL database and cloud technologies, including AWS, Oracle, and Cassandra. Aru enjoys listening to music, outdoor photography, making documentaries, volunteering for community service activities, and cooking traditional food.

Read MoreAWS Database Blog