Applications often use an array of data to represent a business object containing multiple values. Many customers choose Amazon Relational Database Service (Amazon RDS) for PostgreSQL or Amazon Aurora PostgreSQL-Compatible Edition to store their array of business data.

There are several architectures available for saving array values to a PostgreSQL database. You need to consider several factors when it comes to selecting which architecture to apply:

How many tables do you need to create in PostgreSQL? What are the table definitions and database constraints?

How does the selected architecture impact your application design?

How do you migrate records from the PostgreSQL tables to a target database if your business requires to do so?

In this post, we discuss four architectures and their effects.

Overview of architectures

When creating a database schema to represent an array business object, you have the following architecture options:

Save the array business object as a delimited string

Create primary-detail relational tables

Use the PostgreSQL JSON data type

Use the PostgreSQL array data type

In this post, we describe the table schema required to support each option. We also discuss how each option may affect the complexity and performance of your applications. It provides you with guidance to select the right architecture based on your business requirements.

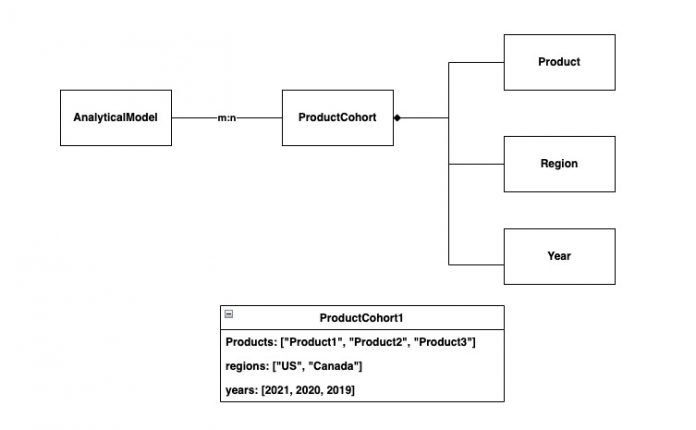

The following diagram shows a use case from an imaginary distributor. The distributor wants to create analytical models based on the historical sales data of product cohorts. A product cohort represents multiple products sold in multiple regions over multiple years. The example ProductCohort1 contains three products sold in the US and Canada in 2019, 2020, and 2021. We discuss four architectures using the business object ProductCohort.

Architecture 1: Delimited string

Many applications convert an array of values to a comma-delimited string and store the delimited string using a text field. The following SQL creates the productcohort table with four columns:

id – A primary key

products – A text field for multiple product names

regions – A text field for multiple regions

years – A text field for multiple years

The following SQL inserts the sample ProductCohort1 data record into the table productcohort:

The architecture represents the business object ProductCohort with a simple table productcohort. Business may require records in the database table productcohort to be copied to a data warehouse. In that case, an ETL job based on the single table productcohort suffices to meet those needs.

The applications using the table have to convert data from multiple array values to a delimited string and vice versa. The applications also need to escape the comma delimiter appeared in array values.

The architecture can have scaling issues for searching and filtering operations, compared to the other options reviewed in this post. The following SQL query looks up the productcohort records that contain the product Product2 sold in the US in 2020:

The preceding query of table records based on a user-specified matching criteria often results in string matching and full table scans, which causes delays when the table size grows.

For performance testing, we insert 100,000 rows into the table blogs.productcohort. We run the following select statements.

Query 1:

Query 2:

Query 3:

Query Number

Returned rows

Elapsed Time (ms)

Query 1

1

48

Query 2

2

55

Query 3

1

64

Average

1.3

56

Execution plan for query 1:

Postgres full text searching (FTS) finds natural-language documents that satisfy a query and optionally sorts the query results by relevance. You can speed up the full text searching on documents by creating GIN indexes which preprocess documents and save indexes for later rapid search. The preprocessing step eliminates the stop words such as ( a, an, is, are) which are not used in searching. It also normalizes the words in documents while creating indexes so that a search can find various forms of a normalized word. The following code shows how to create the GIN indexes for the table and how to query the table using the full text searching

Query A:

Execution plan:

Query B:

Execution plan:

Query C:

Execution plan

Query Number

Returned rows

Elapsed Time (ms)

Query A

1

55

Query B

1

93

Query C

1

469

Average

1

206

In our example, the values in the column “products”, “regions”, and “years” are delimited strings constructed by concatenating multiple basic array elements. They are much simpler than regular text documents. As shown in the testing results, creating GIN indexes by normalizing words in those data fields does not offer a performance advantage over the similarity query in our case.

Architecture 2: Primary-detail table

A traditional architecture is to store the array values for regions, years, and products to detail tables and store the business object ProductCohort in a primary table. Relationships between the primary table and the detail tables are stored in a set of relation tables. The following diagram shows the design of seven tables to represent the ProductCohort business object.

The following SQL creates the product table, and uses the following parameters:

id – A primary key

text – A text field for a product name

We populate the table with the following SQL statements:

The following SQL creates the year table, with the following parameters:

id – A primary key

value – An integer field for a year value

We populate the table with the following SQL statements:

The following SQL creates the region table with the following parameters:

id – A primary key

name – A text field for the region name value

We populate the table with the following SQL statements:

The following SQL creates the productcohort2 table. The id column is a primary key to represent a product cohort.

We populate the table with the following SQL statements. You can extend this table to add more information about a product cohort, such as name, description, and so on.

The cohortproductrelation table describes the relationships between the records in the productcohort2 table and the records in the product table. A record in cohortproductrelation contains two foreign key values: one to point back to a record in the productcohort2 table and one to point back to a record in the product table. The following SQL shows the cohortproductrelation table, with the following parameters:

product_cohort_id – A foreign key for the primary key ID in the productcohort2 table

product_id – A foreign key for the primary key ID in the product table

We populate the table with the following SQL statements.

The cohortregionrelation table describes the relationships between the records in the productcohort2 table and the records in the region table. A record in the cohortregionrelation contains two foreign key values: one to point back to a record in the productcohort2 table and one to point back to a record in the region table. The following SQL creates the cohortregionrelation table, with the following parameters:

product_cohort_id – A foreign key for the primary key ID in the productcohort2 table

region_id – A foreign key to the ID for the primary key ID in the region table

We populate the table with the following SQL statements.

The cohortyearrelation table describes the relationships between the records in the productcohort2 table and the records in the year table. A record in the cohortyearrelation contains two foreign key values: one to point back to a record in the productcohort2 table and one to point back to a record in the year table. The following SQL creates the cohortyearrelation table, with the following parameters:

product_cohort_id – A foreign key for the primary key ID in the productcohort2 table

year_id – A foreign key for the primary key ID in the year table

We populate the table with the following SQL statements.

The primary-detail architecture represents the business object ProductCohort with seven normalized tables. To test query performance, we populate the tables with the data records generated from 100,000 business objects and then run the following queries.

Query 1:

Query 2:

Query 3:

Query Number

Returned rows

Elapsed Time (ms)

Query 1

2

84

Query 2

1

88

Query 3

1

95

Average

1.3

89

Execution plan for query 3:

To test the performance with indexes, we create six indexes with the following SQL statements.

We review the execution plan and find that only two indexes product_name_idx and cpr_productid_idx are used. Therefore, we drop four other unused indexes.

We run the three queries again after finalizing the indexes.

Query 4

Query 5:

Query 6

Query Number

Returned rows

Elapsed Time (ms)

Query 4

1

52

Query 5

1

57

Query 6

1

44

Average

1

51

This option appears to have a better performance than the first option, but we can speed up queries further by leveraging other data types as described in the architecture option 3 and 4.

Architecture option 3: PostgreSQL JSON data type

This architecture stores the business object ProductCohort as JSON data records. PostgreSQL offers two data types for storing JSON data: json and jsonb. The json data type stores the input JSON string as text and we need no preprocessing to save the string into a database column. When the jsonb data type is used to save an input text, the input text is converted into a decomposed binary format. The jsonb data type also supports indexing, which can result in a performance advantage over the json data type.

The following SQL creates the productcohort3 table, with the following parameters:

id – A primary key

products_regions_years_json – A jsonb data field to save a business object ProductCohort

The following SQL inserts the sample business object ProductCohort1 into the table ProductCohort3:

The architecture represents the business object ProductCohort1 as a JSON payload in the PostgreSQL database. Modern databases and data warehouses support JSON data formats. Copying the productcohort3 table to a data warehouse solution is as simple as creating a single-table based ETL job.

The applications using the productcohort3 table need to serialize a business object to a JSON string when applications load the object into the PostgreSQL table. Applications also need to de-serialize a JSON string back to its business object format when the application retrieves data records from the PostgreSQL table. Converting between business objects and JSON is a simple task in modern programming language. JSON format also provides the flexibility for applications to add additional attributes to the JSON payloads.

JSON is schemeless. Applications may load unexpected payloads, or payloads of different versions into the same database table. It is the application’s responsibility to maintain data integrity of the database table.

PostgreSQL provides JSON operators and functions to filter, search, process JSON data.

The following SQL query finds the productcohort3 records that contain the product Product1 sold in the US in 2020:

For performance testing, we insert 100,000 rows into the table blogs.productcohort3 and run the following queries.

Query 1:

Query 2

Query 3

Query Number

Returned rows

Elapsed Time (ms)

Query 1

1

87

Query 2

1

81

Query 3

1

89

Average

1

86

Execution plan for query 1:

To scale and speed up searching and filtering operations, we create a GIN index to the column products_regions_years_json:

We rerun the three queries after creating the index.

Query 4

Query 5

Query 6

Query Number

Returned rows

Elapsed Time (ms)

Query 4

2

42

Query 5

1

40

Query 6

2

44

Execution plan for query 4:

In this section, we show how to store arrays as a jsonb data type. Finally, we show how to store arrays as a native PostgreSQL array type.

Architecture option 4: PostgreSQL array data type

This architecture uses the PostgreSQL array type. The array data type allows you to define a column to be an array of any valid data type. The following SQL creates the productcohort4 table, with the following parameters:

id – A primary key

products – An array column to save product names

years – An array column to save years

regions – An array column to save regions

The following SQL inserts the sample ProductCohort1 business object into the table productcohort4:

With this architecture, the table schema has the same structure as the business object ProductCohort. However, some databases, such as MySQL, don’t support the array data type. Business may require the records in the PostgreSQL table productcohort4 to be copied to a MySQL database. In that case, you need to represent arrays using a different data type supported by the MySQL database, such as the JSON data type.

PostgreSQL provides specialized operations and functions for the array type to filter, search, and process array data fields. The following SQL query searches for the productcohort4 records that contain the product Product2 sold in the US in 2020:

The following screenshot shows the query result.

For performance testing, we insert 100,000 rows into the table blogs.productcohort4. we run the following queries.

Query 1

Query 2

Query 3

Query Number

Returned rows

Elapsed Time (ms)

Query 1

1

86

Query 2

3

72

Query 3

1

93

Average

1.7

84

Execution plan of query 1:

We create GIN indexes on array columns to scale and speed up the searching and filtering operations:

We rerun the three queries after creating the indexes.

Query 4

Query 5

Query 6

Query Number

Returned rows

Elapsed Time (ms)

Query 4

1

37

Query 5

1

37

Query 6

1

43

Average

1

39

Execution plan for Query:

From the execution plan, we see the query uses the index idx_products_array and the index idx_products_array, but not the index idx_years_array. We can drop the unused index.

Performance and Recommendations

We present four architectures for storing business objects with array data fields in a PostgreSQL database. To evaluate their performance, we conduct tests based on data records generated from 100,000 business objects. We use the Amazon Aurora PostgreSQL version 14.6. The instance type is db.r6g.large which has 2 vCPUs and 16GB memory. We run select queries on the table, and then add proper indexes. We rerun the select queries again and record the average elapsed times.

Architecture Options

Average query time without index (milliseconds)

Average query time with indexes (milliseconds)

Data Tables Size

Index Size

Option 1 (Delimited string)

56

206

15 MB

5 MB

Option 2 (Primary-detail tables)

89

51

57 MB

21 MB

Option 3 (JSONB data type)

86

42

22 MB

2 MB

Option 4 (Array data type)

84

39

20 MB

2 MB

In scenarios when you need to perform a full text search of text documents, option 1 is a good fit. PostgreSQL will exclude stop words and normalize words while building GIN indexes. However, in our case, we are not saving text documents to data fields, creating GIN index does not offer a performance advantage over the similarity query. Performance varies based on the complexity of text documents, table size, and queries. You need to run your own tests, check execution plans and performances if you plan to add GIN indexes to support full text searching.

Compared with other options of using one single table, option 2 of using primary-detail tables creates seven database tables. It may result into a complex application implementation. You need to deal with multiple tables and understand the key and foreign key relationships among tables. If business requires data records to be copied to other systems, for the same reason, option 2 may cost you more to develop data migration jobs. We do not recommend this approach.

Options 3 (JSONB data type) and 4 (Array data type) offer a simpler design with a single table, making them efficient for storing data. Based on our tests, we find that the performance is better when using GIN indexes compared to when no index is present. We observe that two options offer the similar query performance. We recommend using the Array data type to store array data in your database if you have a fixed structure for your business object. By choosing the Array data type, you can benefit from a simpler design and improved performance, ensuring efficient storage of your array data in PostgreSQL. If you like to have flexible structure to describe your array data, you may choose the JSONB data type over the Array data type. Using the JSONB data type, you can change the structures of your JSONB data fields without affecting the table schema. JSON also has better interchangeability with other database systems. For example, MySQL does not have an array data type, but it supports a native JSON data type.

Conclusion

In this post, we show you four architectures to store business objects with array data fields to a PostgreSQL database. For each architecture, we explain the table design and its effects on applications and data migration jobs. We also show how to search and filter database records based on array values and what options are available to improve operation performance.

The architectures of using the JSONB and array data types are easier to support and maintain than other architectures. You can create GIN indexes on JSONB or array columns to improve query performance.

If you have questions or suggestions, please leave them in the comments section.

About the Authors

Jeff Li is a Senior Cloud Application Architect with the Professional Services team at AWS. He is passionate about diving deep with customers to create solutions and modernize applications that support business innovations. In his spare time, he enjoys playing tennis, listening to music, and reading.

Shunan Xiang is a Senior Database Architect with the Professional Services team at AWS. He works as a database migration specialist and data lake specialist to help Amazon customers in design and implementing scalable, secure, performant, and robust database/data solutions on the cloud. In his spare time, he enjoys cooking, listening to music, and reading.

Read MoreAWS Database Blog