At Next ‘23, we launched AlloyDB AI, an integrated set of capabilities built into AlloyDB for building generative AI applications. One of those capabilities allows you to call a Vertex AI model directly from the database using SQL.

AlloyDB is a fully managed PostgreSQL-compatible database that offers superior performance, availability and scale. In our performance tests, AlloyDB delivers up to 100X faster analytical queries than standard PostgreSQL, and AlloyDB AI runs vector queries up to 10x faster compared to standard PostgreSQL when using the IVFFlat index. It also provides enhanced vector search and predictive machine learning (ML) capabilities.

With Vertex AI, Google’s end-to-end AI platform, you can upload and label your data and train and deploy your own ML models. You can also utilize Google, third-party, and open-source AI models through Model Garden on Vertex AI.

You can enable AlloyDB access to Vertex AI with AlloyDB AI using the google_ml_integration extension, which allows you to run predictions with your data in AlloyDB using custom models in Vertex AI or models from Model Garden. AlloyDB AI also integrates open-source tools like pgvector and LangChain, allowing you to use AlloyDB as a vector store to store embeddings and connect to your LangChain applications.

By combining Google Cloud products and open-source AI tools, AlloyDB AI enables you to enhance your applications by creating new user experiences using live data. In other words, you can create dynamic AI experiences that change in real time according to changes in your database.

In this post, we’ll explore five examples of using SQL to access models in Vertex AI or your own custom models for similarity search, sentiment analysis, bot detection, healthcare predictions, and risk prediction.

Similarity search with vector embeddings

Let’s say you own a store called South Bay Furnishers and you store your inventory information in a relational database. You might have a table called products to store information, such as product descriptions, inventory-related information, and more.

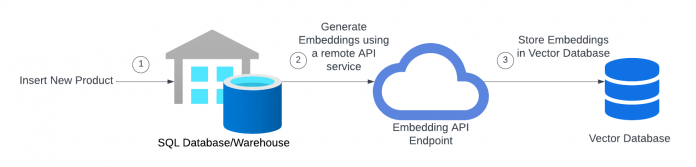

Traditionally, your workflow might look like this if you want to generate embeddings for your product descriptions.

First, you need to pull your data from a database or data warehouse, generate embeddings for that data, and then upsert the resulting vector into a vector database. At each step, you may encounter performance bottlenecks, such as batching and API limits. In short, traditional workflows often require building your own ETL process that relies on multiple third-party services. Additionally, you need to store vectors in a dedicated vector database, which must be maintained along with the database your application relies on for other transactions.

AlloyDB AI turns this three-step process into one SQL query, allowing you to generate embeddings from your data and store them in the same AlloyDB database you already use.

To automatically generate embeddings for a table, you can simply add a generated column to your table using the following command:

Now, when you insert rows into your table, Vertex AI’s modeltextembedding-geckowill automatically generate text embeddings for your products. You can then usepgvectorand AlloyDB AI’s enhanced vector search capabilities to perform similarity search and enhance your product recommendations experience.

Sentiment analysis

You can leverage even more of your data in AlloyDB with pretrained models from Model Garden. For example, you can use Vertex AI’s sentiment analysis model to gauge a user’s sentiment without having to train your own model.

Imagine you have a video streaming service where people subscribe to live-streaming channels hosted by different content creators. Viewers can write live comments, which are stored in AlloyDB. Let’s say you would like to gauge the overall viewer sentiment, store that information in your database, and display the overall sentiment of the comments on the channel as an emoji. For example, a creator and their viewers would see a 😐 emoji if comments are 50% positive and 50% negative or a 😀 emoji if the comments are overwhelmingly positive.

When storing the comments, you can use Vertex AI’s pretrained sentiment analysis model to calculate a score and magnitude value to enter in AlloyDB. The model outputs a score between -1 and 1, where -1 is negative, 0 is neutral, and 1 is positive. It also calculates a magnitude reflecting the quantity of negative vs. positive content. For instance, the model would give the comment “You are a terrible streamer!” a score of -.868 and a magnitude of .977.

If you wanted to calculate how negative comments are on a stream, you would shift the score distribution to range between 0 and 1 (instead of -1 and 1) and multiply by the magnitude.

To shift the distribution you might use an equation such as f(x) = .5(x +1) to map the interval [-1,1] onto [0,1].

You would then sum the results for each comment and divide by the total number of comments. Your distribution can be converted into a percentage by multiplying by 100, so now 0% would indicate that most streamers have negative comments, 50% indicates you have a balanced mix of negative and positive comments and 100% indicates you nearly exclusively have positive comments. In the following example, the stream would get a score of .306 or a 31% positivity score. Since this score is considered negative (i.e. below 50%), the stream would display a 😠 emoji.

Using the pretrained model, you can immediately gauge viewer reaction to live streams, allowing you to create dynamic experiences for users and give creators real-time feedback so they can course correct as they stream. You can also track the overall sentiment for each creator and post it to their profile to provide more feedback to help them improve their content.

Custom models

Aside from accessing models in Model Garden, you can also run predictions using your own custom models deployed on Vertex AI using the google_ml_integration extension by specifying an endpoint id. You can then run predictions on live data from your own database for real-time predictions on time-sensitive transactions. Here are three examples.

Bot detection in online games

Say you are a video game developer for a real-time multiplayer sports game. Some players have complained that the game isn’t fair as other players appear to be cheating using bots, causing a significant decline in daily active users. To combat this issue, your data science team created a model to detect when players are cheating. Once you flag a player as cheating, you can remove them from the game and ban them.

Catching cheaters is a cat-and-mouse game for game developers. As the model incorporates more signals to identify bot-like behavior, hackers will inevitably find new ways to defeat it. Luckily, it’s easy to update models with zero downtime using Vertex AI and AlloyDB. All you need to do is switch your deployed endpoint to your new model, and your application will be able to use it without any changes to your application code.

Healthcare predictions

Now say you are a health-tech company looking to provide primary care physicians with better insights into their patients’ heart health. You may want to generate cardiac risk scores for patients based on their updated vitals, such as BMI and blood pressure. Using a generated column, you can automatically calculate a new cardiac risk score with the new vitals entered into your AlloyDB database. You can then return an updated record to your application and display this information in a patient’s chart so that the physician can determine the best course of treatment.

Insurance risk models

A core competency of any insurance company is the ability to accurately model risk. For instance, imagine you’re an auto insurance company that offers online quotes. Every time a customer applies for a policy, you need to evaluate the risk based on their data (e.g., driving record) and run a risk model to generate a quote.

If customer input and other data are stored in AlloyDB, you can use AlloyDB AI to access the data and run a risk model hosted on Vertex AI. The same approach works for claims processing, where a model needs to determine the validity of the claim, and other types of fraud detection in insurance and finance.

Conclusion

Using machine learning models with data from your organization’s database often involves a multi-step process and the support of a data science team. AlloyDB AI turns this process into just one step allowing you to use machine learning models to generate embeddings, classify data, predict outcomes based on data, and more. Additionally, AlloyDB enables developers to create AI applications without having to wait for support from data science teams by providing easy access to Vertex AI’s pretrained models. Learn more about AlloyDB AI here or try this tutorial to learn how to use AlloyDB AI with Langchain applications.

Cloud BlogRead More