Today Amazon SageMaker announced support for SageMaker training instance fallbacks in Amazon SageMaker Automatic Model Tuning (AMT), which lets users specify alternative compute resource configurations.

SageMaker automatic model tuning finds the best version of a model by running many training jobs on your dataset using the hyperparameter ranges that you specify for your algorithm. It then chooses the hyperparameter values that produce the best-performing model, as measured by a metric that you choose.

Previously, users could specify only a single instance configuration. This causes problems when the specified instance type is unavailable because of high utilization: your training jobs would fail with an InsufficientCapacityError (ICE). AMT used smart retries to avoid these failures in many cases, but retries couldn't help when capacity stayed low for a sustained period.

With this new feature, you can specify a list of instance configurations in order of preference, so that your AMT job automatically falls back to the next configuration in the list when capacity is low.
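To make the fallback order concrete, the following sketch simulates the selection logic in plain Python. It is an illustration only, not SageMaker's implementation: `choose_instance_config` and the `has_capacity` callback are hypothetical names, and the real capacity check happens inside the service.

```python
from __future__ import annotations

from typing import Callable, Optional


def choose_instance_config(
    instance_configs: list[dict],
    has_capacity: Callable[[str], bool],
) -> Optional[dict]:
    """Return the first config, in order of preference, whose instance type has capacity.

    Hypothetical illustration: `has_capacity` stands in for SageMaker's internal check.
    """
    for config in instance_configs:
        if has_capacity(config["InstanceType"]):
            return config
    # Every option is unavailable, so the job would still run into an ICE.
    return None


configs = [
    {"InstanceType": "ml.m4.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 5},
    {"InstanceType": "ml.m5.4xlarge", "InstanceCount": 1, "VolumeSizeInGB": 5},
]
unavailable = {"ml.m4.xlarge"}  # pretend the preferred type is out of capacity
chosen = choose_instance_config(configs, lambda t: t not in unavailable)
print(chosen["InstanceType"])  # ml.m5.4xlarge
```

The list order carries the preference, so the first entry should be the instance type you would choose if capacity were unlimited.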

In the following sections, we walk through these high-level steps for overcoming an ICE:

- Define the Hyperparameter Tuning Job Configuration
- Define the Training Job Parameters
- Run a Hyperparameter Tuning Job
- Describe the Training Jobs

Define the Hyperparameter Tuning Job Configuration

The HyperParameterTuningJobConfig object describes the tuning job, including the search strategy, the objective metric used to evaluate training jobs, the ranges of the parameters to search, and the resource limits for the tuning job. Today's release doesn't change this part of the configuration; nevertheless, we go over it to give a complete example.

The ResourceLimits object specifies the maximum number of training jobs and parallel training jobs for this tuning job. In this example, we use the random search strategy and allow a maximum of 10 training jobs in total (MaxNumberOfTrainingJobs), with at most 5 running concurrently (MaxParallelTrainingJobs).

The ParameterRanges object specifies the ranges of hyperparameters that this tuning job searches. For each hyperparameter, we specify its name along with the minimum and maximum values to search. In this example, we define a continuous range for "eta" and an integer range for "max_depth"; note that the bounds are passed as strings.

```python
AmtTuningJobConfig = {
    "Strategy": "Random",
    "ResourceLimits": {
        "MaxNumberOfTrainingJobs": 10,
        "MaxParallelTrainingJobs": 5
    },
    "HyperParameterTuningJobObjective": {
        "MetricName": "validation:rmse",
        "Type": "Minimize"
    },
    "ParameterRanges": {
        "CategoricalParameterRanges": [],
        "ContinuousParameterRanges": [
            {
                "MaxValue": "1",
                "MinValue": "0",
                "Name": "eta"
            }
        ],
        "IntegerParameterRanges": [
            {
                "MaxValue": "6",
                "MinValue": "2",
                "Name": "max_depth"
            }
        ]
    }
}
```
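To see what the random strategy does with these ranges, here is a simplified local sketch: it draws 10 candidates (mirroring MaxNumberOfTrainingJobs) uniformly from each range and keeps the one with the lowest score (mirroring the "Minimize" objective). This is not how AMT is implemented; it ignores scaling types and parallelism, and `toy_rmse` is a hypothetical stand-in for a real training job's validation:rmse.

```python
import random

# A copy of the ParameterRanges from the configuration above.
parameter_ranges = {
    "CategoricalParameterRanges": [],
    "ContinuousParameterRanges": [{"MaxValue": "1", "MinValue": "0", "Name": "eta"}],
    "IntegerParameterRanges": [{"MaxValue": "6", "MinValue": "2", "Name": "max_depth"}],
}


def sample_hyperparameters(ranges: dict, rng: random.Random) -> dict:
    """Draw one candidate uniformly from each range (a simplified random strategy)."""
    candidate = {}
    for r in ranges.get("ContinuousParameterRanges", []):
        # Bounds are strings in the API, so convert them before sampling.
        candidate[r["Name"]] = rng.uniform(float(r["MinValue"]), float(r["MaxValue"]))
    for r in ranges.get("IntegerParameterRanges", []):
        # randint includes both ends, matching MinValue..MaxValue.
        candidate[r["Name"]] = rng.randint(int(r["MinValue"]), int(r["MaxValue"]))
    for r in ranges.get("CategoricalParameterRanges", []):
        candidate[r["Name"]] = rng.choice(r["Values"])
    return candidate


def toy_rmse(params: dict) -> float:
    """Hypothetical objective standing in for a training job's validation:rmse."""
    return (params["eta"] - 0.3) ** 2 + 0.01 * abs(params["max_depth"] - 4)


rng = random.Random(42)
trials = [sample_hyperparameters(parameter_ranges, rng) for _ in range(10)]
best = min(trials, key=toy_rmse)  # "Minimize": lower is better
print(best)
```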

Define the Training Job Parameters

In the training job definition, we define the input needed to run a training job using the algorithm that we specify. After the training completes, SageMaker saves the resulting model artifacts to an Amazon Simple Storage Service (Amazon S3) location that you specify.

Previously, we specified the instance type, count, and volume size under the ResourceConfig parameter. When that instance type was unavailable, the training job failed with an ICE.

To avoid this, we now use the HyperParameterTuningResourceConfig parameter in the TrainingJobDefinition to specify a list of instance configurations to fall back on. Each entry uses the same format as ResourceConfig. The job traverses the list from top to bottom to find an available instance configuration: if an instance type is unavailable, the next configuration in the list is chosen instead of the job failing with an ICE.

```python
TrainingJobDefinition = {
    "HyperParameterTuningResourceConfig": {
        "InstanceConfigs": [
            {
                "InstanceType": "ml.m4.xlarge",
                "InstanceCount": 1,
                "VolumeSizeInGB": 5
            },
            {
                "InstanceType": "ml.m5.4xlarge",
                "InstanceCount": 1,
                "VolumeSizeInGB": 5
            }
        ]
    },
    "AlgorithmSpecification": {
        "TrainingImage": "433757028032.dkr.ecr.us-west-2.amazonaws.com/xgboost:latest",
        "TrainingInputMode": "File"
    },
    "InputDataConfig": [
        {
            "ChannelName": "train",
            "CompressionType": "None",
            "ContentType": "json",
            "DataSource": {
                "S3DataSource": {
                    "S3DataDistributionType": "FullyReplicated",
                    "S3DataType": "S3Prefix",
                    "S3Uri": "s3://<bucket>/test/"
                }
            },
            "RecordWrapperType": "None"
        }
    ],
    "OutputDataConfig": {
        "S3OutputPath": "s3://<bucket>/output/"
    },
    "RoleArn": "arn:aws:iam::340308762637:role/service-role/AmazonSageMaker-ExecutionRole-20201117T142856",
    "StoppingCondition": {
        "MaxRuntimeInSeconds": 259200
    },
    "StaticHyperParameters": {
        "training_script_loc": "q2bn-sagemaker-test_6"
    }
}
```
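If you already have a TrainingJobDefinition that uses a single ResourceConfig, a small helper can generate the fallback list from simple tuples. This is a sketch; `build_instance_configs` and `with_fallbacks` are hypothetical names, and we assume (check the API reference) that ResourceConfig and HyperParameterTuningResourceConfig should not both be set, so the helper drops the former.

```python
from __future__ import annotations


def build_instance_configs(preferences: list[tuple[str, int, int]]) -> dict:
    """Build a HyperParameterTuningResourceConfig from (type, count, volume_gb) tuples.

    The list order is the fallback order: the first entry is tried first.
    """
    if not preferences:
        raise ValueError("at least one instance configuration is required")
    return {
        "InstanceConfigs": [
            {"InstanceType": itype, "InstanceCount": count, "VolumeSizeInGB": volume}
            for itype, count, volume in preferences
        ]
    }


def with_fallbacks(training_job_definition: dict, preferences) -> dict:
    """Return a copy of the definition that uses fallback instances instead of ResourceConfig."""
    definition = dict(training_job_definition)  # shallow copy; the input is left untouched
    # Assumption: the two resource parameters are alternatives, so remove the old one.
    definition.pop("ResourceConfig", None)
    definition["HyperParameterTuningResourceConfig"] = build_instance_configs(preferences)
    return definition


legacy = {
    "ResourceConfig": {"InstanceType": "ml.m4.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 5}
}
updated = with_fallbacks(legacy, [("ml.m4.xlarge", 1, 5), ("ml.m5.4xlarge", 1, 5)])
print(updated)
```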

Run a Hyperparameter Tuning Job

In this step, we create and run a hyperparameter tuning job that uses the hyperparameter tuning resource configuration defined above.

We initialize a SageMaker client and create the job by specifying the tuning config, training job definition, and a job name.

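```python
import boto3

# Create a low-level SageMaker client (uses your default AWS credentials and Region).
sm = boto3.client("sagemaker")

# Start the tuning job using the two dictionaries defined above.
sm.create_hyper_parameter_tuning_job(
    HyperParameterTuningJobName="my-job-name",
    HyperParameterTuningJobConfig=AmtTuningJobConfig,
    TrainingJobDefinition=TrainingJobDefinition,
)
```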
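The create call returns as soon as the job is accepted; the tuning itself runs asynchronously. A minimal polling sketch follows, assuming the `describe_hyper_parameter_tuning_job` call and its `HyperParameterTuningJobStatus` field. `wait_for_tuning_job` is a hypothetical helper, and the set of statuses treated as terminal is our assumption. The client and sleep function are passed in so the loop can be exercised without AWS.

```python
import time

# Assumed terminal statuses for a tuning job.
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_tuning_job(sm_client, job_name: str, poll_seconds: float = 60.0,
                        sleep=time.sleep) -> dict:
    """Poll DescribeHyperParameterTuningJob until the job reaches a terminal status."""
    while True:
        description = sm_client.describe_hyper_parameter_tuning_job(
            HyperParameterTuningJobName=job_name
        )
        if description["HyperParameterTuningJobStatus"] in TERMINAL_STATUSES:
            return description
        sleep(poll_seconds)


# Usage with the client created above:
# final = wait_for_tuning_job(sm, "my-job-name")
# print(final["HyperParameterTuningJobStatus"])
```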

Describe the Training Jobs

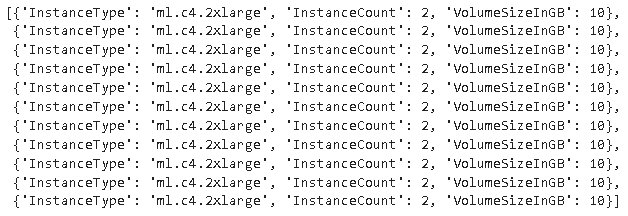

The following function lists all instance types used during the experiment. You can use it to verify whether a SageMaker training job automatically fell back to the next instance type in the list during resource allocation.
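A minimal sketch of such a function, assuming the boto3 SageMaker calls `list_training_jobs_for_hyper_parameter_tuning_job` and `describe_training_job`, and assuming that a training job's `ResourceConfig` reports the instance configuration it actually ran on. `list_instance_types` is a hypothetical name; it takes the client as an argument so it can be tested without AWS.

```python
def list_instance_types(sm_client, tuning_job_name: str) -> dict:
    """Map each training job launched by the tuning job to the instance type it used."""
    instance_types = {}
    kwargs = {"HyperParameterTuningJobName": tuning_job_name}
    while True:
        page = sm_client.list_training_jobs_for_hyper_parameter_tuning_job(**kwargs)
        for summary in page["TrainingJobSummaries"]:
            name = summary["TrainingJobName"]
            details = sm_client.describe_training_job(TrainingJobName=name)
            # Assumption: ResourceConfig reflects the fallback configuration that was allocated.
            instance_types[name] = details["ResourceConfig"]["InstanceType"]
        next_token = page.get("NextToken")
        if not next_token:  # no more pages
            break
        kwargs["NextToken"] = next_token
    return instance_types


# Usage with the client created earlier:
# from collections import Counter
# print(Counter(list_instance_types(sm, "my-job-name").values()))
```

Any job reporting an instance type other than the first entry in InstanceConfigs ran on a fallback configuration.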

Conclusion

In this post, we demonstrated how you can now define a pool of instances on which your AMT experiment can fall back in the case of an InsufficientCapacityError. We saw how to define a hyperparameter tuning job configuration, including the maximum number of training jobs and the maximum number of parallel jobs. Finally, we saw how to overcome the InsufficientCapacityError by using the HyperParameterTuningResourceConfig parameter in the training job definition.

To learn more about AMT, visit Amazon SageMaker Automatic Model Tuning.

About the authors

Doug Mbaya is a Senior Partner Solutions Architect with a focus on data and analytics. Doug works closely with AWS partners, helping them integrate data and analytics solutions in the cloud.

Kruthi Jayasimha Rao is a Partner Solutions Architect in the Scale-PSA team. Kruthi conducts technical validations for Partners, enabling them to progress in the Partner Path.

Bernard Jollans is a Software Development Engineer for Amazon SageMaker Automatic Model Tuning.

AWS Machine Learning Blog