Cloud Run is a managed compute platform that lets you run containers directly on top of Google’s scalable infrastructure. You can consume Cloud Run applications from Apigee X, with minimal required resources. Apigee X is a platform for developing and managing APIs. By fronting services with a proxy layer, Apigee provides an abstraction or facade for your backend service APIs and provides security, rate limiting, quotas, analytics, and more.

Read on to learn how to consume Internal Cloud Run applications (apps) from another Cloud project and VPC using Private Service Connect (PSC). We then show how to connect to Cloud Run apps from Apigee X while minimizing the number of Service Attachments.

Use Case

Cloud Run allows developers to spend their time writing their code, and very little time operating, configuring, and scaling their runtime environment.

In short: You don’t need to create a cluster or manage infrastructure to be productive with Cloud Run.

While Cloud Run makes it simple to expose your application to the public internet, you can also use ingress settings to restrict external network access to your services.

But how do you access these internal applications from another GCP project or Virtual Private Cloud (VPC) automatically?

This raises the question of how to connect two VPCs, or at least how to reach a network resource in one VPC from another VPC, possibly in a different Cloud project, assuming the resources are located in the same Cloud region.

The two solutions we discuss later in this article are:

VPC Peering

Private Service Connect (PSC)

We selected these two capabilities because this article is about consuming Internal Cloud Run apps from Apigee X, and Apigee X supports both VPC Peering and PSC for API exposure and target endpoint connectivity.

This use case is particularly interesting in the context of API Management, where you create internal services and want to expose only a subset of them as APIs to specific external clients. Additionally, you can create a developer portal that exposes these APIs as products, which could even be monetized.

When you create Cloud Run services and use PSC from Apigee X target endpoints, you can minimize the number of Service Attachments you need to create.

This is the final use case we implement and describe in this article.

Solutions for Network Communications between VPCs

In this section, we focus on two possible solutions to reach a resource present in one VPC from another VPC.

VPC Peering

Google Cloud VPC Network Peering connects two VPC networks so that resources in each network can communicate with each other. One of the benefits of VPC peering is network security: service owners do not need to have their services exposed to the public Internet and deal with its associated risks.

On the other hand, setting up network communication such as VPC Peering requires coordinating subnets and managing complex routing topologies across different networks and organizations.

This is a real challenge for enterprises that want to keep services completely isolated to address security concerns. For example, many companies require a network security appliance on the consumer side to control the network and transport layers of VPC peering communications.

Private Service Connect

PSC provides a one-way communication channel between an endpoint and a Service Attachment. Unlike VPC Peering, it does not expose the underlying infrastructure, so connecting and managing services is simpler, more secure, and more private.

On the other hand, PSC requires configuring Endpoint Attachments and Service Attachments for each application that needs to be accessed, although several solutions exist to minimize the number of these attachments.

In this article, we base our solution on PSC from an Apigee X instance to Cloud Run services (backends). We deliberately do not cover the Northbound aspects, which are left to the reader's choice (VPC Peering via an External Load Balancer and a “classic” MIG, or an External Load Balancer and a PSC Network Endpoint Group).

Solution Overview

This section presents the proposed solution that is used to access Internal Cloud Run services from an Apigee X Runtime.

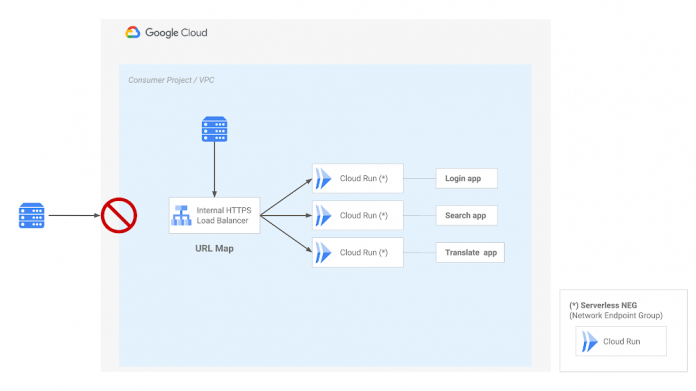

An internal HTTPS Load Balancer (L7 ILB) serves several Cloud Run apps using a Serverless NEG and a URL Mask. A Serverless NEG backend can point to several Cloud Run services.

A URL mask is a template of your URL schema. The Serverless NEG uses this template to map the request to the appropriate service.

The L7 ILB is accessed through a PSC Service Attachment that can be reached by an Apigee X instance via a PSC Endpoint Attachment. These two attachments (Endpoint and Service Attachments) along with the L7 ILB must be part of the same GCP region.

Apigee X uses a target endpoint to reach the L7 ILB through the PSC channel. This target endpoint is configured for HTTPS and uses a dedicated hostname (the hostname of the L7 ILB).

Therefore, we need two DNS resolutions:

The first on Apigee X (target endpoint) to point to the PSC Endpoint Attachment: a private Cloud DNS (A Record) and a DNS Peering are required to manage the resolution. This Private DNS Zone is configured on the Consumer VPC (named apigee-network in the picture above) that is peered with the Apigee X VPC. The DNS Peering is configured between the two VPCs for a particular domain (example.com in the example above).

The second on the PSC Service Attachment to point to the L7 ILB: a private Cloud DNS (A Record) is required to manage the resolution. This Private Zone is configured on the VPC (named ilb-network in the picture above). Note that the L7 ILB presents an SSL Certificate used to establish secure communication.

An identity token (ID Token) is required to consume the different Cloud Run services. This ID token is generated on Apigee X and transmitted as a Bearer Token to the Cloud Run apps.
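When a call to Cloud Run is rejected, it can help to look at the claims of the ID token that Apigee forwards. The following Python sketch shows the `Authorization: Bearer` header format and decodes a JWT payload to read its `aud` claim. The helper names are hypothetical, and the decoding deliberately skips signature verification, so it is only a debugging aid.

```python
import base64
import json


def decode_jwt_claims(token: str) -> dict:
    """Return the claims of a JWT (for example a Google ID token) WITHOUT
    verifying its signature. Use for debugging only, never for authorization."""
    try:
        _header, payload, _signature = token.split(".")
    except ValueError:
        raise ValueError("not a JWT: expected three dot-separated parts") from None
    # JWT segments are base64url-encoded without padding; restore the padding.
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def bearer_header(token: str) -> dict:
    """Build the header that carries the ID token to the Cloud Run service."""
    return {"Authorization": f"Bearer {token}"}
```

Comparing the decoded `aud` value with the URL of the target Cloud Run service is usually the first thing to check.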

Here is the configuration of the target endpoint on Apigee X:

The API Proxy that contains this target endpoint configuration can make authenticated calls to Google services, such as Cloud Run. A dedicated Service Account with the role “roles/run.invoker” must be created and configured on this API proxy, as explained in the Apigee documentation.
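The shape of such a target endpoint can be sketched with Python's `xml.etree.ElementTree`. This sketch assumes Apigee's Google authentication elements (`Authentication`, `GoogleIDToken`, and `Audience` with a `ref` attribute) nested in `HTTPTargetConnection`. The endpoint name, the hostname `run.example.com`, and the flow variable `cloudrun.audience` are hypothetical placeholders, not values from the original setup.

```python
import xml.etree.ElementTree as ET


def build_target_endpoint(name: str, url: str, audience_var: str) -> str:
    """Render an Apigee TargetEndpoint that calls `url` with a Google ID token.

    The audience is read at runtime from the flow variable `audience_var`
    (assumed to be set by an AssignMessage policy), so a single endpoint can
    reach several Cloud Run services behind the same L7 ILB hostname.
    """
    endpoint = ET.Element("TargetEndpoint", name=name)
    conn = ET.SubElement(endpoint, "HTTPTargetConnection")
    auth = ET.SubElement(conn, "Authentication")
    id_token = ET.SubElement(auth, "GoogleIDToken")
    # ref points at a flow variable instead of a literal audience value.
    ET.SubElement(id_token, "Audience", ref=audience_var)
    # HTTPS URL on the L7 ILB hostname, which Apigee resolves to the
    # PSC Endpoint Attachment through the private DNS zone and DNS peering.
    ET.SubElement(conn, "URL").text = url
    return ET.tostring(endpoint, encoding="unicode")


if __name__ == "__main__":
    print(build_target_endpoint("default", "https://run.example.com", "cloudrun.audience"))
```

Referencing a variable rather than hard-coding the audience is what allows one target endpoint to serve every service behind the URL mask.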

The audience (aud attribute of the ID Token) is dynamically set using an AssignMessage policy on Apigee:
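Cloud Run checks that the token audience matches the service it protects (by default, the service URL), while all services here share one ILB hostname, so the audience has to be chosen per request. The Python sketch below mimics that choice outside Apigee: it takes the first path segment, the same segment an `example.com/<service>` URL mask uses, and looks up the matching Cloud Run URL. The mapping and the service URLs are hypothetical; in Apigee, the result would be written to a flow variable by the AssignMessage policy.

```python
from urllib.parse import urlsplit

# Hypothetical mapping from service name to the Cloud Run service URL
# used as the ID token audience.
CLOUD_RUN_AUDIENCES = {
    "hello": "https://hello-abc123-ew.a.run.app",
    "orders": "https://orders-abc123-ew.a.run.app",
}


def audience_for(path_suffix: str) -> str:
    """Return the audience for a proxy path suffix such as '/hello/greet'."""
    # Drop any query string and empty segments, keep the first path segment.
    segments = [s for s in urlsplit(path_suffix).path.split("/") if s]
    if not segments:
        raise ValueError("path suffix does not name a Cloud Run service")
    service = segments[0]
    try:
        return CLOUD_RUN_AUDIENCES[service]
    except KeyError:
        raise ValueError(f"unknown Cloud Run service: {service}") from None
```

Failing fast on an unknown service keeps Apigee from sending a token whose audience Cloud Run would reject anyway.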

How to access Internal Cloud Run apps?

In this section, we describe how to access Internal Cloud Run applications using an internal HTTPS load balancer and a Serverless Network Endpoint Group (NEG).

Internal HTTPS Load Balancer

The different components required to access a Cloud Run app using a Serverless NEG and an Internal HTTPS Load Balancer are presented in the following picture:

When the HTTP(S) load balancer is enabled for serverless apps, you can map a single URL to multiple serverless services that serve at the same domain.

A client application can connect to the HTTPS Internal Load Balancer, which exposes specific SSL Certificates through a target HTTPS proxy.

Serverless NEG

Serverless NEGs let you use Cloud Run services with your load balancer. After you configure a load balancer with a Serverless NEG backend, requests to the load balancer are routed to the Cloud Run backend.

How to minimize the number of Service Attachments?

URL Masking

A Serverless NEG backend can point to either a single Cloud Run service, or a URL Mask that points to multiple Cloud Run services. A URL Mask is a template of your URL schema. The Serverless NEG uses this template to map the request to the appropriate service.

URL Masks are an optional feature that makes it easier to configure Serverless NEGs when your serverless application consists of multiple Cloud Run services. Serverless NEGs used with internal HTTP(S) load balancers can only use a URL Mask that points to Cloud Run services.

URL masks are useful if your serverless app is mapped to a custom domain rather than the default address that Google Cloud provides.

With a custom domain such as example.com, you could have multiple services deployed to different subdomains or paths on the same domain. In such cases, instead of creating a separate Serverless NEG backend for each service, you can create a single Serverless NEG with a generic URL Mask for the custom domain (for example, example.com/<service>). The NEG extracts the service name from the request’s URL.
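The way a mask extracts the service name can be illustrated with a small Python model. It is a simplification, not Google's implementation: only the `<service>` placeholder is modeled, it matches one DNS label or one path segment, and the rest of the path is left for the service. The function names are hypothetical.

```python
import re
from typing import Optional


def compile_url_mask(mask: str) -> "re.Pattern[str]":
    """Turn a URL mask such as 'example.com/<service>' into a regex (simplified model)."""
    parts = mask.split("<service>")
    if len(parts) != 2:
        raise ValueError("mask must contain exactly one <service> placeholder")
    prefix, suffix = (re.escape(p) for p in parts)
    # Cloud Run service names use lowercase letters, digits and hyphens.
    return re.compile(rf"^{prefix}(?P<service>[a-z0-9-]+){suffix}(?P<rest>/.*)?$")


def route(mask: str, host: str, path: str) -> Optional[str]:
    """Return the Cloud Run service a request maps to, or None if it does not match."""
    match = compile_url_mask(mask).match(host + path)
    return match.group("service") if match else None
```

For example, with the mask `example.com/<service>`, a request to `example.com/login/users` is routed to a service named `login`, and with `<service>.example.com`, a request to `orders.example.com/v1` goes to `orders`.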

Accessing Cloud Run apps deployed on different Google Cloud projects and VPCs

From Apigee X, it is possible to access different Internal Cloud Run services that are deployed on different VPCs and GCP projects. In this situation, it is necessary to create different PSC Endpoint Attachments on the Apigee X side.

Each of these Apigee X Endpoint Attachments is associated with a dedicated PSC Service Attachment, which can belong to a different GCP project and VPC but must always be in the same GCP region.
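The resulting attachment count can be sketched in Python. The model is an assumption made for illustration: with sharing, each project and VPC pair hosts one L7 ILB with a URL-mask Serverless NEG, exposed by one Service Attachment and matched by one Apigee Endpoint Attachment; without sharing, every service gets its own pair. The `CloudRunService` type and all names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class CloudRunService:
    name: str
    project: str
    vpc: str
    region: str


def attachment_pairs(
    services: Sequence[CloudRunService], apigee_region: str, shared_ilb: bool = True
) -> int:
    """Count (Endpoint Attachment, Service Attachment) pairs under the model above."""
    for s in services:
        # PSC attachments and the L7 ILB must sit in the Apigee instance's region.
        if s.region != apigee_region:
            raise ValueError(f"{s.name}: expected region {apigee_region}, got {s.region}")
    if not shared_ilb:
        return len(services)
    # One shared L7 ILB (and attachment pair) per project/VPC combination.
    return len({(s.project, s.vpc) for s in services})
```

Under this model, three services spread over two projects need two attachment pairs instead of three, and the saving grows with the number of services per project.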

Solution Deployment

The Terraform modules that can be used to provision the complete architecture as described in the document can be found here.

What Next?

Apigee is the perfect platform to secure and productize APIs based on internal Cloud Run applications, control their costs, and provide analytics on them. Deploying API products on a developer portal simplifies access to these APIs.

For more on Apigee please check out the following:

Documentation:

How to provision an Apigee eval organization in Google Cloud

Northbound networking with Private Service Connect

Southbound networking patterns

Git Repo

Apigee DevRel: if you are looking for reference solutions across Apigee products

Google Cloud community:
