Graph databases are uniquely designed to address query patterns focused on relationships within a given dataset. From a relational database perspective, graph traversals can be represented as a series of table joins or as recursive common table expressions (CTEs). Not only are these types of SQL query patterns computationally expensive and complex to write (especially for highly connected datasets that involve multiple joins), but they can also be difficult to tune for performance.

Amazon Neptune provides a means to address these gaps. Neptune is a fully managed graph database with a purpose-built, high-performance graph database engine that is optimized for storing billions of relationships and running transactional graph queries with millisecond latency.

However, there are situations where we can achieve even better performance through the use of various caching techniques. Caching, or the ability to temporarily store data in memory for fast retrieval when read more than once, is a technique as old as computing itself and used broadly across many database platforms.

In this three-part series, we discuss how to improve your graph query performance using a variety of caching techniques with Neptune. Part one sets the scene by discussing the Neptune query process and how the buffer pool cache works. In part two, we discuss the additional Neptune caches (query results cache and lookup cache) and how they can improve performance for use cases with repeat queries, pagination, and queries that materialize large quantities of literals. In part three, we show how to implement Neptune cluster-wide caching architectures with Amazon ElastiCache, which benefits use cases where the results cache should be at the cluster level, use cases that require dynamic sorting of large result sets, or use cases that want to cache the query results of any graph query language.

Because the buffer pool cache stores the most recently used components, it can help accelerate queries that frequently access the same portions of the graph, which works well for the majority of transactional query patterns that we see being used within Neptune.

However, there are some cases where additional caching techniques can be employed to further improve certain types of graph access patterns. In the following sections, we introduce the query results cache and the lookup cache, which are two caching features that can be enabled on a Neptune instance to improve performance for use cases with repeat queries, pagination, and queries that materialize large quantities of literals.

The query results cache

Note: The query results cache discussed in this next section currently does not support openCypher or SPARQL queries.

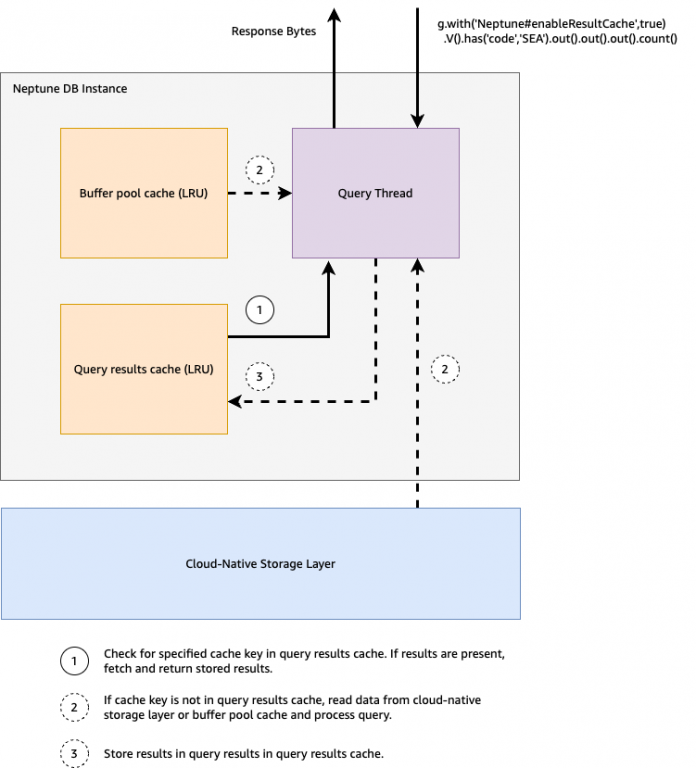

For Gremlin queries that are not necessarily I/O bound (cases where the computation of a query takes more time than the fetching of the data required to compute the query), a buffer pool cache may have little effect on improving query performance if that same query needs to be run again. In these situations, you can use the Neptune query results cache.

The query results cache is an in-memory cache that can store the computed results of a Gremlin query. Should that query need to be run again, the pre-computed results can be fetched from the in-memory cache and immediately returned to the user without needing to recompute the query.

The query results cache also lets you perform pagination over cached result sets. This is particularly useful because TinkerPop doesn’t guarantee the order of results, so paginating without the query results cache would require the full query (including sorting) to be run for each page.

Setting up and managing the query results cache

To enable the query results cache, refer to Enabling the query results cache in Neptune. As mentioned in the instructions, make sure that you set the neptune_result_cache parameter in the instance-level DB parameter group rather than the cluster-level DB parameter group. After setting the parameter, reboot the instance. At the time of writing, neptune_result_cache is a static parameter rather than a dynamic one, so a reboot is required for the change to take effect. To confirm that the query results cache is enabled, run a status call; the ResultCache parameter in the response should read as enabled.
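As a rough sketch of these steps, the following Python builds the arguments that boto3's Neptune modify_db_parameter_group call accepts and checks a status response for the ResultCache flag. The parameter group name, the value "1", and the assumed shape of the status JSON (features, then ResultCache, then status) are illustrative assumptions rather than details taken from this post, so verify them against your own cluster.

```python
import json


def result_cache_parameter_request(parameter_group_name: str) -> dict:
    """Build keyword arguments for boto3's neptune.modify_db_parameter_group.

    neptune_result_cache is static, so the change is applied at the next reboot.
    The value "1" (enabled) is an assumption; confirm it in the Neptune docs.
    """
    return {
        "DBParameterGroupName": parameter_group_name,
        "Parameters": [
            {
                "ParameterName": "neptune_result_cache",
                "ParameterValue": "1",
                "ApplyMethod": "pending-reboot",
            }
        ],
    }


def result_cache_enabled(status_body: str) -> bool:
    """Return True if a status response reports the result cache as enabled.

    Assumed shape: {"features": {"ResultCache": {"status": "enabled"}}}.
    """
    feature = json.loads(status_body).get("features", {}).get("ResultCache", {})
    state = feature.get("status", "") if isinstance(feature, dict) else str(feature)
    return state.lower() == "enabled"


# Hypothetical instance-level parameter group name.
request = result_cache_parameter_request("my-neptune-instance-params")
# With boto3 installed and credentials configured, this could be sent as:
#   boto3.client("neptune").modify_db_parameter_group(**request)

# Hand-written sample body, not captured from a real instance.
sample_status = '{"status": "healthy", "features": {"ResultCache": {"status": "enabled"}}}'
print(result_cache_enabled(sample_status))
```

The helper only inspects text you pass to it, so it can be pointed at the body returned by whichever HTTP client you already use for the status call.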

The query results cache can be enabled on one or more instances within a Neptune cluster. However, each query results cache is specific to the instance that it’s enabled on; it is not a cluster-wide cache. Therefore, when you route queries to Neptune while using the query results cache, you need to be deliberate about which instance each query goes to, so that cached results are fetched from the instance that holds them. If a cluster-wide cache is required, refer to part three of this post series for recommendations on how to implement this.

It’s also important to note that the query results cache is just a cache, so if a query’s results change due to updates in the graph data, these results won’t show up in the cache until the cache or result set is invalidated and the query is rerun.

Working with the query results cache

After you’ve enabled the query results cache, to actually use the cache you must specify query hints within your queries. Query hints are extensions of the graph query languages to specify how Neptune should process your query. In addition to customizing optimization and evaluation strategies, they are also used to specify behavior of the query results cache. The following table is a reference of the available query hints for use with the query results cache.

| Query Hint | What does it do? | When should I use it? |
| --- | --- | --- |
| enableResultCache | Tells Neptune whether it should be looking in the query results cache. Also needed to cache a result. | You want to store or fetch results from the cache. |
| enableResultCacheWithTTL | Same functionality as enableResultCache, except with a defined TTL to be applied to newly cached results. | You want to store results in the cache with a TTL. |
| invalidateResultCacheKey | Clears the cached results corresponding to the query it’s used in. | You want to clear the results for a particular query. For example, data has been updated and using previously cached results would give a stale answer. |
| invalidateResultCache | Clears the entire query results cache. | You want to clear the entire query results cache. |
| numResultsCached with iterate() | Specifies the maximum number of query results to cache. | You want to cache a certain number of results without returning all the results that are being cached. |
| noCacheExceptions | Suppresses cache-related exceptions that would have otherwise been raised. | You want to suppress exceptions that are raised as a result of trying to cache results that are too large to fit in the cache. |

The query results cache uses the query itself as the cache key to locate cached results. The query cache key of a query is evaluated as follows:

Query hints are ignored, except for numResultsCached

A final iterate() step is ignored

The rest of the query is ordered according to its byte-code representation

The following table shows some examples of cache keys for various queries.

| Query | Query Cache Key |
| --- | --- |
| g.with("Neptune#enableResultCache", true).with("Neptune#numResultsCached", 50).V().has('genre','drama').in('likes').iterate() | g.with("Neptune#numResultsCached", 50).V().has('genre','drama').in('likes') |
| g.with('Neptune#enableResultCacheWithTTL', 120).V().has('genre','drama').in('likes') | g.V().has('genre','drama').in('likes') |
| g.with('Neptune#enableResultCache', true).V().has('genre','drama').in('likes').range(0,10) | g.V().has('genre','drama').in('likes').range(0,10). However, if that full cache key does not exist, Neptune will search for the partial cache key of g.V().has('genre','drama').in('likes') |
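To make the cache key rules concrete, here is a minimal Python sketch that approximates them on plain Gremlin strings. It is a string-level toy under stated assumptions, not how Neptune computes keys: it drops every Neptune hint except numResultsCached, drops a trailing iterate(), and derives the partial key by removing a final range() step. The byte-code ordering rule is not modeled, and the function names are hypothetical.

```python
import re

# Matches one .with('Neptune#<hint>', <value>) step, with single or double quotes.
_HINT_STEP = re.compile(r"""\.with\(\s*['"]Neptune#(\w+)['"]\s*,\s*[^)]*\)""")
_TRAILING_RANGE = re.compile(r"\.range\([^)]*\)$")


def approximate_cache_key(query: str) -> str:
    """Approximate the cache key of a Gremlin query string (toy model)."""

    def keep_or_drop(match: re.Match) -> str:
        # Only the numResultsCached hint is part of the key.
        return match.group(0) if match.group(1) == "numResultsCached" else ""

    key = _HINT_STEP.sub(keep_or_drop, query.strip())
    if key.endswith(".iterate()"):
        key = key[: -len(".iterate()")]  # a final iterate() step is ignored
    return key


def partial_cache_key(key: str) -> str:
    """Key that is searched when a range() query has no full-key match."""
    return _TRAILING_RANGE.sub("", key)


examples = [
    """g.with("Neptune#enableResultCache", true).with("Neptune#numResultsCached", 50).V().has('genre','drama').in('likes').iterate()""",
    "g.with('Neptune#enableResultCacheWithTTL', 120).V().has('genre','drama').in('likes')",
    "g.with('Neptune#enableResultCache', true).V().has('genre','drama').in('likes').range(0,10)",
]
for example in examples:
    print(approximate_cache_key(example))
```

Running the three example queries through the function reproduces the keys listed in the table, which is a quick way to sanity-check whether two differently hinted queries would share a cached result.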

When using the query results cache with the cache keys, the workflow will look like the following:

Caching and retrieving results with the query results cache

Let’s check out some of the performance improvements we get with the query results cache, using the air routes dataset from Kelvin Lawrence. You can load the air routes dataset from a Neptune notebook using the %seed command. For the rest of this post, we’ll be working with version 0.87 of the air routes dataset, and we’ll be using the db.r6g.large instance type unless otherwise noted.

First, we run a query against our freshly rebooted instance, so we know we’re running our query against an empty buffer pool cache. The following query calculates the number of four-hop connections (including cycles, if any) from the Santa Fe Regional Airport, which turns out to be over 4 million possible results, and returns this count. It takes about 3 seconds to run to completion:

On a subsequent run of the same query against a warm buffer pool cache, the response time has improved to about 2.3 seconds.

We can cache the query results in the query results cache by using the preceding query hint as follows:

And when the query is run for the first time with the query hint, its results will be cached in the results cache.

We also use the same query to retrieve the query results from the query results cache. The round trip time to fetch from the query results cache is around 34 milliseconds.
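The following sketch shows one way to write the hinted query and measure its round trip from Python. The four-hop traversal is taken from the cache key quoted later in this post; the helper names, the endpoint placeholder, and the use of the gremlinpython driver are assumptions for illustration, and IAM authentication is not handled.

```python
import time


def with_result_cache(query: str) -> str:
    """Insert the enableResultCache hint right after the traversal source g."""
    if not query.startswith("g."):
        raise ValueError("expected a Gremlin query that starts with 'g.'")
    return "g.with('Neptune#enableResultCache', true)." + query[2:]


def timed_submit(endpoint: str, query: str):
    """Submit a Gremlin string and return (results, round-trip seconds).

    Requires the gremlinpython package and network access to the cluster.
    """
    # Imported lazily so the rest of the sketch loads without the driver.
    from gremlin_python.driver import client

    gremlin_client = client.Client(f"wss://{endpoint}:8182/gremlin", "g")
    try:
        started = time.perf_counter()
        results = gremlin_client.submit(query).all().result()
        return results, time.perf_counter() - started
    finally:
        gremlin_client.close()


# Four-hop count from Santa Fe Regional Airport (SAF).
four_hop_count = "g.V().has('code', 'SAF').out().out().out().out().count()"
cached_query = with_result_cache(four_hop_count)
print(cached_query)

# Against a real instance (hypothetical endpoint), the same string is sent twice:
#   timed_submit("my-instance.cluster-xyz.us-east-1.neptune.amazonaws.com", cached_query)
# The first call computes and stores the result; later calls can be served from the cache.
```

Because the hint is ignored when the cache key is built, the first and subsequent submissions must use the same traversal text to land on the same cached entry.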

Note: The query results cache is not used if you route queries to the /explain or /profile endpoint. For that reason, the latency reported in the preceding example includes round trip time.

However, by enabling the slow query logs, which are available starting from engine version 1.2.1.0, we can inspect the resultCacheStats parameter to determine whether we had a result cache hit or miss. To log result cache metrics, set the slow query logs to either info or debug mode. When running the preceding query for the very first time, the log values look like this:

This indicates a result cache miss, followed by insertion of the query results after the query ran. After rerunning the same query, we have the following in our slow query log:

This indicates that the query was served from the cached result.

In addition to being completely cleared upon instance reboot, restart, and stop/start of the cluster, results in the cache are removed according to a least recently used (LRU) policy, so when the cache reaches capacity and needs to make room for new cache results, the least recently used results are removed first. The query results cache’s capacity is equivalent to approximately 10% of the memory available to the query execution threads (approximately one-third of the instance’s memory, as approximately two-thirds of the memory is allocated to the buffer pool cache).
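As a back-of-the-envelope illustration of that sizing rule, the sketch below applies the approximate fractions from the paragraph above to the two instance types used in this post. The memory sizes (16 GiB for db.r6g.large, 128 GiB for db.r6g.4xlarge) are the published instance specifications and are an assumption added here; the results are estimates, not measured capacities.

```python
def estimated_result_cache_gib(instance_memory_gib: float) -> float:
    """Estimate result cache capacity from total instance memory.

    Roughly one-third of memory is available to the query execution threads
    (the other two-thirds go to the buffer pool cache), and the result cache
    is roughly 10% of that third.
    """
    query_thread_memory_gib = instance_memory_gib / 3
    return query_thread_memory_gib * 0.10


# Instance memory sizes are assumptions based on published instance specs.
instance_memory = {"db.r6g.large": 16, "db.r6g.4xlarge": 128}
for name, memory_gib in instance_memory.items():
    print(f"{name}: ~{estimated_result_cache_gib(memory_gib):.2f} GiB")
```

Under these assumptions the estimate is about half a GiB on the smaller instance and a little over 4 GiB on the larger one, which is why large result sets may need a bigger instance to fit in the cache.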

You can also manually control the lifetime of a cached result through the following methods:

Set a Time to Live (TTL) for a specific query’s results using the enableResultCacheWithTTL query hint.

For example, the following query caches the results of the query for 60 seconds, if the query hasn’t been previously cached:

Note that running the same exact query with a TTL hint twice does not reset the TTL, unless the second run of the query is run after the expiration of the original TTL. For example, if you run the above query for the first time at t=0, and run it again at t=30 seconds, the results cached from the query run at t=0 would be returned. Once the expiration is met at t=60 seconds, running the same query again would cache new results with a TTL of 60.

Invalidate all the results in the cache using the invalidateResultCache query hint.

For example, the following query invalidates the entire result cache:

You can also invalidate a specific result in the cache using the invalidateResultCacheKey query hint. For example, the following query:

Invalidates the result in the cache that has the following cache key: g.V().has('code', 'SAF').out().out().out().out().count()
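The three lifetime controls above can be sketched as Gremlin strings built in Python. The hint names come from the table earlier in this post; passing true to the two invalidation hints mirrors the enableResultCache examples and is an assumption, as is attaching the invalidateResultCache hint to the same traversal. The with_hint helper is hypothetical.

```python
def with_hint(query: str, hint: str, value: str) -> str:
    """Prefix a Gremlin query string with a single Neptune query hint."""
    if not query.startswith("g."):
        raise ValueError("expected a Gremlin query that starts with 'g.'")
    return f"g.with('Neptune#{hint}', {value})." + query[2:]


four_hop_count = "g.V().has('code', 'SAF').out().out().out().out().count()"

# Cache the result for 60 seconds if it is not already cached.
cache_for_60s = with_hint(four_hop_count, "enableResultCacheWithTTL", "60")

# Clear only the entry whose cache key matches this traversal.
invalidate_one = with_hint(four_hop_count, "invalidateResultCacheKey", "true")

# Clear every entry in the instance's query results cache.
invalidate_all = with_hint(four_hop_count, "invalidateResultCache", "true")

for query in (cache_for_60s, invalidate_one, invalidate_all):
    print(query)
```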

The query results cache is only a cache, so if the graph changes in a way that would produce different results for previously cached queries, you need to invalidate those cached results and cache them again.
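The TTL and staleness behavior described in this section can be illustrated with a small in-memory model. This is a toy written for this explanation, not Neptune's implementation: the class name, the injectable clock, and the stand-in results v1 to v3 are all hypothetical.

```python
class ToyResultCache:
    """Toy model of TTL expiry and explicit invalidation (not Neptune's code)."""

    def __init__(self, clock):
        self._clock = clock   # callable returning the current time in seconds
        self._entries = {}    # cache key -> (result, expiry time or None)

    def run(self, key, compute, ttl=None):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None:
            result, expires_at = entry
            if expires_at is None or now < expires_at:
                return result, "hit"   # a hit does not extend the TTL
        result = compute()             # miss or expired: recompute and cache
        self._entries[key] = (result, None if ttl is None else now + ttl)
        return result, "miss"

    def invalidate(self, key=None):
        if key is None:
            self._entries.clear()      # like invalidateResultCache
        else:
            self._entries.pop(key, None)  # like invalidateResultCacheKey


clock = {"t": 0}
answers = iter(["v1", "v2", "v3"])  # stand-ins for results as the graph changes
cache = ToyResultCache(lambda: clock["t"])
key = "g.V().has('code', 'SAF').out().out().out().out().count()"

first = cache.run(key, lambda: next(answers), ttl=60)   # t=0: computed and cached
clock["t"] = 30
second = cache.run(key, lambda: next(answers), ttl=60)  # t=30: cached result, TTL not reset
clock["t"] = 60
third = cache.run(key, lambda: next(answers), ttl=60)   # t=60: expired, recomputed
cache.invalidate(key)
fourth = cache.run(key, lambda: next(answers), ttl=60)  # invalidated, recomputed
print(first, second, third, fourth)
```

The second call returns the result computed at t=0 even though a newer answer is available, which is the stale-read situation that invalidation is meant to address.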

Paginating results with the query results cache

One of the key use cases of the query results cache is paginating cached results. Because TinkerPop doesn’t guarantee the ordering of results, paginating without caching requires you to run an ordered query once for each page you return. But with the query results cache, we can instead run the query one time, then pull each page of the result directly from the cache.

For example, let’s find all the unique three-hop routes we can take from Yan’an Airport:

The preceding query returns 326,037 results. On a warm buffer pool cache, it takes just under 2 seconds to run.

But what if we want to paginate the results of the query into pages of 3,000 results each? We need to do the following to get the first page of results:

Then we adjust the values of the range and rerun the query to collect each subsequent page.

Running the preceding query now takes about 7 seconds on a warm buffer pool cache. The order().by() clause adds latency to our query but is necessary when paginating without a cache. This is because the order of results isn’t guaranteed, so if we try to collect different ranges of the query results without a known order, we could be collecting duplicates.
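The page-by-page approach without a cache can be sketched as follows. The page arithmetic uses the 326,037 results and the page size of 3,000 mentioned above; the base traversal and the order().by('code') step are placeholders chosen for illustration and are not the Yan'an query used in this post.

```python
def page_ranges(total_results: int, page_size: int):
    """Yield (low, high) bounds for Gremlin range(low, high); high is exclusive."""
    for low in range(0, total_results, page_size):
        yield low, min(low + page_size, total_results)


def ordered_page_query(base_query: str, order_step: str, low: int, high: int) -> str:
    """Build one page query; the ordering step is repeated for every page."""
    return f"{base_query}{order_step}.range({low}, {high})"


pages = list(page_ranges(326_037, 3_000))
print(len(pages), pages[0], pages[-1])

# Placeholder traversal and sort key, for illustration only.
base = "g.V().hasLabel('airport')"
first_page = ordered_page_query(base, ".order().by('code')", *pages[0])
print(first_page)
```

Each generated query repeats the full traversal and sort, so the ordering cost is paid once per page rather than once overall.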

For the next few examples, we use the db.r6g.4xlarge instance type, to ensure we have enough room in our query results cache to cache all the results. As mentioned earlier, the query results cache’s capacity is equivalent to approximately 10% of the memory available to the query execution threads (approximately one-third of the instance’s memory, as approximately two-thirds of the memory is allocated to the buffer pool cache).

Instead of using the preceding method to paginate, we could use the query results cache to cache the entire result set:

When retrieving pages from the query results cache, we keep the original query and simply add a range step at the end. This is because each part of the original query (including the numResultsCached query hint) is used in the query cache key. So we can pull pages with the desired range as follows:

Remember that the query results cache is not used if you route queries to the /explain or /profile endpoint, so the latency reported for the preceding example includes round trip time.

Pulling back the first 3,000 results from our query results cache results in a total round trip time of 163 milliseconds. We can subsequently adjust the ranges to pull back the next page of 3,000 results.
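A minimal sketch of cache-backed pagination, assuming the pattern described above: one priming query that caches the result set with numResultsCached and iterate(), then page queries that keep the same hints and traversal and only append a range step. The traversal text and helper names are placeholders, not the query used in this post.

```python
def _hinted(traversal: str, num_results: int) -> str:
    """Prefix a traversal (written without the leading 'g.') with both cache hints."""
    return (
        "g.with('Neptune#enableResultCache', true)"
        f".with('Neptune#numResultsCached', {num_results}).{traversal}"
    )


def priming_query(traversal: str, num_results: int) -> str:
    """Cache up to num_results results without returning them to the client."""
    return _hinted(traversal, num_results) + ".iterate()"


def cached_page_query(traversal: str, num_results: int, low: int, high: int) -> str:
    """Fetch one page; hints and traversal must match the priming query."""
    return _hinted(traversal, num_results) + f".range({low}, {high})"


# Placeholder traversal, for illustration only.
traversal = "V().hasLabel('airport')"
print(priming_query(traversal, 326037))
print(cached_page_query(traversal, 326037, 0, 3000))
print(cached_page_query(traversal, 326037, 3000, 6000))
```

Keeping the numResultsCached value identical across the priming and page queries matters because that hint is part of the cache key.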

Pagination is especially useful for queries that are computationally heavy, such as queries that do sorting, ordering, grouping, or complex path finding. With those types of queries, you can get all the results at once, store them in the results cache, and whenever you need a subset of the results, Neptune can look in the cache and grab a range of the result, instead of having to recompute the query each time.

The lookup cache

In this section, we discuss the features and benefits of the lookup cache in Neptune.

Property materialization

Before discussing how the lookup cache works and when it’s useful, let’s discuss the process of property materialization.

As mentioned in part one of this series, Neptune persists data in a shared storage volume comprised of a set of indexes and a separate property store. As queries are run, the indexes allow Neptune to optimally traverse through the graph to compute a query’s result. During a traversal, however, the query may require returning a set of property values from the property store. This process of creating the result set with property values is called materialization. Property materialization occurs whenever the query needs to refer to the string value of a property, such as when a query needs to return a list of properties that include string data types, or when a query has filtering conditions on string properties (for example, when using text search predicates).

An example of property materialization can be seen using the following query:

This query traverses the graph to find the airports connected to the Lafayette Regional Airport via outgoing routes, then returns the properties of each airport found.

We run the query on a freshly rebooted instance to see what performance is like on a cold buffer pool cache. We also run the query through the Neptune Gremlin Profile API, which gives additional information about the query’s performance and query plan. The following is a snippet of the profile output:

The profile output says the number of terms materialized during the query run is 38. This is because adding valueMap() to the end of the query means we are now fetching related properties for the associated vertices referenced at the end of our traversal. We are also finding the Lafayette Regional Airport via a string match (has(‘code’, ‘LFT’)), which would also require materialization. Because the query was run against a cold buffer pool cache, these properties are fetched from the separate dictionary index, or property store, hosted on the underlying shared storage volume.
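For readers who want to track this number programmatically, the sketch below builds a request body for the Gremlin profile endpoint and extracts the terms-materialized count from profile text. The query is reconstructed from the description above (has('code', 'LFT'), outgoing edges, valueMap()), and the sample profile line is hand-written for illustration; the exact layout of real profile output may differ.

```python
import json
import re


def profile_request_body(query: str) -> str:
    """JSON body for a POST to the instance's /gremlin/profile endpoint."""
    return json.dumps({"gremlin": query})


def terms_materialized(profile_text: str):
    """Return the '# of terms materialized' count, or None if it is absent."""
    match = re.search(r"# of terms materialized\s*[:=]?\s*(\d+)", profile_text)
    return int(match.group(1)) if match else None


# Reconstructed from the prose; the exact query text is an assumption.
lft_query = "g.V().has('code', 'LFT').out().valueMap()"
print(profile_request_body(lft_query))

# Hand-written sample line, not real profile output.
sample_profile = "Results\n=======\n# of terms materialized: 38\n"
print(terms_materialized(sample_profile))
```

Comparing this count across cold and warm runs, alongside overall latency, is one way to judge how much of a query's time goes to materialization.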

Running the same query again, we get slightly different results in our profile output:

Caching property values with the lookup cache

From the preceding example, we saw that the buffer pool cache can hold materialized property values so that subsequent queries don’t need to fetch from the property store. But as the size of a query’s result set and the number of properties materialized increase, the delay for materialization can become a large portion of the overall query runtime. Having all of the required property values stored in the buffer pool cache at the time of the query can help, but may not always be possible.

Therefore, another way that we can improve performance of these types of queries is to use the lookup cache. The lookup cache caches string property values for the purpose of faster property materialization. This feature is enabled through support of Amazon Elastic Compute Cloud (Amazon EC2) instances with the d-type attribute. An instance type with the d-type attribute has instance store volumes that are physically connected to the host server and provide block-level storage that is coupled to the lifetime of that instance. The lookup cache utilizes this instance store volume to cache the string property values and RDF literals for rapid access, benefitting queries with frequent, repetitive lookups of those values. Today, Neptune supports the r5d and x2iedn instance types for use with the lookup cache.

The following diagram illustrates the high-level query workflow when the lookup cache is enabled.

As queries are run on the Neptune instance, property values are then stored within the instance store’s local storage volume. In this manner, any subsequent queries that are issued against the instance can materialize the associated property values from local storage without the penalty of needing to go to the property store hosted on the underlying shared storage volume.

The lookup cache also has the benefit of further optimizing the usage of the buffer pool cache. When enabled, property values are stored in the lookup cache and not in the buffer pool cache. This allows for more capacity in the buffer pool cache for caching the indexes of the graph.

To enable the lookup cache, launch an instance with a d-type attribute (for example, r5d or x2iedn) within a Neptune cluster and set the neptune_lookup_cache parameter in the DB cluster parameter group to 1. Like the query results cache, the use of the lookup cache is instance-dependent. Queries will need to be directed to the specific instance where the lookup cache is hosted (the instance with the d-type attribute) in order to take advantage of the cached properties.

Considerations for the lookup cache

The cache design strategy implemented depends on which instance type the lookup cache is enabled on. A lookup cache can be deployed on the writer instance in the cluster or the read replicas. If deployed on the writer instance, the cache will write property values into the cache volume as new write requests come into the cluster (write-through design). If deployed on a read replica, the property values will only be cached when they are first read via a read query destined for that specific read replica (lazy loading design).

Therefore, deploying the lookup cache on the writer instance can help with quickly filling the cache with associated property values as they are written to the cluster. However, any associated read queries that need to take advantage of the lookup cache in this scenario also need to be routed to the writer instance. Using this configuration comes with another important trade-off in terms of write throughput. If the lookup cache is enabled on the writer instance, some additional latency will occur as data needs to be written to the cache. This is also true if using the Neptune Bulk Load API to ingest data into a cluster, because load throughput will be impacted if the lookup cache is enabled on the writer instance.
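The difference between the two designs can be shown with a small model. This is a conceptual toy, not Neptune's implementation: the class, the shared dictionary standing in for the property store, and the term IDs are hypothetical, and what happens to writer-side read misses is deliberately left unmodeled.

```python
class ToyLookupCache:
    """Toy model of write-through (writer) versus lazy loading (read replica)."""

    def __init__(self, role: str, property_store: dict):
        if role not in ("writer", "replica"):
            raise ValueError("role must be 'writer' or 'replica'")
        self.role = role
        self.property_store = property_store  # stands in for shared storage
        self.local_cache = {}                 # stands in for the instance store volume

    def write(self, term_id: int, value: str) -> None:
        if self.role != "writer":
            raise RuntimeError("only the writer instance accepts writes")
        self.property_store[term_id] = value
        self.local_cache[term_id] = value     # write-through: cached at write time

    def read(self, term_id: int):
        if term_id in self.local_cache:
            return self.local_cache[term_id], "lookup cache"
        value = self.property_store[term_id]
        if self.role == "replica":
            self.local_cache[term_id] = value  # lazy loading: cached on first read
        return value, "property store"


shared_store = {}
writer = ToyLookupCache("writer", shared_store)
replica = ToyLookupCache("replica", shared_store)

writer.write(1, "Lafayette Regional Airport")
print(writer.read(1))    # already cached on the writer
print(replica.read(1))   # first read on the replica goes to the property store
print(replica.read(1))   # second read is served from the replica's lookup cache
```

The model also makes the routing trade-off visible: the writer's cache is warm immediately, but only reads sent to the writer benefit from it.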

Another consideration is that the storage volume used by the lookup cache is an Amazon EC2 instance store. This makes this volume ephemeral and the data within this volume will only persist for the life of the instance. In the event of an instance replacement due to an instance failure, scaling operation, and so on, the data hosted in the lookup cache inside of this volume will be deleted and the cache resets to empty. This is important to note especially if architecting scaling procedures to scale up and down a given instance with an attached lookup cache.

Lastly, the lookup cache doesn’t presently expose a means to reset the cache. If you need to clear the cache, the easiest means to do this is via an instance replacement. Instance replacement can occur by deleting and creating a new instance, or via scaling the instance up or down.

Although the lookup cache can accelerate queries with frequent, repetitive lookups of dictionary values, it is best suited for situations where all of the following are true:

You have been observing increased latency in read queries.

The increased latency in read queries correlates with a drop in the Amazon CloudWatch metric BufferCacheHitRatio.

The read queries spend a lot of time in materializing return values prior to returning results. You can check the count of term materialization via the “# of terms materialized” value in Neptune Gremlin profiles, or the “TermResolution” operator in openCypher explain plans and SPARQL explain plans.

Summary

The Neptune instance-specific query results cache and lookup cache features can have a significant impact in decreasing graph query latency for certain query types, and can be used in conjunction with Neptune’s buffer pool cache.

The following table summarizes the features of native caching within Neptune.

|  | Buffer Pool Cache | Query Results Cache | Lookup Cache |
| --- | --- | --- | --- |
| Is it always on? | Yes | No; enable via instance parameter group | No; use instances with a d-type attribute and enable via cluster parameter group |
| Is it cleared on reboot? | Yes | Yes | No |
| Is it cleared if I stop then start my cluster? | Yes | Yes | No |
| How big is it? | ~67% of the instance’s memory | ~10% of the memory available to the query execution threads | ~25% of the size of the attached instance store volume |
| Does it work with all query languages? | Yes | No; Gremlin-only | Yes |
| What minimum engine version do I need to use it? | No minimum needed | 1.0.5.1+ | 1.0.4.2+ |
| When should I use it? | N/A, it’s always on | Repeat queries, pagination use cases | Read queries with frequent, repetitive lookups of string property values/RDF literals |
| How do I clear it? | Instance reboot, stop/start of the Neptune cluster, instance replacement | Instance reboot, stop/start of the Neptune cluster, instance replacement, query hints | Instance replacement |
| What is the cache eviction strategy used? | LRU | LRU | None |
| What instance types does it work with? | All | All except t2.medium and t3.medium | Instances with a d-type attribute only; does not work with serverless |

Although the native caching features provided by Neptune cover a wide variety of use cases, there may still be situations where you want a global, or cluster-wide, cache for all results or all properties.

In part three of this series, we discuss how this can be achieved by using Neptune in conjunction with other database services to build a cluster-wide caching architecture.

About the Authors

Taylor Riggan is a Sr. Graph Architect focused on Amazon Neptune. He works with customers of all sizes to help them learn and use purpose-built, NoSQL databases. You can reach out to Taylor via various social media outlets such as Twitter and LinkedIn.

Abhishek Mishra is a Sr. Neptune Specialist Solutions Architect at AWS. He helps AWS customers build innovative solutions using graph databases. In his spare time, he loves making the earth a greener place.

Kelvin Lawrence is a Sr. Principal Graph Architect focused on Amazon Neptune and many other related services. He has been working with graph databases for many years, is the author of the book Practical Gremlin, and is a committer on the Apache TinkerPop project.

Melissa Kwok is a Neptune Specialist Solutions Architect at AWS, where she helps customers of all sizes and verticals build cloud solutions with graph databases according to best practices. When she’s not at her desk, you can find her in the kitchen experimenting with new recipes or reading a cookbook.
