Data preparation is a crucial step in any machine learning (ML) workflow, yet it often involves tedious and time-consuming tasks. Amazon SageMaker Canvas now supports comprehensive data preparation capabilities powered by Amazon SageMaker Data Wrangler. With this integration, SageMaker Canvas provides customers with an end-to-end no-code workspace to prepare data and to build and use ML and foundation models, accelerating the time from data to business insights. You can now easily discover and aggregate data from over 50 data sources, and explore and prepare data using over 300 built-in analyses and transformations in the SageMaker Canvas visual interface. You’ll also see faster performance for transforms and analyses, and a natural language interface to explore and transform data for ML.

In this post, we walk you through the process to prepare data for end-to-end model building in SageMaker Canvas.

Solution overview

For our use case, we are assuming the role of a data professional at a financial services company. We use two sample datasets to build an ML model that predicts whether a loan will be fully repaid by the borrower, which is crucial for managing credit risk. The no-code environment of SageMaker Canvas allows us to quickly prepare the data, engineer features, train an ML model, and deploy the model in an end-to-end workflow, without the need for coding.

Prerequisites

To follow along with this walkthrough, ensure you have implemented the following prerequisites:

Launch Amazon SageMaker Canvas. If you are a SageMaker Canvas user already, make sure you log out and log back in to be able to use this new feature.

To import data from Snowflake, follow steps from Set up OAuth for Snowflake.

Prepare interactive data

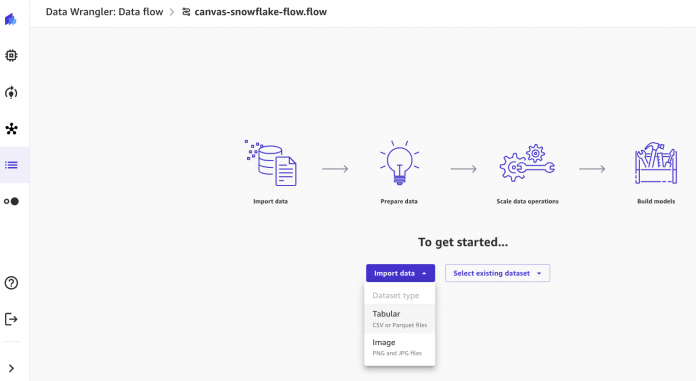

With the setup complete, we can now create a data flow to enable interactive data preparation. The data flow provides built-in transformations and real-time visualizations to wrangle the data. Complete the following steps:

Create a new data flow using one of the following methods:

Choose Data Wrangler, Data flows, then choose Create.

Select the SageMaker Canvas dataset and choose Create a data flow.

Choose Import data and select Tabular from the drop-down list.

You can import data directly through over 50 data connectors such as Amazon Simple Storage Service (Amazon S3), Amazon Athena, Amazon Redshift, Snowflake, and Salesforce. In this walkthrough, we will cover importing your data directly from Snowflake.

Alternatively, you can upload the same datasets from your local machine. You can download the datasets loans-part-1.csv and loans-part-2.csv.

From the Import data page, select Snowflake from the list and choose Add connection.

Enter a name for the connection and choose the OAuth option from the authentication method drop-down list. Enter your Okta account ID and choose Add connection.

You will be redirected to the Okta login screen to enter Okta credentials to authenticate. On successful authentication, you will be redirected to the data flow page.

Browse to locate the loan datasets in the Snowflake database.

Select the two loans datasets by dragging and dropping them from the left side of the screen to the right. The two datasets will connect, and a join symbol with a red exclamation mark will appear. Choose the symbol, then select the id key for both datasets. Leave the join type as Inner.

Choose Save & close.

Choose Create dataset. Give a name to the dataset.

Navigate to the data flow to view the imported dataset.
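For readers who want to see what the inner join on the id key amounts to, the following is a minimal pandas sketch. It is not what SageMaker Canvas runs internally; the two small DataFrames are hypothetical stand-ins for loans-part-1.csv and loans-part-2.csv, and every column other than id is an assumption made for illustration.

```python
import pandas as pd

# Hypothetical stand-ins for the two loan files; only the `id` key
# comes from the walkthrough, the other columns are assumed.
loans_part_1 = pd.DataFrame({
    "id": [1, 2, 3, 4],
    "loan_amount": [5000, 12000, 7500, 3000],
})
loans_part_2 = pd.DataFrame({
    "id": [2, 3, 4, 5],
    "loan_status": ["fully paid", "charged off", "current", "fully paid"],
})

# Inner join: keep only the ids that appear in both tables.
loans = loans_part_1.merge(loans_part_2, on="id", how="inner")
print(loans)
```

Rows whose id exists in only one of the two tables (1 and 5 in this sample) are dropped, which is the behavior to expect when the join type is left as Inner.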

To quickly explore the loan data, choose Get data insights and select the loan_status target column and Classification problem type.

The generated Data Quality and Insight report provides key statistics, visualizations, and feature importance analyses.

Review the warnings on data quality issues and imbalanced classes to understand and improve the dataset.

For the dataset in this use case, you should expect a “Very low quick-model score” high priority warning and very low model efficacy on the minority classes (charged off and current), indicating the need to clean up and balance the data. Refer to the SageMaker Canvas documentation to learn more about the data insights report.
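The imbalance that the report warns about can be illustrated with a quick count of the target column. The sketch below uses only the Python standard library; the label list is a hypothetical sample, and the real proportions in the loan data will differ.

```python
from collections import Counter

# Hypothetical loan_status values; the real dataset has different proportions.
loan_status = ["fully paid"] * 8 + ["charged off"] * 1 + ["current"] * 1


def class_shares(labels):
    """Return each class's share of the rows, most frequent class first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.most_common()}


shares = class_shares(loan_status)
for label, share in shares.items():
    print(f"{label}: {share:.0%}")
```

When one class dominates like this, a model can score well overall while performing poorly on the minority classes, which is why the report flags them.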

With over 300 built-in transformations powered by SageMaker Data Wrangler, SageMaker Canvas empowers you to rapidly wrangle the loan data. You can choose Add step, and browse or search for the right transformations. For this dataset, use Drop missing and Handle outliers to clean the data, then apply One-hot encode and Vectorize text to create features for ML.
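To make these transforms concrete, here is a rough pandas sketch of three of them: dropping rows with missing values, handling outliers, and one-hot encoding. The column names and values are hypothetical, and clipping to 1.5 times the interquartile range is only one common outlier convention, assumed here for illustration; the built-in Handle outliers transform offers its own options and may behave differently.

```python
import pandas as pd

# Hypothetical sample; column names and values are assumptions.
df = pd.DataFrame({
    "annual_income": [40000.0, 52000.0, None, 61000.0, 48000.0, 900000.0],
    "home_ownership": ["rent", "own", "rent", "mortgage", "own", "rent"],
})

# Drop missing: remove rows that contain any missing value.
cleaned = df.dropna()

# Handle outliers (assumed convention): clip values to
# [Q1 - 1.5 * IQR, Q3 + 1.5 * IQR].
q1 = cleaned["annual_income"].quantile(0.25)
q3 = cleaned["annual_income"].quantile(0.75)
iqr = q3 - q1
cleaned = cleaned.assign(
    annual_income=cleaned["annual_income"].clip(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
)

# One-hot encode: expand the categorical column into indicator columns.
features = pd.get_dummies(cleaned, columns=["home_ownership"])
print(features)
```

In this sample the row with the missing income is removed, the 900000 income is clipped down to the upper fence, and home_ownership becomes three indicator columns.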

Chat for data prep is a new natural language capability that enables intuitive data analysis by describing requests in plain English. For example, you can get statistics and feature correlation analysis on the loan data using natural phrases. SageMaker Canvas understands and runs the actions through conversational interactions, taking data preparation to the next level.

We can use Chat for data prep and a built-in transform to balance the loan data.

First, enter the following instructions: replace “charged off” and “current” in loan_status with “default”

Chat for data prep generates code to merge two minority classes into one default class.

Choose the built-in SMOTE transform function to generate synthetic data for the default class.

Now you have a balanced target column.
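The two balancing steps above can be sketched in NumPy as follows. This is an illustration of the idea only, not the code that Chat for data prep generates or the built-in SMOTE transform itself. The feature values are hypothetical, and `merge_labels` and `smote_like_oversample` are helper names invented for this example. The oversampling function follows the core SMOTE idea of interpolating between a minority row and one of its nearest minority neighbors.

```python
import numpy as np


def merge_labels(labels, minority=("charged off", "current"), merged="default"):
    """Replace each minority label with a single merged label."""
    return np.array([merged if label in minority else label for label in labels])


def smote_like_oversample(X, n_new, k=2, seed=0):
    """Create n_new synthetic rows, each on the line segment between a random
    minority row and one of its k nearest minority neighbors.
    Requires k < len(X)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    # Pairwise Euclidean distances between minority rows.
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a row is never its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]
    base = rng.integers(0, len(X), size=n_new)
    picked = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))  # interpolation factor in [0, 1)
    return X[base] + gap * (X[picked] - X[base])


# Hypothetical numeric features and target labels.
X = np.array([[5.0, 0.10], [6.0, 0.12], [7.0, 0.11], [5.5, 0.09],
              [6.5, 0.13], [7.5, 0.10], [9.0, 0.25], [9.5, 0.27], [8.8, 0.30]])
y = merge_labels(["fully paid"] * 6 + ["charged off", "current", "charged off"])

# Generate enough synthetic "default" rows to match the majority class.
minority_rows = X[y == "default"]
n_needed = int((y == "fully paid").sum() - (y == "default").sum())
synthetic = smote_like_oversample(minority_rows, n_needed)

X_balanced = np.vstack([X, synthetic])
y_balanced = np.concatenate([y, np.full(n_needed, "default")])
print(dict(zip(*np.unique(y_balanced, return_counts=True))))
```

Because each synthetic row is an interpolation between two existing minority rows, the new points stay within the region the minority class already occupies rather than being exact duplicates.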

After cleaning and processing the loan data, regenerate the Data Quality and Insight report to review improvements.

The high priority warning has disappeared, indicating improved data quality. You can add further transformations as needed to enhance data quality for model training.

Scale and automate data processing

To automate data preparation, you can run or schedule the entire workflow as a distributed Spark processing job to process the whole dataset or any fresh datasets at scale.

Within the data flow, add an Amazon S3 destination node.

Launch a SageMaker Processing job by choosing Create job.

Configure the processing job and choose Create, enabling the flow to run on hundreds of GBs of data without sampling.

The data flows can be incorporated into end-to-end MLOps pipelines to automate the ML lifecycle. Data flows can feed into SageMaker Studio notebooks as the data processing step in a SageMaker pipeline, or for deploying a SageMaker inference pipeline. This enables automating the flow from data preparation to SageMaker training and hosting.

Build and deploy the model in SageMaker Canvas

After data preparation, we can seamlessly export the final dataset to SageMaker Canvas to build, train, and deploy a loan payment prediction model.

Choose Create model in the data flow’s last node or in the nodes pane.

This exports the dataset and launches the guided model creation workflow.

Name the exported dataset and choose Export.

Choose Create model from the notification.

Name the model, select Predictive analysis, and choose Create.

This will redirect you to the model building page.

Continue with the SageMaker Canvas model building experience by choosing the target column and model type, then choose Quick build or Standard build.

To learn more about the model building experience, refer to Build a model.

When training is complete, you can use the model to predict new data or deploy it. Refer to Deploy ML models built in Amazon SageMaker Canvas to Amazon SageMaker real-time endpoints to learn more about deploying a model from SageMaker Canvas.
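Once a model is deployed to a real-time endpoint, an application could call it with the AWS SDK for Python. The sketch below rests on several assumptions: the endpoint name passed to `predict` is a placeholder, the endpoint is assumed to accept text/csv input, and the feature values and their order are hypothetical and would need to match the columns the model was trained on. `rows_to_csv` and `predict` are helper names invented for this example.

```python
import csv
import io


def rows_to_csv(rows):
    """Serialize feature rows to a header-less CSV string."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()


def predict(endpoint_name, rows):
    """Send rows to a real-time endpoint and return the raw response body.
    Assumes the endpoint accepts text/csv input."""
    import boto3  # imported lazily so rows_to_csv works without AWS access

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=rows_to_csv(rows).encode("utf-8"),
    )
    return response["Body"].read().decode("utf-8")


# Hypothetical feature rows; the real column order must match the model.
payload = rows_to_csv([[5000, 0.12, "rent"], [12000, 0.08, "own"]])
print(payload)
```

Calling `predict` requires AWS credentials and a deployed endpoint, so only the payload construction runs here.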

Conclusion

In this post, we demonstrated the end-to-end capabilities of SageMaker Canvas, powered by SageMaker Data Wrangler, by assuming the role of a financial data professional preparing data to predict loan repayment. Interactive data preparation let us quickly clean, transform, and analyze the loan data to engineer informative features. By removing coding complexities, SageMaker Canvas allowed us to rapidly iterate to create a high-quality training dataset. This accelerated workflow leads directly into building, training, and deploying a performant ML model for business impact. With its comprehensive data preparation and unified experience from data to insights, SageMaker Canvas empowers you to improve your ML outcomes. For more information on how to accelerate your journey from data to business insights, see the SageMaker Canvas immersion day and the AWS user guide.

About the authors

Dr. Changsha Ma is an AI/ML Specialist at AWS. She is a technologist with a PhD in Computer Science, a master’s degree in Education Psychology, and years of experience in data science and independent consulting in AI/ML. She is passionate about researching methodological approaches for machine and human intelligence. Outside of work, she loves hiking, cooking, hunting food, and spending time with friends and families.

Ajjay Govindaram is a Senior Solutions Architect at AWS. He works with strategic customers who are using AI/ML to solve complex business problems. His experience lies in providing technical direction as well as design assistance for modest to large-scale AI/ML application deployments. His knowledge ranges from application architecture to big data, analytics, and machine learning. He enjoys listening to music while resting, experiencing the outdoors, and spending time with his loved ones.

Huong Nguyen is a Sr. Product Manager at AWS. She is leading the ML data preparation for SageMaker Canvas and SageMaker Data Wrangler, with 15 years of experience building customer-centric and data-driven products.
