Amazon DynamoDB supports transactions that provide atomicity, consistency, isolation, and durability (ACID), helping you maintain data consistency in your workloads. A database transaction is a sequence of operations performed on one or more tables. The sequence represents a unit of work that is either committed to the tables or rolled back as a whole. With the DynamoDB API, you can enforce the ACID properties across one or more tables within a single AWS account and AWS Region.

Developers are familiar with the ACID transaction concept, but they sometimes struggle when working with the DynamoDB transaction APIs because transactions in SQL are presented and committed in a different format.

In this post, we introduce a framework that uses a layered approach to standardize and present DynamoDB transactions in a format that is familiar to developers. We use ASP.NET Core in C# to show how to use the framework, but you can implement this design pattern on any other platform or in a different programming language.

The purpose of this framework is to:

Present the DynamoDB API in a format familiar to developers

Decouple the transaction process into a dedicated, single-responsibility framework that multiple client applications can use

Offer interface segregation so the client of the framework doesn’t need to worry about the format of the DynamoDB transaction APIs

Framework design overview

The transaction framework presented in this post wraps the DynamoDB API into a common transaction scope class, which maintains the transaction items that are to be committed or rolled back. The framework begins with the TransactScope class, which is designed as an interface for the transaction client application. You can use it to simplify the transaction process used by the application client, encapsulating DynamoDB API calls. When needed, the application user calls the methods available to begin, commit, or roll back transactions.
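As a rough illustration of the scope class described above, the following minimal sketch shows one way such a class could buffer actions and expose begin, commit, and roll back methods. It is not the code from the sample repository: the class name TransactScopeSketch and the members Begin, AddAction, CommitAsync, and Rollback are assumptions made for this example. It assumes the AWSSDK.DynamoDBv2 NuGet package.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

// Hypothetical sketch of a transaction scope. Member names are assumptions,
// not the API of the sample repository.
public sealed class TransactScopeSketch
{
    private readonly IAmazonDynamoDB _client;
    private readonly List<TransactWriteItem> _actions = new List<TransactWriteItem>();

    public TransactScopeSketch(IAmazonDynamoDB client)
    {
        _client = client ?? throw new ArgumentNullException(nameof(client));
    }

    public bool IsActive { get; private set; }
    public int Count => _actions.Count;

    // Start a new transaction with an empty action container.
    public void Begin()
    {
        _actions.Clear();
        IsActive = true;
    }

    // Buffer one Put, Update, or Delete action. Nothing is sent to DynamoDB yet.
    public void AddAction(TransactWriteItem action)
    {
        if (!IsActive) throw new InvalidOperationException("Call Begin before adding actions.");
        _actions.Add(action ?? throw new ArgumentNullException(nameof(action)));
    }

    // Send all buffered actions in a single TransactWriteItems call.
    public async Task CommitAsync(CancellationToken cancellationToken = default)
    {
        if (!IsActive) throw new InvalidOperationException("No active transaction.");
        var request = new TransactWriteItemsRequest
        {
            TransactItems = new List<TransactWriteItem>(_actions)
        };
        await _client.TransactWriteItemsAsync(request, cancellationToken);
        Reset(); // Only reached when the call succeeded.
    }

    // Discard the buffered actions without calling DynamoDB.
    public void Rollback() => Reset();

    private void Reset()
    {
        _actions.Clear();
        IsActive = false;
    }
}
```

In this sketch, rolling back is purely a client-side operation: because no action reaches DynamoDB before CommitAsync, discarding the buffer is enough.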

In the middle of the framework, an extension class named TransactExtensions controls the transaction logic, such as adding items to or removing items from the transaction. The TransactExtensions class adds transaction action requests for database PUT, UPDATE, and DELETE operations to the TransactScope instance.
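The following sketch suggests what such extension methods might look like. To keep the example standalone, it extends the SDK's TransactWriteItemsRequest directly rather than TransactScope; the class name TransactRequestExtensions and the methods AddPut, AddUpdate, and AddDelete are hypothetical and are not taken from the sample repository. It assumes the AWSSDK.DynamoDBv2 NuGet package.

```csharp
using System.Collections.Generic;
using Amazon.DynamoDBv2.Model;

// Hypothetical extension methods; the names are assumptions for illustration.
public static class TransactRequestExtensions
{
    // Add a Put action that writes the full item to the given table.
    public static TransactWriteItemsRequest AddPut(
        this TransactWriteItemsRequest request,
        string tableName,
        Dictionary<string, AttributeValue> item)
    {
        request.TransactItems ??= new List<TransactWriteItem>();
        request.TransactItems.Add(new TransactWriteItem
        {
            Put = new Put { TableName = tableName, Item = item }
        });
        return request;
    }

    // Add an Update action for the item identified by its primary key.
    public static TransactWriteItemsRequest AddUpdate(
        this TransactWriteItemsRequest request,
        string tableName,
        Dictionary<string, AttributeValue> key,
        string updateExpression,
        Dictionary<string, string> names,
        Dictionary<string, AttributeValue> values)
    {
        request.TransactItems ??= new List<TransactWriteItem>();
        request.TransactItems.Add(new TransactWriteItem
        {
            Update = new Update
            {
                TableName = tableName,
                Key = key,
                UpdateExpression = updateExpression,
                ExpressionAttributeNames = names,
                ExpressionAttributeValues = values
            }
        });
        return request;
    }

    // Add a Delete action for the item identified by its primary key.
    public static TransactWriteItemsRequest AddDelete(
        this TransactWriteItemsRequest request,
        string tableName,
        Dictionary<string, AttributeValue> key)
    {
        request.TransactItems ??= new List<TransactWriteItem>();
        request.TransactItems.Add(new TransactWriteItem
        {
            Delete = new Delete { TableName = tableName, Key = key }
        });
        return request;
    }
}
```

Each method returns the request, so calls can be chained, for example `request.AddPut(...).AddDelete(...)`.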

To help translate the different data types into corresponding DynamoDB item attributes, we use an extension class named AttributeValueExtensions.
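A conversion helper of this kind could be sketched as follows. The class name AttributeValueSketchExtensions and the ToAttributeValue overloads are assumptions for illustration, not the repository's AttributeValueExtensions; only the AttributeValue type and its S, N, BOOL, and SS properties come from the AWSSDK.DynamoDBv2 package.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using Amazon.DynamoDBv2.Model;

// Hypothetical conversions from .NET values to DynamoDB attribute values.
public static class AttributeValueSketchExtensions
{
    // Strings map to the S (string) type.
    public static AttributeValue ToAttributeValue(this string value) =>
        new AttributeValue { S = value };

    // Numbers are sent to DynamoDB as strings in the N (number) type.
    public static AttributeValue ToAttributeValue(this int value) =>
        new AttributeValue { N = value.ToString(CultureInfo.InvariantCulture) };

    public static AttributeValue ToAttributeValue(this decimal value) =>
        new AttributeValue { N = value.ToString(CultureInfo.InvariantCulture) };

    // Booleans map to the BOOL type.
    public static AttributeValue ToAttributeValue(this bool value) =>
        new AttributeValue { BOOL = value };

    // A collection of strings maps to the SS (string set) type.
    // DynamoDB rejects empty sets, so callers should pass at least one value.
    public static AttributeValue ToAttributeValue(this IEnumerable<string> values) =>
        new AttributeValue { SS = values.ToList() };
}
```

With helpers like these, an item for the Book table could be assembled as `new Dictionary<string, AttributeValue> { ["Author"] = "Homer".ToAttributeValue(), ["Title"] = "Odyssey".ToAttributeValue() }`.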

Transaction workflow overview

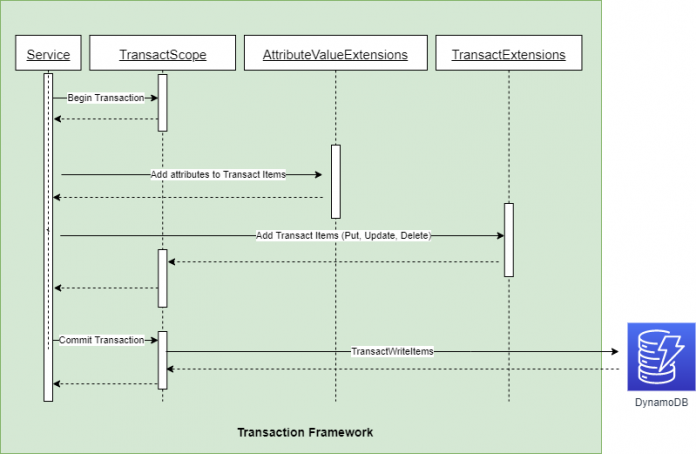

The preceding figure illustrates the transaction workflow, which is described in the following paragraphs. The service component represents the client application, which contains the business logic to begin, commit, or roll back transactions.

When a typical workflow starts, the TransactScope class initializes its internal DynamoDB client object and the actions container. Each action targets a DynamoDB table item for a PUT, UPDATE, or DELETE operation. These actions can target items in different tables, but not in different AWS accounts or Regions. No two actions can target the same item. For example, you can’t do a condition check and an update action on the same item in the same transaction.
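Because DynamoDB rejects a transaction in which two actions target the same item, a client might check for duplicates before committing. The following is a minimal sketch of such a guard; the ItemTargetGuard class and its TryRegister method are hypothetical and are not part of the framework or the SDK. It identifies an item by table name, partition key value, and sort key value.

```csharp
using System.Collections.Generic;

// Hypothetical client-side guard that tracks which items a transaction already targets.
public sealed class ItemTargetGuard
{
    private readonly HashSet<(string Table, string PartitionKey, string SortKey)> _targets =
        new HashSet<(string Table, string PartitionKey, string SortKey)>();

    // Returns true if the item was not yet targeted, false if an earlier action
    // in the same transaction already targets it.
    public bool TryRegister(string table, string partitionKey, string sortKey) =>
        _targets.Add((table, partitionKey, sortKey));

    // Forget all registered targets, for example after a commit or rollback.
    public void Clear() => _targets.Clear();
}
```

The same key values in a different table count as a different item, so the table name is part of the identity.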

The user application, which we will continue to call the “Service”, uses AttributeValueExtensions to add attributes of various types to the item and then adds the item to the container in TransactScope through TransactExtensions.

When the item operations are complete, the service can commit the items to the DynamoDB tables so that either all of them succeed or all of them fail. Alternatively, the service can roll back the scope and clean up the container by removing the TransactScope object.
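When DynamoDB cancels a transaction, the SDK's TransactWriteItemsAsync call throws a TransactionCanceledException whose CancellationReasons list has one entry per action. The sketch below shows one way a service could surface those reasons; the CommitSketch class and its method names are assumptions for illustration. It assumes the AWSSDK.DynamoDBv2 NuGet package.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

// Hypothetical helper that commits a request and reports why it was cancelled.
public static class CommitSketch
{
    // Returns (true, empty list) when all actions were committed, or
    // (false, reasons) when DynamoDB cancelled the whole transaction.
    public static async Task<(bool Committed, IReadOnlyList<string> Reasons)> TryCommitAsync(
        IAmazonDynamoDB client, TransactWriteItemsRequest request)
    {
        try
        {
            await client.TransactWriteItemsAsync(request);
            return (true, new List<string>());
        }
        catch (TransactionCanceledException ex)
        {
            return (false, DescribeReasons(ex));
        }
    }

    // Formats each cancellation reason as "Code: Message", in action order.
    // Actions that did not cause the cancellation carry the code "None".
    public static IReadOnlyList<string> DescribeReasons(TransactionCanceledException ex)
    {
        var reasons = ex.CancellationReasons ?? new List<CancellationReason>();
        return reasons.Select(r => $"{r.Code}: {r.Message}").ToList();
    }
}
```

Other exceptions, such as throttling or validation errors, are deliberately left to propagate to the caller in this sketch.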

Example use case using the framework

This example demonstrates the usage pattern of the framework by adding, deleting, and updating operations in atomic transactions. It uses the transaction framework and a layered approach to illustrate the transaction operations on three different product types: Album, Book, and Movie. The items of these three product types are persisted in three DynamoDB tables: Album, Book, and Movie.

For the Album table, each album product has an Artist and a Title attribute, where Artist is the table’s partition key (PK) and Title is the sort key (SK). This approach lets you query albums by artist. For example, you can query for the albums by the artist Johann Sebastian Bach, or get the album titled 6 Partitas. Books have attributes such as Author, Publish Date, Language, and Title, where Author is the Book table’s PK and Title is the SK. For example, you can query the book Odyssey from the author Homer and get the attributes Publish Date as 1614 and Language as English. The movie product has attributes such as Director, Genre, and Title, where Director is the Movie table’s PK and Title is the SK. For example, you can query the movie The Kid from Charlie Chaplin and learn that it belongs to the genres Comedy, Drama, and Children.

Another approach would be to use a generic PK and SK to store the distinct entities with different prefixes in the same table, such as A# for album artists, B# for book authors, and M# for movie directors. For example, A#Johann Sebastian Bach, B#Homer, and M#Charlie Chaplin. We will continue to use three different tables in this example.
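For readers curious about the single-table alternative, the prefixed partition key values mentioned above could be produced by a small helper like the following sketch. The ProductType enum and the SingleTableKeys class are hypothetical names used only for illustration; the sample application does not use this approach.

```csharp
using System;

// Hypothetical product types matching the three entities in this post.
public enum ProductType { Album, Book, Movie }

// Hypothetical helper that builds prefixed partition key values for a single-table design.
public static class SingleTableKeys
{
    // owner is the artist, author, or director, depending on the product type.
    public static string PartitionKey(ProductType type, string owner)
    {
        switch (type)
        {
            case ProductType.Album: return "A#" + owner;
            case ProductType.Book: return "B#" + owner;
            case ProductType.Movie: return "M#" + owner;
            default: throw new ArgumentOutOfRangeException(nameof(type));
        }
    }
}
```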

We use the front-end UI of the sample application (available in the GitHub repository) to run transactions on the three tables. A user follows this sequence of events:

Create the three tables by choosing Create Product Tables (Book, Album, Movie).

Choose Begin Transaction to start a new transaction.

Select Album, Book, or Movie on the Product Type drop-down menu.

Enter the corresponding attributes for the selected product type.

Choose Add Item or Remove Item to add or remove items from the corresponding tables.

To update an item, enter the product PK and SK and choose Retrieve Item to get the latest version of an item, change the attributes as needed, and then choose Add Item.

Choose Commit Transaction to commit the transaction items to the tables or choose Cancel Transaction to roll back the transaction.

Layered approach

We implemented this example using three layers (front end, service, and data provider) with an interface and implementation approach. The interface defines the prototype of the layer, and the implementation contains the details. The front-end layer interacts with the service layer through its interface to create tables; begin the transaction; add, update, or remove items; and then commit or roll back the transaction. The service layer holds the core business logic and interacts with the data provider layer and the transaction framework to manage the Album, Book, and Movie product types. The data provider layer decouples the DynamoDB API from the service layer for the DynamoDB item operations.

Create tables

Choose Create Product Tables (Book, Album, Movie) to initiate the createTableBtn_Click function, which then invokes the ProductService layer three times to create the Album, Book, and Movie tables.

The service layer uses ProductProvider to create the corresponding tables, and ProductProvider calls the DynamoDB CreateTable API operation.
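As an illustration of the table creation step, the sketch below builds CreateTable requests for the three key schemas described earlier. The ProductTableSketch class and its methods are hypothetical, and the on-demand billing mode and string key types are assumptions; the sample repository may configure its tables differently. It assumes the AWSSDK.DynamoDBv2 NuGet package.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

// Hypothetical data provider helper for creating the product tables.
public static class ProductTableSketch
{
    // Build a request for a table with a string partition key and a string sort key.
    public static CreateTableRequest BuildRequest(string tableName, string partitionKey, string sortKey)
    {
        return new CreateTableRequest
        {
            TableName = tableName,
            AttributeDefinitions = new List<AttributeDefinition>
            {
                new AttributeDefinition(partitionKey, ScalarAttributeType.S),
                new AttributeDefinition(sortKey, ScalarAttributeType.S)
            },
            KeySchema = new List<KeySchemaElement>
            {
                new KeySchemaElement(partitionKey, KeyType.HASH),
                new KeySchemaElement(sortKey, KeyType.RANGE)
            },
            // Assumption: on-demand capacity, so no provisioned throughput is needed.
            BillingMode = BillingMode.PAY_PER_REQUEST
        };
    }

    // Create the Album, Book, and Movie tables one after another.
    public static async Task CreateProductTablesAsync(IAmazonDynamoDB client)
    {
        await client.CreateTableAsync(BuildRequest("Album", "Artist", "Title"));
        await client.CreateTableAsync(BuildRequest("Book", "Author", "Title"));
        await client.CreateTableAsync(BuildRequest("Movie", "Director", "Title"));
    }
}
```

CreateTable is asynchronous on the service side: each call returns while the table is still being created, so a caller would need to wait until the table becomes active before writing items.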

Begin transaction

Choose Begin Transaction to initiate the beginTransactionBtn_Click function, which invokes the ProductService layer to start a transaction.

ProductService starts the transaction through its BeginTransaction method, which invokes the Begin method in TransactScope. The Begin method initializes the action container held in a TransactWriteItemsRequest.

Add transaction items

You can visit the GitHub repository to explore how to use the TransactExtensions class to add and map transactions.

Attribute extensions

This common extension class is used to build regular item actions by translating the entity objects to the AWS AttributeValue type. You can explore this in the AttributeValueExtensions class.

A few comments on transactions:

Normalized data structures are a primary driver for ACID requirements. With DynamoDB, many of these requirements are fulfilled by storing item aggregates as a single item or set of items. Each single-item operation is ACID, because no two operations can partially affect the same item.

Avoid using transactions to maintain a normalized data set on DynamoDB.

Transactions are a good fit for committing changes across different items or for performing conditional batch inserts and updates.

Consider the following best practices while working with transactions.

Transactions can occur across multiple tables, but no two actions can target the same item in DynamoDB at the same time.

There is a maximum of 100 action requests in a transaction.

Each transaction is limited to a single AWS account and Region.

Conclusion

In this post, we introduced a framework that helps developers expand their use of transactions in DynamoDB, using methods similar to transactions in SQL databases. The framework provides a common, reusable layer that wraps the DynamoDB transaction APIs, so the service layer can work with regular item actions without having to understand the details of those APIs. This allows transactions to be started at any point and makes transactions easier to adopt with a NoSQL database such as DynamoDB.

There are two fundamental concepts in this framework: the transaction scope, which translates standard item actions into transaction items, and the extension class for converting an entity object into a DynamoDB attribute value. To show the use of this framework, we created a sample application using the framework and a layered approach to manage three different tables.

We wrote the sample code in the C# programming language, using .NET Core and AWSSDK.DynamoDBv2. You can find the entire sample code and the sample application that implements the design pattern described in this post in the GitHub repository.

About the Authors

Jeff Chen, Principal Architect with AWS Professional Services. As a technical leader, he works with customers and partners on their cloud migration and optimization programs. In his spare time, Jeff likes to spend time with family and friends exploring mountains near Atlanta, GA.

Esteban Serna, Sr. DynamoDB Specialist SA. Esteban has been working with databases for the last 15 years, helping customers choose the right architecture to match their needs. Fresh from university, he worked on deploying the infrastructure required to support contact centers in distributed locations. When NoSQL databases were introduced, he fell in love with them and decided to focus on them, as centralized computing was no longer the norm. Today Esteban focuses on helping customers design massively scalable distributed applications that require single-digit millisecond latency using DynamoDB. Some people say he is an open book, and he loves to share his knowledge with others.

Read more: AWS Database Blog