Thriving in today’s world requires creating modern data pipelines that make it easy to move data and extract value from it. To help businesses build modern data pipelines, we’ll cover definitions and examples of data pipelines, must-have features of modern data pipelines, and more.

Data Pipeline or ETL Pipeline: What’s the Difference?

Features of Modern Data Pipelines

Real-time Data Processing and Analysis

Scalable Architecture

Fault-Tolerant Architecture

Exactly-Once Processing (E1P)

Self-Service Management

Process High Volumes of Data

Streamlined Data Pipeline Development

What is a Data Pipeline?

A data pipeline is a sequence of actions that moves data from a source to a destination. A pipeline may involve filtering, cleaning, aggregating, enriching, and even analyzing data-in-motion.
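As a rough illustration of that "sequence of actions," the sketch below chains filtering, cleaning, enriching, and aggregating stages over a handful of in-memory events. The event fields, the `REGIONS` lookup table, and all function names are hypothetical and chosen only for this example; a production pipeline would read from real sources and write to a real destination.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator

# Hypothetical sample events; the field names are made up for illustration.
RAW_EVENTS = [
    {"user": " alice ", "amount": "20.5", "country": "us"},
    {"user": "bob", "amount": None, "country": "de"},  # missing amount
    {"user": "alice", "amount": "4.5", "country": "us"},
    {"user": "carol", "amount": "10", "country": "fr"},
]

# Assumed reference data used by the enrichment stage.
REGIONS = {"us": "AMER", "de": "EMEA", "fr": "EMEA"}


def filter_valid(events: Iterable[dict]) -> Iterator[dict]:
    """Filter: drop events that have no amount."""
    for event in events:
        if event["amount"] is not None:
            yield event


def clean(events: Iterable[dict]) -> Iterator[dict]:
    """Clean: trim whitespace and convert the amount to a number."""
    for event in events:
        yield {**event, "user": event["user"].strip(), "amount": float(event["amount"])}


def enrich(events: Iterable[dict]) -> Iterator[dict]:
    """Enrich: attach a sales region looked up from the country code."""
    for event in events:
        yield {**event, "region": REGIONS.get(event["country"], "UNKNOWN")}


def aggregate(events: Iterable[dict]) -> Dict[str, float]:
    """Aggregate: total amount per region."""
    totals: Dict[str, float] = defaultdict(float)
    for event in events:
        totals[event["region"]] += event["amount"]
    return dict(totals)


def run_pipeline(source: Iterable[dict]) -> Dict[str, float]:
    # Each stage consumes the previous stage's output, one event at a time.
    return aggregate(enrich(clean(filter_valid(source))))


totals = run_pipeline(RAW_EVENTS)
print(totals)
```

Because the stages are generators, each event flows through filter, clean, and enrich before the next one is read, which loosely mirrors how data-in-motion is handled.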

Data pipelines move and unify data from an ever-increasing number of disparate sources and formats so that it’s suitable for analytics and business intelligence. In addition, data pipelines give team members exactly the data they need, without requiring access to sensitive production systems.

Data pipeline architectures describe how data pipelines are set up to enable the collection, flow, and delivery of data. Data can be moved via either batch processing or stream processing. In batch processing, batches of data are moved from sources to targets on a one-time or regularly scheduled basis. Batch processing is the tried-and-true legacy approach to moving data, but it doesn’t allow for real-time analysis and insights.

In contrast, stream processing enables the real-time movement of data. Stream processing continuously collects data from sources like change streams from a database or events from messaging systems and sensors. Stream processing enables real-time business intelligence and decision making.
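The difference between the two approaches can be sketched in a few lines. This is a simplified illustration with hypothetical function names, not a model of any particular product: the batch function produces one answer after the whole batch has been collected, while the streaming function emits an updated answer as each reading arrives.

```python
from typing import Iterable, Iterator, List


def batch_total(readings: List[float]) -> float:
    """Batch: wait until the whole batch is collected, then compute once."""
    return sum(readings)


def stream_totals(readings: Iterable[float]) -> Iterator[float]:
    """Stream: emit an updated running total as each reading arrives."""
    running = 0.0
    for reading in readings:
        running += reading
        yield running


readings = [3.0, 1.5, 4.0]

batch_result = batch_total(readings)            # one answer, after the batch closes
stream_results = list(stream_totals(readings))  # one answer per event

print(batch_result)
print(stream_results)
```

In the streaming version, a consumer can act on the first result before the last reading exists, which is the property that makes real-time decisions possible.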

Data Pipeline Examples

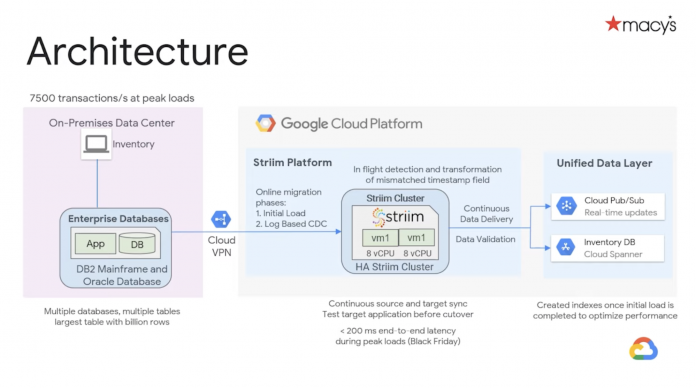

The complexity and design of data pipelines vary according to their intended purpose. For example, Macy’s streams change data from on-premises databases to Google Cloud to provide a unified experience for its customers, whether they’re shopping online or in-store.

Macy’s streams data from on-premises databases to the cloud to provide a unified customer experience

HomeServe uses a streaming data pipeline to move data pertaining to its leak detection device (LeakBot) to Google BigQuery. Data scientists analyze device performance and continuously optimize the machine learning model used in the LeakBot solution.

HomeServe streams data from a MySQL database to BigQuery for analysis and optimization of machine learning models

Data Pipeline or ETL Pipeline: What’s the Difference?

ETL pipelines are a type of data pipeline. In the ETL process (“extract, transform, load”), data is first extracted from a data source or various sources. In the “transform” phase it is processed and converted into the appropriate format for the target destination (typically a data warehouse or data lake).

While legacy ETL has a slow transformation step, a modern ETL tool like Striim replaces disk-based processing with in-memory processing to allow for real-time data transformation, enrichment, and analysis. The final step of ETL involves loading data into the target destination.

ELT pipelines (extract, load, transform) reverse the steps, allowing for a quick load of data which is subsequently transformed and analyzed in a destination, typically a data warehouse.
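The ordering difference between ETL and ELT can be shown with a small sketch. Here an in-memory SQLite database stands in for the data warehouse, which is purely an assumption for the sake of a runnable example; the table names and sample rows are hypothetical. In the ETL function the transformation runs in the pipeline before loading, and in the ELT function the raw rows are loaded first and transformed with SQL inside the destination.

```python
import sqlite3

# Hypothetical extracted rows: product name and price as text.
RAW_ROWS = [("widget", "19.99"), ("gadget", "5.00"), ("widget", "0.01")]


def etl(conn: sqlite3.Connection, rows) -> None:
    """Extract, TRANSFORM in the pipeline, then load the finished rows."""
    transformed = [(name, round(float(price) * 100)) for name, price in rows]
    conn.execute("CREATE TABLE sales_etl (product TEXT, cents INTEGER)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", transformed)


def elt(conn: sqlite3.Connection, rows) -> None:
    """Extract, LOAD the raw rows, then transform inside the destination."""
    conn.execute("CREATE TABLE raw_sales (product TEXT, price TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", rows)
    conn.execute(
        "CREATE TABLE sales_elt AS "
        "SELECT product, "
        "CAST(ROUND(CAST(price AS REAL) * 100) AS INTEGER) AS cents "
        "FROM raw_sales"
    )


def totals(conn: sqlite3.Connection, table: str) -> dict:
    """Total cents per product from one of the tables created above."""
    query = f"SELECT product, SUM(cents) FROM {table} GROUP BY product"
    return dict(conn.execute(query))


warehouse = sqlite3.connect(":memory:")  # stand-in for a real warehouse
etl(warehouse, RAW_ROWS)
elt(warehouse, RAW_ROWS)

etl_totals = totals(warehouse, "sales_etl")
elt_totals = totals(warehouse, "sales_elt")
print(etl_totals, elt_totals)
```

Both paths end with the same data. The practical trade-off is where the transformation cost is paid: in the pipeline before load (ETL) or in the destination's compute after load (ELT).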

7 Features of Modern Data Pipelines

To help companies build modern data pipelines, we have come up with a list of features that advanced pipelines contain. This list is by no means exhaustive. But ensuring your data pipelines contain these features will help your team make faster and better business decisions.

1. Real-Time Data Processing and Analytics

Modern data pipelines should load, transform, and analyze data in near real time, so that businesses can quickly find and act on insights. To start with, data must be ingested without delay from sources including databases, IoT devices, messaging systems, and log files. For databases, log-based Change Data Capture (CDC) is the gold standard for producing a stream of real-time data.
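To make the CDC idea concrete, the sketch below applies a stream of change events to a target copy of a table. The event format (`op`, `key`, `row`) is a hypothetical simplification invented for this example; real log-based CDC tools read the database's transaction log and emit richer events with their own schemas.

```python
from typing import Dict, Optional

# Hypothetical change events, in commit order, as a CDC reader might emit them.
CHANGE_LOG = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "tier": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "tier": "free"}},
    {"op": "update", "key": 1, "row": {"name": "Ada", "tier": "pro"}},
    {"op": "delete", "key": 2, "row": None},
]


def apply_change(target: Dict[int, dict], event: dict) -> None:
    """Apply one change event to the target copy of the table."""
    op: str = event["op"]
    row: Optional[dict] = event["row"]
    if op in ("insert", "update"):
        target[event["key"]] = row
    elif op == "delete":
        target.pop(event["key"], None)
    else:
        raise ValueError(f"unknown operation: {op}")


replica: Dict[int, dict] = {}
for change in CHANGE_LOG:
    apply_change(replica, change)  # the replica is current after every event

print(replica)
```

Applying events in commit order keeps the target in step with the source without re-reading the source tables, which is why this approach suits low-latency ingestion.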

Real-time, or continuous, data processing has a clear advantage over batch-based processing, which can take hours or days to extract and transfer data. This delay can have major consequences: a profitable social media trend may rise, peak, and fade before a company can spot it, or a security threat might be detected too late, allowing malicious actors to execute their plans.

Real-time data pipelines provide decision makers with more current data. Some businesses, such as fleet management and logistics firms, can’t afford any lag in data processing. They need to know in real time if drivers are driving recklessly or if vehicles are in hazardous conditions so they can prevent accidents and breakdowns.

2. Scalable Cloud-Based Architecture

Modern data pipelines rely on the cloud to enable users to automatically scale compute and storage resources up or down. While traditional pipelines aren’t designed to handle multiple workloads in parallel, modern data pipelines feature an architecture in which compute resources are distributed across independent clusters. Clusters can grow in number and size quickly, with few practical limits, while maintaining access to the shared dataset. Data processing time is easier to predict because new resources can be added quickly to support spikes in data volume.

Cloud-based data pipelines are agile and elastic. They allow businesses to take advantage of various trends. For example, a company that expects a summer sales spike can easily add more processing power when needed and doesn’t have to plan weeks ahead for this scenario. Without elastic data pipelines, businesses find it harder to quickly respond to trends.

3. Fault-Tolerant Architecture

Data pipeline failure is a real possibility while data is in transit. To mitigate the impacts on mission-critical processes, today’s data pipelines offer a high degree of reliability and availability.

Modern data pipelines are designed with a distributed architecture that provides immediate failover and alerts users in the event of node failure, application failure, and failure of certain other services.

A distributed, fault-tolerant data pipeline architecture

And if one node does go down, another node within the cluster immediately takes over without requiring major interventions.
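A toy version of this failover behavior is sketched below. The `Node` and `Cluster` classes are hypothetical and heavily simplified: real distributed systems detect failure with heartbeats and coordinate takeover between machines, whereas this example only shows the routing logic of trying the next node and recording an alert.

```python
from typing import List


class NodeDown(Exception):
    """Raised when a node cannot process an event."""


class Node:
    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def process(self, event: str) -> str:
        if not self.healthy:
            raise NodeDown(self.name)
        return f"{self.name} processed {event}"


class Cluster:
    def __init__(self, nodes: List[Node]) -> None:
        self.nodes = list(nodes)
        self.alerts: List[str] = []

    def process(self, event: str) -> str:
        # Try each node in order; fail over to the next one on failure.
        for node in self.nodes:
            try:
                return node.process(event)
            except NodeDown:
                self.alerts.append(f"node {node.name} failed; failing over")
        raise RuntimeError("no healthy nodes available")


cluster = Cluster([Node("node-a"), Node("node-b")])
first = cluster.process("e1")

cluster.nodes[0].healthy = False  # simulate node-a going down
second = cluster.process("e2")

print(first)
print(second)
print(cluster.alerts)
```

The caller never sees the failure of `node-a`: the event is handled by `node-b`, and the failure surfaces only as an alert.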

4. Exactly-Once Processing (E1P)

Data loss and data duplication are common issues with data pipelines. Modern data pipelines offer advanced checkpointing capabilities that ensure that no events are missed or processed twice. Checkpointing keeps track of which events have been processed and how far each has progressed through the pipeline.

Checkpointing coordinates with the data replay feature that’s offered by many sources, allowing a rewind to the right spot if a failure occurs. For sources without a data replay feature, data pipelines with persistent messaging can replay and checkpoint data to ensure that it has been processed, and only once.
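The interaction between checkpointing and replay can be sketched as follows. All class and function names here are hypothetical. The key assumption, flagged in the code, is that the result and the checkpoint are committed together; in a real system that would need to be a single atomic operation, otherwise a crash between the two writes could still cause a duplicate or a gap.

```python
from typing import Iterator, List, Optional, Tuple


class ReplayableSource:
    """A source that can be re-read from any offset (assumed capability)."""

    def __init__(self, events: List[str]) -> None:
        self._events = list(events)

    def read_from(self, offset: int) -> Iterator[Tuple[int, str]]:
        for i in range(offset, len(self._events)):
            yield i, self._events[i]


class Sink:
    """Stores results and the checkpoint together so they cannot disagree."""

    def __init__(self) -> None:
        self.results: List[str] = []
        self.checkpoint = 0  # offset of the next event to process

    def commit(self, offset: int, result: str) -> None:
        # Assumption: in a real system these two writes are one atomic step.
        self.results.append(result)
        self.checkpoint = offset + 1


def run(source: ReplayableSource, sink: Sink, crash_at: Optional[int] = None) -> None:
    # Always resume from the last committed checkpoint.
    for offset, event in source.read_from(sink.checkpoint):
        if offset == crash_at:
            raise RuntimeError("simulated crash")
        sink.commit(offset, event.upper())


source = ReplayableSource(["a", "b", "c", "d"])
sink = Sink()

try:
    run(source, sink, crash_at=2)  # fails before event at offset 2 is committed
except RuntimeError:
    pass

run(source, sink)  # restart: rewinds to the checkpoint and continues
print(sink.results, sink.checkpoint)
```

After the restart, the events at offsets 0 and 1 are not reprocessed and the event at offset 2 is not skipped, so every event appears in the output exactly once.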

5. Self-Service Management

Modern data pipelines are built using tools that connect to one another. From data integration platforms and data warehouses to data lakes and programming languages, teams can use various tools to easily create and maintain data pipelines in a self-service and automated manner.

Traditional data pipelines usually require a lot of time and effort to integrate a large set of external tools for data ingestion, transfer, and analysis. Ongoing maintenance is time-consuming and leads to bottlenecks that introduce new complexities. And legacy data pipelines are often unable to handle all types of data, including structured, semi-structured, and unstructured.

Modern pipelines democratize access to data. Handling all types of data is easier and more automated than before, allowing businesses to take advantage of data with less effort and in-house personnel.

6. Process High Volumes of Data (in Varied Formats)

By 2025, the amount of data produced each day is predicted to be a whopping 463 exabytes. Since unstructured and semi-structured data make up 80% of the data collected by companies, modern data pipelines must be equipped to process large volumes of semi-structured data (like JSON, HTML, and XML files) and unstructured data (including log files, sensor data, weather data, and more).

A big data pipeline might have to move and unify data from apps, sensors, databases, or log files. Often, data has to be standardized, cleaned, enriched, filtered, and aggregated, all in near real time.
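As a small illustration of unifying varied formats, the sketch below parses a JSON payload, an XML payload, and a log line into one common record shape. The payloads, field names, and the choice of Celsius as the standard unit are all hypothetical and exist only to show the standardization step.

```python
import json
import xml.etree.ElementTree as ET
from typing import Dict, List, Union

Record = Dict[str, Union[str, float]]

# Hypothetical inputs from three different kinds of sources.
JSON_PAYLOAD = '{"sensor": "t-1", "temp_f": 212.0}'
XML_PAYLOAD = "<reading><sensor>t-2</sensor><temp_c>25.0</temp_c></reading>"
LOG_LINE = "2024-01-01T00:00:00Z sensor=t-3 temp_c=30.5"


def from_json(text: str) -> Record:
    """Parse JSON and standardize Fahrenheit to Celsius."""
    doc = json.loads(text)
    return {"sensor": doc["sensor"], "temp_c": (doc["temp_f"] - 32) * 5 / 9}


def from_xml(text: str) -> Record:
    """Parse XML; the value is already in Celsius."""
    root = ET.fromstring(text)
    return {"sensor": root.findtext("sensor"), "temp_c": float(root.findtext("temp_c"))}


def from_log(line: str) -> Record:
    """Parse key=value pairs that follow the leading timestamp."""
    fields = dict(part.split("=", 1) for part in line.split()[1:])
    return {"sensor": fields["sensor"], "temp_c": float(fields["temp_c"])}


records: List[Record] = [
    from_json(JSON_PAYLOAD),
    from_xml(XML_PAYLOAD),
    from_log(LOG_LINE),
]

# Once the records share a schema, filtering and aggregating are uniform.
hot_sensors = [r["sensor"] for r in records if r["temp_c"] > 28]
print(records)
print(hot_sensors)
```

The per-format parsers are the only source-specific code. Everything downstream works on the common record shape.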

7. Streamlined Data Pipeline Development

Modern data pipelines are developed following the principles of DataOps, a methodology that combines various technologies and processes to shorten development and delivery cycles. DataOps is about automating data pipelines across their entire lifecycle. As a result, pipelines deliver data on time to the right stakeholder.

Streamlining pipeline development and deployment makes it easier to modify or scale pipelines to accommodate new data sources. Testing data pipelines is easier, too. Pipelines are built in the cloud, where engineers can rapidly create test scenarios by replicating existing environments. They can then test planned pipelines and modify them accordingly before the final deployment.
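One piece of this that is easy to automate is testing individual transformations before deployment. The sketch below shows a hypothetical transformation, `normalize_order`, with two plain assertion-based tests. The function, its fields, and its default currency are assumptions made for illustration; in practice such tests would run under a test framework in a CI job.

```python
def normalize_order(raw: dict) -> dict:
    """Transformation under test: coerce types and apply defaults."""
    return {
        "order_id": int(raw["id"]),
        "total_cents": round(float(raw["total"]) * 100),
        "currency": raw.get("currency", "usd").upper(),
    }


def test_normalize_order_coerces_types() -> None:
    result = normalize_order({"id": "42", "total": "19.99", "currency": "eur"})
    assert result == {"order_id": 42, "total_cents": 1999, "currency": "EUR"}


def test_normalize_order_defaults_currency() -> None:
    # Currency is optional in the raw record and falls back to USD.
    assert normalize_order({"id": "7", "total": "5"})["currency"] == "USD"


# Run the tests directly; both pass silently if the transformation is correct.
test_normalize_order_coerces_types()
test_normalize_order_defaults_currency()
```

Small, fast tests like these make it safer to modify a pipeline when a new data source is added.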

Gain a Competitive Edge

Data pipelines are the backbone of digital systems. Pipelines move, transform, and store data and enable organizations to harness critical insights. But data pipelines need to be modernized to keep up with the growing complexity and size of datasets. And while the modernization process takes time and effort, efficient and modern data pipelines will allow teams to make better and faster decisions and gain a competitive edge.

Striim’s modern streaming data pipeline solution

To learn more about Striim’s streaming data pipeline solution, feel free to request a demo or try Striim for free. Striim integrates with over a hundred sources and targets, including databases, message queues, log files, data lakes, and IoT. Striim offers scalable in-memory streaming SQL to process and analyze data in flight.
