PostgreSQL has become the preferred open-source relational database for many enterprise companies and startups, powering leading business and mobile applications. Many customers choose PostgreSQL as the target database when migrating from commercial engines such as Oracle and Microsoft SQL Server. AWS offers Amazon Relational Database Service (Amazon RDS) for PostgreSQL and Amazon Aurora PostgreSQL-Compatible Edition as fully managed PostgreSQL database services.

You can migrate from SQL Server to PostgreSQL using the AWS Schema Conversion Tool (AWS SCT) and the AWS Database Migration Service (AWS DMS). AWS SCT can convert both the source database schema and the majority of the code objects into the target database’s compatible format. With AWS DMS, you can migrate the data from the source database to the target database with minimal downtime. Another database migration method is to use Babelfish for Aurora PostgreSQL. With Babelfish, Amazon Aurora PostgreSQL supports commonly used T-SQL language and semantics which reduces the amount of code changes related to database calls in an application. As a result, the amount of application code you need to re-write is minimized, reducing the risk of any new application errors.

Some customers that have simpler migration requirements, such as one-time migration for few tables, look for quick way to migrate schema and data from SQL Server to PostgreSQL. In this post, we demonstrate an alternative data migration method using the PostgreSQL extension tds_fdw. PostgreSQL is designed to be easily extensible, and extensions are add-on modules that enhance the functionality of the database. The tds_fdw extension allows PostgreSQL to access the database’s supporting tabular data stream (TDS) protocol (used by Sybase and SQL Server databases) through a PostgreSQL feature called a foreign data wrapper. The foreign data wrapper is responsible for fetching data from the remote data source and returning it to the PostgreSQL engine. To learn more about federated query support using PostgreSQL foreign data wrapper, see Federated query support for Amazon Aurora PostgreSQL and Amazon RDS for PostgreSQL.

Solution overview

The high-level steps to implement this solution are as follows:

Configure the target PostgreSQL database.

Import the schema from the SQL Server database.

Create the target tables based on the source table definition.

Reconcile DATE-related column issues due to locale setting differences.

Perform data validation.

Clean up the intermediate objects used for migration.

Prerequisites

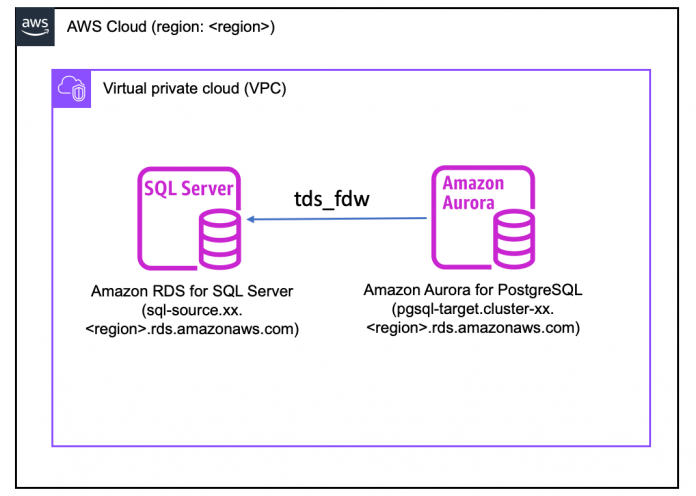

This post assumes you have a SQL Server instance created and running. In this post, we have an RDS for SQL Server database configured with the following information. This solution works with SQL Server set up in a non-Amazon RDS environment as well.

SQL Server version: SQL Server Standard Edition 15.00.4236.7.v1

Database endpoint: sql-source.xx.<region>.rds.amazonaws.com

Database name: dms_sample

Admin user: admin

Admin user password: your_complex_password

Application user: dms_user

Application user password: your_complex_password

Database client tool: SQL Server Management Studio (SSMS)

We also assume you have a PostgreSQL database created and running. In this post, we have the following target database. Although we use Amazon Aurora PostgreSQL, this solution works with Amazon RDS for PostgreSQL as long as the required tds_fdw extension is supported.

Aurora PostgreSQL version: 15.2

Database endpoint: pgsql-target.cluster-xx.<region>.rds.amazonaws.com

Database name: fdwdemo

Admin user: postgresql

Admin user password: your_complex_password

Database client tool: psql

The following diagram illustrates our database configuration.

We use the Amazon DMS Sample Database for SQL Server schema to demonstrate the migration process.

This solution involves the creation and utilization of AWS resources and therefore will incur costs on your account, including but not limited to RDS for SQL Server instances and storage costs and Aurora instances and storage costs. Refer to AWS Pricing for more information.

Configure the target PostgreSQL database

Perform the following procedures to set up the tds_fdw extension and create an empty schema to store the foreign tables and target tables to be migrated:

Connect to the target database fdwdemo, using an account that has rds_superuser permissions. You can use the postgres user, which is the admin user created during the RDS instance creation with such permission. Enter the password when prompted.

Create an empty schema. Here, we use the fdw schema to store foreign tables referencing directly to the SQL Server database. We use the dms_sample schema to store objects migrated from SQL Server. Although an empty schema is not compulsory, it makes the cleanup and troubleshooting easier compared to working with existing schemas with other objects inside.

The output should look like the following:

The following architecture illustrates our setup between databases.

Run the following commands to create the user dms_user, which will be used as the least privilege user to carry out the data migration:

The output should look like the following:

Run the following command to install the tds_fdw extension:

CREATE EXTENSION tds_fdw;

The output should look like the following:

Create a foreign server (SQL Server) in the target database (PostgreSQL) and grant permission for dms_user to use it. The foreign server represents the remote database you want to connect from PostgreSQL. In this case, it’s the dms_sample database in the sql-source.xx.<region>.rds.amazonaws.com instance. Refer to TDS Foreign data wrapper for advanced options such as TDS version and optimizer-related parameters used for query planning.

The output should look like the following:

Specify a user name and password pair for the user dms_user to log in to the remote database specified in the foreign server. The dms_user inside the OPTIONS clauses refers to the SQL login created on the source SQL Server.

The output should look like the following:

Import the schema from the SQL Server database

Complete the following steps to import your schema:

Connect to the target database fdwdemo using the user dms_user created previously. Enter the password when prompted.

Instead of using CREATE FOREIGN TABLE one by one for each table required for migration, you can use the IMPORT FOREIGN SCHEMA command to create foreign tables in bulk based on the import options. In the following SQL commands, we exclude the view getNewID during foreign table creation and include column DEFAULT expressions in the definitions of foreign tables:

The output should look like the following:

After you import the foreign tables, run the following command to list them in the schema fdw from the target database:

Optionally, run the following query to count the number of rows in the source database via a foreign data wrapper. The SELECT statement generates these SELECT COUNT(*) statements for each foreign tables in the schema fdw, and the gexec command runs the generated statements one by one:

Create target tables based on the source table definition

Run the following script to create target tables based on the foreign tables imported in the previous steps and populate the data after table creation using CREATE TABLE … AS SELECT… (CTAS) SQL syntax. Based on the size of the source and destination, it could take several minutes to create tables and transfer the data.

If you have already created a target table using other methods, such as AWS SCT or Liquibase, generate the script with the syntax INSERT INTO..SELECT.. FROM.. instead. For example, INSERT INTO dms_sample.ticket_purchase_hist AS SELECT * FROM fdw.ticket_purchase_hist.

The generated script and execution output is as following, omitting some output for better reading experience.

Ignore the message related to ERROR: invalid input syntax for the SQL Server DATETIME data type at this stage. We explain the causes and share workarounds in next section.

Handle DATE-related columns due to locale setting differences

As part of troubleshooting, let’s examine the foreign table structure in PostgreSQL and the source table structure in SQL Server for the table sporting_event.

To display the foreign table column definition, run d fdw.sporting_event in your psql terminal:

To check the source table definition, script the table definition for sporting_event using SQL Server Management Studio (SSMS) connected to the source SQL Server database. For instructions, refer to Script tables. The following SQL script is the output of the script table definition using SSMS:

To confirm the root cause of the error ERROR: invalid input syntax that occurred previously, query start_date alone. The same error should appear as expected:

As part of the foreign table access, the data in the DATE data type in SQL Server converts into TEXT format, and later converts to the DATE data type. The default date format of MM DD YYYY HH12:MI:SS:AM through the foreign server is not compatible with the PostgreSQL default setting, which is YYYY-MM-DD. A similar problem would be applicable for the SQL Server DATETIME2 data type column as well.

Workaround 1: Create a view to map the source table data type from DATE to DATETIME

In this workaround, we convert the DATE data type to DATETIME, which maps to TIMESTAMP in PostgreSQL. To minimize the impact on the source database, we create a view for this change instead of making a direct modification in the source SQL Server database. Use SSMS to connect to the source SQL Server database dms_sample with SQL login dms_user and run the following SQL to create the view:

In the target PostgreSQL database, we import the view sporting_event_v as a new foreign table. The LIMIT TO clause is useful here to restrict importing to only this table.

The output should look like the following:

Let’s create a target table without data based on fdw.sporting_event. It completes successfully because no actual data conversion is involved:

The output should look like the following:

Insert the data based on the sporting_event_v view and convert the data type from TIMESTAMP to DATE as part of the INSERT..SELECT.. statement:

The output should look like the following:

Workaround 2: Use the TEXT data type instead of DATE in the PostgreSQL foreign table

Because PostgreSQL can’t convert the data into the DATE data type due to the improper format, we can use the TEXT data type instead.

Drop the start_date column and add it back using the TEXT data type:

The output should look like the following:

Create the target table dms_sample.sporting_event based on the foreign table fdw.sporting_event, with the data type conversion from TEXT to DATE for column start_date:

The output should look like the following:

Perform data validation

Data validation is critical to ensure that your data was migrated accurately from the source to the target. In this section, we demonstrate how to perform data validation by examining the row count and then an actual data comparison. Row count is faster, but may not able to catch data value discrepancies.

The following is an example of performing data validation based on row count for the table sporting_event:

The output should look like the following:

The warning message Table definition mismatch: Foreign source has column named, but target table does not. Column will be ignored. is related to how the tds_fdw extension interprets the value in the COUNT function. If we perform COUNT on an existing column such as id instead of *, there won’t be a warning message, but COUNT(*) returns the count for all rows, and COUNT(id) only returns the count for rows for which the ID is NOT NULL.

Generate the row count comparison SQL statements and run them using gexec in psql:

The following is an example of data comparison for the table sporting_event:

The output should look like the following:

Generate the data comparison statements and run them using gexec in psql:

Clean up intermediate objects used for migration

These foreign tables are no longer required after migration, so you can drop them programmatically:

Similarly, drop the foreign server and related objects. The CASCADE keyword drops the user mapping for the foreign server as well:

Additional use case: Use an interim RDS for SQL Server database when direct connection is not feasible

Using tds_fdw to migrate data requires direct network connectivity from the source SQL Server database to the target PostgreSQL database. In real-world migration scenarios, there are use cases where direct network connectivity is not feasible. One workaround is to take a SQL Server native backup, upload the backup to an Amazon Simple Storage Service (Amazon S3) bucket, and then restore to an intermittent SQL Server. See Importing and exporting SQL Server databases using native backup and restore for steps to restore a native backup from on-premises SQL Server to Amazon RDS for SQL Server. The following diagram illustrates this architecture.

Clean up

To avoid ongoing costs, delete the resources that you created as part of this post:

RDS for SQL Server DB instance

Aurora PostgreSQL DB instance

Conclusion

In this post, we showed you how to use the tds_fdw extension to migrate data from SQL Server to PostgreSQL, as well as how to address known issues related to the DATE data type in SQL Server. This approach works well for customers who have simple data migration requirements. In this case, you can migrate your data directly over the network via the tds_fdw extension without using AWS DMS.

If you have any comments or questions, leave them in the comment section.

About the Authors

Donghua Luo is a Senior RDS Specialist Solutions Architect. He works with AWS customers to migrate and achieve higher flexibility, scale, and resiliency with database services in AWS cloud.

Bill Baldwin is a Principal Specialist Solutions Architect at AWS. Bill has over 20 years of experience working with data. He is passionate about providing technical guidance to our global customers, on their AWS database, analytics, and ML projects.

Venkataramanan Govindarajan is a Senior Database Specialist Solutions Architect at Amazon Web Services. He works with our customers to provide architecture guidance and database solutions, helping them innovate using AWS services to improve business value.

Read MoreAWS Database Blog