Semantic reasoning is a powerful form of symbolic AI that brings meaning to data. At a high level, this is achieved by inferring new facts from existing information (or base facts) using a data model and knowledge of the domain. It can be useful for performing calculations, ensuring consistency, and detecting intricate patterns.

Semantic reasoning is used for many purposes, including detecting financial crimes and predicting new drugs for rare diseases.

In this post, we demonstrate how to implement semantic reasoning rules over a Formula 1 racing dataset by integrating RDFox with Amazon Neptune. We use the example Formula 1 racing dataset published by the Ergast Developer API.

An example reasoning rule that we could apply to our Formula 1 dataset is: if a driver participated in the Monaco Grand Prix, and the driver is part of team Ferrari, we can infer that Ferrari also participated in the race.
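To make the idea concrete, here is a minimal Python sketch of that single rule applied by forward chaining over a set of (subject, predicate, object) tuples. The predicate names `participatedIn` and `memberOf` and all identifiers are hypothetical. RDFox evaluates rules like this natively and keeps the results up to date; this sketch just applies the rule once.

```python
# Minimal forward-chaining sketch of the Ferrari rule; identifiers are hypothetical.
Triple = tuple[str, str, str]


def infer_team_participation(facts: set[Triple]) -> set[Triple]:
    """One application of: (?d participatedIn ?r) AND (?d memberOf ?t) => (?t participatedIn ?r)."""
    # Index each driver's teams so the join becomes a dictionary lookup
    teams_of: dict[str, set[str]] = {}
    for s, p, o in facts:
        if p == "memberOf":
            teams_of.setdefault(s, set()).add(o)

    inferred: set[Triple] = set()
    for s, p, o in facts:
        if p == "participatedIn":
            for team in teams_of.get(s, ()):
                inferred.add((team, "participatedIn", o))
    # Return only facts that were not already stated explicitly
    return inferred - facts


facts = {
    ("driver_x", "participatedIn", "monaco_gp"),
    ("driver_x", "memberOf", "ferrari"),
}
print(infer_team_participation(facts))  # {('ferrari', 'participatedIn', 'monaco_gp')}
```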

By integrating RDFox with Neptune, you can store all your data in Neptune, taking advantage of its high availability, automated backups, and other managed service attributes, while also using the in-memory semantic reasoning capabilities of RDFox.

Both Neptune and RDFox support the W3C Semantic Web standards RDF 1.1 and SPARQL 1.1. This means that data is compatible between the two systems, and queries can be federated across them.

Solution overview

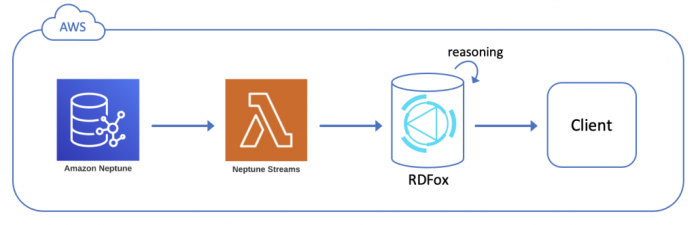

The following diagram illustrates our solution architecture.

We use the following services in our solution:

Amazon Neptune – A fast, reliable, and fully managed graph database service that makes it easy to build and run applications that work with highly connected datasets. It is purpose-built and optimized for storing billions of relationships and querying graph data with millisecond latency.

RDFox – A high-performance in-memory graph database built for semantic reasoning and speed, capable of making complex inferences, and available on AWS Marketplace.

AWS Lambda – A serverless, event-driven compute service that lets you run code for virtually any type of application or backend service without provisioning or managing servers. You can trigger Lambda from over 200 AWS services and software as a service (SaaS) applications, and only pay for what you use.

Amazon Elastic Container Service – Amazon ECS is a fully managed container orchestration service that makes it easy for you to deploy, manage, and scale containerized applications.

Neptune Streams – Neptune Streams logs every change to your graph as it happens, in the order that it is made, in a fully managed way. When you enable Neptune Streams, Neptune takes care of availability, backup, security, and expiration.

Customers are responsible for the costs of AWS and AWS Marketplace services. For pricing information for the AWS services, see the public pricing for Amazon Neptune, AWS Lambda, and Amazon ECS. For RDFox, see RDFox Pricing Information on AWS Marketplace. You can delete the stacks once you are finished with them to stop any charges.

The following diagram illustrates our AWS infrastructure.

All of the data and queries used in this solution can be found in the following Amazon Simple Storage Service (Amazon S3) folder.

Follow these steps to create the solution:

Load the semantic reasoning rules into RDFox.

Load some RDF data into Neptune to be reasoned upon.

Query Neptune for new inferred facts.

Prerequisites

You can set up the environment for this solution by following these steps:

Deploy and run RDFox on AWS.

Create an RDFox guest role for SPARQL 1.1 Federated Query.

Set up Neptune.

Create a graph notebook.

Enable Neptune Streams.

Deploy and configure a Neptune-to-Neptune Streams consumer application.

Deploy and run RDFox on AWS

The easiest way to deploy and run RDFox for development purposes on AWS is through AWS Marketplace. RDFox by Oxford Semantic Technologies supports an hourly subscription on AWS Marketplace.

After you have accepted the license terms, access the following AWS CloudFormation template to quickly create an RDFox stack (make sure to set your preferred Region). This template runs RDFox inside a Docker image on Amazon ECS.

For more information about running RDFox on AWS Marketplace, refer to Get Started with RDFox on AWS Marketplace.

When configuring your stack parameters, use the default name for the data store and the admin role.

After your stack is created, note the following outputs to use later:

VPC – RDFox runs within a VPC. Use this VPC later when creating the Neptune cluster to allow for simpler communication between the two services.

RDFoxClientSG – This is the security group you use for your Neptune-to-Neptune replication Lambda function, which you configure later in this post.

RDFoxLoadBalancerDNSName – This is the address where the Neptune-to-Neptune replication Lambda function is able to access RDFox.

SSHTunnel – This is the SSH command used to access the running RDFox instance.

RDFoxFirstRolePassword – This is the password generated for the RDFoxFirstRoleName that will be used for authentication.

RDFoxPrivateSubnet – This is the subnet you need to use for your stream poller.

PrivateRouteTableOne – This is the route table associated with the preceding subnet.

Alternatively, if you have a separate RDFox license, you can run RDFox on a regular Amazon Elastic Compute Cloud (Amazon EC2) instance instead of through AWS Marketplace. A free trial license is available.

Create an RDFox guest role for SPARQL 1.1 Federated Query

You will need to run federated queries from Neptune to RDFox to see the combined response from the two services.

In this setup, by default, the RDFox SPARQL endpoint requires a basic authentication header using RDFoxFirstRoleName and RDFoxFirstRolePassword from the CloudFormation outputs. Neptune does not support basic authentication headers through Federated Query.

Set up a guest role that doesn’t require a basic authentication header to run queries on RDFox.

In this setup, RDFox is set up inside a VPC. We advise using the same VPC for both Neptune and RDFox, and ensuring that the endpoints are only accessible from inside the VPC.

For more information on securing RDFox, see Access Control. To learn more about access control for Neptune, see Security in Amazon Neptune.

Open a terminal and run the SSHTunnel command previously noted from the CloudFormation stack outputs (you might need to adjust the key path) to connect to the RDFox instance.

In RDFox, permissions need to be granted to a guest role to allow operations without specifying a user name and password.

Run the following commands to create the guest role and set its permissions (you need to use the RDFoxFirstRolePassword value from the RDFox CloudFormation stack output to authenticate):

Set up Neptune

Neptune is a fast, reliable, fully managed graph database service that makes it easy to build and run applications that work with highly connected datasets. Neptune supports the W3C’s graph model RDF and its query language SPARQL.

Neptune is designed to run inside a VPC, so we recommend using the same VPC for both Neptune and RDFox, because this will enable communication between the two entities.

Follow the steps in Launching a Neptune DB cluster using the AWS Management Console and during the process, perform the following actions:

Use the VPC that you noted from the RDFox stack outputs

Create a new security group for your Neptune cluster

After launching Neptune, go to its security group and modify the inbound rules to allow traffic from within the security group on the database port (8182 by default).

Additionally, in order to be able to run federated queries from Neptune to RDFox, add your Neptune instance to the security group RDFoxClientSG.
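If you prefer to script the inbound rule rather than edit it in the console, the following sketch assumes boto3 is installed, AWS credentials are configured, and `group_id` is the ID of the security group you created for Neptune. It builds a self-referencing rule on port 8182 and passes it to EC2's `authorize_security_group_ingress`. It does not cover adding RDFoxClientSG to the cluster, and the function names are hypothetical.

```python
NEPTUNE_PORT = 8182  # Neptune's default database port


def self_referencing_ingress(group_id: str, port: int = NEPTUNE_PORT) -> list[dict]:
    """Build an IpPermissions list letting members of group_id reach each other on port."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            # Source is the same security group, i.e. traffic "from within the group"
            "UserIdGroupPairs": [{"GroupId": group_id}],
        }
    ]


def apply_ingress(group_id: str) -> None:
    """Apply the rule with boto3 (requires AWS credentials and the boto3 package)."""
    import boto3  # imported lazily so the builder above works without boto3 installed

    ec2 = boto3.client("ec2")
    ec2.authorize_security_group_ingress(
        GroupId=group_id, IpPermissions=self_referencing_ingress(group_id)
    )
```

For example, `apply_ingress("sg-0123456789abcdef0")` with your own security group ID in place of the placeholder.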

Create a graph notebook

In this post, we demonstrate queries in a graph notebook. Either use the Neptune console or follow the guide to create a graph notebook to run queries against the Neptune cluster.

Enable the Neptune Streams API

The Neptune Streams API is a REST API that you can poll for change notifications to the Neptune database. Think of it as a CDC (change data capture) REST API for Neptune.

For instructions to turn on Neptune Streams for your Neptune cluster, refer to Enabling Neptune Streams.
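The consumer application used in this post does the polling for you, but a short sketch helps show what a poller requests. This hypothetical helper builds a stream URL, assuming the `/sparql/stream` path and the `limit`, `iteratorType`, `commitNum`, and `opNum` query parameters described in the Neptune Streams documentation; verify them against your engine version, and note that clusters with IAM authentication also require signed requests.

```python
from typing import Optional
from urllib.parse import urlencode


def sparql_stream_url(endpoint: str, commit_num: Optional[int] = None,
                      op_num: int = 1, limit: int = 100, port: int = 8182) -> str:
    """Build a Neptune Streams URL for the SPARQL change log.

    With no checkpoint, start from the oldest available change (TRIM_HORIZON);
    otherwise resume just after the last processed (commitNum, opNum) position.
    """
    params: dict = {"limit": limit}
    if commit_num is None:
        params["iteratorType"] = "TRIM_HORIZON"
    else:
        params.update(iteratorType="AFTER_SEQUENCE_NUMBER",
                      commitNum=commit_num, opNum=op_num)
    return f"https://{endpoint}:{port}/sparql/stream?{urlencode(params)}"


print(sparql_stream_url("my-cluster.example.com", commit_num=42, op_num=3))
# https://my-cluster.example.com:8182/sparql/stream?limit=100&iteratorType=AFTER_SEQUENCE_NUMBER&commitNum=42&opNum=3
```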

Deploy and configure the Neptune-to-Neptune Streams consumer application

We have created a Neptune-to-Neptune Streams consumer application that will capture any changes in Neptune and push them to any target SPARQL 1.1 UPDATE HTTP endpoint, thereby replicating any data in Neptune to a downstream system (in this case, RDFox).

The application includes a Lambda function for the logic to replicate data between the systems, and an Amazon DynamoDB table to keep track of the position in the queue of changes.
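Conceptually, each batch of change records has to become a SPARQL 1.1 UPDATE request for RDFox. The following sketch is not the consumer application's code: it assumes a simplified record shape with an `op` of `ADD` or `REMOVE` and a `data.stmt` string holding one N-Triples statement, and it ignores checkpointing, which the real application tracks in DynamoDB.

```python
from itertools import groupby

# Assumed record shape: {"op": "ADD" | "REMOVE", "data": {"stmt": "<s> <p> <o> ."}}
KEYWORDS = {"ADD": "INSERT DATA", "REMOVE": "DELETE DATA"}


def records_to_sparql_update(records: list[dict]) -> str:
    """Translate ordered change records into a single SPARQL 1.1 UPDATE request."""
    operations = []
    # groupby merges only *consecutive* records with the same op, preserving change order
    for op, group in groupby(records, key=lambda r: r["op"]):
        keyword = KEYWORDS[op]  # unknown op types raise KeyError rather than being skipped
        statements = "\n  ".join(r["data"]["stmt"] for r in group)
        operations.append(f"{keyword} {{\n  {statements}\n}}")
    # Multiple operations in one SPARQL 1.1 UPDATE request are separated by ';'
    return " ;\n".join(operations)


records = [
    {"op": "ADD", "data": {"stmt": "<urn:d1> <urn:memberOf> <urn:ferrari> ."}},
    {"op": "ADD", "data": {"stmt": "<urn:d1> <urn:participatedIn> <urn:monaco> ."}},
    {"op": "REMOVE", "data": {"stmt": "<urn:d1> <urn:memberOf> <urn:ferrari> ."}},
]
print(records_to_sparql_update(records))
```

Keeping the original order matters: an add followed by a remove of the same triple must leave the target without it.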

When creating the function, configure it to use the security group mentioned earlier in this post (RDFoxClientSG). For instructions to build and run the Lambda function, refer to Using AWS CloudFormation to Set Up Neptune-to-Neptune Replication with the Streams Consumer Application. Note that this guide is compatible with any SPARQL 1.1 UPDATE endpoint.

When choosing your options in the CloudFormation template, configure the network parameters as follows:

VPC – Use the VPC that you used for Neptune and RDFox

Subnet IDs – Include the subnet RDFoxPrivateSubnet that was an output from the RDFox stack

Security group IDs – Include the security group ID specified in the RDFox creation (RDFoxClientSG) and the security group you created for your Neptune cluster

For the target Neptune cluster, configure the following parameters:

Target SPARQL Update endpoint – Use the RDFoxLoadBalancerDNSName output that you noted earlier, with the HTTP scheme, port 80, and the path /datastores/default/sparql, which is the SPARQL 1.1 UPDATE endpoint. For example: http://internal-RDFox-000.eu-west-1.elb.amazonaws.com:80/datastores/default/sparql.

SPARQL Triple Only Mode – Enable this option so that named graph information isn’t replicated to RDFox.
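As a small sanity check on the endpoint format described above, this sketch builds the target URL from the load balancer DNS name. The function name is hypothetical, and it assumes the stack output is a bare host name with no scheme.

```python
def rdfox_sparql_endpoint(load_balancer_dns: str, datastore: str = "default", port: int = 80) -> str:
    """Build the RDFox SPARQL endpoint URL from the RDFoxLoadBalancerDNSName stack output."""
    if "://" in load_balancer_dns:
        # Assumes a bare host name; a scheme here would produce a malformed URL
        raise ValueError("pass the bare DNS name, without http://")
    return f"http://{load_balancer_dns}:{port}/datastores/{datastore}/sparql"


print(rdfox_sparql_endpoint("internal-RDFox-000.eu-west-1.elb.amazonaws.com"))
# http://internal-RDFox-000.eu-west-1.elb.amazonaws.com:80/datastores/default/sparql
```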

Now that you have the replication Lambda function running, all the data that is persisted into Neptune is replicated into RDFox.

Load the semantic reasoning rules into RDFox

The unique reasoning engine of RDFox allows you to automatically materialize implicit triples as you add new facts and rules. This powerful feature allows you to simplify your SPARQL code by anticipating the complex patterns you will look for most often. RDFox supports the open standards OWL, SHACL, and SWRL for reasoning, but these standards cover only a subset of its reasoning capabilities; the most comprehensive set is available through the Datalog language.

There are various ways to load reasoning rules into RDFox; in this post, we describe every step using the RDFox REST API.

OWL (Web Ontology Language) is a popular W3C standard in the semantic space. An OWL ontology contains various assertions about the classes and properties in your graph.

The following diagram illustrates our data model.

Reasoning rules are also often referred to as axioms.

Suppose we want to know which drivers have participated in which races. There is no direct relationship expressed in the graph between a :driver and a :race; we can only infer that a driver participated in a race by checking whether that driver appears in either the qualifying or the results of that race.

In OWL, we can make this inference automatic by writing an axiom such as the following:

Adding this OWL axiom to our data store makes RDFox automatically add (or more accurately infer) new facts. For example, as shown in the following diagram, RDFox infers the new :participatedInRace edge if there is data that says that the driver has a result from that race.
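The effect of the axiom can be approximated in plain Python. This sketch assumes a hypothetical data model in which Result and Qualifying nodes carry `driver` and `race` edges; the property chain quoted in the docstring is one plausible way to state such an axiom in OWL, not necessarily the content of axioms.ttl.

```python
# Hypothetical data model: Result and Qualifying nodes point to a driver and a race.
Triple = tuple[str, str, str]


def infer_participation(facts: set[Triple]) -> set[Triple]:
    """For any node X with (X driver ?d) and (X race ?r), infer (?d participatedInRace ?r).

    This mirrors a property chain such as
      :participatedInRace owl:propertyChainAxiom ( [ owl:inverseOf :driver ] :race ) .
    which is one plausible way to write the axiom (the actual axioms.ttl may differ).
    """
    drivers: dict[str, set[str]] = {}
    for s, p, o in facts:
        if p == "driver":
            drivers.setdefault(s, set()).add(o)
    return {
        (d, "participatedInRace", o)
        for s, p, o in facts
        if p == "race"
        for d in drivers.get(s, ())
    }


facts = {
    ("result_1", "driver", "driver_a"), ("result_1", "race", "race_2009_01"),
    ("qualifying_9", "driver", "driver_b"), ("qualifying_9", "race", "race_2009_01"),
}
print(sorted(infer_participation(facts)))
# [('driver_a', 'participatedInRace', 'race_2009_01'), ('driver_b', 'participatedInRace', 'race_2009_01')]
```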

Load an OWL axiom into RDFox

To load the OWL reasoning rules into RDFox using the RDFox REST API, follow these steps:

Download the axioms.ttl file onto the RDFox instance:

wget https://aws-neptune-customer-samples.s3.amazonaws.com/reasoning-blog/axioms.ttl

Issue the following curl command to load the OWL axioms RDF file into RDFox (we need the SSH tunnel to be running for this command to work):

Data in RDF graphs can be separated into named graphs for contextual representation. The following OWL axiom is loaded into a named graph http://www.oxfordsemantic.tech/f1demo/axiomsGraph. The following screenshot shows the axioms.ttl file contents.

When loading OWL axioms as .ttl files (as we demonstrate here), you must issue a PATCH HTTP request to RDFox to scan a named graph (http://www.oxfordsemantic.tech/f1demo/axiomsGraph) and load any axioms from it:

Load a Datalog axiom into RDFox

Reasoning based on OWL ontologies has its limitations. What if we want to create a link between a :Result and the corresponding :Qualifying node? In that case, it’s not enough to ensure that they have the same :Driver or the same :Race; they need to share both.

RDFox also supports Datalog rules to offer more advanced reasoning than OWL. In Datalog, we can create a link between race results and their qualifying rounds by adding the following rule to our graph:

To load Datalog reasoning rules into RDFox using the RDFox REST API, follow these steps:

Download the rule1.dlog file to the RDFox instance:

Issue the following curl command to load the Datalog file rule1.dlog into RDFox:

The following screenshot shows the Datalog axiom loaded into RDFox:

Now that one OWL axiom and one Datalog rule are successfully loaded into RDFox, you can load some data into Neptune to be reasoned upon.
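For comparison with the OWL case, the following Python sketch performs the two-key join that the Datalog rule expresses: a Result and a Qualifying node are linked only when both their driver and their race match. The predicate names, including `hasQualifying`, are hypothetical, and the rule in the comment is only an approximation of RDFox Datalog syntax, not the contents of rule1.dlog.

```python
from collections import defaultdict

Triple = tuple[str, str, str]

# Roughly the shape of an RDFox Datalog rule for this join (predicate names hypothetical):
#   [?res, :hasQualifying, ?q] :-
#       [?res, rdf:type, :Result], [?q, rdf:type, :Qualifying],
#       [?res, :driver, ?d], [?q, :driver, ?d],
#       [?res, :race, ?r], [?q, :race, ?r] .


def link_results_to_qualifying(facts: set[Triple]) -> set[Triple]:
    """Join Result and Qualifying nodes that share BOTH the same driver and the same race."""
    def objects(pred: str) -> dict[str, str]:
        # Assumes each node has at most one driver and one race
        return {s: o for s, p, o in facts if p == pred}

    driver, race = objects("driver"), objects("race")
    of_type = defaultdict(set)
    for s, p, o in facts:
        if p == "type":
            of_type[o].add(s)

    # Index qualifying nodes by their (driver, race) key
    qualifying_by_key = defaultdict(set)
    for q in of_type["Qualifying"]:
        if q in driver and q in race:
            qualifying_by_key[(driver[q], race[q])].add(q)

    return {
        (res, "hasQualifying", q)
        for res in of_type["Result"]
        if res in driver and res in race
        for q in qualifying_by_key.get((driver[res], race[res]), ())
    }


facts = {
    ("result_1", "type", "Result"), ("result_1", "driver", "driver_a"), ("result_1", "race", "race_1"),
    ("qual_1", "type", "Qualifying"), ("qual_1", "driver", "driver_a"), ("qual_1", "race", "race_1"),
    ("qual_2", "type", "Qualifying"), ("qual_2", "driver", "driver_a"), ("qual_2", "race", "race_2"),
    ("qual_3", "type", "Qualifying"), ("qual_3", "driver", "driver_b"), ("qual_3", "race", "race_1"),
}
print(link_results_to_qualifying(facts))  # {('result_1', 'hasQualifying', 'qual_1')}
```

Only qual_1 matches: qual_2 shares the driver but not the race, and qual_3 shares the race but not the driver.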

Load RDF data into Neptune to be reasoned upon

For this part of the process, you need to be able to issue HTTP requests to your Neptune cluster. For more information, refer to Using the HTTP REST endpoint to connect to a Neptune DB instance.
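A minimal sketch of such a request using only the Python standard library: it builds, but does not send, a form-encoded POST to the cluster's `/sparql` endpoint, using the `update` field for SPARQL UPDATE and `query` for queries. The cluster endpoint and S3 object URL are placeholders, and the sketch assumes IAM database authentication is disabled on the cluster; with IAM enabled, requests must be signed with Signature Version 4.

```python
from urllib.parse import urlencode
from urllib.request import Request


def neptune_sparql_request(cluster_endpoint: str, form_field: str, text: str,
                           port: int = 8182) -> Request:
    """Build (but do not send) a POST to Neptune's SPARQL HTTP endpoint.

    form_field is "update" for SPARQL UPDATE (such as LOAD) and "query" for queries.
    """
    if form_field not in ("update", "query"):
        raise ValueError("form_field must be 'update' or 'query'")
    return Request(
        f"https://{cluster_endpoint}:{port}/sparql",
        data=urlencode({form_field: text}).encode("utf-8"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Hypothetical S3 object URL; substitute the data file from the post's S3 folder
load = "LOAD <https://s3.amazonaws.com/example-bucket/f1-data.ttl>"
req = neptune_sparql_request("my-cluster.cluster-abc.eu-west-1.neptune.amazonaws.com", "update", load)
# urllib.request.urlopen(req) would send it; run it from a host inside the Neptune VPC
```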

In a graph notebook, issue the following SPARQL UPDATE LOAD query to load some data from Amazon S3 into your Neptune cluster:

Query Neptune for new inferred facts

Now that the data is loaded, you can use SPARQL 1.1 Federated Query to see the newly inferred data in our Neptune SPARQL query result.

Neptune supports SPARQL 1.1 Federated Query, which means you can run a SPARQL query against Neptune that, in turn, delegates part of that query to RDFox.

With SPARQL 1.1 Federated Query, we can retrieve all the original data from Neptune and combine it with the newly inferred facts and data from RDFox.
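As an illustration of the shape of such a query, the following sketch composes one in Python: the inferred `participatedInRace` pattern is sent to RDFox through a `SERVICE` clause, and the rest is evaluated in Neptune. The namespace, the `surname` predicate, and the function name are hypothetical; `service_url` plays the role of the `<SERVICE_URL>` placeholder and must be reachable without basic authentication, which is why the guest role was set up earlier.

```python
def federated_participants_query(service_url: str, race_iri: str) -> str:
    """Compose a query that Neptune runs, delegating the inferred part to RDFox via SERVICE.

    The prefix IRI and the :surname predicate are hypothetical placeholders.
    """
    return f"""PREFIX : <http://example.org/f1/>
SELECT ?driver ?surname WHERE {{
  # Inferred by RDFox, so this pattern is evaluated at the remote endpoint
  SERVICE <{service_url}> {{
    ?driver :participatedInRace <{race_iri}> .
  }}
  # Explicit data stored in Neptune
  ?driver :surname ?surname .
}}"""


print(federated_participants_query(
    "http://rdfox.internal:80/datastores/default/sparql",
    "http://example.org/f1/race/2009_1",
))
```

The resulting text can be pasted into a `%%sparql` cell of the graph notebook, or sent as the `query` form field of a POST to the cluster's `/sparql` endpoint.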

See the result from our OWL axiom

Issue a query to get all drivers who participated in the Australian Grand Prix 2009, replacing <SERVICE_URL> with the complete RDFox URL:

This query response is only possible because of the OWL axiom previously loaded into RDFox.

See the result from our Datalog axiom

Issue a query to find all of Lewis Hamilton’s race results and their corresponding qualifying round results:

This query response is only possible because of the Datalog rule previously loaded.

Advanced features of Datalog

Datalog lets you use a multitude of additional features, such as the following:

Negation – You can test for the absence of a pattern, and any facts inferred will automatically be retracted if that pattern suddenly appears in the future

Aggregation – You can divide all your matches into groups and then apply aggregate functions to these groups to find the following:

The number of elements

Their sum

Their multiplicative product

Their maximum or minimum

Mathematical operations – Datalog allows you to perform a wide range of numerical operations in your BINDs and FILTERs, including all deterministic SPARQL functions and more:

Comparisons (>, <, =, !=, <=, >=)

Addition, subtraction, multiplication, and division

Exponentiation, roots, and logarithms

Maximums and minimums

Absolute values and rounding

Trigonometric and hyperbolic functions

Statistical functions

String manipulation – You can use concatenation, replacement, regular expression matching, substring extraction, or measure the length of your string

Date and time calculations – You can use the xsd:duration type to add or subtract a given number of seconds, days, months, or years from a date-time literal or find out how much time passed between two points
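Negation is the feature whose behavior is least obvious, so here is a rough Python illustration of it. The rule and the `position` and `status` predicates are hypothetical, and the sketch simply recomputes inferences from scratch; RDFox instead updates its materialized facts incrementally as data changes.

```python
Triple = tuple[str, str, str]


def flag_results_without_position(facts: set[Triple]) -> set[Triple]:
    """Negation: a Result with NO recorded position is flagged (hypothetical rule)."""
    results = {s for s, p, o in facts if p == "type" and o == "Result"}
    with_position = {s for s, p, _ in facts if p == "position"}
    return {(r, "status", "NoPositionRecorded") for r in results - with_position}


facts = {("result_1", "type", "Result")}
print(flag_results_without_position(facts))  # {('result_1', 'status', 'NoPositionRecorded')}

# When the missing pattern appears, recomputing retracts the inferred fact
facts.add(("result_1", "position", "3"))
print(flag_results_without_position(facts))  # set()
```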

For a full set and more details, refer to the RDFox reasoning documentation.

Let’s look at an example of a Datalog aggregation reasoning rule. Formula 1 scoring systems have changed a lot throughout the years, so a curious fan might want to run a query to calculate the number of drivers who were awarded points in each race, using the following Datalog rule:

Datalog can perform many more complex inferences. In this example, you could also use Datalog to compute any of the following:

The team member relationship between drivers and all the constructors they ever worked with

The teammate relationship between drivers who raced for the same constructor at the same time

The win count metric, to measure how many times a driver has won

The win percentage for each driver

The streak length for each winning result, to check how long a driver’s streak had been so far

The improvement score for each result, to see how much the driver improved between their starting position and their finishing position

The most recent race for each driver
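Several of these, as well as the points aggregation described earlier, are group-and-aggregate computations. The following Python sketch shows equivalent logic over hypothetical (driver, race, position, points) rows, assuming one row per driver per race; in RDFox you would express these as Datalog aggregation rules rather than application code.

```python
from collections import Counter, defaultdict

# Hypothetical rows: (driver, race, finishing position, points awarded)
Row = tuple[str, str, int, float]


def drivers_with_points_per_race(rows: list[Row]) -> dict[str, int]:
    """Group by race and count distinct drivers awarded more than zero points.

    Races where nobody scored do not appear in the result.
    """
    scorers = defaultdict(set)
    for driver, race, _position, points in rows:
        if points > 0:
            scorers[race].add(driver)
    return {race: len(drivers) for race, drivers in scorers.items()}


def win_stats(rows: list[Row]) -> dict[str, tuple[int, float]]:
    """Per driver: (win count, win percentage of races entered)."""
    entries = Counter(driver for driver, _race, _pos, _pts in rows)
    wins = Counter(driver for driver, _race, pos, _pts in rows if pos == 1)
    return {d: (wins[d], 100.0 * wins[d] / n) for d, n in entries.items()}


rows = [("a", "r1", 1, 25), ("b", "r1", 2, 18), ("c", "r1", 11, 0),
        ("a", "r2", 3, 15), ("b", "r2", 1, 25), ("c", "r2", 2, 18)]
print(drivers_with_points_per_race(rows))  # {'r1': 2, 'r2': 3}
print(win_stats(rows))  # {'a': (1, 50.0), 'b': (1, 50.0), 'c': (0, 0.0)}
```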

Visit the RDFox website or blog to learn more about Datalog in RDFox and its various use cases.

Clean up

To clean up the environment, follow these steps in order:

Delete your Neptune instances. You can either use the AWS CLI or an AWS SDK to call the DeleteDBCluster API, or navigate to the Neptune console and delete each instance in your cluster, selecting each instance in turn and choosing Actions, Delete.

Delete the CloudFormation stack for your Neptune-to-Neptune Streams consumer application. If the Neptune-to-Neptune Streams consumer application was created using AWS CloudFormation as described, you can tear down your stack, including all its components such as the Lambda function and the DynamoDB table, by following the guide Deleting a stack on the AWS CloudFormation console.

Delete the CloudFormation stack for the RDFox instance. If the RDFox stack was created using AWS CloudFormation, you can tear down your RDFox instance, along with all the components that were provisioned for your VPC, by following the guide Deleting a stack on the AWS CloudFormation console.

After the stack has been deleted, open the Amazon EC2 console, choose Elastic Block Store, Volumes, and delete the volume called <your-stack-name>-rdfox-server-directory.

Conclusion

Now you can integrate RDFox with Neptune, and combine the unique features and strengths of both. You can take advantage of the high availability of Neptune along with Datalog and OWL reasoning to build sets of rules that work together to materialize new insights.

Semantic reasoning enables you to derive facts about your graph with far less stored data and far simpler queries. You can retrospectively add rules to assert new facts, without having to change or add new data. Simply write or edit the rules that govern your world, and your SPARQL queries will return the inferred facts.

These examples showcase how powerful reasoning can be and will hopefully give you some ideas for how you can use it with your graph. You can use similar principles to expand upon known information in any domain of knowledge, such as detecting fraud or inferring relationships between people in a social graph.

A fraud graph reasoning example

Consider a fraud graph that contains billions of transactions. We could write a reasoning rule that says: “Any transactions that take place within 10 seconds of each other should be flagged as a potential risk.”

Reasoning will happen at write time, so you can be notified immediately of the potentially fraudulent activity.

Now consider that you want to change that rule: “Any transactions that take place within 5 minutes of each other, from different IP addresses, should be flagged as a potential risk.”

Reasoning will re-compute the facts, allowing you to act promptly, according to your new world view, without changing the explicitly persisted RDF data.
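The two versions of the fraud rule can be sketched as a single windowed pairwise check. This is a hypothetical Python illustration that runs as a batch over a list of transactions rather than at write time; the `Transaction` fields and function names are assumptions, the windows are treated as inclusive, and, as in the text, transactions are compared globally rather than per account.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    timestamp: datetime
    ip_address: str


def flag_pairs(transactions: list[Transaction], window: timedelta,
               condition: Callable[[Transaction, Transaction], bool] = lambda a, b: True) -> set[str]:
    """Flag every transaction that has a partner within `window` satisfying `condition`."""
    txs = sorted(transactions, key=lambda t: t.timestamp)
    flagged: set[str] = set()
    for i, a in enumerate(txs):
        for j in range(i + 1, len(txs)):
            b = txs[j]
            if b.timestamp - a.timestamp > window:
                break  # sorted by time, so no later transaction can fall inside the window
            if condition(a, b):
                flagged.update((a.tx_id, b.tx_id))
    return flagged


def rule_v1(txs: list[Transaction]) -> set[str]:
    """Original rule: any two transactions within 10 seconds of each other."""
    return flag_pairs(txs, timedelta(seconds=10))


def rule_v2(txs: list[Transaction]) -> set[str]:
    """Revised rule: within 5 minutes of each other AND from different IP addresses."""
    return flag_pairs(txs, timedelta(minutes=5), lambda a, b: a.ip_address != b.ip_address)


t0 = datetime(2024, 1, 1, 12, 0, 0)
txs = [
    Transaction("tx1", t0, "10.0.0.1"),
    Transaction("tx2", t0 + timedelta(seconds=5), "10.0.0.1"),
    Transaction("tx3", t0 + timedelta(seconds=120), "10.0.0.2"),
    Transaction("tx4", t0 + timedelta(seconds=1000), "10.0.0.2"),
]
print(sorted(rule_v1(txs)))  # ['tx1', 'tx2']
print(sorted(rule_v2(txs)))  # ['tx1', 'tx2', 'tx3']
```

Swapping `rule_v1` for `rule_v2` changes the flagged set without touching the transaction data, which is the point the example makes.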

A social graph reasoning example

Consider a social graph that contains only parent-child relationships. With reasoning, you can have rules that say: “My child’s child is my grandchild” and “My parent’s child is my sibling.”

Because of reasoning, you don’t have to store explicit data about siblings and grandchildren; it’s enough to store the relationships between parents and children.

You could then add new rules to detect cousins, aunts and uncles, great-grandparents, and more, without adding any new data. The knowledge in your graph grows with every rule you add, while the explicit graph does not grow at all.
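The family rules, including a cousin rule added later, can be sketched in Python as derivations from (parent, child) pairs alone. The function name and example names are hypothetical. The sibling rule as literally stated would make everyone their own sibling, so the sketch excludes that case.

```python
from collections import defaultdict


def infer_family(parent_of: set[tuple[str, str]]) -> dict[str, set[tuple[str, str]]]:
    """Derive relationships from (parent, child) pairs only; no other facts are stored."""
    children = defaultdict(set)
    for parent, child in parent_of:
        children[parent].add(child)

    # "My child's child is my grandchild"
    grandchild = {(gp, gc) for gp, c in parent_of for gc in children.get(c, ())}

    # "My parent's child is my sibling" (excluding yourself)
    sibling = {(a, b) for kids in children.values() for a in kids for b in kids if a != b}

    # A rule added later, with no new data: children of siblings are cousins
    cousin = {(x, y) for a, b in sibling
              for x in children.get(a, ()) for y in children.get(b, ()) if x != y}

    return {"grandchild": grandchild, "sibling": sibling, "cousin": cousin}


rels = infer_family({("ann", "bob"), ("ann", "cat"), ("bob", "dan"), ("cat", "eve")})
print(sorted(rels["grandchild"]))  # [('ann', 'dan'), ('ann', 'eve')]
print(sorted(rels["cousin"]))      # [('dan', 'eve'), ('eve', 'dan')]
```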

About the Authors

Charles Ivie is a Senior Graph Architect with the Amazon Neptune team at AWS. He has been designing, implementing and leading solutions using knowledge graph technologies for over ten years.

Diana Marks is a Knowledge Engineer at Oxford Semantic Technologies, the company behind RDFox. She has a degree in Mathematics from University of Oxford and a background in software development.
