As organizations work to modernize their traditional applications to an event-driven, serverless model, a question that comes up frequently is how the object-relational mapping (ORM) layer should be managed. Packaging it with AWS Lambda functions increases its size and adds a cognitive burden on the development team to track. In addition, many organizations have requirements to maintain a strict separation of duties between the database team and the application developers.

In this post, we discuss how developers can migrate their monolithic applications to a serverless stack using Amazon API Gateway and Lambda while continuing to use SQLAlchemy, a popular Python ORM. We also discuss how separation of duties between the database and the development team can be achieved.

The companion source code for this post can be found on GitHub.

Solution overview

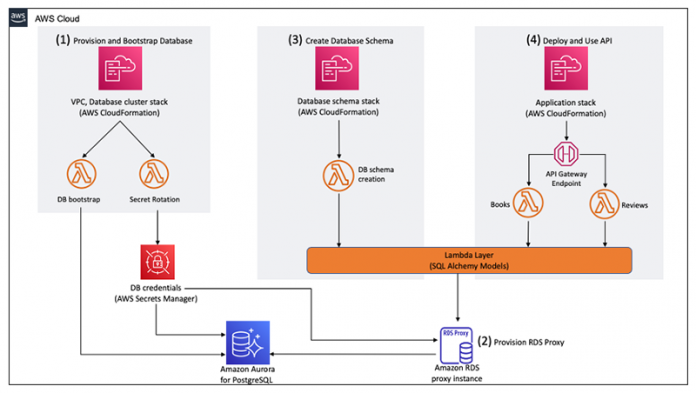

In this solution, the database schema is created and shared via code with the application layer. The application layer, which consists of multiple serverless functions, imports and uses the same schema objects. This provides separation of duties and also helps the application development team focus on their core deliverables. The following diagram shows the AWS CloudFormation stacks and the order in which we deploy them.

We complete the following steps:

First, we provision an Amazon Aurora PostgreSQL-Compatible Edition cluster based on the recommended best practices of the AWS Well-Architected Framework.

Then we deploy a proxy with Amazon RDS Proxy. RDS Proxy is a fully managed service that handles an application’s access to its database. It provides connection pooling, multiplexing, and graceful failover by maintaining client connections and queuing them. It enables scaling beyond database connection limits and manages bursts of connections and requests from applications.

The third step is publishing a Lambda layer of the ORM objects. A Lambda layer is an archive that contains, in this case, additional Python libraries and our database definition code.

Finally, a sample application called Bookstore is deployed.

The following diagram shows the application infrastructure. It offers two REST endpoints, /book and /review, which allows the user to store and retrieve books and their reviews. This application uses API Gateway to manage the routes, and each route triggers a Lambda function. API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. The Lambda functions inherit the ORM objects from the layer. Due to the stateless nature of Lambda functions, the ORM connects to RDS Proxy instead of directly to the database. This allows RDS Proxy to pool and multiplex the connections to the database. This separation of duties allows the application developers to focus solely on the business logic and helps accelerate the product development lifecycle.

Prerequisites

To deploy this solution, you need the following:

An AWS account

The latest version of the AWS Command Line Interface (AWS CLI) configured with permissions to deploy to the AWS account

The AWS Serverless Model (SAM) CLI

Python 3.8

A local copy of the companion code

Deploy a relational database

Amazon Relational Database Service (Amazon RDS) makes it easy to set up, operate, and scale a relational database in the cloud. It offers a variety of popular database engines to choose from, including Amazon Aurora, PostgreSQL, MySQL, and MariaDB. SQLAlchemy is compatible with all these engines. For this post, we provision an Aurora PostgreSQL database.

To deploy the database for our sample application, follow the instructions in Deploy an Amazon Aurora PostgreSQL DB cluster with recommended best practices using AWS CloudFormation. This solution follows AWS best practices and uses AWS Secrets Manager to manage and rotate the database credentials. It also provisions a bastion host, which we use later to validate the database setup.

Provision an RDS proxy

RDS Proxy pools connections from the applications and shares the database connections. This is especially important when it comes to modern serverless architectures, which can have a large number of connections opening and closing at a high rate, thereby exhausting the database compute and memory resources. RDS Proxy manages that for us and improves the database efficiency and application scalability. On Aurora databases, the proxy reduces failover times by up to 66%. RDS Proxy also integrates with Secrets Manager to simplify the database credential management.

On the AWS CloudFormation console, choose the Aurora database stack.

On the Resources tab, search for AuroraDBCluster.

Note the value in the Physical ID column.

On the same Resources tab, search for secrets.

The database credentials are stored in the secret. RDS Proxy uses this secret to connect to the database.

Choose the link for AuroraMasterSecret.

You’re redirected to Secrets Manager.

Note the secret ARN to use later.

On the AWS CloudFormation console, choose Create stack.

Select Upload a template file.

Upload rds_proxy/template.yaml from the cloned source repository.

Choose Next.

For Stack name, enter a name (for example, sob-rds-proxy).

For AuroraDBClusterId, enter the Aurora DB cluster ID you copied earlier.

For AuroraDBSecretArn, enter the ARN you copied earlier.

For AuroraVPCStackName, enter the name of the Aurora database VPC CloudFormation stack. (This stack was deployed with the initial Aurora DB cluster.)

For ProxyName, enter a name for this RDS proxy.

Choose Next.

Review the parameter values you set.

Acknowledge the creation of AWS Identity and Access Management (IAM) resources.

Choose Create stack.

After the stack is created, navigate to the Amazon RDS console and choose Proxies.

You can see the newly created RDS proxy.

Choose the name to see additional details.

We’re interested in the target groups. The target group embodies the RDS DB instance or Aurora cluster that the proxy can connect to. For an Aurora cluster, by default the target group is associated with all the DB instances in that cluster. That way, the proxy can connect to whichever Aurora DB instance is promoted to be the writer instance in the cluster. The RDS DB instance associated with a proxy, or the Aurora cluster and its instances, are called the targets of that proxy.

Choose the default target group to check the status of the connection to the Aurora cluster.

You can see that the connection was successful.

The RDS proxy is now ready to be used by our Lambda functions.

Create the database schema

The database schema is provisioned using the db_schema Lambda function. We add two utility source files to the Lambda layer in order to encapsulate SQLAlchemy components and make them reusable across the business logic Lambda functions:

bookstore_orm_objects.py – This file holds the SQLAlchemy ORM objects and mappings (Book and Review). These objects are used to create the database schema as well as perform CRUD operations on the database.

bookstore_utils.py – This file provides utility functions to handle the SQLAlchemy session and engine components.

Browse to the db_schema directory inside the source code you cloned earlier.We deploy this Lambda function using the AWS Serverless Model (SAM) CLI. We use AWS SAM because it simplifies the management of the Lambda function and its layers. This template requires the following parameter values:

Stack name – A descriptive name for this CloudFormation stack, such as dbschema-stack.

AuroraVPCStackName – The name of the Aurora database VPC CloudFormation stack. (This stack was deployed with the initial Aurora DB cluster.)

AuroraDBSecretName – The name of the Aurora database primary secret, such as dev/aurora-pg/aurora-db.

AuroraRDSProxyStackName – The name of the RDS Proxy CloudFormation stack (sob-rds-proxy).

AWS SAM offers a guided deployment that provides an interactive deployment process.

Use the following code, and replace the user input placeholders with your own information

Let’s validate the deployment of the database schema.

On the Secrets Manager console, browse to the database secret.

Choose Retrieve secret value.

Here you can see all the connection details.

To validate the schema was set up correctly, we connect to the bastion host that was created as part of the Aurora database stack. It’s preconfigured to allow connections to the database.

Log in to the database using the following command (replace the user input placeholders with the values in Secrets Manager):

Now you can connect to the database and list the tables.

Deploy the Bookstore application

Now we’re ready to deploy the Bookstore application. Like the Lambda layer, we use AWS SAM to simplify the deployment process.

Navigate to the bookstore directory inside the source code you cloned earlier.

This template requires the following parameter values:

Stack name – A descriptive name for this CloudFormation stack, such as bookstore-stack.

AuroraVPCStackName – The name of the Aurora database VPC CloudFormation stack. (This stack was deployed with the initial Aurora DB cluster.)

AuroraDBSecretName – The name of the Aurora database primary secret, such as dev/aurora-pg/aurora-db.

AuroraRDSProxyStackName – The name of the RDS Proxy CloudFormation stack (sob-rds-proxy).

SimpleBookstoreDBSchemaStackName – The name of the Simple Bookstore DB schema CloudFormation stack (dbschema-stack).

This project uses Lambda functions that depend on libraries deployed to a Lambda layer. So, the first step is to make sure these libraries are installed properly in the Lambda layer. Execute the commands below in a sandbox environment similar to your Lambda function’s environment (e.g., a docker container). This is required as package psycopg2-binary is OS-dependent.From your sandbox, type:

Check directory python/ to make sure the libraries have been installed properly.

Use the AWS SAM guided deployment again and provision the application:

Copy the BookStoreAPIEndpoint from the outputs and load up your preferred API client, such as Postman.

Let’s start off by retrieving a list of books. Send a GET request to /book.

This returns an empty list.

Add a book by issuing a PUT request to /book.

Run the GET command again.This time, we get an array of books back.

Finally, we can use the Bastion host again to validate the contents of the book table.

Clean up

To avoid incurring future charges, delete all the CloudFormation stacks. For more information about pricing, see Amazon Aurora Pricing, Amazon RDS Proxy pricing, Amazon API Gateway pricing and AWS Lambda Pricing.

Delete all the CloudFormation stacks one at a time in the following order:

Bookstore application stack

DB schema stack

RDS Proxy stack

Aurora PostgreSQL Database stack

Delete the Amazon Simple Storage Service (Amazon S3) bucket and its contents used by the AWS SAM CLI.

Conclusion

In this post, we discussed how using Aurora PostgreSQL, RDS Proxy and Lambda layers allows Python developers to re-platform their application on a modern serverless stack without giving up on their existing SQLAlchemy objects. This solution also allows organizations to maintain a separation of duties between the database specialists and the application developers. For instance, the database team becomes responsible for providing the database and the RDS Proxy endpoint, and also bootstrapping the database with the correct schema. Meanwhile, the application team can focus exclusively on the business logic and launching new features for customers.

You can easily adapt the ideas in this post to fit your needs. Using Lambda layers simplifies the packaging and usage of the ORM objects. We encourage you to extend the solution for different software stacks and relational databases.

The companion source code for this post can be found on GitHub. As always, AWS welcomes feedback. Please submit comments or questions in the comments section.

About the authors

Marcilio Mendonca is a Sr. Solutions Developer in the Global Solutions Prototyping Team at Amazon Web Services. In the past years, he has been helping AWS customers to design, build and deploy modern applications on AWS leveraging VMs, containers, and Serverless architectures. Prior to joining AWS, Marcilio was a Software Development Engineer with Amazon. He also holds a PhD in Computer Science. You can find him on twitter at @marciliomendonc.

Srijit Mitra is a Solutions Architect based in Toronto, Canada. He is passionate about helping startups leverage AWS to bring their disruptive ideas to fruition.

Read MoreAWS Database Blog