Amazon Lex provides automatic speech recognition (ASR) and natural language understanding (NLU) technologies to transcribe user input, identify the nature of their request, and efficiently manage conversations. Lex lets you create sophisticated conversations, streamline your user experience to improve customer satisfaction (CSAT) scores, and increase containment in your contact centers.

Natural, effective customer interactions require that the Lex virtual agent accurately interprets the information provided by the customer. One scenario that can be particularly challenging is capturing a street address during a call. For example, consider a customer who has recently moved to a new city and calls in to update their street address for their wireless account. Even a single United States zip code can contain a wide range of street names. Getting the right address over the phone can be difficult, even for human agents.

In this post, we’ll demonstrate how you can use Amazon Lex and Amazon Location Service to provide an effective user experience for capturing a user’s address by voice or text.

Solution overview

For this example, we’ll use an Amazon Lex bot that provides self-service capabilities as part of an Amazon Connect contact flow. When the user calls in on their phone, they can ask to change their address, and the bot will ask them for their customer number and their new address. In many cases, the new address will be captured correctly on the first try. For more challenging addresses, the bot may ask them to restate their street name, spell their street name, or repeat their zip code or address number to capture the correct address.

Here’s a sample user interaction to model our Lex bot:

IVR: Hi, welcome to ACME bank customer service. How can I help? You can check account balances, order checks, or change your address.

User: I want to change my address.

IVR: Can you please tell me your customer number?

User: 123456.

IVR: Thanks. Please tell me your new zip code.

User: 32312.

IVR: OK, what’s your new street address?

User: 6800 Thomasville Road, Suite 1-oh-1.

IVR: Thank you. To make sure I get it right, can you tell me just the name of your street?

User: Thomasville Road.

IVR: OK, your new address is 6800 Thomasville Road, Suite 101, Tallahassee Florida 32312, USA. Is that right?

User: Yes.

IVR: OK, your address has been updated. Is there anything else I can help with?

User: No thanks.

IVR: Thank you for reaching out. Have a great day!

As an alternative approach, you can capture the whole address in a single turn, rather than asking for the zip code first:

IVR: Hi, welcome to ACME bank customer service. How can I help? You can check account balances, order checks, or change your address.

User: I want to update my address.

IVR: Can you please tell me your customer number?

User: 123456.

IVR: Thanks. Please tell me your new address, including the street, city, state, and zip code.

User: 6800 Thomasville Road, Suite 1-oh-1, Tallahassee Florida, 32312.

IVR: Thank you. To make sure I get it right, can you tell me just the name of your street?

User: Thomasville Road.

IVR: OK, your new address is 6800 Thomasville Road, Suite 101, Tallahassee Florida 32312, US. Is that right?

User: Yes.

IVR: OK, your address has been updated. Is there anything else I can help with?

User: No thanks.

IVR: Thank you for reaching out. Have a great day!

Solution architecture

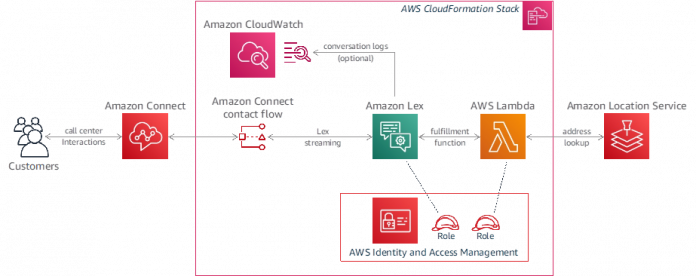

We’ll use an Amazon Lex bot integrated with Amazon Connect in this solution. When the user calls in and provides their new address, Lex uses automatic speech recognition to transcribe their speech to text. Then, it uses an AWS Lambda fulfillment function to send the transcribed text to Amazon Location Service, which performs address lookup and returns a normalized address.

As part of the AWS CloudFormation stack, you can also create an optional Amazon CloudWatch Logs log group for capturing Lex conversation logs, which can be used to create a conversation analytics dashboard to visualize the results (see the post Building a business intelligence dashboard for your Amazon Lex bots for one way to do this).

How it works

This solution combines several techniques to create an effective user experience, including:

Amazon Lex automatic speech recognition technology to convert speech to text.

Integration with Amazon Location Service for address lookup and normalization.

Lex spelling styles, to implement a “say-spell” approach when voice inputs are not clear (for example, ask the user to say their street name, and then if necessary, to spell it).

The first step is to make sure that the required slots have been captured.

To do this, we prompt the user for their zip code and street address using the Lex ElicitSlot dialog action. The elicit_slot_with_retries() function prompts the user based on a set of configurable prompts.
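As a rough illustration of the ElicitSlot step, here is a minimal sketch of how such a function might build a Lex V2 response. The response shape (sessionState, dialogAction, messages) follows the Lex V2 Lambda response format, but the function signature, the PROMPTS table, the slot names, and the retry counter stored in session attributes are all assumptions made for this example, not the code deployed by the stack.

```python
# Hypothetical prompt table; slot names and wording are illustrative only.
PROMPTS = {
    "ZipCode": [
        "Please tell me your new zip code.",
        "Sorry, I didn't catch that. What is your five-digit zip code?",
    ],
    "StreetAddress": [
        "OK, what's your new street address?",
        "Sorry, could you repeat your street address?",
    ],
}


def elicit_slot_with_retries(intent, session_attributes, slot_name):
    """Build a Lex V2 ElicitSlot response, moving to the next prompt on each retry."""
    counter_key = f"retries_{slot_name}"  # assumed naming scheme for the counter
    attempt = int(session_attributes.get(counter_key, "0"))
    prompts = PROMPTS[slot_name]
    # Reuse the last prompt once the configured list is exhausted.
    prompt = prompts[min(attempt, len(prompts) - 1)]
    # Lex session attribute values must be strings.
    attributes = {**session_attributes, counter_key: str(attempt + 1)}
    return {
        "sessionState": {
            "sessionAttributes": attributes,
            "dialogAction": {"type": "ElicitSlot", "slotToElicit": slot_name},
            "intent": intent,
        },
        "messages": [{"contentType": "PlainText", "content": prompt}],
    }
```

Keeping the retry count in session attributes means the Lambda function itself stays stateless between turns.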

The handler also uses a helper function, parse_address.parse(), that converts spoken numbers into digits (for example, it converts “sixty eight hundred” to “6800”).
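To make the number conversion concrete, the following is a small stand-in for that kind of helper. It is not the parse_address module from the solution. The function name spoken_to_digits and its rules are assumptions: it handles digit-by-digit input (“one oh one”) and simple cardinal phrases (“sixty eight hundred”), and rejects anything else.

```python
# Word tables for a simplified spoken-number converter (illustrative only).
_ONES = ("zero one two three four five six seven eight nine ten eleven twelve "
         "thirteen fourteen fifteen sixteen seventeen eighteen nineteen").split()
UNITS = {word: value for value, word in enumerate(_ONES)}
UNITS["oh"] = 0  # callers often say "oh" for zero
_TENS = "twenty thirty forty fifty sixty seventy eighty ninety".split()
TENS = {word: 10 * i for i, word in enumerate(_TENS, start=2)}


def spoken_to_digits(text):
    """Convert a spoken number phrase to a digit string."""
    words = text.lower().replace("-", " ").split()
    # Digit-by-digit input such as "one oh one" is concatenated, not summed.
    if len(words) > 1 and all(w in UNITS and UNITS[w] < 10 for w in words):
        return "".join(str(UNITS[w]) for w in words)
    total = 0    # completed thousands
    current = 0  # value accumulated since the last "thousand"
    for word in words:
        if word in UNITS:
            current += UNITS[word]
        elif word in TENS:
            current += TENS[word]
        elif word == "hundred":
            current = max(current, 1) * 100
        elif word == "thousand":
            total += max(current, 1) * 1000
            current = 0
        elif word == "and":
            continue
        else:
            raise ValueError(f"unrecognized number word: {word!r}")
    return str(total + current)
```

For example, spoken_to_digits("sixty eight hundred") returns "6800", and spoken_to_digits("one oh one") returns "101". Mixed forms such as “sixty eight oh one” are outside what this sketch handles.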

Then, we send the user’s utterance to Amazon Location Service and inspect the response. We discard any entries that don’t have a street, a street number, or have an incorrect zip code. In cases where we have to re-prompt for a street name or number, we also discard any previously suggested addresses.
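The lookup and filtering described above might look roughly like the following sketch. The boto3 call search_place_index_for_text and the Place fields (Label, AddressNumber, Street, PostalCode) come from the Amazon Location Service Places API. The function names, the place index name passed in, the five-character zip comparison, and the rejected_labels parameter are assumptions made for illustration.

```python
def search_places(text, index_name, max_results=5):
    """Query an Amazon Location Service place index.

    Requires boto3, AWS credentials, and an existing place index.
    """
    import boto3  # imported lazily so filter_candidates() can run without AWS access

    client = boto3.client("location")
    response = client.search_place_index_for_text(
        IndexName=index_name,
        Text=text,
        FilterCountries=["USA"],  # ISO 3166 three-letter country code
        MaxResults=max_results,
    )
    return response["Results"]


def filter_candidates(results, zip_code, rejected_labels=()):
    """Keep only places with a street, a street number, and the expected zip code."""
    kept = []
    for result in results:
        place = result.get("Place", {})
        if not place.get("Street") or not place.get("AddressNumber"):
            continue
        # Compare only the first five characters in case the postal code
        # comes back in an extended (ZIP+4 style) form.
        if place.get("PostalCode", "")[:5] != zip_code:
            continue
        # Skip addresses that were already suggested in an earlier turn.
        if place.get("Label") in rejected_labels:
            continue
        kept.append(place)
    return kept
```

Separating the network call from the filter keeps the filtering rules easy to unit test against canned responses.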

Once we have a resolved address, we confirm it with the user.
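One way to express the confirmation step is a Lex V2 ConfirmIntent dialog action, as in this minimal sketch. The function name confirm_address, the suggested_address session attribute, and the prompt wording are assumptions. The only Location Service field it relies on is the Label of the resolved place.

```python
import json


def confirm_address(intent, session_attributes, place):
    """Ask the caller to confirm the resolved address with a ConfirmIntent action."""
    # Remember the suggestion (as a JSON string, since session attribute
    # values must be strings) so it can be saved or discarded next turn.
    attributes = {**session_attributes, "suggested_address": json.dumps(place)}
    message = f"OK, your new address is {place['Label']}. Is that right?"
    return {
        "sessionState": {
            "sessionAttributes": attributes,
            "dialogAction": {"type": "ConfirmIntent"},
            "intent": intent,
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }
```

On the next turn, the intent's confirmation state tells the handler whether the caller accepted or rejected the suggestion.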

If we don’t get a resolved address back from the Amazon Location Service, or if the user says the address that we suggested wasn’t right, then we re-prompt for some additional information, and try again. The additional information slots include:

StreetName: slot type AMAZON.StreetName

SpelledStreetName: slot type AMAZON.AlphaNumeric (using Amazon Lex spelling styles)

StreetAddressNumber: slot type AMAZON.Number

The logic to re-prompt is controlled by the next_retry() function, which consults a configurable list of actions to try, RETRY_ACTIONS.

The next_retry() function will try these actions in order. You can modify the sequence of prompts by changing the order in the RETRY_ACTIONS list. You can also configure different prompts for scenarios where Amazon Location Service doesn’t find a match, versus when the user says that the suggested address wasn’t correct. As you can see, we may ask the user to restate their street name, and failing that, to spell it using Amazon Lex spelling styles. We refer to this as a “say-spell” approach, and it’s similar to how a human agent would interact with a customer in this scenario.
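The retry sequence can be sketched as an ordered list plus a small dispatcher. The names RETRY_ACTIONS and next_retry() come from the post, but the structure of each entry, the prompt wording, the reason argument, and the retry_index session attribute are assumptions made for this example. The slotElicitationStyle field is how a Lex V2 response requests a spelling style such as SpellByLetter.

```python
# Assumed shape: one entry per retry step, tried in list order.
RETRY_ACTIONS = [
    {"slot": "StreetName", "style": "Default",
     "no_match": "To make sure I get it right, can you tell me just the name of your street?",
     "incorrect": "Sorry about that. Can you tell me just the name of your street?"},
    {"slot": "SpelledStreetName", "style": "SpellByLetter",
     "no_match": "Let's try spelling it. Please spell your street name.",
     "incorrect": "Sorry about that. Please spell your street name."},
    {"slot": "StreetAddressNumber", "style": "Default",
     "no_match": "What is the number of your street address?",
     "incorrect": "Sorry about that. What is the number of your street address?"},
]


def next_retry(intent, session_attributes, reason):
    """Return an ElicitSlot response for the next retry step, or None when none remain.

    reason is "no_match" (the lookup found nothing) or "incorrect"
    (the caller rejected the suggested address).
    """
    index = int(session_attributes.get("retry_index", "0"))
    if index >= len(RETRY_ACTIONS):
        return None  # the caller decides the fallback, such as an agent transfer
    action = RETRY_ACTIONS[index]
    attributes = {**session_attributes, "retry_index": str(index + 1)}
    return {
        "sessionState": {
            "sessionAttributes": attributes,
            "dialogAction": {
                "type": "ElicitSlot",
                "slotToElicit": action["slot"],
                "slotElicitationStyle": action["style"],
            },
            "intent": intent,
        },
        "messages": [{"contentType": "PlainText", "content": action[reason]}],
    }
```

Because the order lives in data rather than in branching logic, reordering the say-spell sequence only means reordering the list.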

To see this in action, you can deploy it in your AWS account.

Prerequisites

You can use the CloudFormation link that follows to deploy the solution in your own AWS account. Before deploying this solution, you should confirm that you have the following prerequisites:

An available AWS account where you can deploy the solution.

Access to the following AWS services:

Amazon Lex

AWS Lambda, for integration with Amazon Location Service

Amazon Location Service, for address lookup

AWS Identity and Access Management (IAM), for creating the necessary policies and roles

CloudWatch Logs, to create log groups for the Lambda function and optionally for capturing Lex conversation logs

CloudFormation to create the stack

An Amazon Connect instance (for instructions on setting one up, see Create an Amazon Connect instance).

The following AWS Regions support Amazon Lex, Amazon Connect, and Amazon Location Service: US East (N. Virginia), US West (Oregon), Europe (Frankfurt), Asia Pacific (Singapore), Asia Pacific (Sydney), and Asia Pacific (Tokyo).

Deploying the sample solution

Sign in to the AWS Management Console in your AWS account, and select the following link to deploy the sample solution:

This will create a new CloudFormation stack.

Enter a Stack name, such as lex-update-address-example. Enter the ARN (Amazon Resource Name) for the Amazon Connect instance that you’ll use for testing the solution. You can keep the default values for the other parameters, or change them to suit your needs. Choose Next, and add any tags that you may want for your stack (optional). Choose Next again, review the stack details, select the checkbox to acknowledge that IAM resources will be created, and then choose Create stack.

After a few minutes, your stack will be complete, and include the following resources:

A Lex bot, including a published version with an alias (Development-Alias)

A Lambda fulfillment function for the bot (BotHandler)

A CloudWatch Logs log group for Lex conversation logs

Required IAM roles

A custom resource that adds a sample contact flow to your Connect instance

At this point, you can try the example interaction above in the Lex V2 console. You should see the sample bot with the name that you specified in the CloudFormation template (e.g., update-address-bot).

Choose this bot, choose Bot versions in the left-side navigation panel, choose Version 1, and then choose Intents in the left-side panel. You’ll see the list of intents, as well as a Test button.

To test, select the Test button, select Development-Alias, and then select Confirm to open the test window.

Try “I want to change my address” to get started. This will use the UpdateAddressZipFirst intent to capture an address, starting by asking for the zip code, and then asking for the street address.

You can also say “I want to update my address” to try the UpdateAddress intent, which captures an address all at once with a single utterance.

Testing with Amazon Connect

Now let’s try this with voice using a Connect instance. A sample contact flow was already configured in your Connect instance:

All you need to do is set up a phone number, and associate it with this contact flow. To do this, follow these steps:

Launch Amazon Connect in the AWS Console.

Open your Connect instance by selecting the Access URL, and logging in to the instance.

In Dashboard, select View phone numbers.

Select Claim a number, choose a country from the Country drop-down, and choose a number.

Enter a Description, such as “Example flow to update an address with Amazon Lex”, and select the contact flow that you just created.

Choose Save.

Now you’re ready to call in to your Connect instance to test your bot using voice. Just dial the number on your phone, and try some US addresses. To try the zip-code-first approach, say “change my address”. To try the single-turn approach, say “update my address”. You can also just say, “my new address is”, followed by a valid US address.

But wait… there’s more

Another challenging use case for voice scenarios is capturing a user’s email address. This is often needed for user verification purposes, or simply to let the user change their email address on file. Lex has built-in support for email addresses using the AMAZON.EmailAddress built-in slot type, which also supports Lex spelling styles.

Using a “say-spell” approach for capturing email addresses can be very effective, and since the approach is similar to the user experience in the street address capture scenarios that we described above, we’ve included it here. Give it a try!

Clean up

To avoid incurring ongoing charges, clean up the resources created by the CloudFormation template when you’re done using the bot. To do this, delete the CloudFormation stack.

Conclusion

Amazon Lex offers powerful automatic speech recognition and natural language understanding capabilities that can be used to capture the information needed from your users to provide automated, self-service functionality. Capturing a customer’s address via speech recognition can be challenging due to the range of names for streets, cities, and towns. However, you can integrate Amazon Lex with Amazon Location Service to look up the correct address based on the customer’s input. You can incorporate this technique in your own Lex conversation flows.

About the Author

Brian Yost is a Senior Technical Program Manager on the AWS Lex team. In his spare time, he enjoys mountain biking, home brewing, and tinkering with technology.
