In this post, we deep dive into using AWS Database Migration Service (AWS DMS) to load data to Amazon Redshift and discuss how to optimize data loading.

In a world where data is always growing and larger datasets need to be processed, it’s important to use the right tool for the right job. Amazon Redshift uses a combination of machine learning (ML) and SQL to analyze structured and semi-structured data across data warehouses, operational databases, and data lakes. Some of the key challenges faced by organizations include the following:

Consolidating datasets into Amazon Redshift while also keeping up to date with all the changes

Configuring parameters for optimizing loading data into Amazon Redshift

Solution overview

AWS DMS support a wide range of sources and targets. AWS DMS uses a replication instance or AWS DMS Serverless, which is the compute resource that runs the replication software. You create a source and target connection to tell AWS DMS where to extract data from and where to load it. Next, you schedule a task that runs on this server to move your data.

There are three types of replication tasks on AWS DMS:

Migrate existing data (full load) – The task migrates existing data based on table and schema filters and stops after it’s loaded. This is a one-time migration.

Change data capture (CDC) – The task migrates ongoing changes after you have data on your target database.

Migrate existing data and replicate ongoing changes (full load and CDC) – The task migrates existing data and then updates the target database based on changes to the source database.

During data migration, AWS DMS reads the data from the source database and creates a series of CSV files. For full load operations, AWS DMS reads from source tables using simple select queries and creates CSV files for each table separately on the replication instance. AWS DMS uses the AWS SDK to copy the CSV files into an Amazon Simple Storage Service (Amazon S3) bucket in your account. AWS DMS then uses the COPY command in Amazon Redshift to copy data from the CSV files in Amazon S3 to an appropriate table in Amazon Redshift. During the CDC phase, AWS DMS uses batch apply mode as default for Amazon Redshift as target, instead of transaction apply mode.

For data migration, your Redshift cluster must be in the same AWS account and AWS Region as the AWS DMS replication instance. This is required because AWS DMS reads data from Amazon S3 to Amazon Redshift in the same Region. For more details on these requirements, refer to Prerequisites for using an Amazon Redshift database as a target for AWS Database Migration Service.

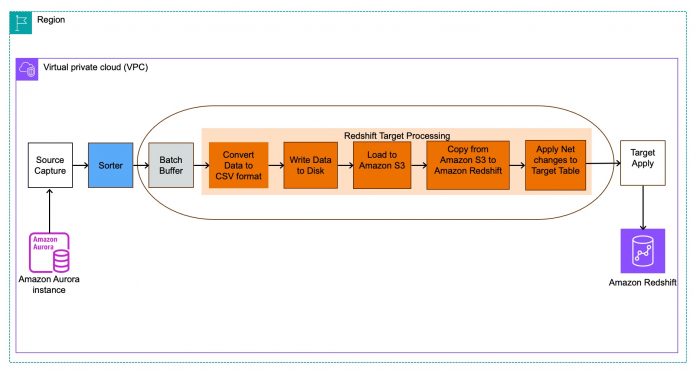

In the case of data warehouses, commits can be quite expensive to process, and transactional mode can no longer meet the demands and performance needed. To mitigate this impact, AWS DMS uses batch apply, where changes are processed in the following way:

AWS DMS reads change events from the transaction logs of the source database.

AWS DMS has an internal component called Sorter that keeps track of the events in commit order on the source database.

AWS DMS creates a net changes table on the target database (for example, awsdms_changes000000000XXXX).

The target accumulates events until a specific time has passed or batch size has reached a certain size. Then AWS DMS calculates net changes on the replication instance and creates the net changes in CSV files.

The net table data (CSV file) is stored in an S3 bucket.

AWS DMS copies the net table data into the Amazon Redshift net changes table (for example, awsdms_changes000000000XXXX).

Operations from the net changes table are then run as DELETE, INSERT, and UPDATE statements on the Amazon Redshift as batch.

The following diagram illustrates this process.

In this post, we use Amazon Aurora MySQL-Compatible Edition as a source; however, the strategies and best practices defined here can be used for other sources with Amazon Redshift as a target using AWS DMS. With Zero ETL now generally available, we can also explore this option for Amazon Aurora MySQL as source.

Prerequisites

To follow along with this post, you should have familiarity with the following AWS Services:

AWS DMS

Amazon Redshift

Best practices for loading data into a Redshift cluster

Consider the following best practices when loading your data into a Redshift cluster:

Make sure that the Redshift tables have primary keys. When using AWS DMS for data migration to Amazon Redshift, it’s recommended to have primary keys on the target tables. AWS DMS uses batch apply mode for ongoing replication. If the target table doesn’t have primary keys, then the inserts will go in batch apply mode, but updates and deletes will be applied one by one, which is bad for performance. Also note that it’s best practice to set the primary key as the sort key in the target table.

It’s recommended to either set target table preparation mode to TRUNCATE_BEFORE_LOAD or DO_NOTHING. The reason is that when AWS DMS creates tables for Amazon Redshift targets, it creates a table with the distribution key by default and no sort keys. For example, the following table is created by AWS DMS on the target when we set the table preparation mode as DROP and CREATE. To inspect the table pre-created by AWS DMS, we can use the following view available on GitHub. We can then recreate the table with the appropriate distribution key and sort key.

Because AWS DMS works in batch apply, you can adjust the following settings to reduce the number of commits on the target:

BatchApplyTimeoutMin – Sets the minimum amount of time in seconds that AWS DMS waits between each application of batch changes. The default value is 1.

BatchApplyTimeoutMax – Sets the maximum amount of time in seconds that AWS DMS waits between each application of batch changes before timing out. The default value is 30.

BatchApplyMemoryLimit – Sets the maximum amount of memory in MB to use for preprocessing in batch optimized apply mode. The default value is 500.

During full load, by default AWS DMS creates 32 MB CSV files for data transfer with a default of 10 threads. If you’re migrating a large dataset, increasing the endpoint settings of maxFileSize and fileTranferUploadStream will increase the full load speed.

You can use Amazon Redshift workload management (WLM) to manage workloads. You can use the WLM queue to define multiple query queues to route queries to the appropriate queues at runtime. If other parallel extract, transform, and load (ETL) queries are running, AWS DMS can run slower and performance is impacted. For these scenarios, you have to properly configure your WLM properties to influence performance during the replication operations. Refer to Manage your workloads better using Amazon Redshift Workload Management to understand the different options available and choosing auto compared to manual WLM.

Ensure that there are no locks or blocking sessions on the database. The following query helps in detecting locks:

Use the following multithreaded settings for full load and CDC:

ParallelLoadThreads – Specifies the number of concurrent threads that AWS DMS uses during a full load. The default is 0 and the maximum is 32.

ParallelLoadBufferSize – Specifies the maximum data record requests while using parallel load threads. The default is 100 and the maximum is 1000.

ParallelApplyThreads – Specifies the number of concurrent threads that AWS DMS uses during CDC. The default is 0 and the maximum is 32.

ParallelApplyBufferSize – Specifies the maximum data record requests while using parallel apply threads. The default is 100 and the maximum is 1000.

Check for Amazon Redshift Advisor recommendations and make changes appropriately.

To ensure that the COPY traffic from Amazon S3 to the Redshift cluster doesn’t traverse through the internet, set up enhanced VPC routing.

As mentioned previously, AWS DMS uses batch apply. In case there is a conflict while applying the changes, AWS DMS applies the changes one by one. Refer to How can I troubleshoot why Amazon Redshift switched to one-by-one mode because a bulk operation failed during an AWS DMS task for more information.

Perform benchmarking tests in your lower environment to understand what works best for your environment. To provide some stats, we performed benchmarking using sysbench. We created 500 tables on the source, each with 1 million records and observed the following:

For a task with default configuration, the full load completion time was around 30 minutes on a dms.r5.4xlarge instance.

For a one-time load, by increasing the full load task setting MaxFullLoadSubTasks (which sets the maximum number of tables to load in parallel) to 49, we were able to reduce the completion time by almost 50% (16.0117 minutes).

By using ParallelLoadThreads as shown in the following code, the completion time was reduced by 30% (21.171 minutes):

By setting MaxFullLoadSubTasks to 49 and using ParallelLoadThreads, we were able to reduce the completion time by 76% (7.88 minutes).

Note that increasing MaxFullLoadSubTasks and ParallelLoadThreads will cause the IOPS and CPU utilization to spike on the AWS DMS replication instance, so ensure that you monitor and scale the storage and the replication instance class appropriately. For more details, refer to Optimizing AWS DMS performance.

In the next section, we dive deep into a customer use case where we discuss some of the settings we’ve discussed in this post.

Customer case study

In this use case, a healthcare customer migrated from MicroStrategy to Amazon QuickSight. QuickSight reads the data from their Redshift cluster, which receives data from multiple Aurora MySQL clusters. To support the migration, they used AWS DMS by creating over 400 tasks. They faced continuous issues with target latency, frequent AWS DMS task failures, and Amazon Redshift resource-heavy utilization. They were looking for a way to stabilize their AWS DMS implementation.

The following diagram shows their initial setup.

Some of the challenges that the customer faced with this setup included the following:

The initial full load for most tasks was more than 24 hours, so CDC was lagging 3–4 days behind and wasn’t able to catch up in the CDC phase

Because there were many clusters involved, some tables needed to be prioritized in order to meet the SLA of the downstream systems

They had to manage the maintenance window of the source, AWS DMS replication instance, and Redshift cluster

On task failure or restart, the changes were written to disk instead of memory, resulting in high latency

They had to manage DDL changes on the source

In the next section, we discuss some of the changes that were proposed.

Proposed changes to address pain points

To optimize for full load, we increased the resources on the source, AWS DMS replication instance, and the target, and then increased the following parameters:

MaxFullLoadSubTasks – We increased this from the default 8 to 25.

CommitRate – This indicates the maximum number of records that can be transferred together. We increased the commit rate to 50,000.

To prioritize certain tables, we set up load-order in the table mapping. Note that load-order is applicable for table initialization. The load of a successive table won’t wait for a previous table load to complete if MaxFullLoadSubTasks is greater than 1.

To optimize for parallel load during full load, we tuned the following parameters:

We tuned the following change process settings to optimize the changes applied during CDC. We chose those values after conducting several tests and monitoring the latency on the AWS DMS task. Although the default settings can be a good starting point for most AWS DMS tasks, we recommend assessing your AWS DMS task and monitoring the latency and perform the changes in an iterative manner to see what works best for your environment.

We were noticing a timeout that was causing sporadic increase in latency. We identified this behavior using Amazon CloudWatch metrics and increased the timeout on the Redshift cluster. The following snippet was captured in the CloudWatch logs:

The command to increase the timeout is as follows:

ALTER USER dmsuser SESSION TIMEOUT 72000;

The following diagram shows the customer’s final setup.

Conclusion

In this post, we discussed how AWS DMS loads data into Amazon Redshift during a full load and CDC, and we shared some best practices on optimizing data loading. We also shared a customer case study and some of their learnings.

To familiarize yourself more with AWS DMS, refer to Getting started with AWS Database Migration Service. Share your thoughts in the comments section on how this approach worked for you or your customers.

About the Authors

Felipe Gregolewitsch is a Senior DB Migration Specialist SA with Amazon Web Services and SME for Amazon RDS core services. With more than 14 years of experience in database migrations covering projects in South America and Asia/Pacific, Felipe works on enabling customers on their migration or replication journeys from multiple engines and platforms like relational, data lakes, and data warehouses by leveraging options ranging from native tools up to AWS DMS.

Suchindranath Hegde is a Data Migration Specialist Solutions Architect at Amazon Web Services. He works with our customers to provide guidance and technical assistance on data migration to the AWS Cloud using AWS DMS.

Prabhu Ayyakkannu is a Data Migration Specialist Solutions Architect at Amazon Web Services. He works on challenges related to data migrations and works closely with customers to help them realize the true potential of AWS DMS.

Bipin Nair is a Cloud Support DBA with Amazon Web Services and Subject Matter Expert for AWS DMS. He has over a decade of experience in working with Oracle databases and AWS relational databases. At AWS, he works with customers to handle a variety of migration scenarios and assist them in troubleshooting issues related to AWS DMS.

Read MoreAWS Database Blog