In this post, we compare the high availability (HA) and disaster recovery (DR) features of Amazon Aurora with those of Oracle, with a focus on the Aurora disk subsystem and how this key innovation allows Amazon Aurora Global Database to deliver performance and availability.

Data is increasingly seen as a corporate asset, and safeguarding this asset is a key focus for many businesses. When that data lives in a database, the vendors of these systems provide methods and services that let customers protect their data for both HA and DR. These services have focused on a strategy of producing an exact copy of the source database, sending it across the network to a destination database, and then applying changes to that destination. Because database technologies are designed to be compatible with a broad range of hardware and storage options, they can only meet HA and DR requirements through the database engine. All of them suffer from a broad range of implementation complexities, because all of them were developed in an on-premises world prior to the development of cloud platforms.

The Amazon Aurora product team took a cloud-native approach when designing and building what has become a modern relational database platform. The key innovation that this team developed was the disk subsystem, which allows Aurora to implement HA and DR across the globe in a way that lets implementers merely check a box. Amazon Aurora Global Database gives a cluster the ability to replicate data asynchronously to multiple AWS Regions with low latency and no impact on performance.

One of the players in the relational database space is Oracle, and the product that implements the traditional strategy described earlier for its database is called Oracle Data Guard. To provide the best HA and DR, Oracle recommends using two additional “at cost” database options called Active Data Guard and Real Application Clusters (RAC).

Oracle Active Data Guard Far Sync design

When traditional relational database vendors went to solve the HA and DR problems their customers were having, they did it with the components they could control. These were the database processes running on the servers and the network interfaces they used to communicate. They actively ignored the disk systems that their data were maintained on because they had no way of controlling that system between servers and had to completely rely on the storage vendors if they were to use that as a method of recovery or availability. Amazon Aurora has a shared storage architecture that allows data to be independent from the DB instances in the cluster. This means that you can add a new DB instance to the cluster quickly, because Aurora does not need to create a new copy of the table data. Instead, the new DB instance can simply connect to the shared volume that already contains all the data. Similarly, you can remove a DB instance from the cluster without affecting the data stored in the cluster. This helps to improve the flexibility and scalability of the database.

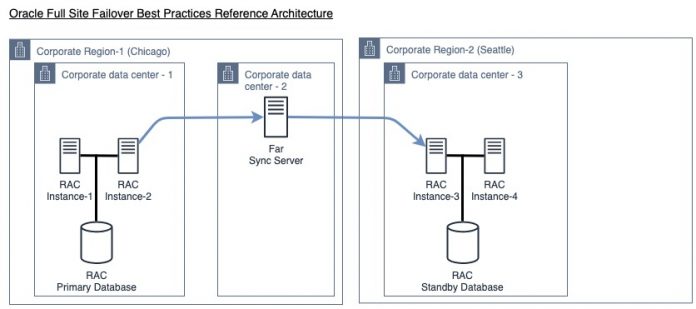

The Oracle Active Data Guard option has a reference architecture that shows database administrators and system engineers how to configure HA and DR across two or more databases located on different physical sites. This is known as a full site failover configuration, and it is the only configuration that addresses the failure of a site in a protective way that allows for full data recovery. The following high-level diagram shows the primary database, the secondary database, and the synchronization server that is recommended to ensure that, in the case of a complete site failure, the last transactions sent out by the primary can be applied to the secondary server.

This design is known as Active Data Guard Far Sync, which is a licensed feature of Oracle Database Enterprise Edition. The Far Sync design is intended to enable replication of data to another site without impacting the performance of the primary cluster. Keep in mind that this configuration makes each commit on the primary wait for the Far Sync instance to acknowledge the redo synchronously, which can degrade performance on the primary depending on how far apart the two servers are. Active Data Guard with Far Sync requires a Far Sync instance in the same region as the primary database to help reduce the chance of data loss in the event of a disaster. This Far Sync instance is a shell database without any data files; it simply receives redo synchronously from the primary (typically in Maximum Availability mode) and forwards it asynchronously to the standby database in a different region.
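To get a feel for why distance matters with synchronous redo transport, the following back-of-the-envelope sketch estimates the minimum network round trip added to each commit. It assumes signals travel through optical fiber at roughly 200,000 km per second and ignores routing, switching, and the time the Far Sync instance needs to persist the redo, so real numbers will be higher. The function name and the sample distances are illustrative and do not come from Oracle documentation.

```python
# Rough lower bound on the network round trip a synchronous commit waits for.
# Assumption: light in optical fiber covers about 200,000 km per second.
FIBER_KM_PER_SECOND = 200_000.0


def min_sync_round_trip_ms(distance_km: float) -> float:
    """Return the minimum round-trip time in milliseconds over distance_km of fiber."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    one_way_seconds = distance_km / FIBER_KM_PER_SECOND
    return 2 * one_way_seconds * 1000.0


if __name__ == "__main__":
    # Hypothetical distances between the primary and the Far Sync instance.
    for km in (10, 100, 1000):
        print(f"{km:>5} km: at least {min_sync_round_trip_ms(km):.2f} ms per commit")
```

Even this optimistic bound grows linearly with distance, which is why the Far Sync instance is placed close to the primary.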

Aurora design

One of the key architectural foundations in Aurora is the separation of compute and storage. The purpose-built storage layer is distributed across three Availability Zones, maintains six copies of your data, and is distributed across hundreds to thousands of nodes depending on the size of your database. When a cluster is created, it consumes very little storage, and as the database expands, Aurora seamlessly expands the volume to accommodate the demand, up to 128 TiB. To review the architecture of the storage layer and how it works, refer to Amazon Aurora storage and reliability.
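The six-copies-across-three-AZs layout can be reasoned about with a small quorum model. The sketch below assumes two copies per Availability Zone, a write quorum of four of six copies, and a read quorum of three of six; those quorum sizes come from AWS's published descriptions of Aurora storage rather than from this post, and the class and function names are hypothetical.

```python
from dataclasses import dataclass

AZ_COUNT = 3        # from the text: storage spans three Availability Zones
COPIES_PER_AZ = 2   # six copies spread evenly over the three AZs
WRITE_QUORUM = 4    # assumption: writes need 4 of 6 copies
READ_QUORUM = 3     # assumption: reads need 3 of 6 copies


@dataclass(frozen=True)
class StorageHealth:
    can_write: bool
    can_read: bool


def storage_health(failed_azs: int = 0, extra_failed_copies: int = 0) -> StorageHealth:
    """Report whether the quorums still hold after the given failures."""
    if failed_azs < 0 or extra_failed_copies < 0:
        raise ValueError("failure counts must be non-negative")
    total = AZ_COUNT * COPIES_PER_AZ
    lost = failed_azs * COPIES_PER_AZ + extra_failed_copies
    healthy = max(total - lost, 0)
    return StorageHealth(can_write=healthy >= WRITE_QUORUM,
                         can_read=healthy >= READ_QUORUM)


if __name__ == "__main__":
    print(storage_health(failed_azs=1))                         # writes and reads continue
    print(storage_health(failed_azs=1, extra_failed_copies=1))  # reads only
```

Under these assumptions, losing an entire Availability Zone leaves the volume writable, and losing one more copy on top of that still leaves it readable.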

High Availability

When comparing the following diagram to Oracle's design, you must first understand what an Availability Zone (AZ) is in AWS. Each Availability Zone is a logical data center backed by one or more physical data centers. Availability Zones sit inside a Region, which is a named set of AWS resources in the same geographical area, and are connected to each other by low-latency links. A Region comprises at least three Availability Zones. The following design is highly scalable and durable, but it addresses only high availability, not disaster recovery. The AWS Global Infrastructure is always growing; to get an understanding of its reach, see this diagram of the footprint.

This diagram shows the highly available setup of an Amazon Aurora cluster with read replicas in two of the Availability Zones. The storage layer is separate from compute, so as the compute scales from a single writer/reader to additional readers in other AZs, the same storage is simply presented to the new compute instances.

Disaster recovery

Disaster recovery across AWS Regions is configured by enabling the global database setting, which replicates the entire cluster disk set to a different Region. This enables fast recovery, with a recovery time objective (RTO) that can be on the order of minutes, and supports up to five secondary Regions, each with the ability to host 15 read replicas.
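As a minimal sketch of what "enabling the global database setting" can look like programmatically, the helper below builds the request parameters for the Amazon RDS `CreateGlobalCluster` and `CreateDBCluster` operations. It only assembles dictionaries and makes no AWS calls. The helper name, the cluster naming scheme, and the sample identifiers are hypothetical, and a real deployment usually needs more parameters (for example, encryption and networking settings).

```python
MAX_SECONDARY_REGIONS = 5  # limit quoted in the text above


def build_global_database_requests(global_id, primary_cluster_arn, engine, secondary_regions):
    """Return (create_global_cluster params, {region: create_db_cluster params})."""
    regions = list(secondary_regions)
    if len(regions) > MAX_SECONDARY_REGIONS:
        raise ValueError(f"at most {MAX_SECONDARY_REGIONS} secondary Regions are supported")
    if len(set(regions)) != len(regions):
        raise ValueError("each secondary Region may appear only once")

    # Promotes the existing primary cluster into a new global database.
    create_global = {
        "GlobalClusterIdentifier": global_id,
        "SourceDBClusterIdentifier": primary_cluster_arn,
    }
    # One secondary cluster per Region, attached to the global database.
    secondaries = {
        region: {
            "DBClusterIdentifier": f"{global_id}-{region}",  # hypothetical naming scheme
            "Engine": engine,
            "GlobalClusterIdentifier": global_id,
        }
        for region in regions
    }
    return create_global, secondaries


if __name__ == "__main__":
    glob, secs = build_global_database_requests(
        "example-global",
        "arn:aws:rds:us-east-1:123456789012:cluster:example-primary",  # placeholder ARN
        "aurora-postgresql",
        ["eu-west-1", "ap-southeast-2"],
    )
    print(glob)
    print(secs)
```

With boto3, the first dictionary could be passed to `create_global_cluster(**params)` on an RDS client in the primary Region, and each secondary dictionary to `create_db_cluster(**params)` on a client created for that secondary Region.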

Amazon Aurora is a fully managed RDBMS, so the details of network configuration are handled for you. Both DNS failover and data replication between AZs and Regions happen without configuration or manual intervention by operators.

For a deeper explanation of the Aurora storage engine, refer to Introducing the Aurora Storage Engine.

This diagram depicts an Aurora Global Database and the supporting disk subsystems. The yellow arrows show how writes are propagated so that six copies are spread across three AZs in a Region. The orange arrows are reads being processed and sent back to the calling application. The green arrow is the cross-Region replication process, which makes sure that writes in the hosting Region are replicated to the subscribed Regions.

The next diagram shows the AWS global footprint, with currently available Regions in blue and future Regions in orange. Overlaid on this is a high-level setup of Aurora in three Regions: one primary and two that are read-only but can be promoted to primary. The green arrow shows the push mechanism used to update the secondary Regions.

Applications may additionally need to write to the database from Regions other than the primary one. Aurora supports this through a feature called write forwarding, which is available in Aurora MySQL-Compatible Edition version 2.08.1 or later. This feature allows applications to send INSERT, UPDATE, and DELETE statements to a secondary cluster, and those statements are then sent to the write endpoint on the primary cluster. This way, there is still only one writer, which ensures data consistency across all clusters in the global database. Aurora handles the cross-Region networking setup and transmits all necessary session and transactional context for each statement.

Keep in mind that applications using write forwarding may be affected by latency as the statements traverse the network. Amazon CloudWatch provides metrics for write forwarding that allow you to monitor the throughput, latency, duration, and count of statements forwarded to the writer instance.

Setting up Aurora write forwarding is straightforward and takes only a handful of commands using either the AWS Command Line Interface (AWS CLI) or the Amazon Relational Database Service (Amazon RDS) API.

For full implementation details on write forwarding, refer to Enabling write forwarding.
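As a rough illustration of the setup described above, the sketch below builds the parameters for the Amazon RDS `ModifyDBCluster` operation with `EnableGlobalWriteForwarding` set, and the session-level statement that Aurora MySQL uses to pick a read consistency level for forwarded writes. The helper names and the cluster identifier are hypothetical, and nothing here contacts AWS or a database.

```python
# Consistency levels accepted by the aurora_replica_read_consistency session variable.
VALID_READ_CONSISTENCY = ("eventual", "session", "global")


def build_enable_write_forwarding_request(secondary_cluster_id: str) -> dict:
    """Parameters for ModifyDBCluster that turn on write forwarding for a secondary cluster."""
    if not secondary_cluster_id:
        raise ValueError("secondary_cluster_id is required")
    return {
        "DBClusterIdentifier": secondary_cluster_id,
        "EnableGlobalWriteForwarding": True,
    }


def read_consistency_statement(level: str) -> str:
    """SQL a session on the secondary cluster runs before issuing forwarded writes."""
    normalized = level.lower()
    if normalized not in VALID_READ_CONSISTENCY:
        raise ValueError(f"level must be one of {VALID_READ_CONSISTENCY}")
    return f"SET aurora_replica_read_consistency = '{normalized}'"


if __name__ == "__main__":
    print(build_enable_write_forwarding_request("example-secondary"))  # placeholder name
    print(read_consistency_statement("session"))
```

With boto3, the dictionary could be passed as `modify_db_cluster(**params)` on an RDS client in the secondary Region. Stricter consistency levels make reads on the secondary wait longer for replication to catch up, so the choice is a trade-off between latency and read-after-write guarantees.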

Report offload with read replica

Similar to an Oracle Active Data Guard standby used for read activity, Aurora read replicas serve two primary purposes. First, you can issue queries to them to scale the read operations for your application. You typically do so by connecting to the reader endpoint of the cluster. That way, Aurora can spread the load for read-only connections across as many Aurora replicas as you have in the cluster. Aurora replicas also help increase availability, just as Oracle Active Data Guard does: if the writer instance in a cluster becomes unavailable, one of the Aurora replicas can be promoted to take the role of new writer. Second, you can use the reader endpoints for ETL workloads. Because Aurora read replicas access the same storage as the writer instance, periods of heavy writes have little effect on how current the data seen by readers is, so ETL products and time-sensitive queries perform as expected.

Any process that needs to run an extract, transform, and load (ETL) workload to land data in other systems can use the existing read replicas, or an ETL-only read replica endpoint can be created to support these workloads connecting to Aurora.

Oracle Active Data Guard has standby instances that support read replicas for reporting or ETL processes. These instances are merely clusters running in another location; the failover mechanism, called Net Connect, lists multiple sites for a client to communicate with. This is not the same capability as having multiple DNS-supported endpoints for various read workloads, and it requires the client to be updated rather than having the service itself redirect the client, as is the case with DNS. Aurora supports custom reader endpoints whose underlying compute can be tuned to client requirements. For example, the BI team could get more compute and the HR team less, depending on the needs of each team's workload.

To read more on read replicas and how to deploy them, refer to Working with read replicas.

The following diagram shows the separation of the cluster endpoint, which is used for write/read operations, and a reader endpoint, which can be used for reporting applications, ETL processing, or any other application that reads data. Custom reader endpoints, each with its own DNS name and set of DB instances, can be configured so that processing of data for specific use cases can be addressed. For instance, a reader endpoint for the Business Intelligence team could include instances with much higher memory, while another endpoint could be configured to serve casual queries from everyday business users.
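A custom reader endpoint like the one described above can be sketched as a request to the Amazon RDS `CreateDBClusterEndpoint` operation. The helper below only builds the parameter dictionary; its name, the endpoint identifier, and the instance identifiers are hypothetical.

```python
def build_custom_reader_endpoint_request(cluster_id, endpoint_id, member_instance_ids):
    """Parameters for CreateDBClusterEndpoint that pin a reader endpoint to chosen instances."""
    members = list(member_instance_ids)
    if not members:
        raise ValueError("at least one DB instance is required")
    return {
        "DBClusterIdentifier": cluster_id,
        "DBClusterEndpointIdentifier": endpoint_id,
        "EndpointType": "READER",
        "StaticMembers": members,  # only these instances serve this endpoint
    }


if __name__ == "__main__":
    # Hypothetical example: route BI queries to two high-memory replicas.
    print(build_custom_reader_endpoint_request(
        "example-cluster", "bi-readers", ["replica-highmem-1", "replica-highmem-2"]
    ))
```

With boto3, the dictionary could be passed as `create_db_cluster_endpoint(**params)`. Listing members explicitly keeps heavy analytical queries off the replicas that serve everyday traffic.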

Maintenance

Aurora is a managed service, so it performs maintenance on your behalf; DBAs only need to set the window in which it occurs.

The Aurora cluster has a weekly maintenance window, which is when any pending system changes are applied to the cluster. If a maintenance event is scheduled for a given week, it’s initiated during the 30-minute maintenance window you define. Aurora performs maintenance on the DB cluster’s hardware, operating system, or database engine version.
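The maintenance window is expressed as a string in the form `ddd:hh24:mi-ddd:hh24:mi` (UTC), which is the value the `PreferredMaintenanceWindow` parameter expects. The following sketch builds such a string from a start day and time, defaulting to the 30-minute length mentioned above; the helper name is hypothetical, and the service may apply further rules that are not modeled here.

```python
DAYS = ("mon", "tue", "wed", "thu", "fri", "sat", "sun")
MINUTES_PER_DAY = 24 * 60


def maintenance_window(start_day: str, start_hour: int, start_minute: int,
                       duration_minutes: int = 30) -> str:
    """Format a weekly maintenance window as ddd:hh24:mi-ddd:hh24:mi (UTC)."""
    day = start_day.lower()
    if day not in DAYS:
        raise ValueError(f"start_day must be one of {DAYS}")
    if not (0 <= start_hour < 24 and 0 <= start_minute < 60):
        raise ValueError("start time is out of range")
    if duration_minutes < 30:
        raise ValueError("the window must be at least 30 minutes long")

    start_total = DAYS.index(day) * MINUTES_PER_DAY + start_hour * 60 + start_minute
    # Wrap around the end of the week so a late Sunday window ends on Monday.
    end_total = (start_total + duration_minutes) % (7 * MINUTES_PER_DAY)
    end_day_index, remainder = divmod(end_total, MINUTES_PER_DAY)
    end_hour, end_minute = divmod(remainder, 60)
    return (f"{day}:{start_hour:02d}:{start_minute:02d}-"
            f"{DAYS[end_day_index]}:{end_hour:02d}:{end_minute:02d}")


if __name__ == "__main__":
    print(maintenance_window("tue", 4, 0))
    print(maintenance_window("sun", 23, 45))
```

The resulting string could be supplied as `PreferredMaintenanceWindow` when creating or modifying a DB cluster.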

Aurora also supports zero-downtime patching (ZDP), which attempts, on a best-effort basis, to preserve client connections throughout the Aurora upgrade process. To learn more about ZDP, see the documentation.

With Oracle Data Guard, the process for maintenance is the same as upgrading a standalone Oracle instance. The only difference is that you start with a standby server, test the server, and then upgrade the primary servers. You can then switch the primary to standby and have the upgraded standby take over. Nothing in that process is automatic, and it requires following a comprehensive manual process to perform correctly.

Backups

Aurora backs up your cluster volume automatically and retains restore data for the length of the backup retention period. Aurora backups are continuous and incremental, so you can restore to any point within the backup retention period, which is known as point-in-time recovery (PITR). With the separation of compute and storage in Aurora, there is no performance impact or interruption of database service as backup data is being written. You can specify a backup retention period of 1 to 35 days when you create or modify a DB cluster. Aurora backups are stored in Amazon Simple Storage Service (Amazon S3).
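The retention rule above can be sketched as a small check that runs before a point-in-time restore is requested. The helper below validates the target time against the retention period and builds parameters for the Amazon RDS `RestoreDBClusterToPointInTime` operation. The helper name and the identifiers are hypothetical, and the window is approximated as "now minus retention days"; the service reports the actual earliest and latest restorable times for a cluster.

```python
from datetime import datetime, timedelta, timezone


def build_pitr_request(source_cluster_id, new_cluster_id, restore_to,
                       retention_days, now=None):
    """Validate restore_to against the retention period and build the restore parameters."""
    if not 1 <= retention_days <= 35:
        raise ValueError("retention_days must be between 1 and 35")
    if restore_to.tzinfo is None:
        raise ValueError("restore_to must be timezone-aware")
    now = now or datetime.now(timezone.utc)
    earliest = now - timedelta(days=retention_days)  # approximation of the restorable window
    if not earliest <= restore_to <= now:
        raise ValueError("restore_to is outside the backup retention period")
    return {
        "SourceDBClusterIdentifier": source_cluster_id,
        "DBClusterIdentifier": new_cluster_id,  # the restore always creates a new cluster
        "RestoreToTime": restore_to,
    }


if __name__ == "__main__":
    current = datetime.now(timezone.utc)
    print(build_pitr_request("example-cluster", "example-cluster-restored",
                             current - timedelta(hours=6), retention_days=7, now=current))
```

With boto3, the dictionary could be passed as `restore_db_cluster_to_point_in_time(**params)`.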

If you want to retain a backup beyond the backup retention period, you can also take a snapshot of the data in your cluster volume. Because Aurora retains incremental restore data for the entire backup retention period, you only need to create a snapshot for data that you want to retain beyond the backup retention period. You can create a new DB cluster from the snapshot. The backup features in Aurora are similar in capabilities to Oracle RMAN.

Clones

By employing Aurora cloning, you can create a new cluster that uses the same Aurora cluster volume and utilizes the same data as the original. The process is designed to be fast and cost-effective. The new cluster with its associated data volume is known as a clone. Producing a clone is faster and more space-efficient than physically copying the data using other techniques, such as restoring a snapshot.

The use cases for creating a clone include obtaining a copy of production to run tests against, running analytics workloads, or saving a point-in-time snapshot for analysis without impacting the production system.

This diagram depicts two Aurora clusters, “A” and “B”; the original cluster A is the source of the cloned cluster B. Here we see that the primary cluster A is updated while the cloned cluster B still sees the original data. This is enabled by a copy-on-write protocol: when a change is made in one cluster, the protocol creates a new copy of the affected page and updates that cluster's pointer to it.
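The copy-on-write idea can be illustrated with a toy in-memory model. This is not how Aurora storage is implemented; it is a minimal sketch in which each volume holds a table of pointers to immutable pages, a clone copies only the pointers, and a write repoints one entry to a freshly created page. All class and method names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    """An immutable page of data; writes create a new Page instead of editing one."""
    data: str


class Volume:
    """A toy volume: a page table mapping page numbers to shared Page objects."""

    def __init__(self, page_table: dict[int, Page]):
        self._page_table = dict(page_table)  # own pointers, shared pages

    @classmethod
    def from_rows(cls, rows: dict[int, str]) -> Volume:
        return cls({number: Page(data) for number, data in rows.items()})

    def clone(self) -> Volume:
        # Copy only the pointers; no page data is duplicated at clone time.
        return Volume(self._page_table)

    def write(self, page_no: int, data: str) -> None:
        # Copy-on-write: point this volume at a new page and leave the old
        # page untouched for any other volume that still references it.
        self._page_table[page_no] = Page(data)

    def read(self, page_no: int) -> str:
        return self._page_table[page_no].data

    def shares_page_with(self, other: Volume, page_no: int) -> bool:
        return self._page_table[page_no] is other._page_table[page_no]


if __name__ == "__main__":
    cluster_a = Volume.from_rows({1: "row v1", 2: "unchanged"})
    cluster_b = cluster_a.clone()
    cluster_a.write(1, "row v2")
    print(cluster_a.read(1), "|", cluster_b.read(1))
    print(cluster_a.shares_page_with(cluster_b, 2))
```

In this model, storage grows only with the pages that diverge after the clone is taken, which is why cloning is faster and more space-efficient than a full physical copy.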

Using database cloning is a powerful way to enable blue/green application deployments where testing data or schema changes is necessary before deploying to the production system. For more information about this strategy and deployment, refer to Amazon Aurora PostgreSQL blue/green deployment using fast database cloning.

Neither Oracle Data Guard nor Oracle RAC supports database cloning of this kind; all updates to data are propagated through the query engine because Oracle systems don’t have access to the underlying disk systems their database runs on. Although Oracle does employ the terminology of “cloning,” the process entails taking a backup and restoring it on another server. This process copies the entire database and applies logs to the destination, which has to be at the same level as the source system. For more information, refer to Cloning Oracle Database and Pluggable Databases.

Summary

This post illustrated how enterprises gain substantial benefits from using Aurora and the architectural advantages of being a cloud-native relational database. The primary innovation of separate compute and storage layers has enabled superior data durability, availability, and recoverability when compared to storage-agnostic methods of capturing a base snapshot, copying that to another server, restoring the snapshot, then copying and applying the changes from the source system to the destination. Aurora addresses a comprehensive range of business requirements with a simple-to-use managed service that provides enterprises with the data protection they need.

Creating an Amazon Aurora cluster is fast and easy, so you can try these technology innovations out for yourself and test the performance and reliability. Visit the Getting started with Amazon Aurora documentation to spin up an instance, and remember to clean up after you're done testing to avoid ongoing costs.

About the authors

John Vandervliet is a Senior Customer Delivery Architect at AWS based out of Calgary, AB. He works with Enterprises across Canada on their Cloud Journey. His areas of interest are Databases, Migrations, and Security. In his spare time, he enjoys spending time with family, reading, and hiking.

Pavan Pusuluri is a Senior Database Consultant with the Professional Services team at Amazon Web Services. His passion is building scalable, highly available, and secure solutions in the AWS Cloud. His focus area is homogeneous and heterogeneous migrations of on-premises databases to Amazon RDS and Aurora PostgreSQL. Outside of work, he cherishes spending time with his family, exploring food, and playing cricket.

Read more: AWS Database Blog