This is a guest blog post by Ioanna Lytra, Data & Knowledge Engineer, and Albin Ahmeti, Data & Knowledge Engineer, at the Semantic Web Company.

According to the International Data Corporation (IDC), more data will be created in the next 3 years than in the prior 30 years combined. In an ideal world, this data would be structured, stored in one location, and easy to query. In the real world, however, we see the following:

Data comes in different types (structured, semi-structured, unstructured) and in various formats (Word documents, spreadsheets, JSON files, CSV files, and more)

Data is distributed and not accessible through a single interface

Data is disconnected and can’t be easily integrated

This situation inevitably leads to data silos: isolated pools of data that cannot be used outside the application or purpose they were created for, and that prevent an integrated view over the data.

In this post, we demonstrate how a knowledge graph can help organizations integrate data silos into one connected interface.

Our ultimate goal is to transform data into knowledge, and for this we use PoolParty Semantic Suite and Amazon Neptune.

PoolParty and Neptune can be integrated because both build on Semantic Web standards: each tool supports the Resource Description Framework (RDF) and SPARQL 1.1. This means that you can add data to Neptune and manage it in PoolParty, or vice versa.

We walk through our knowledge graph approach step by step and dive into its technical details using a human resources use case.

Use case overview

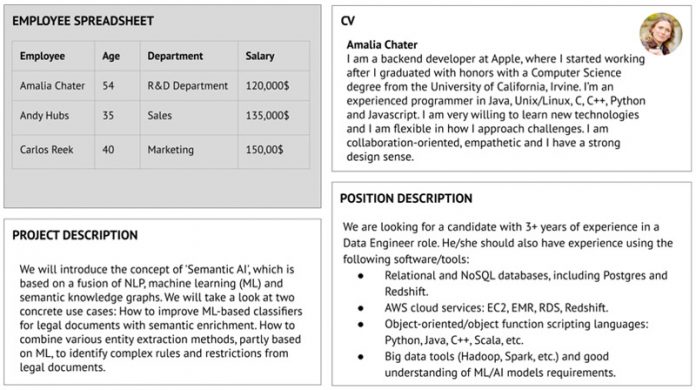

Human resources and project managers want to make the best use of their employees’ skills and experience when they build new teams for customer projects or fill internal positions. In this scenario, data is distributed, diverse, and not integrated. Traditionally, such tasks have to be performed manually, which is tedious and time-consuming. Let’s take a closer look at the data sources available in our HR use case:

Employees’ CVs are available as PDF documents in SharePoint, a document management system

Information about employees (such as age, salary, and department) is stored in spreadsheets, along with links to employee photos

The HR department keeps a record of all upcoming projects and open positions in Word documents, which are managed using SharePoint

The following figure shows the employee spreadsheet, which contains information about employees, along with excerpts of three text documents: a CV, a project description, and a position description.

We use PoolParty Semantic Suite and Neptune to demonstrate how Semantic Web technologies can help us do the following:

Extract knowledge from unstructured data

Integrate different data formats

Automate the creation of a knowledge graph

Use a knowledge graph to create AI applications

Build the knowledge graph

Building a knowledge graph involves many stakeholders and begins with defining the knowledge relevant to the organization’s domain. The ultimate purpose is to make explicit the implicit knowledge that exists within the organization: typically, you start with a taxonomy and then transform data into a knowledge graph. For more information about why and how to build knowledge graphs, check out The Knowledge Graph Cookbook.

The Jobs and Skills taxonomy provides a common vocabulary for describing a set of skills (such as operating systems, languages, and tools), job roles, company types, and educational degrees.
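PoolParty taxonomies are built on the W3C SKOS standard, so a fragment of a taxonomy like Jobs and Skills can be pictured as SKOS triples. The following Python sketch is illustrative only: the `http://example.org/jobs-skills/` namespace, the scheme and concept names, and the `skos_triples` helper are hypothetical, and labels are assumed to contain no quotes, so no escaping is done.

```python
# Sketch only: the namespace and the concept excerpt below are hypothetical.
SKOS = "http://www.w3.org/2004/02/skos/core#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
BASE = "http://example.org/jobs-skills/"

# Concept scheme -> top concept -> narrower concepts.
TAXONOMY = {
    "Skills": {"Operating Systems": ["Unix/Linux"], "Languages": ["Java", "Python"]},
    "Degrees": {"Computer Science": []},
}


def iri(label: str) -> str:
    """Mint an IRI from a label by replacing spaces and slashes with underscores."""
    return "<" + BASE + label.replace(" ", "_").replace("/", "_") + ">"


def skos_triples(taxonomy: dict[str, dict[str, list[str]]]) -> list[str]:
    """Serialize the hierarchy as N-Triples lines using SKOS classes and properties."""
    lines = []
    for scheme, top_concepts in taxonomy.items():
        s = iri(scheme)
        lines.append(f"{s} <{RDF_TYPE}> <{SKOS}ConceptScheme> .")
        for top, narrower in top_concepts.items():
            t = iri(top)
            lines += [
                f"{t} <{RDF_TYPE}> <{SKOS}Concept> .",
                f'{t} <{SKOS}prefLabel> "{top}"@en .',
                f"{t} <{SKOS}topConceptOf> {s} .",
            ]
            for child in narrower:
                c = iri(child)
                lines += [
                    f"{c} <{RDF_TYPE}> <{SKOS}Concept> .",
                    f'{c} <{SKOS}prefLabel> "{child}"@en .',
                    f"{c} <{SKOS}inScheme> {s} .",
                    f"{c} <{SKOS}broader> {t} .",
                ]
    return lines


print("\n".join(skos_triples(TAXONOMY)))
```

The hierarchy lives entirely in `skos:broader` links and scheme membership, which is what later lets a tool infer that Java belongs under Languages.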

The Jobs and Skills taxonomy provides a set of domain-specific concepts organized in a hierarchical structure, but that alone is not enough to model our domain. This is where ontologies help: they formalize the description of entities by introducing concept categories (classes), properties (attributes), and types of relationships between entities (relations), among other things.

The following screenshot shows a custom ontology in PoolParty Ontology Management covering the Jobs and Skills domain. PoolParty Corpus Analysis can help domain experts build taxonomies from scratch by suggesting relevant terms as candidate concepts, as well as new connections between concepts.

For our use case, we introduced a class named Employee to represent the employees in the HR use case. Each Employee has several attributes, such as name, age, gross salary, and job title, and is connected to other entity types, such as Tools, Technology Domains, and Languages.
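As a rough illustration of how such an ontology might look in RDF, the following sketch emits OWL and RDFS schema triples for the Employee class. The `http://example.org/ontology/` namespace and the property names (`age`, `grossSalary`, `usesTool`, and so on) are hypothetical; only the Employee class and the kinds of attributes and relations come from the use case.

```python
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
RDFS = "http://www.w3.org/2000/01/rdf-schema#"
OWL = "http://www.w3.org/2002/07/owl#"
XSD = "http://www.w3.org/2001/XMLSchema#"
EX = "http://example.org/ontology/"  # hypothetical namespace

# Attributes: datatype properties with an XSD range (names are hypothetical).
DATATYPE_PROPS = {"name": "string", "age": "integer", "grossSalary": "decimal", "jobTitle": "string"}
# Relations: object properties pointing at other entity types (names are hypothetical).
OBJECT_PROPS = {"usesTool": "Tool", "hasTechnologyDomain": "TechnologyDomain", "knowsLanguage": "Language"}


def employee_ontology() -> list[tuple[str, str, str]]:
    """Return the schema triples declaring Employee, its attributes, and its relations."""
    employee = EX + "Employee"
    triples = [(employee, RDF + "type", OWL + "Class")]
    for prop, xsd_type in DATATYPE_PROPS.items():
        triples += [
            (EX + prop, RDF + "type", OWL + "DatatypeProperty"),
            (EX + prop, RDFS + "domain", employee),
            (EX + prop, RDFS + "range", XSD + xsd_type),
        ]
    for prop, target in OBJECT_PROPS.items():
        triples += [
            (EX + target, RDF + "type", OWL + "Class"),
            (EX + prop, RDF + "type", OWL + "ObjectProperty"),
            (EX + prop, RDFS + "domain", employee),
            (EX + prop, RDFS + "range", EX + target),
        ]
    return triples


for s, p, o in employee_ontology():
    print(f"<{s}> <{p}> <{o}> .")
```

Splitting attributes (literal values) from relations (links to other entities) mirrors the distinction the paragraph above draws between properties and relationship types.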

PoolParty Corpus Analysis spots taxonomy concepts in a text corpus and suggests terms as candidate concepts, along with the existing concepts they may relate to. In addition, it indicates shadow concepts (concepts that appear together), word senses (terms whose senses are similar, ambiguous, or unambiguous in the context of the corpus and the thesaurus), and similar terms. In this example, we could add “Postgres” and “Cloud”, which are currently missing from our taxonomy.
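PoolParty's corpus analysis is far more sophisticated, but the basic idea of surfacing candidate terms can be sketched with a naive frequency count: words that appear often in the corpus yet are not covered by any taxonomy label become suggestions. This is a swapped-in toy technique, not PoolParty's algorithm, and the labels, stop words, and sample sentences are made up.

```python
import re
from collections import Counter

# Hypothetical taxonomy labels and stop words; real corpus analysis is far richer.
TAXONOMY_LABELS = {"java", "python", "unix", "linux", "backend developer", "computer science"}
STOP_WORDS = {"a", "and", "at", "for", "in", "of", "on", "the", "to", "with"}
# Every word that already occurs inside some taxonomy label.
COVERED = {word for label in TAXONOMY_LABELS for word in label.split()}


def suggest_candidates(documents: list[str], min_count: int = 2) -> dict[str, int]:
    """Count words that no taxonomy label covers and keep the frequent ones."""
    counts = Counter()
    for doc in documents:
        for token in re.findall(r"[a-z][a-z0-9+#]*", doc.lower()):
            if token not in STOP_WORDS and token not in COVERED:
                counts[token] += 1
    return {term: n for term, n in counts.items() if n >= min_count}


docs = [
    "Migrated the Java backend to Postgres in the cloud.",
    "Experience with Python, Postgres and cloud deployments.",
    "Backend developer with Unix/Linux and Java skills.",
]
print(suggest_candidates(docs))  # {'postgres': 2, 'cloud': 2}
```

A frequency threshold is the crudest possible filter; it only hints at why terms like “Postgres” and “Cloud” would stand out as gaps.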

Create links within the data

To link our data, we create mappings that define how the structured source data is transformed into the knowledge graph, based on columns, headers, and attributes.

After we have these mappings, we can transform our Excel spreadsheet into RDF data, which is a set of triples (subject, predicate, object).

The following diagram shows the semantic mappings (one-to-one) and the transformation of the Excel spreadsheet into a set of RDF triples. Column names are mapped to properties and row names to resources, which allows us to generate statements in the form of triples (subject, predicate, object); in our example, these are statements about Amalia Chater.
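The following Python sketch mimics a one-to-one column mapping, assuming the spreadsheet has been exported to CSV. The column names, cell values, and the `http://example.org/ontology/` property IRIs are hypothetical; only the employee IRI pattern comes from this post. Each mapped cell becomes one typed literal in N-Triples syntax.

```python
import csv
import io

EMPLOYEE = "http://example.org/employee/"
EX = "http://example.org/ontology/"  # hypothetical ontology namespace
XSD = "http://www.w3.org/2001/XMLSchema#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

# One-to-one mapping: spreadsheet column -> (property IRI, XSD datatype).
COLUMN_MAPPING = {
    "Age": (EX + "age", "integer"),
    "Department": (EX + "department", "string"),
    "Job Title": (EX + "jobTitle", "string"),
}


def row_to_ntriples(row: dict[str, str]) -> list[str]:
    """Map one spreadsheet row to N-Triples lines; the Name column becomes the subject IRI."""
    subject = f"<{EMPLOYEE}{row['Name'].strip().replace(' ', '_')}>"
    lines = [f"{subject} <{RDF_TYPE}> <{EX}Employee> ."]
    for column, (prop, datatype) in COLUMN_MAPPING.items():
        value = (row.get(column) or "").strip()
        if not value:
            continue  # skip empty cells instead of emitting empty literals
        escaped = value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
        lines.append(f'{subject} <{prop}> "{escaped}"^^<{XSD}{datatype}> .')
    return lines


# Hypothetical CSV export of the employee spreadsheet (values are made up).
SHEET = """Name,Age,Department,Job Title
Amalia Chater,31,Engineering,Backend Developer
"""

for row in csv.DictReader(io.StringIO(SHEET)):
    print("\n".join(row_to_ntriples(row)))
```

Names are turned into IRIs by replacing spaces with underscores, which assumes they contain no other IRI-unsafe characters.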

Link unstructured data

To link unstructured data to our structured datasets, we extract knowledge from it using PoolParty Extractor, a text mining tool that combines methods from natural language processing and machine learning.

Let’s see what PoolParty Extractor extracted from Amalia Chater’s CV:

Amalia Chater has the job role Backend Developer at the company Apple

She has a degree in Computer Science

She knows the Operating System Unix/Linux

She has programming skills in Java, C, C++, Python, and JavaScript
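PoolParty Extractor combines NLP and machine learning; a much simpler stand-in that only shows the principle of mapping text to taxonomy concepts is dictionary-based label matching. In the sketch below, the mini-taxonomy, its concept IRIs, and its scheme names are hypothetical, and the CV text is a made-up sentence built from the facts listed above. The lookaround guards keep `Java` from matching inside `JavaScript` and `C` from matching inside `C++`.

```python
import re

# Hypothetical mini-taxonomy: concept IRI -> (concept scheme, preferred + alternative labels).
T = "http://example.org/jobs-skills/"
TAXONOMY = {
    T + "Java": ("Programming Languages", ["Java"]),
    T + "C": ("Programming Languages", ["C"]),
    T + "CPP": ("Programming Languages", ["C++"]),
    T + "Python": ("Programming Languages", ["Python"]),
    T + "JavaScript": ("Programming Languages", ["JavaScript", "JS"]),
    T + "Unix_Linux": ("Operating Systems", ["Unix/Linux", "Unix", "Linux"]),
    T + "Computer_Science": ("Degrees", ["Computer Science"]),
    T + "Apple": ("Companies", ["Apple"]),
    T + "Backend_Developer": ("Job Roles", ["Backend Developer", "Back-end Developer"]),
}


def label_pattern(labels: list[str]) -> re.Pattern:
    """Match any label, longest first, but not when glued to letters, digits, '+' or '#'."""
    alternatives = "|".join(re.escape(label) for label in sorted(labels, key=len, reverse=True))
    return re.compile(rf"(?<![\w+#])(?:{alternatives})(?![\w+#])", re.IGNORECASE)


def extract_concepts(text: str) -> dict[str, int]:
    """Return {concept IRI: number of mentions} for every concept found in the text."""
    found = {}
    for concept, (_scheme, labels) in TAXONOMY.items():
        mentions = len(label_pattern(labels).findall(text))
        if mentions:
            found[concept] = mentions
    return found


cv = ("Backend Developer at Apple. BSc in Computer Science. Works on Unix/Linux "
      "with Java, C, C++, Python and JavaScript; Java is her main language.")
for concept, mentions in extract_concepts(cv).items():
    scheme = TAXONOMY[concept][0]
    print(f"{scheme:22} {concept} x{mentions}")
```

Because every match resolves to a concept IRI rather than a string, the output already carries the scheme each concept belongs to, which is the kind of grouping a semantic footprint visualizes.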

How do we know that Computer Science is a degree, that Apple is a company, and that Java, C, and Python are programming languages? Because we built our taxonomy by organizing its concepts under concept schemes and top or broader concepts. In fact, the knowledge embedded in our taxonomy tells us more than that: Apple is a corporation, which means that Amalia Chater works at a corporation, and because Amalia Chater works as a Backend Developer, she has a role in the IT domain.
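The inference described above boils down to following `skos:broader` links transitively; in SPARQL 1.1 it could be written with the property path `skos:broader+`. The following Python sketch walks a hypothetical hierarchy held in a dictionary, using labels instead of IRIs for brevity. The intermediate concept `Software Developer` and the top concept `Company Types` are assumptions, not taken from the Jobs and Skills taxonomy.

```python
# Hypothetical skos:broader links (narrower -> broader), labels instead of IRIs for brevity.
# "Software Developer" and "Company Types" are assumed levels, not taken from the post.
BROADER = {
    "Apple": "Corporation",
    "Corporation": "Company Types",
    "Backend Developer": "Software Developer",
    "Software Developer": "IT Domain",
}


def ancestors(concept: str) -> list[str]:
    """Walk skos:broader transitively, nearest ancestor first, stopping on cycles."""
    chain, seen = [], {concept}
    while concept in BROADER and BROADER[concept] not in seen:
        concept = BROADER[concept]
        seen.add(concept)
        chain.append(concept)
    return chain


def implied_categories(facts: list[tuple[str, str, str]], person: str) -> set[str]:
    """Collect every broader concept of the objects linked to a person."""
    return {a for s, _p, o in facts if s == person for a in ancestors(o)}


facts = [
    ("Amalia Chater", "worksAt", "Apple"),
    ("Amalia Chater", "hasJobRole", "Backend Developer"),
]
print(sorted(implied_categories(facts, "Amalia Chater")))
```

The facts extracted from the CV never mention “Corporation” or “IT Domain”; both come purely from the hierarchy.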

The following screenshot shows the semantic footprint of Amalia Chater’s CV. The colors indicate the concept schemes of the Jobs and Skills taxonomy in which the extracted concepts are found.

Now we just need to put all the pieces together to build our knowledge graph.

Create a knowledge graph

At this point, we have converted all our strings into things with unique identifiers. Amalia Chater, with the URI <http://example.org/employee/Amalia_Chater>, is associated with several properties and relations extracted from the Excel file (Employee data) and with a few additional relations extracted from the semantic footprint of her CV. In addition, entities related to Amalia Chater are identified by URIs that link back to the concepts in the Jobs and Skills taxonomy. This means that the “Java” found in Amalia Chater’s CV refers to the “Java” concept in the taxonomy, and it’s the same “Java” spotted in the position description of our use case.
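Because the CV and the position description point to the same concept IRIs, matching people to positions reduces to set intersection on identifiers instead of string heuristics. The sketch below ranks employees by the share of required skills they cover; the second employee (`Jane_Doe`), the `Docker` skill, and the scoring rule are hypothetical choices made for illustration.

```python
SKILL = "http://example.org/jobs-skills/"

# Hypothetical extraction results. Because CVs and the position description were annotated
# with the same taxonomy, "Java" is the identical IRI on both sides.
EMPLOYEE_SKILLS = {
    "http://example.org/employee/Amalia_Chater": {SKILL + "Java", SKILL + "Python", SKILL + "Unix_Linux"},
    "http://example.org/employee/Jane_Doe": {SKILL + "JavaScript"},
}
POSITION_SKILLS = {SKILL + "Java", SKILL + "Unix_Linux", SKILL + "Docker"}


def rank_candidates(required: set[str], employees: dict[str, set[str]]) -> list[tuple[str, float]]:
    """Rank employees by the fraction of required skill concepts they share."""
    if not required:
        return []
    scores = [(emp, len(required & skills) / len(required)) for emp, skills in employees.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


for employee, coverage in rank_candidates(POSITION_SKILLS, EMPLOYEE_SKILLS):
    print(f"{coverage:.2f}  {employee}")
```

In SPARQL, the same match would be a join on a shared skill variable between the employee and position patterns.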

To create a knowledge graph, we convert this information into a knowledge representation consisting of entities and their properties or attributes. For this, we use RDF, which represents the graph structure as triples (subject, predicate, object). The following code shows some examples of triples:

The following screenshot shows these triples visualized in the knowledge graph.

Connect to Amazon Neptune

With PoolParty Semantic Suite, you can easily configure the connection to the Neptune database instance, taking advantage of its features (availability, scalability, performance, and more). We use Neptune to store all the data created with PoolParty, including the Jobs and Skills taxonomy, Employee ontology, and HR knowledge graph.
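Neptune exposes a SPARQL 1.1 Protocol endpoint at `https://<cluster-endpoint>:8182/sparql` (8182 is the default port), which accepts form-encoded POST requests with a `query` parameter for reads and an `update` parameter for writes. The following sketch only builds such a request with the Python standard library; the cluster hostname is a placeholder, and if IAM database authentication is enabled, requests would additionally need AWS Signature Version 4 signing, which is not shown.

```python
from urllib.parse import parse_qs, urlencode
from urllib.request import Request, urlopen

# Placeholder cluster endpoint; replace with your own. 8182 is Neptune's default port.
ENDPOINT = "https://my-cluster.cluster-xxxx.us-east-1.neptune.amazonaws.com:8182/sparql"


def sparql_request(text: str, is_update: bool = False) -> Request:
    """Build (but do not send) a SPARQL 1.1 Protocol POST: 'query' for reads, 'update' for writes."""
    form = urlencode({"update" if is_update else "query": text}).encode("utf-8")
    return Request(
        ENDPOINT,
        data=form,
        method="POST",
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Accept": "application/sparql-results+json",  # result format for SELECT queries
        },
    )


req = sparql_request("SELECT (COUNT(*) AS ?n) WHERE { ?s ?p ?o }")
print(req.get_method(), req.full_url)
print(parse_qs(req.data.decode("utf-8")))
# Sending requires network access to the cluster (and SigV4 signing if IAM auth is on):
# with urlopen(req) as response:
#     print(response.read().decode("utf-8"))
```

Since this is plain SPARQL 1.1 over HTTP, any standards-compliant client, including PoolParty, can talk to the same endpoint.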

The following screenshot shows our Neptune connection configuration in PoolParty Semantic Suite.

Edit the knowledge graph

A knowledge graph should be dynamic: it is updated regularly and enriched continuously, which adds value and makes it more complete. This step is very important, and an entire governance workflow model is often built around it to ensure that different people, agents, and subject matter experts work together in harmony. This idea is central to the linked data lifecycle, in which successive iterations continuously enrich and refine the knowledge graph.

One of the steps in linked data lifecycle management is data authoring, which PoolParty supports through its GraphEditor component.

PoolParty GraphEditor is a component for data authoring, namely creating, updating, and deleting instance data. Apart from editing, GraphEditor lets you build complex conjunctive (AND) and disjunctive (OR) queries that can be combined with regular expressions. This allows people who are unfamiliar with SPARQL to build queries simply by selecting classes, properties, and attributes in the GUI.
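To make the idea of AND, OR, and regular expression conditions concrete, the following sketch composes a SPARQL SELECT query from them: consecutive triple patterns express conjunction, `UNION` expresses disjunction, and `FILTER(REGEX(...))` applies the regular expression. It is a hypothetical approximation, not the query GraphEditor actually generates, and the class and property IRIs are made up.

```python
from typing import Optional

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def build_query(
    cls: str,
    all_of: list[tuple[str, str]],
    any_of: Optional[list[tuple[str, str]]] = None,
    text_prop: Optional[str] = None,
    regex: Optional[str] = None,
) -> str:
    """Compose a SELECT query from AND conditions, OR alternatives, and a regex filter."""
    lines = ["SELECT DISTINCT ?item WHERE {", f"  ?item <{RDF_TYPE}> <{cls}> ."]
    # AND: consecutive triple patterns must all match.
    lines += [f"  ?item <{p}> <{v}> ." for p, v in all_of]
    # OR: each alternative becomes one branch of a UNION.
    if any_of:
        lines.append("  " + " UNION ".join(f"{{ ?item <{p}> <{v}> }}" for p, v in any_of))
    # Regular expression on a literal attribute, case-insensitive ("i" flag).
    if text_prop and regex:
        escaped = regex.replace("\\", "\\\\").replace('"', '\\"')
        lines.append(f"  ?item <{text_prop}> ?text .")
        lines.append(f'  FILTER(REGEX(STR(?text), "{escaped}", "i"))')
    lines.append("}")
    return "\n".join(lines)


EX = "http://example.org/ontology/"      # hypothetical
SK = "http://example.org/jobs-skills/"   # hypothetical
q = build_query(
    EX + "Employee",
    all_of=[(EX + "knowsLanguage", SK + "Java")],
    any_of=[(EX + "knowsLanguage", SK + "Python"), (EX + "knowsLanguage", SK + "CPP")],
    text_prop=EX + "name",
    regex="^a",
)
print(q)
```

This example asks for employees who know Java AND (Python OR C++) and whose name starts with “a”, the kind of combination a user would otherwise assemble by clicking.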

The following screenshot shows the visual query editor, which allows exploring knowledge graphs and creating customizable views.

The following screenshot illustrates the SPARQL queries generated by the visual query editor. Note the GRAPH keyword: query answers are bindings drawn from different named graphs, and the set of named graphs can be extended through configuration in PoolParty GraphEditor. You can plug in any named graph from Neptune, thereby expanding the dataset.
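The role of the `GRAPH` keyword can be sketched as follows: the same triple pattern is evaluated inside each named graph listed in a `VALUES` clause, and `?g` reports which graph produced each answer, so adding a graph only extends the list. The graph IRIs are hypothetical, and restricting graphs through `VALUES` is one standard SPARQL 1.1 option among several (`FROM NAMED` is another).

```python
def query_over_graphs(named_graphs: list[str], pattern: str, projection: str = "?g ?s") -> str:
    """Evaluate one pattern inside each listed named graph; ?g tells which graph answered."""
    graph_list = " ".join(f"<{g}>" for g in named_graphs)
    return (
        f"SELECT {projection} WHERE {{\n"
        f"  VALUES ?g {{ {graph_list} }}\n"
        f"  GRAPH ?g {{ {pattern} }}\n"
        f"}}"
    )


graphs = ["http://example.org/graph/hr"]  # hypothetical named graph
pattern = "?s a <http://example.org/ontology/Employee> ."
print(query_over_graphs(graphs, pattern))
# Plugging in another named graph only changes the VALUES list, not the pattern.
graphs.append("http://example.org/graph/projects")
print(query_over_graphs(graphs, pattern))
```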

In the following screenshot, we see the user-friendly interface in GraphEditor for editing specific parts of the knowledge graph.
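Behind a form-based editor, an edit typically becomes a SPARQL 1.1 Update. The following sketch shows two plausible shapes, assuming a hypothetical `jobTitle` property and named graph: an upsert that replaces a literal value, and a deletion of all triples about a resource. It is not how GraphEditor is implemented, only an illustration of the standard update forms.

```python
GRAPH_HR = "http://example.org/graph/hr"               # hypothetical named graph
AMALIA = "http://example.org/employee/Amalia_Chater"
JOB_TITLE = "http://example.org/ontology/jobTitle"     # hypothetical property


def set_literal(graph: str, subject: str, predicate: str, value: str) -> str:
    """Replace any existing value of predicate for subject with one plain literal (upsert)."""
    literal = value.replace("\\", "\\\\").replace('"', '\\"')
    # OPTIONAL keeps one solution even when no old value exists, so the INSERT still runs;
    # an unbound ?old simply makes the DELETE template produce nothing.
    return (
        f"WITH <{graph}>\n"
        f"DELETE {{ <{subject}> <{predicate}> ?old }}\n"
        f'INSERT {{ <{subject}> <{predicate}> "{literal}" }}\n'
        f"WHERE {{ OPTIONAL {{ <{subject}> <{predicate}> ?old }} }}"
    )


def delete_resource(graph: str, subject: str) -> str:
    """Remove every triple whose subject is the given resource from the named graph."""
    return f"DELETE WHERE {{ GRAPH <{graph}> {{ <{subject}> ?p ?o }} }}"


print(set_literal(GRAPH_HR, AMALIA, JOB_TITLE, "Senior Backend Developer"))
print(delete_resource(GRAPH_HR, AMALIA))
```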

Conclusions

Knowledge graphs can help you break existing data silos to explore, analyze, enrich, and get further insights from your data. Furthermore, many use cases and applications can rely on knowledge graphs, including semantic search, intelligent content recommendation, knowledge discovery and extraction, question answering, and dialogue systems, to name a few.

In this post, we saw how PoolParty Semantic Suite tools can assist subject matter experts, knowledge engineers, and data scientists in creating and managing a knowledge graph and in building Semantic AI applications on top of it. As the backend graph database, Neptune helps such Semantic AI applications achieve high performance, scalability, and availability.

To find out more about the benefits of Semantic AI applications, watch this webinar and learn everything you always wanted to know about knowledge graphs.

About the authors

Ioanna Lytra is a Data and Knowledge Engineer at Semantic Web Company. With a background in Electrical and Computer Engineering (MSc) and Computer Science (PhD), Ioanna worked on software development and software architecture projects in different domains before becoming passionate about Semantic Web technologies and knowledge graphs. Through several research and industrial projects, she has gathered more than five years of experience in applying Semantic Web technologies to natural language processing, knowledge extraction, data management, and integration problems, as well as question answering and dialogue systems.

Albin Ahmeti is a Data & Knowledge Engineer at Semantic Web Company. He holds an MSc in Computer Engineering with a focus on data integration, and a PhD in Computer Science with a focus on the Semantic Web. His PhD thesis, “Updates in the Context of Ontology-Based Data Management”, is about the interplay of SPARQL/Update and entailment regimes in the context of triple stores and virtual graphs. He has more than 10 years of experience with the Semantic Web, both theoretical, gained at several academic institutes, and practical, gained in industry. His focus areas are triple stores, (virtual) knowledge graphs, data quality, logic, and reasoning.

AWS Database Blog