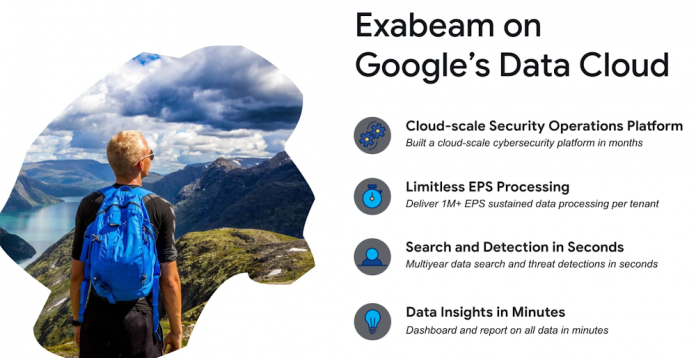

Editor’s note: In this guest blog, we have the pleasure of inviting Sanjay Chaudhary, VP of Product Management at Exabeam, to share his experiences and lessons from building real-time security insight and action capabilities on Google Cloud. Exabeam is a cybersecurity leader in SIEM and security analytics for organizations worldwide.

Today’s datasphere is opening a new fast lane for intelligent businesses, offering a tremendous advantage to organizations that can leverage it. Business leaders must address this new data with instant analysis and action, both to win in the market today and to meet customer expectations tomorrow. Last week I had the pleasure of speaking at Google Cloud Next, where I shared some lessons Exabeam learned about how Google Cloud can help create real-time insights and actions, all while removing operational complexity through unified batch and streaming analytics capabilities. Below is a summary of the key takeaways:

The best way to achieve real-time intelligence – Unified streaming and batch

This might sound counterintuitive at first, since real-time capabilities are enabled by streaming technologies. However, combining batch and streaming data allows Exabeam to automate decisions based on a complete view of real-time and historical data across all data types. Exabeam is a lean organization, so it is critical to achieve real-time analysis while also maintaining operational efficiency. This means we want to write code once and run it in both streaming and batch jobs. Before we adopted Google Cloud, this required separate technologies (for example, Apache Spark for batch processing and Apache Flink for real-time stream processing). With Google Cloud, we can write code for real-time processing on Dataflow and then use the same source code to run on BigQuery for batch jobs. We now operate with the same tool and no rewriting required, saving us significant time.
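The "write once, run on both" idea is the core of Apache Beam, the programming model Dataflow executes: a transform does not care whether its input is bounded (batch) or unbounded (streaming). The sketch below illustrates only that principle in plain Python; it is not the Beam API, and the event shape, the `score_event` rule, and its 1 MB threshold are hypothetical.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical event shape: (user, bytes_transferred).
Event = tuple[str, int]
Scored = tuple[str, float]

def score_event(event: Event) -> Scored:
    """Business logic written once: a toy anomaly score (hypothetical rule)."""
    user, nbytes = event
    # Transfers above a made-up 1 MB threshold get a higher score.
    return user, 1.0 if nbytes > 1_000_000 else 0.1

def run(source: Iterable[Event], transform: Callable[[Event], Scored]) -> Iterator[Scored]:
    """Apply the same transform whether `source` is bounded (batch) or unbounded (stream)."""
    for event in source:
        yield transform(event)

# Batch: a bounded, historical collection processed in one go.
historical = [("alice", 500), ("bob", 2_000_000)]
batch_results = list(run(historical, score_event))

# Streaming: a generator standing in for a live feed; results are consumed as they arrive.
def live_feed() -> Iterator[Event]:
    yield ("carol", 3_000_000)
    yield ("dave", 10)

stream_results = []
for result in run(live_feed(), score_event):
    stream_results.append(result)  # a real system would emit downstream immediately
```

Only the source changes between the two runs; `score_event` is shared, which is what removes the need to maintain separate batch and streaming codebases.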

Integration with machine learning at scale

Real-time intelligence is a game changer, but what’s truly revolutionary is the ability to automatically apply intelligence to the data. This ensures we continually make smart decisions based on the most recent data available. At Exabeam we ingest and process large volumes of data, feed it to our machine learning models, and then use reverse extract, transform, load (reverse ETL) to push the freshest model outputs back to the source system. Dataflow allows us to evaluate millions of events per second across hundreds of machine learning models. In our experience, no other product comes close to Dataflow in terms of performance and scale for processing machine learning jobs.
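Pushing only the "freshest" model outputs back to a source system can be pictured as a keep-latest-per-key reduction before the write-back. This is a minimal sketch, assuming each output carries an entity ID, a model name, and an event timestamp; those field names, the model names, and the row layout are hypothetical, not Exabeam's schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelOutput:
    entity: str   # hypothetical: the user or host the score is about
    model: str    # hypothetical model name
    score: float
    ts: int       # event time of the scored data, epoch seconds

def freshest_outputs(outputs: list[ModelOutput]) -> dict[tuple[str, str], ModelOutput]:
    """Keep only the most recent output for each (entity, model) pair."""
    latest: dict[tuple[str, str], ModelOutput] = {}
    for out in outputs:
        key = (out.entity, out.model)
        if key not in latest or out.ts > latest[key].ts:
            latest[key] = out
    return latest

def reverse_etl_rows(latest: dict[tuple[str, str], ModelOutput]) -> list[dict]:
    """Shape the freshest outputs as rows for a hypothetical source-system table."""
    return [
        {"entity": o.entity, "model": o.model, "score": o.score, "as_of": o.ts}
        for _, o in sorted(latest.items())  # sort by (entity, model) key for stable output
    ]

outputs = [
    ModelOutput("host-1", "lateral_movement", 0.20, ts=100),
    ModelOutput("host-1", "lateral_movement", 0.85, ts=160),  # newer, so it wins
    ModelOutput("host-1", "data_exfil", 0.10, ts=120),
]
rows = reverse_etl_rows(freshest_outputs(outputs))
```

Keying by (entity, model) lets many models score the same entity independently while the write-back still carries a single current value per model.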

Handling the unexpected

Real-time stream processing can be complex and messy, and a key challenge is handling late-arriving events. When you want to make important decisions based on events from a particular session window, some events arrive late and miss the window. Dataflow is unique in handling these unplanned situations, as it allows Exabeam to easily rescore the models to capture these late events.
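In the Beam model behind Dataflow, late data is governed by event-time windows, a watermark (the system's estimate of how far event time has progressed), and an allowed-lateness horizon; by default a window fires once when the watermark passes its end and again for each late element that still arrives within that horizon. The toy simulation below mimics that behavior for session windows on a single key. It is a sketch, not Dataflow's implementation: the gap, lateness, and watermark heuristic (max event time seen minus a fixed delay) are hypothetical, and the "model" just counts events.

```python
from dataclasses import dataclass, field

GAP = 30               # hypothetical session gap, seconds
ALLOWED_LATENESS = 60  # hypothetical allowed lateness, seconds

@dataclass
class Session:
    start: int
    end: int                                   # last event time + GAP
    events: list[int] = field(default_factory=list)
    fired: bool = False                        # has an on-time result been emitted?

def score(session: Session) -> int:
    # Toy "model": the score is the number of events in the session.
    return len(session.events)

def process(arrivals: list[int], watermark_delay: int = 10) -> list[tuple[str, int, int, int]]:
    """arrivals: event timestamps in arrival order (may be out of order)."""
    sessions: list[Session] = []
    emitted = []
    watermark = float("-inf")
    for ts in arrivals:
        # Too late: even the event's own session window has passed the lateness horizon.
        if ts + GAP + ALLOWED_LATENESS < watermark:
            emitted.append(("dropped", ts, ts + GAP, 0))
            continue
        # Start a proto-session and merge it with any overlapping session.
        merged = Session(ts, ts + GAP, [ts])
        keep = []
        for s in sessions:
            if s.start < merged.end and merged.start < s.end:
                merged = Session(min(s.start, merged.start), max(s.end, merged.end),
                                 s.events + merged.events, s.fired or merged.fired)
            else:
                keep.append(s)
        sessions = keep + [merged]
        if merged.fired:
            # A late event joined a session that already produced a result: rescore it.
            emitted.append(("late", merged.start, merged.end, score(merged)))
        watermark = max(watermark, ts - watermark_delay)
        for s in sessions:
            if not s.fired and s.end <= watermark:
                s.fired = True
                emitted.append(("on_time", s.start, s.end, score(s)))
        # Discard state for sessions whose lateness horizon has passed.
        sessions = [s for s in sessions if s.end + ALLOWED_LATENESS >= watermark]
    return emitted

results = process([0, 10, 100, 20, 200, 5])
```

Here the event at time 20 arrives after the session [0, 40) has fired, so the session is extended and rescored; the event at time 5 arrives after the horizon has passed and is dropped.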

Do more with less

Cloud transformation is not just about the massive-scale capabilities you can offer your customers; you also have to run your business profitably. For example, a massive-scale data processing pipeline in Spark might take months to mature. This has huge implications for your total cost of ownership and time to market. When we migrated from Spark to Dataflow, we saw an eighty percent reduction in lines of code. This has helped our data scientists and data engineers spend more time writing efficient code and less time managing Spark clusters.

The three most important not-so-obvious benefits of Google Cloud’s Dataflow:

Observability: Dataflow provides a UI for viewing the full pipeline and worker logs, and it reports metrics to Cloud Monitoring. This means you can track the stage of your jobs and look at the metrics for all your processing jobs, which is very convenient and provides peace of mind.

Predictable cost modeling: We build cybersecurity products and serve them to our customers through SaaS applications. Dataflow’s linear cost model is hugely beneficial, as it lets our costs scale proportionally with usage, even when running large-scale processing jobs. No cost surprises.

Multi-tenancy: Dataflow pipelines allow Exabeam to build a single pipeline and parameterize it to run differently for different tenants. This is very important for Exabeam, as it allows us to offer custom machine learning and advanced model capabilities to each of our customers.
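The multi-tenancy pattern, one pipeline definition specialized by per-tenant parameters, can be sketched as a factory that closes over a tenant's configuration. In Beam such parameters usually arrive through pipeline options; the plain-Python sketch below shows only the pattern, and `TenantConfig`, the model registry, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class TenantConfig:
    tenant_id: str          # identifies the tenant (used for routing/logging, not scoring)
    model_name: str         # which hypothetical model this tenant uses
    alert_threshold: float  # tenant-specific sensitivity

# Hypothetical model registry: each model maps a feature value to a score.
MODELS: dict[str, Callable[[float], float]] = {
    "baseline": lambda x: x,
    "strict": lambda x: x * 2.0,
}

def build_pipeline(cfg: TenantConfig) -> Callable[[list[float]], list[float]]:
    """One pipeline definition, specialized per tenant by its parameters."""
    model = MODELS[cfg.model_name]

    def run(values: list[float]) -> list[float]:
        # Keep only the values whose score crosses this tenant's threshold.
        return [v for v in values if model(v) >= cfg.alert_threshold]

    return run

acme = build_pipeline(TenantConfig("acme", "baseline", alert_threshold=5.0))
globex = build_pipeline(TenantConfig("globex", "strict", alert_threshold=5.0))
acme_alerts = acme([1.0, 3.0, 6.0])
globex_alerts = globex([1.0, 3.0, 6.0])
```

The same input produces different alerts per tenant while the pipeline code is written and tested once.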

Recommendations and learnings:

Plan for 10X scale: Regardless of your company size, you should plan for 10X scale in your cloud transformation. If you’re running at 10PB today, plan for 100PB. A good example in our industry is that the scale and complexity of endpoint security products have grown exponentially compared to ten years ago. As you build the data platform, build with the future in mind. Don’t get stuck on a technology stack that you have to reinvent every three to five years.

Cloud-native and serverless: A lot of data products have been built for the on-prem ecosystem, including Apache Kafka, Apache Spark, MongoDB, Presto, and Elasticsearch. When you bring these technologies to the cloud, you will spend a lot of time managing them. When you start (or restart) your cloud journey, focus on getting to serverless and cloud-native capabilities as quickly as possible.
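The "plan for 10X" recommendation can be made concrete with compound-growth arithmetic: at a constant annual growth rate g, data volume reaches 10 times its current size after t = ln(10) / ln(1 + g) years. The growth rates below are illustrative assumptions, not Exabeam figures; doubling every year reaches 10X in about 3.3 years, and 60 percent annual growth in about 4.9 years, which is roughly the three-to-five-year window in which an undersized stack would need reinventing.

```python
import math

def years_to_scale(factor: float, annual_growth: float) -> float:
    """Years until volume grows by `factor` at a constant compound annual growth rate."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    return math.log(factor) / math.log1p(annual_growth)

def capacity_target(current_pb: float, factor: float = 10.0) -> float:
    """Capacity to design for, e.g. 10 PB today -> 100 PB."""
    return current_pb * factor

doubling_years = years_to_scale(10, 1.0)  # growth of 100% per year (illustrative)
sixty_pct_years = years_to_scale(10, 0.6) # growth of 60% per year (illustrative)
```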

To learn more about how Google’s Data Cloud has helped Exabeam, watch my Google Cloud Next talk here.
