Audience: (Intermediate level) Readers who have not yet worked with Google Cloud but have engineering experience with continuous integration and package management, plus beginner-level experience with containers and messaging. Readers should already have a frontend application and a supporting API server in place locally.

Technologies:

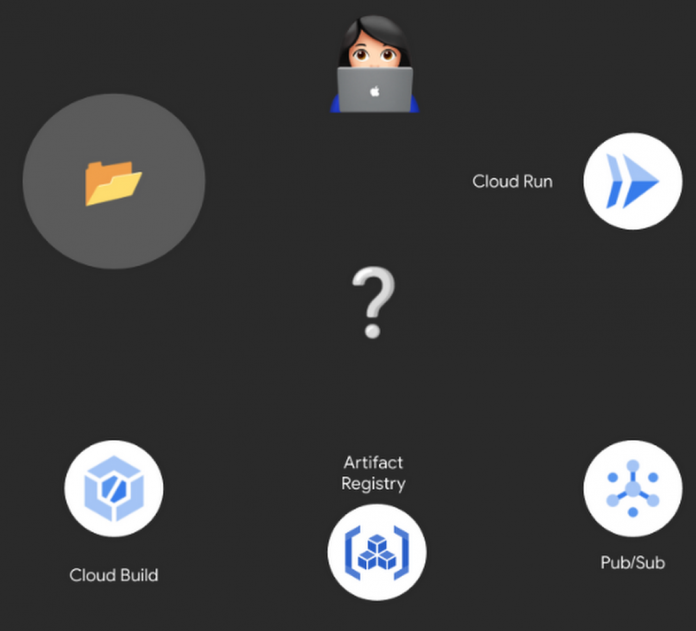

Cloud Build

Cloud Build Triggers

Artifact Registry

Cloud Run

Pub/Sub

Requirements before getting started:

Functional client-side repository

Functional API server repository

Pre-existing GCP project with billing enabled

Unix machine

A Hero’s Journey – The Quest Begins

In the initial stages of development, it’s easy to underestimate the grunt work needed to containerize and deploy your application, especially if you are new to the cloud. Could Google Cloud help you complete your project without adding too much bloat to the work? Let’s find out! This blog will take you on a quest to get to the heart of quick automated deployments by leveraging awesome features from the following products:

Cloud Build: DevOps automation platform

Artifact Registry: Universal package manager

Cloud Run: Serverless for containerized applications

Pub/Sub: Global real time messaging

To help on this learning journey, we’d like to arm you with a realistic example of this flow as you fashion your own CI/CD pipeline. This blog references Emblem, an open source GitHub project that models a best-practices architecture using Google Cloud serverless patterns. (Note: References will be tagged with Emblem.)

Note: This blog will showcase the benefits of using Pub/Sub with multiple triggers, as Emblem does. If you are looking for a more direct path to building and deploying your containers with one trigger, check out the following quickstarts: “Deploying to Cloud Run using Cloud Build” and “Deploying to Cloud Run using Cloud Deploy”.

Quest goals

The following goals will lead you to create a lean automated deployment flow for your API service, triggered by any change to the main branch of its source GitHub repository.

Manual deployment with Cloud Build and Cloud Run

Before you run off and attempt to automate anything, you will need a solid understanding of what commands you will be adding to your future cloudbuild.yaml files.

Build an image with a Cloud Build trigger

Create the first Cloud Build trigger and cloudbuild.yaml file, which will react to any new changes to the main branch of your GitHub project.

React to Cloud Build events with Pub/Sub

Using a built-in feature of Artifact Registry repositories, create a Pub/Sub topic that receives a message whenever a repository changes.

Deploy with a Cloud Build trigger

Create a new Cloud Build trigger that listens to the above Pub/Sub topic, along with a new cloudbuild.yaml file that deploys newly created container images from Artifact Registry.

Before getting started

For the purposes of this blog, the following is required:

gcloud CLI installed on a Unix machine

An existing REST API server with an associated Dockerfile

Google Cloud project with billing enabled (pricing)

You will create a new GitHub repository named epic-quest-project and add your existing REST API server code directory (e.g., Emblem: content-api) to create the following project file structure:
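
A minimal sketch of that layout, assuming the API server lives in server-side/ and Cloud Build configuration files live in ops/ (both directory names come from later steps in this post; the copy path in the comment is a placeholder):

```shell
# Create the assumed repository skeleton: ops/ for Cloud Build configs,
# server-side/ for the REST API server and its Dockerfile.
mkdir -p epic-quest-project/ops epic-quest-project/server-side

# Copy your existing API server into server-side/ (hypothetical source path):
# cp -R ~/code/my-api/. epic-quest-project/server-side/

# List the directories that now exist.
find epic-quest-project -type d | sort
```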

Now onto the quest!

Goal #1: Manual deployment with Cloud Build and Cloud Run

You will be building and deploying your containers using Google Cloud products, Cloud Build and Cloud Run, via the Google Cloud CLI, also known as gcloud.

In an open terminal, set environment variables that declare your Google Cloud project ID and the region your project will be based in. You will also need to enable the Cloud Run, Cloud Build, and Artifact Registry APIs within the Google Cloud project.
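
As a rough sketch, the setup could look like the following. The project ID and region values are placeholders, not values from this post; substitute your own.

```shell
# Placeholder values (assumptions): replace with your project ID and region.
export PROJECT_ID="my-project-id"
export REGION="us-central1"

# Point gcloud at the project, then enable the three product APIs.
gcloud config set project "$PROJECT_ID"
gcloud services enable \
  run.googleapis.com \
  cloudbuild.googleapis.com \
  artifactregistry.googleapis.com
```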

The container images you create from the server-side/ directory will be stored in an image repository named “epic-quest”, managed by Artifact Registry.
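
One way to create that repository from the terminal is sketched below. It assumes a Docker-format repository in the region chosen earlier; the description text is illustrative only.

```shell
# Fall back to a placeholder region if REGION is not already set.
REGION="${REGION:-us-central1}"

# Create a Docker-format Artifact Registry repository named epic-quest.
gcloud artifacts repositories create epic-quest \
  --repository-format=docker \
  --location="$REGION" \
  --description="Container images for epic-quest-project"
```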

Now that the “epic-quest” Artifact Registry repository has been created, you can begin pushing container images to it! Use gcloud builds submit to build and tag an image from the server-side/ directory using the Artifact Registry image path format: <region>-docker.pkg.dev/<project-id>/<repository>/<image>
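
A hedged example of that command, run from the root of epic-quest-project. The image name server-side is an assumption chosen to match the directory name, and the project ID and region defaults are placeholders.

```shell
# Placeholders (assumptions) used when the variables are not already exported.
PROJECT_ID="${PROJECT_ID:-my-project-id}"
REGION="${REGION:-us-central1}"

# Full image path: <region>-docker.pkg.dev/<project-id>/<repository>/<image>
IMAGE="${REGION}-docker.pkg.dev/${PROJECT_ID}/epic-quest/server-side"

# Build the image from server-side/ with Cloud Build and push it to the repository.
gcloud builds submit server-side/ --tag "$IMAGE"
```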

After pushing your server-side container image to the Artifact Registry repository, you’re all set to deploy it with Cloud Run!
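
The deployment could be sketched as follows. The service name server-side is hypothetical, and --allow-unauthenticated assumes you want a publicly reachable API; drop that flag if your service should require authentication.

```shell
# Placeholders (assumptions) used when the variables are not already exported.
PROJECT_ID="${PROJECT_ID:-my-project-id}"
REGION="${REGION:-us-central1}"
IMAGE="${REGION}-docker.pkg.dev/${PROJECT_ID}/epic-quest/server-side"

# Deploy the pushed image as a Cloud Run service.
gcloud run deploy server-side \
  --image "$IMAGE" \
  --region "$REGION" \
  --allow-unauthenticated
```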

Excellent, you’ve created a basic manual CI/CD pipeline! Now, you can explore what it looks like to have this pipeline automated.

Goal #2: Build an image with a Cloud Build trigger

To start automating your small pipeline you will need to create a cloudbuild.yaml file that will configure your first Cloud Build trigger.

In the ops directory of epic-quest-project, create a new file named api-build.cloudbuild.yaml. This new YAML file will describe the steps Cloud Build will use to build your container image and push it to Artifact Registry.

(Emblem: ops/api-build.cloudbuild.yaml)
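
The snippet below writes one possible version of this file. It is a sketch rather than the Emblem original: the image name, the _REGION substitution and its default, and the use of the commit's short SHA as the tag are all assumptions.

```shell
# Run from the root of epic-quest-project.
mkdir -p ops

# The quoted 'EOF' keeps the shell from expanding Cloud Build's ${...} substitutions.
cat > ops/api-build.cloudbuild.yaml <<'EOF'
steps:
  # Build the container image from the server-side/ directory.
  - name: 'gcr.io/cloud-builders/docker'
    args:
      - 'build'
      - '-t'
      - '${_REGION}-docker.pkg.dev/${PROJECT_ID}/epic-quest/server-side:${SHORT_SHA}'
      - 'server-side/'

# Push the built image to Artifact Registry after the steps succeed.
images:
  - '${_REGION}-docker.pkg.dev/${PROJECT_ID}/epic-quest/server-side:${SHORT_SHA}'

# Default for the user-defined substitution (assumed region).
substitutions:
  _REGION: us-central1
EOF
```

PROJECT_ID and SHORT_SHA are built-in Cloud Build substitutions; SHORT_SHA is populated when the build is started by a trigger.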

To configure Cloud Build to automatically execute the steps in this YAML file, use the Cloud Console to create a new Cloud Build trigger:

Remember to select `Push to a branch` as the event that will activate the build trigger and, under `Source`, connect your “epic-quest-project” GitHub repository.

You may need to authenticate with your GitHub account credentials to connect a repository to your Google Cloud project. Once you have a repository connected, specify the location of the Cloud Build configuration file in that repository (ops/api-build.cloudbuild.yaml).

Submitting this configuration will create a new trigger named api-new-build that will be invoked whenever a commit that changes the server-side/ folder is merged into the main branch of the repository.
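
If you prefer the terminal to the Cloud Console, a roughly equivalent trigger could be created as sketched below. This assumes the repository is already connected to Cloud Build through the GitHub app; the repository owner is a placeholder.

```shell
# Hypothetical GitHub user or organization that owns epic-quest-project.
REPO_OWNER="your-github-username"

# Create a trigger that fires on pushes to main that touch server-side/.
gcloud builds triggers create github \
  --name="api-new-build" \
  --repo-owner="$REPO_OWNER" \
  --repo-name="epic-quest-project" \
  --branch-pattern='^main$' \
  --build-config="ops/api-build.cloudbuild.yaml" \
  --included-files='server-side/**'
```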

After committing your changes to server-side/ files locally, you can verify this trigger works by merging a new commit into the main branch of your repository. Once merged, you will be able to observe the build trigger at work in the Build History page of the Cloud Console.

Excellent, the container build is now automated! How will Cloud Run know when a new build is ready to deploy? Enter Pub/Sub.

Goal #3: React to Cloud Build events with Pub/Sub

By default, Artifact Registry will publish messages about changes in its repositories to a Pub/Sub topic named gcr if it exists. Let’s take advantage of that feature for your next Cloud Build trigger. First, create a Pub/Sub topic named gcr:
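
A minimal example of creating the topic with gcloud, assuming the active gcloud project is the one that hosts your Artifact Registry repository:

```shell
# Artifact Registry looks for a topic with exactly this name.
TOPIC="gcr"

# Create the topic in the currently configured project.
gcloud pubsub topics create "$TOPIC"
```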

Now, every time a new image is pushed to any Artifact Registry repository in the project, a message is published to the gcr topic containing the image digest and tag that identify that image. Next it’s time to configure your second trigger to complete the automated deployment pipeline.
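
To get a feel for what such a message carries, the sketch below simulates one locally. The payload is a made-up sample shaped like an Artifact Registry notification (action, digest, tag); the project ID, digest, and tag values are placeholders.

```shell
# Hypothetical notification payload for a pushed image.
payload='{"action":"INSERT","digest":"us-central1-docker.pkg.dev/my-project-id/epic-quest/server-side@sha256:0123abcd","tag":"us-central1-docker.pkg.dev/my-project-id/epic-quest/server-side:abc1234"}'

# Pub/Sub carries message data base64-encoded; simulate that, then decode it.
encoded=$(printf '%s' "$payload" | base64 | tr -d '\n')
decoded=$(printf '%s' "$encoded" | base64 --decode)

# Extract the "tag" field with sed (jq would be more robust if it is installed).
tag=$(printf '%s' "$decoded" | sed -n 's/.*"tag":"\([^"]*\)".*/\1/p')
echo "$tag"
```

The tag value is the full image path that the deployment trigger in the next goal will hand to Cloud Run.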

Goal #4: Deploy with a Cloud Build trigger

Now you’re arriving at the final step, creating the deployment trigger! This Cloud Build trigger is the last link to complete your automated deployment story.

Note: Read more about our opinionated way to perform this step here using Cloud Run with checkbox CD and check out the new support for Cloud Run in Cloud Deploy.

In the ops directory of the epic-quest-project, create a new file named api-deploy.cloudbuild.yaml. In short, this file will deploy the new container image on your behalf. (Emblem: ops/deploy.cloudbuild.yaml)

The first step in this Cloud Build configuration prints the body of the message published by Artifact Registry to the build log, and the second step deploys to Cloud Run.
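
A sketch of such a two-step configuration follows. It is not the Emblem file: the _SERVICE and _REGION substitutions and their defaults are assumptions, as is using _REVISION as the Cloud Run revision suffix. The _BODY, _IMAGE_NAME, and _REVISION values are expected to come from the trigger.

```shell
# Run from the root of epic-quest-project.
mkdir -p ops

# The quoted 'EOF' keeps the shell from expanding Cloud Build's ${...} substitutions.
cat > ops/api-deploy.cloudbuild.yaml <<'EOF'
steps:
  # Step 1: print the Pub/Sub message body to the build log.
  - name: 'ubuntu'
    args: ['echo', '${_BODY}']

  # Step 2: deploy the newly pushed image to Cloud Run.
  - name: 'gcr.io/google.com/cloudsdktool/cloud-sdk'
    entrypoint: 'gcloud'
    args:
      - 'run'
      - 'deploy'
      - '${_SERVICE}'
      - '--image'
      - '${_IMAGE_NAME}'
      - '--region'
      - '${_REGION}'
      - '--revision-suffix'
      - '${_REVISION}'

# Assumed defaults; _BODY, _IMAGE_NAME and _REVISION are supplied by the trigger.
substitutions:
  _SERVICE: server-side
  _REGION: us-central1
EOF
```

Passing the message body as a plain argument to echo, rather than through a shell, avoids the shell reinterpreting the quotes and braces in the JSON.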

Open the console and create another new Cloud Build Trigger with the following configuration:

Instead of choosing a repository event as you did for the api-new-build trigger, select Pub/Sub message as the event. This creates a subscription to the desired Pub/Sub topic along with the trigger:

Once again, provide the location of the corresponding Cloud Build configuration file in the repository. Additionally, include values for the substitution variables that exist in the configuration file. Those variables are identifiable by the underscore prefix (_). Note that the _BODY, _IMAGE_NAME and _REVISION variables reference data included in the body of the Pub/Sub message:
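
The message-derived values might look like the ones printed below. The $(body...) expressions are Cloud Build payload bindings; which fields each variable maps to is an assumption based on the notification format (tag for the image, messageId for the revision).

```shell
# Single quotes stop the shell from treating $(...) as command substitution;
# Cloud Build resolves these bindings against the incoming Pub/Sub message.
SUBSTITUTIONS='_BODY=$(body),_IMAGE_NAME=$(body.message.data.tag),_REVISION=$(body.message.messageId)'

# Print one KEY=VALUE pair per line, mirroring the trigger's substitution fields.
printf '%s\n' "$SUBSTITUTIONS" | tr ',' '\n'
```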

By default, the Cloud Build service account initiates the deployment to Cloud Run, so it needs the Cloud Run Developer and Service Account User IAM roles granted to it in the project where the Cloud Run services reside.
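
Granting those roles from the terminal could look like this sketch. It assumes your triggers run as the legacy default Cloud Build service account (<project-number>@cloudbuild.gserviceaccount.com); if your project uses a different service account for builds, substitute its email. The project ID default is a placeholder.

```shell
# Placeholder (assumption) used when PROJECT_ID is not already exported.
PROJECT_ID="${PROJECT_ID:-my-project-id}"

# Look up the project number, which forms part of the service account email.
PROJECT_NUMBER=$(gcloud projects describe "$PROJECT_ID" --format='value(projectNumber)')
CLOUD_BUILD_SA="${PROJECT_NUMBER}@cloudbuild.gserviceaccount.com"

# Grant Cloud Run Developer and Service Account User to that account.
for role in roles/run.developer roles/iam.serviceAccountUser; do
  gcloud projects add-iam-policy-binding "$PROJECT_ID" \
    --member="serviceAccount:${CLOUD_BUILD_SA}" \
    --role="$role"
done
```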

After granting those roles, check that the pipeline is working by creating a commit to the server-side/ directory in your epic-quest-project GitHub repository. It should result in the automatic invocation of the api-new-build trigger followed closely by the api-deploy trigger, and finally with a new revision in the corresponding Cloud Run service.

Your final project setup should look similar to the following:

Quest complete!

Excellent, you now have a shiny automated pipeline and have leveled up your deployment game!

After reading today’s post, we hope you have a better understanding of how to manually build and deploy a container using just Cloud Build and Cloud Run, use Cloud Build triggers to react to GitHub repository actions, write cloudbuild.yaml files to add configuration to your build triggers, and take advantage of the benefits of Artifact Registry repositories.

If you want to learn even more, check out the open source serverless project Emblem on GitHub.
