Intro

Can you actually put generative AI to use at work? Or is it just good for half-baked demos that we play with for the sake of amusement?

In banking, retail, and entertainment, just to name a few industries, AI can give you a different angle on your operational data or increase productivity by offloading certain types of work. A lot of routine work traditionally done by people can be simplified and streamlined by the new technology. And generative AI has become much easier to build into applications, now that models are readily available and can be used directly from the tools you already know, whether they’re developer tools or the database’s native interface. Let’s take a look at an example of using AlloyDB Omni, a PostgreSQL-compatible relational database, with the AlloyDB AI capabilities we recently announced in preview.

How AI Can Help

Let’s say you’re selling online and want to add extra info to data to give your customers a better experience, provide more details about your products, or add a quick summary of a product description. This isn’t hard for a small number of items, but it can be a lot of work for thousands of products or titles in an inventory. How can you improve the process and make it more efficient? Sounds like a great use case for generative AI.

What if I told you that you can call AI models from your database to achieve these goals? And that with AlloyDB, you can do this in your own data center or any cloud using a downloadable version – AlloyDB Omni? Yes, you can make these calls directly from the database using AlloyDB AI, a set of generative AI capabilities in AlloyDB. Let me explain how it works.

In our example, we have our AlloyDB Omni database as a backend for a rental and streaming service where one of the tables – called titles – represents a list of available shows. The table has columns called title and description. The descriptions are rather short and we’d like to expand the descriptions to give more details about each show. We are going to use one of our Vertex AI foundation models for generative AI – the “text-bison” Large Language Model (LLM) – to write the expanded descriptions, and we’ll call the model directly from the AlloyDB Omni database using our title and original description as a prompt.

Deploy and Install

We start from the deployment of AlloyDB Omni. The process of installing and setting it up is easy and thoroughly described in the documentation so we don’t need to repeat it here. But before running the final “database-server install” command we need to take some extra steps to enable the Vertex AI integration.

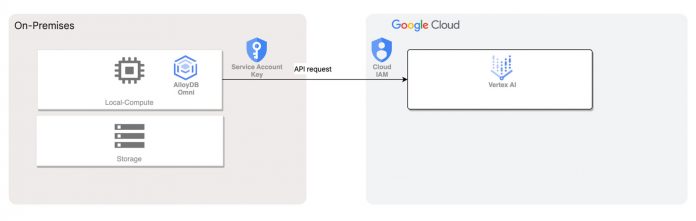

From the high-level point of view the AlloyDB Omni instance has to be able to call the Vertex AI API and it requires authentication and authorization in Google Cloud. The steps are described in the AlloyDB Omni documentation.

You need a service account in the Google Cloud Project where the Vertex AI API is enabled.Then you grant permissions to the service account to use Vertex AI.You create a service account key in JSON format and store it on the AlloyDB Omni database server.Then you can use the key location in your alloydb cli “database-server install” command to enable Vertex AI integration for your instance.

Here’s a high level diagram of the architecture.

AlloyDB Omni ML integration.

Once we have integration with Vertex AI enabled we can use either custom or pre-built foundation Vertex AI models in our application.

Run the Demo

First Attempt

Let’s go back to our example. To run the demo queries, I’ve loaded some movies and tv-shows titles to my sample AlloyDB Omni database. The table “titles” has a title column with the name of the show and a brief description in the column description for a movie or show. Here is what we have as the original description for “Pinocchio”.

For my website, I want more elaborate descriptions for each movie or show. To achieve this, I create an additional column and fill it with data generated by Vertex AI based on a prompt to the Google “text-bison” model using the title and description columns as I’ve described earlier.

Can We Do Better?

The prompt itself is simple enough and would be along the lines of “Can you create a summary for the <column title value> based on the following description – <column description value>?”. The prompt is used as the second argument in the “ml_predict_row” function. The first argument is the endpoint for the Vertex AI model, i.e. the location of the model on Google Cloud. In our case it is “publishers/google/models/text-bison”.

And here is the result returned by the function for the “Pinocchio”.

We have more information and it might be enough, but as you probably know, the prompt determines everything in generative AI. What if we ask about something more elaborate? Here’s a slightly modified prompt where I’ve added the word “elaborate” and the result.

This output reveals almost the whole plot of “Pinocchio”, so we might need to be more careful. The model accepts a number of parameters, such as temperature, which shows how relevant the response should be to the request and some other model specific parameters. You can read more about the “text-bison” model and parameters in the documentation.

Where to Start?

This is a pretty simple example of how AlloyDB AI can help make some tasks much faster, but you can do much more to achieve your business requirements and implement your ideas. Vertex AI has a set of foundation models that can be used with different types of data and returned values. For example, it has models for vector search of similar values and models for working with images. You can read much more in the Vertex AI documentation.

As an exercise, you can bring your own data and tune one of the foundation models for your case using the tutorial embedded into the Vertex AI documentation. I recommend starting with the Tune Foundation Model tutorial.

Vertex AI foundation model tuning.

If you’re new to AlloyDB, you may be eligible for a free trial of the cloud-based version. Alternatively you can download the AlloyDB Omni free developer edition. To get started, go to this page and choose the free developer edition.

Cloud BlogRead More