Want to do quick Generative AI without writing much code, meeting your data where it is, only with SQL queries and without having to worry about security, privacy and compliance issues? BQML (BigQuery ML) helps you perform analytics on data stored in BigQuery using large language models (LLM) from Vertex AI. This means you can also perform LLM-based analytics on data stored in other databases like Cloud SQL and Spanner that can be queried through federation from BigQuery, in addition to using SQL-only LLM analytics on data from CSV files and other external data sources!

In this blog, I have listed the steps to perform summarization of source code from Github repos and identification of the language of programming in the repo, using Vertex AI Large Language Model for text generation (text-bison) as a hosted remote function in BigQuery. Thanks to the GitHubArchive Project, we now have a full snapshot of over 2.8 million open source GitHub repositories in Google BigQuery Public Datasets.

1. Before you begin, make sure you have a Google Cloud project created, billing enabled, Cloud Shell activated and necessary APIs (BigQuery API, Vertex AI API, BigQuery Connection API) enabled.

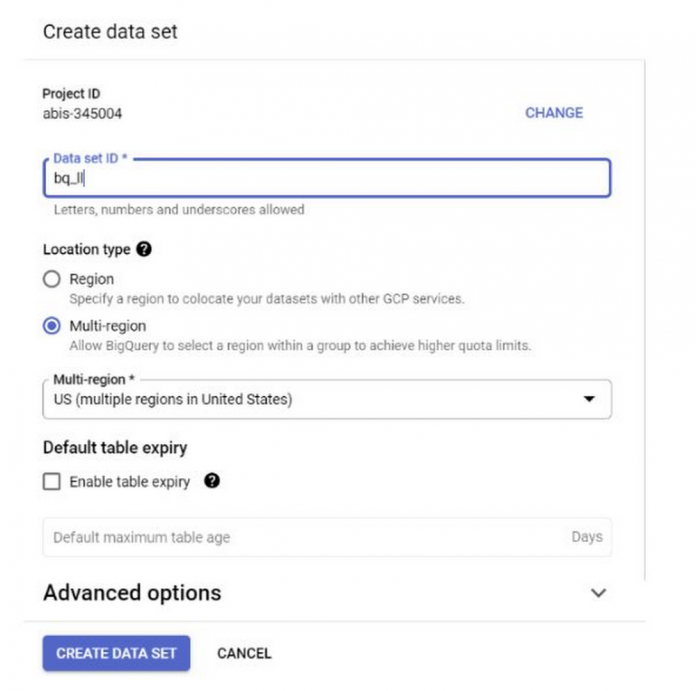

2. Create a BigQuery Dataset in the region “US” (or any region of your choice) named bq_llm

3. In this use case we are using the source code content from github_repos dataset in the Google BigQuery Public Datasets. To use this, in the BigQuery console, search for “github_repos” and press enter. Click on the star next to the dataset that is listed as the search result. Then click on the “SHOW STARRED ONLY” option to see that dataset only from the public datasets.

Expand the tables in the dataset to view the schema and data preview. We are going to use the sample_contents, which only contains a sample (10%) of the full data in the contents table. Here is a preview of the data:

4. If you want to do the same on your own dataset that lives in a CSV (or any other file), you can load your data into a BigQuery table by running the command below from the Cloud Shell terminal:

bq load –source_format=CSV –skip_leading_rows=1 bq_llm.table_to_hold_your_data

./your_file.csv text:string,label:string

5. Create an External Connection (Enable BQ Connection API if not already done) and note down the Service Account id from the connection configuration details

a. Click the +ADD button on the BigQuery Explorer pane (in the left of the BigQuery console) and click “Connection to external data sources” in the popular sources listed

b. Select Connection type as “BigLake and remote functions” and provide “llm-conn” as Connection ID

c. Once the connection is created, take a note of the Service Account generated from the connection configuration details

6. Grant permissions to the SA to access the Vertex AI Service:

Open IAM and add the copied Service Account as the Principal and select “Vertex AI User” Role

7. Create the remote model that represents a hosted Vertex AI large language model

This will take several seconds to complete.

8. Once the model is created, use the model to generate and categorize text

Explanation:

ml_generate_text_result is the response from the text generation model in JSON format that contains both content and safety attributes:

a. Content represents the generated text result

b. Safety Attributes represent the built-in content filter with an adjustable threshold that is enabled in Vertex AI PaLM API to avoid any unintended or unforeseen responses from the large language model – the response is blocked if it violates the safety threshold

ML.GENERATE_TEXT is the construct you use in BigQuery to access the Vertex AI LLM to perform text generation tasks

CONCAT appends your PROMPT statement and the database record

github_repos is the dataset name and sample_contents is the name of the table that holds the data we will use in the prompt design

Temperature is the prompt parameter to control the randomness of the response – lesser the better in terms of relevance

Max_output_tokens is the number of words you want in response

The query response looks like this:

9. Flatten the result so you do not have to decode the JSON explicitly in the query:

Result:

Flatten_json_output represents the boolean, which if set true returns a flat understandable text extracted from the JSON response.

That is it! We have successfully used a Vertex AI Text Generation LLM programmatically to perform text analytics on your data only using SQL-queries. Check out Vertex AI LLM product documentation to learn more about available models. If you want to try this out hands-on, check out the corresponding codelab for this.

Cloud BlogRead More