In this post we’ll show how to use Recommendations AI to display predictions on a live website and set up A/B experiments to measure performance. A/B testing involves creating two or more versions of a page and then splitting user traffic into groups to determine the impact of those changes. This is the most effective way to measure the impact of Recommendations AI. You can use A/B testing to compare Recommendations AI against an existing recommender or, if your site doesn’t show any recommendations today, to measure the impact of adding one. You can also test different placement locations on a page, or different configuration options, to find the optimal settings for your site.

If you’ve been following the previous Recommendations AI blog posts, you should now have a model created and be ready to serve live recommendations (also called “predictions”). When you call the Retail API predict method, you specify which serving config to use and provide some input data, and a list of item IDs is returned as the prediction.

Predict Method

The predict method is how you get recommendations back from Recommendations AI. Predictions are returned as an ordered list, ranked according to the model type and its objective, with the highest-probability items first. Given the input data, you’ll get back a list of item IDs that can then be displayed to end users.

The predict method is authenticated via OAuth using a service account. Typically, you’ll want to create a service account dedicated to predict requests and assign it the “Retail Viewer” role. The Retail documentation has an example of how to call predict with curl; for a production environment, however, we usually recommend using the Retail client libraries.
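To make the request shape concrete, here is a minimal sketch of calling predict over REST with only the Python standard library. It assumes the default catalog in the global location and that you already have an OAuth access token for the service account (for example from the google-auth library or, when testing, `gcloud auth print-access-token`). The helper names `predict_url` and `call_predict` are hypothetical; in production the client libraries handle authentication for you.

```python
import json
import urllib.request

RETAIL_API = "https://retail.googleapis.com/v2"


def predict_url(project_id: str, placement_id: str,
                location: str = "global",
                catalog: str = "default_catalog") -> str:
    """Build the REST URL for predict; the placement ID is part of the path."""
    # Assumption: the default catalog in the "global" location.
    placement = (f"projects/{project_id}/locations/{location}/"
                 f"catalogs/{catalog}/placements/{placement_id}")
    return f"{RETAIL_API}/{placement}:predict"


def call_predict(url: str, body: dict, access_token: str) -> dict:
    """POST a JSON predict body, authenticating with a service-account OAuth token."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

The request body is the JSON form of the fields described next.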

The predict request requires a few fields:

placement id (serving config)

Passed as part of the URL

userEvent

Specifies the required fields for the model being called. This is separate (and can be different) from the actual user event sent on the corresponding page view. It is used to pass the required information to the model:

eventType

home-page-view, detail-page-view, add-to-cart, etc.

visitorId

Required for all requests. This is typically a session ID.

userInfo.userId

ID of the logged-in user (not required, but strongly recommended when a user is logged in or otherwise identifiable)

productDetails[].product.id

Required for models that use product IDs (Others You May Like, Similar Items, Frequently Bought Together). Not required for Recommended For You, since those recommendations are based only on the visitorId/userId history.

You can pass in a single product (product detail page placements) or a list of products (cart pages, order complete, category pages, etc.)
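The required fields map onto the userEvent object of the request body. The sketch below builds it with the REST JSON field names listed above; `build_user_event` is a hypothetical helper, and the session and user IDs are placeholders.

```python
from typing import Optional, Sequence


def build_user_event(event_type: str, visitor_id: str,
                     user_id: Optional[str] = None,
                     product_ids: Sequence[str] = ()) -> dict:
    """Return the userEvent object for a predict request (REST JSON field names)."""
    if not visitor_id:
        raise ValueError("visitorId is required for every predict request")
    event = {"eventType": event_type, "visitorId": visitor_id}
    if user_id:
        # Optional, but strongly recommended when the user is logged in.
        event["userInfo"] = {"userId": user_id}
    if product_ids:
        # One product for a detail page; several for cart, order-complete, or category pages.
        event["productDetails"] = [{"product": {"id": pid}} for pid in product_ids]
    return event


# Product detail page (e.g. Others You May Like, Similar Items).
detail_event = build_user_event("detail-page-view", "session-123", product_ids=["sku-1"])
# Home page Recommended For You: no product IDs needed.
home_event = build_user_event("home-page-view", "session-123", user_id="user-42")
```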

There are also some optional fields that are used to control the results:

filter

Used to filter on custom tags that are included as part of the catalog. Can also filter out OUT_OF_STOCK items.

pageSize

Controls how many predictions are returned in the response

params

Various parameters:

returnProduct returns the full product data in the response (by default only IDs are returned)

returnScore returns a probability score for each item

priceRerankLevel and diversityLevel control the rerank and diversity settings
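A sketch of adding the optional fields to the request body, setting each one only when it is used. The helper name is hypothetical, the tag value “promotional” is an assumed catalog tag, and the level strings follow the documented naming (for example "medium-diversity"); check the predict reference for the accepted values.

```python
from typing import Any, Dict


def build_predict_body(user_event: Dict[str, Any], page_size: int = 10,
                       filter_expr: str = "", return_product: bool = False,
                       return_score: bool = False, price_rerank_level: str = "",
                       diversity_level: str = "") -> Dict[str, Any]:
    """Assemble a predict request body, adding optional fields only when set."""
    body: Dict[str, Any] = {"userEvent": user_event, "pageSize": page_size}
    if filter_expr:
        body["filter"] = filter_expr
    params: Dict[str, Any] = {}
    if return_product:
        params["returnProduct"] = True
    if return_score:
        params["returnScore"] = True
    if price_rerank_level:
        params["priceRerankLevel"] = price_rerank_level
    if diversity_level:
        params["diversityLevel"] = diversity_level
    if params:
        body["params"] = params
    return body


body = build_predict_body(
    {"eventType": "detail-page-view", "visitorId": "session-123",
     "productDetails": [{"product": {"id": "sku-1"}}]},
    page_size=8,
    # Drops out-of-stock items and anything with the (assumed) "promotional" tag.
    filter_expr='filterOutOfStockItems tag=(-"promotional")',
    return_score=True,
    diversity_level="medium-diversity",
)
```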

The prediction response can be used however you like. Typically the results are incorporated into a web page, but you could also use these results to provide personalized emails or recommendations within an application. Keep in mind that most of the models are personalized in real-time, so the results shouldn’t be cached or stored for long periods of time since they will usually become outdated quickly.
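In the REST response, each entry in results carries an id and, when requested through params, a metadata map with score and/or product. A minimal sketch that flattens this for rendering while keeping the ranked order (the helper name and sample values are illustrative):

```python
from typing import Any, Dict, List


def extract_items(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Flatten predict results into simple dicts, preserving the ranked order."""
    items = []
    for result in response.get("results", []):
        metadata = result.get("metadata", {})
        items.append({
            "id": result["id"],
            # Present only when params.returnScore was true.
            "score": metadata.get("score"),
            # Present only when params.returnProduct was true.
            "product": metadata.get("product"),
        })
    return items
```

Because the results are personalized in real time, call this on each fresh response rather than storing its output.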

Serving Recommendations client-side

Returning results as part of the full web page response is one way to incorporate recommendations into a page, but it has some drawbacks. Because it is a “blocking” request, a server-side implementation can add latency to the overall page response, and it tightly couples the page-serving code to the recommendations code. A server-side integration may also limit the recommendations code to serving web pages only, so a separate handler may be needed for a mobile application.

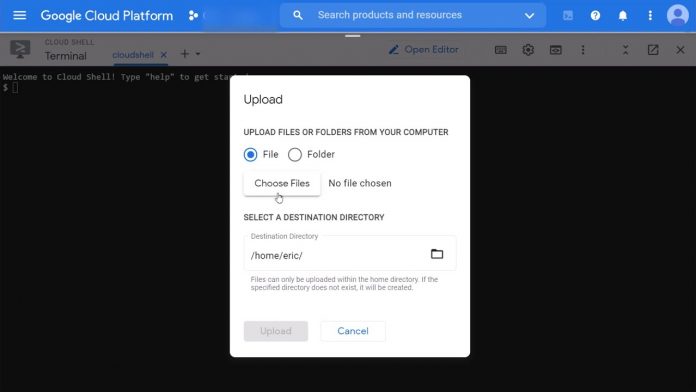

An Ajax implementation of Recommendations can solve these issues. Because the predict method requires authentication, there is no Recommendations AI endpoint that client-side JavaScript can call directly; however, it is easy to implement a handler that serves Ajax requests. This can be a web app or endpoint deployed within your existing serving infrastructure, and App Engine or Google Cloud Functions are also good alternatives.

Google Cloud Functions (GCF) are a great serverless way to quickly deploy this type of handler. An example GCF for Recommendations AI can be found here. This example uses Python and the Retail client library to provide an endpoint that can return Recommendations responses in JSON or HTML.
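The response-formatting half of such a handler can be sketched as follows. This is not the linked example’s code: `render_recommendations`, the allowed origin, and the /product/ URL scheme are hypothetical. Python Cloud Functions receive a Flask request and can return a (body, status, headers) tuple, which is the shape returned here; the CORS header matters when the page and the function are served from different origins.

```python
import html
import json
from typing import Dict, List, Tuple

# Hypothetical storefront origin allowed to call this endpoint from the browser.
ALLOWED_ORIGIN = "https://www.example.com"


def render_recommendations(item_ids: List[str],
                           fmt: str = "json") -> Tuple[str, int, Dict[str, str]]:
    """Return (body, status, headers) for an Ajax call, as JSON or an HTML fragment."""
    headers = {"Access-Control-Allow-Origin": ALLOWED_ORIGIN}
    if fmt == "json":
        headers["Content-Type"] = "application/json"
        return json.dumps({"items": item_ids}), 200, headers
    if fmt == "html":
        # Escape IDs before inserting them into markup; /product/<id> is a hypothetical URL scheme.
        rows = "".join(
            f'<li><a href="/product/{html.escape(i)}">{html.escape(i)}</a></li>'
            for i in item_ids
        )
        headers["Content-Type"] = "text/html; charset=utf-8"
        return f'<ul class="recommendations">{rows}</ul>', 200, headers
    headers["Content-Type"] = "application/json"
    return json.dumps({"error": f"unsupported format: {fmt}"}), 400, headers
```

The page can then insert the HTML fragment into its <div> as-is, or build its own markup from the JSON.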

This video shows how to set up the cloud function and call it from a web page to render results in a <div>.

A/B testing recommendations

Once you have finished the frontend integration for Recommendations AI, you may want to evaluate its performance on your site. The best way to test changes is usually a live A/B test.

A/B testing for Recommendations may be useful for testing and comparing various changes:

Existing recommender vs. Google Recommendations AI

Google Recommendations AI vs. no recommender

Various models or serving changes (CTR vs. CVR optimized, or changes to price re-ranking & diversity)

UI changes or different placements on a page

There are some more A/B testing tips for Recommendations AI here.

In general, A/B testing involves splitting traffic into 2 or more groups and serving different versions of a page to each group, then reporting the results. You may have a custom in-house A/B testing framework or a 3rd-party A/B testing tool, but as an example here we’ll show how to use Google Optimize to run a basic A/B test for Recommendations AI.
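Optimize handles the traffic split for you. If you use an in-house framework instead, one common approach is to hash a stable visitor ID together with an experiment ID, so each visitor always sees the same variant. A minimal sketch of weighted, deterministic bucketing; the function and variant names are hypothetical:

```python
import hashlib
from typing import Sequence, Tuple


def assign_variant(visitor_id: str, experiment_id: str,
                   variants: Sequence[Tuple[str, float]]) -> str:
    """Deterministically map a visitor to a variant in proportion to its weight."""
    total = sum(weight for _, weight in variants)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    digest = hashlib.sha256(f"{experiment_id}:{visitor_id}".encode("utf-8")).digest()
    # Top 53 bits of the hash give a uniform float in [0, 1), scaled to the weight total.
    point = (int.from_bytes(digest[:8], "big") >> 11) / float(1 << 53) * total
    cumulative = 0.0
    for name, weight in variants:
        cumulative += weight
        if point < cumulative:
            return name
    # Floating-point guard: fall back to the last variant that has a positive weight.
    return [name for name, weight in variants if weight > 0][-1]


# Mirrors a 0% / 50% / 50% split across Original and two model variants.
VARIANTS = [("original", 0.0), ("others_you_may_like", 50.0), ("similar_items", 50.0)]
```

Including the experiment ID in the hash keeps assignments independent across experiments that share visitors.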

Google Optimize & Analytics

If you’re already using Google Analytics, Google Optimize provides easy-to-manage A/B experiments and displays the results as a Google Analytics report. To use Google Optimize, simply link your Optimize account to Google Analytics, and install the Optimize tag. Once installed, you can create and run new experiments without any server-side code changes.

Google Optimize is primarily designed for front-end tests: UI, DOM, and CSS changes. Optimize can also add JavaScript to each variant of an experiment, which is useful when testing content that is displayed via an Ajax call (e.g. our cloud function). A/B testing server-side rendered content is possible, but it usually needs to be implemented as a Redirect test or with the Optimize JavaScript API.

As an example, let’s assume we want to test two different models on the same page: Similar Items and Others You May Like. Both models take a product ID as input and are well-suited for a product details page placement. For this example we’ll assume a cloud function or other service is running that returns the recommendations in HTML format. These results can then be inserted into a div and displayed on page load.

The basic steps to configure an Optimize experiment here are:

Click Create Experience in the Google Optimize control panel

Give your experience a name and select A/B test as the experience type

Add 2 variants: one for Others You May Like, another for Similar Items

Set the variant weights to 0% for the Original and 50%/50% for the two variants

Edit each variant and add your Ajax call to “Global JavaScript code” to populate the div

Add a URL match rule under Page targeting to match all of your product detail pages

Choose primary and secondary objectives for your experiment

For example, Revenue or Transactions as the primary objective, and Recommendation Clicks or another useful metric as the secondary objective

Change any other optional settings like Traffic allocation as necessary

Verify your installation and click Start to start your experiment

In this scenario we have an empty <div> on the page by default, and then we create two variants that call our cloud function with a different placement id on each variant. You could use an existing <div> with the current recommendations for the Original version and then just have one variant, but this will cause unneeded calls to the recommender and may cause the display to flicker as the existing <div> content is changed.
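Since each variant calls the same function and differs only in the placement ID it sends, the function should accept only the placements in the experiment rather than arbitrary values. A sketch of building and validating that query string; the parameter names and placement IDs are hypothetical:

```python
from urllib.parse import parse_qs, urlencode

# Hypothetical placement IDs for the two Optimize variants.
ALLOWED_PLACEMENTS = {"others_you_may_like", "similar_items"}


def variant_query(placement_id: str, product_id: str, visitor_id: str) -> str:
    """Query string one variant's Ajax call would append to the function URL."""
    return urlencode({"placement": placement_id, "productId": product_id,
                      "visitorId": visitor_id, "format": "html"})


def parse_variant_query(query: str) -> dict:
    """Validate the query on the function side before calling predict."""
    params = {key: values[0] for key, values in parse_qs(query).items()}
    placement = params.get("placement", "")
    if placement not in ALLOWED_PLACEMENTS:
        raise ValueError(f"unknown placement: {placement!r}")
    for key in ("productId", "visitorId"):
        if not params.get(key):
            raise ValueError(f"missing {key}")
    return params
```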

Once the experiment is running, you can click into the Reporting tab to view some metrics.

Optimize will predict a winner of the experiment based on the primary objective. To view more detailed reports, click the “View in Analytics” button, where you can view all the metrics Analytics has for the different segments in the experiment.

In this case it’s difficult to choose a clear winner, but we can see that the Similar Items model is providing a bit more Revenue per Session, and viewing the other goals shows a higher click-through rate. We could choose to run the experiment longer, or try another experiment with different options. Most retailers run A/B experiments continually to test new features and options on the site to find what works best for their business objectives, so your first A/B test is usually just the start.
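Optimize computes its own estimate of the leading variant. As a rough independent check on a rate such as click-through rate exported from Analytics, a two-proportion z-test is one standard option. It is not necessarily the method Optimize uses, and the counts passed in are whatever you export. A minimal sketch:

```python
import math
from typing import Tuple


def two_proportion_z_test(clicks_a: int, sessions_a: int,
                          clicks_b: int, sessions_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in click-through rates B - A."""
    if sessions_a <= 0 or sessions_b <= 0:
        raise ValueError("each variant needs at least one session")
    rate_a = clicks_a / sessions_a
    rate_b = clicks_b / sessions_b
    # Pooled rate under the null hypothesis that both variants share one CTR.
    pooled = (clicks_a + clicks_b) / (sessions_a + sessions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sessions_a + 1 / sessions_b))
    if se == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A large p-value is consistent with having no clear winner yet; running the experiment longer adds sessions and narrows the uncertainty.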

For more information please see the main Retail documentation, and some more tips for A/B experiments with Recommendations AI.
