Most enterprises enforce strict requirements for how APIs are exposed to the public internet. These requirements typically call for centralized handling of authentication credentials, collection of metrics, and other classic API management capabilities. Before the introduction of sidecars in Cloud Run, developers either had to implement these requirements within the service itself or add a full-lifecycle API management platform in front of their service, as described in a previous blog post.

In this post, we demonstrate a new way to fulfill the API management requirement in Cloud Run. Specifically, we look at how the recently announced multi-container feature in Cloud Run enables a sidecar pattern that developers of Cloud Run services can use to add pre-packaged API management capabilities. These include self-service developer onboarding in a developer portal, credential validation, and quota enforcement. As an additional operational benefit, the solution also adds centralized analytics and metrics for APIs that are exposed via Cloud Run.

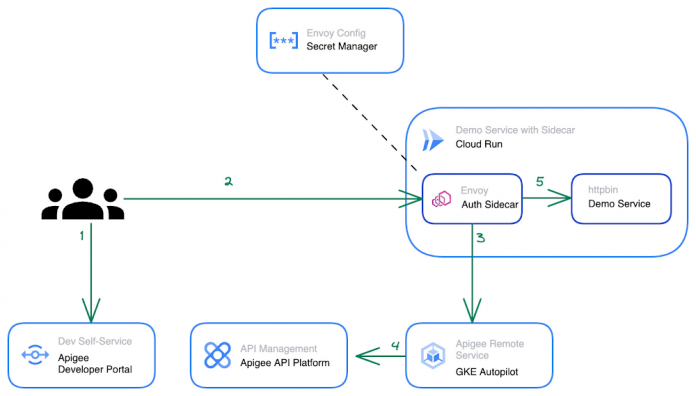

Our solution for adding API management capabilities for Cloud Run is provided by the following three components:

- The Cloud Run service, which hosts a traditional RESTful web application and is fronted by a vanilla Envoy proxy.

- An Apigee Envoy Adapter (also known as the Remote Service), which runs in GKE Autopilot and acts as the policy decision point (PDP) for Envoy's external authorization filter. It is responsible for accepting or rejecting calls to the Cloud Run service.

- An Apigee API platform, which is used to manage the API lifecycle of the Cloud Run service. Apigee also offers a turn-key developer portal where developers can obtain credentials to access an API.

The user journey of an incoming request to our new Cloud Run service with API management looks as follows:

1. An API developer self-registers in the Apigee developer portal and obtains access credentials for the API.

2. They call the Cloud Run endpoint and provide their credentials for authentication.

3. In the Cloud Run service, the Envoy proxy container intercepts the request and initiates a gRPC call to the Apigee Envoy adapter to authenticate the client.

4. The Apigee Envoy adapter verifies the request's credentials and identifies the corresponding API product as defined in Apigee. The adapter also verifies the call quota associated with the client and sends the analytics data and access logs back to the control plane.

5. If the credentials are valid and the client hasn't exhausted their call quota, the Apigee Envoy adapter allows the call, and the Envoy proxy forwards the request to its co-located application container in the Cloud Run service.
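
The request flow above can be sketched as a small in-memory simulation. This is only an illustration of the decision logic, not how Envoy or the Apigee Envoy adapter are implemented: the class and function names, the status codes, and the quota model are all hypothetical, and the real components communicate over gRPC rather than through direct function calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class ApiProduct:
    """Hypothetical stand-in for an API product defined in Apigee."""
    name: str
    quota_limit: int  # calls allowed per quota interval


@dataclass
class PolicyDecisionPoint:
    """Hypothetical stand-in for the remote service that Envoy consults."""
    products_by_key: Dict[str, ApiProduct]
    calls_by_key: Dict[str, int] = field(default_factory=dict)
    analytics: List[Tuple[str, str]] = field(default_factory=list)

    def check(self, api_key: Optional[str]) -> Tuple[int, str]:
        """Return an illustrative status code and a short reason."""
        product = self.products_by_key.get(api_key) if api_key else None
        if product is None:
            decision = (401, "invalid credentials")
        elif self.calls_by_key.get(api_key, 0) >= product.quota_limit:
            decision = (429, "quota exhausted")
        else:
            self.calls_by_key[api_key] = self.calls_by_key.get(api_key, 0) + 1
            decision = (200, product.name)
        # Every decision is recorded, mirroring the analytics reporting step.
        self.analytics.append((api_key or "<none>", decision[1]))
        return decision


def envoy_sidecar(
    pdp: PolicyDecisionPoint,
    api_key: Optional[str],
    app: Callable[[], str],
) -> Tuple[int, str]:
    """Ask the PDP first; call the co-located app only when allowed."""
    status, detail = pdp.check(api_key)
    if status != 200:
        return status, detail
    return 200, app()


if __name__ == "__main__":
    pdp = PolicyDecisionPoint({"key-1": ApiProduct("orders-basic", quota_limit=2)})
    for key in ["key-1", "key-1", "key-1", "unknown"]:
        print(key, envoy_sidecar(pdp, key, lambda: "hello from the app"))
```

The application callable is only reached after the decision point allows the call, which is why the application container needs no authentication logic of its own.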

The step-by-step instructions for configuring the architecture above can be found in this blog post.

Starting from a pre-existing Apigee installation, the Apigee Envoy adapter connects back to the Apigee runtime and control plane to access the API product definitions, which it uses to link incoming requests to the issued credentials and quotas. The Apigee remote service component can be replicated for high availability and shared between multiple Cloud Run services that require a gRPC target for the sidecar's external authorization filter.

The Cloud Run service connects to the Apigee Remote Service via an Envoy proxy that acts as a sidecar to the main application. The configuration for the Envoy proxy can either be embedded within a customized Envoy image or mounted via the Secret Manager integration of Cloud Run, as shown in the diagram above. Externalizing the configuration simplifies maintenance and upgrades of the sidecar container, because it can simply pull the latest patch version of the stock Envoy image on a regular basis.
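
To give a sense of what the externalized configuration contains, the sketch below builds the external authorization filter entry of an Envoy HTTP filter chain and prints it as JSON (which is also valid YAML). It is a fragment only, not a complete Envoy bootstrap file: a matching cluster that points at the remote service, the listener, and the route to the application container would still have to be defined. The cluster name `apigee-remote-service` and the timeout value are assumptions chosen for illustration.

```python
import json


def ext_authz_filter(cluster_name: str, timeout: str = "1s") -> dict:
    """Build an Envoy ext_authz HTTP filter entry that calls a gRPC service.

    cluster_name must match a cluster defined elsewhere in the Envoy
    configuration; the value used below is a hypothetical example.
    """
    return {
        "name": "envoy.filters.http.ext_authz",
        "typed_config": {
            "@type": (
                "type.googleapis.com/"
                "envoy.extensions.filters.http.ext_authz.v3.ExtAuthz"
            ),
            "transport_api_version": "V3",
            # Reject requests when the authorization service is unreachable.
            "failure_mode_allow": False,
            "grpc_service": {
                "envoy_grpc": {"cluster_name": cluster_name},
                "timeout": timeout,
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(ext_authz_filter("apigee-remote-service"), indent=2))
```

Because the filter only references a cluster by name, the same fragment can be reused by every Cloud Run service that shares the remote service.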

The newly added API management capability is transparent to the primary application container within the Cloud Run service and does not require any changes to the application source code. Once the Cloud Run service is redeployed with the sidecar in place, consuming applications can start to use credentials obtained via the Apigee API management platform or the API developer portal to consume the Cloud Run service. At an operations level, platform operators will start to see requests to the Cloud Run service appear in Apigee's analytics dashboards and will be able to track consumption and exposure at an API product level.
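
From the consumer's side, the only visible change is that each request carries a credential. The sketch below prepares such a request with Python's standard library. It assumes the adapter is configured to read an API key from an `x-api-key` header; the actual header depends on how the adapter is set up, and the URL and key shown are placeholders.

```python
import urllib.request


def build_request(url: str, api_key: str) -> urllib.request.Request:
    """Prepare a GET request that presents an API key to the sidecar.

    The x-api-key header name is an assumption about the adapter setup.
    """
    return urllib.request.Request(url, headers={"x-api-key": api_key})


if __name__ == "__main__":
    # Placeholder values; replace with a real service URL and issued key.
    request = build_request("https://my-service.example/orders", "my-api-key")
    print(request.get_method(), request.full_url, request.header_items())
```

Passing the prepared request to `urllib.request.urlopen` would send it. Without a valid key the sidecar is expected to reject the call before it reaches the application.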

Next Steps

If you are interested in trying the multi-container support for Cloud Run yourself, check out the release announcement, which describes many more use cases. For another example and a detailed walkthrough of how to use sidecars in Cloud Run to report custom metrics to Google Cloud Managed Service for Prometheus, head over to this tutorial in the Cloud Run documentation. Lastly, if you're interested in the broader picture of how the latest features in Cloud Run are moving serverless forward, make sure you check out this video.
