Over the past few years, breakthroughs in computer vision and video analytics have generated a lot of interest and put a spotlight on developers who work with large volumes of video. Unfortunately, many of the benefits of these breakthroughs have remained elusive. Vertex AI Vision makes it easier for developers to build, deploy, and manage computer vision applications.

Challenges in building compelling computer vision applications have been plentiful, and they fall into two big buckets: data engineering and data science. On the data engineering side, ingesting large amounts of video into a system has proven time-consuming, expensive, and error-prone. Furthermore, storing that data and then making use of the insights from it requires advanced skills in data warehousing, search, and analytics.

On the data science side, creating custom image classification, object recognition, and image segmentation models requires sophisticated machine learning skills that are often hard to find. Effective, highly tuned models for emerging use cases such as occupancy analytics, PPE detection, and vehicle detection require even more specialized skills.

Vertex AI Vision

Vertex AI Vision solves these challenges by providing an end-to-end application development environment that makes it easy for developers to:

Ingest camera video feeds in real time

Analyze the video using advanced AI pre-built or custom models

Store, search and retrieve video data at a massive scale

In this blog post, we’ll walk you through Vertex AI Vision and show you how to get started. As always, our AI products also adhere to our AI Principles.

What developers need to know

A Vertex AI Vision application consists of these three main components:

Streams

Warehouse

Processors

The Stream component connects to the camera and streams data into the application. You can think of it as the source that receives data from cameras or video files.

The Warehouse component is a repository of videos and the metadata attached to them. You can think of the warehouse as the sink of the video stream. You can also search the warehouse for clips based on the metadata associated with each clip, such as its duration or the number of vehicles detected. Search is available both through the UI and through a comprehensive set of APIs.

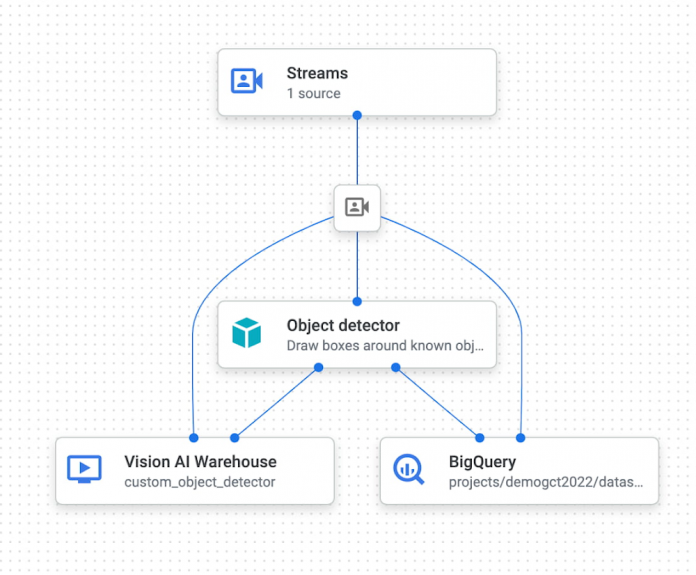

The Processor component is a function that takes input from the source (Streams), transforms it, and sends it to a sink (Warehouse or BigQuery). Processors are typically computer vision models that perform machine learning tasks on the video frames, such as object detection, vehicle detection, person blur, and occupancy counting. You can also chain processors before storing the output in a warehouse or BigQuery. You can attach your own processors by building a custom model with Vertex AI AutoML Object Detection or with Vertex AI custom modeling. Each processing node has input and output streams, and Vertex AI Vision provides APIs to read the intermediate output of those streams.

Vertex AI Vision can also store the outputs of the Processors in a BigQuery table. You can use that table to build dashboards in Looker or Data Studio.

An application is an abstraction that ties the sources, sinks, and processors together in a graphical user interface.
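To make the source, processor, and sink roles concrete, here is a minimal Python sketch of the same data flow. It is only a conceptual model: `Frame`, `run_application`, `fake_object_detector`, `object_counter`, and the two in-memory sinks are hypothetical names invented for illustration and are not part of the Vertex AI Vision SDK or APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List

# Hypothetical types that only model the concepts described above.
@dataclass
class Frame:
    ingestion_time: float                      # when the frame reached the stream
    pixels: bytes = b""                        # placeholder for the image payload
    annotations: Dict = field(default_factory=dict)

Processor = Callable[[Frame], Frame]           # transforms a frame
Sink = Callable[[Frame], None]                 # stores a frame or its metadata

def run_application(stream: Iterable[Frame],
                    processors: List[Processor],
                    sinks: List[Sink]) -> int:
    """Send each frame through the processor chain, then fan out to every sink."""
    handled = 0
    for frame in stream:
        for process in processors:
            frame = process(frame)
        for sink in sinks:
            sink(frame)
        handled += 1
    return handled

def fake_object_detector(frame: Frame) -> Frame:
    # Stand-in for a pre-built model: labels every frame with one fixed object.
    frame.annotations["objects"] = ["car"]
    return frame

def object_counter(frame: Frame) -> Frame:
    # A second, chained processor that reads the first processor's output.
    frame.annotations["object_count"] = len(frame.annotations.get("objects", []))
    return frame

# In-memory stand-ins for the two sinks.
warehouse: List[Frame] = []
bigquery_rows: List[Dict] = []

def to_bigquery(frame: Frame) -> None:
    bigquery_rows.append({"ingestion_time": frame.ingestion_time, **frame.annotations})

stream = [Frame(ingestion_time=t) for t in (0.0, 1.0, 2.0)]
handled = run_application(
    stream,
    processors=[fake_object_detector, object_counter],
    sinks=[warehouse.append, to_bigquery],
)
```

In the product, this wiring is done by connecting nodes in the application graph rather than by writing code; the sketch only shows why chaining processors and writing to more than one sink compose naturally.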

In the sample application shown in Fig 1, the stream is connected to a pre-built Object Detector model (the processor), and its output is stored in Vision AI Warehouse and BigQuery. The object detector can identify more than 500 types of objects. The model runs inference on the video frames and generates metadata, which is sent to Vision AI Warehouse and BigQuery.

Connecting cameras to Streams in Vertex AI Vision is done with a command-line tool called vaictl or with a C++ API.

The video's ingestion_time field (the time at which video from the remote cameras reaches the stream) is used as a key to correlate metadata and frames across the app.
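As a rough illustration of using ingestion_time as a correlation key, the sketch below groups annotation records under the frame that shares their timestamp. Apart from `ingestion_time`, every field name here (`frame_id`, `node`, `label`, `count`) and the function `correlate_by_ingestion_time` are assumptions made for the example, not the actual output schema.

```python
from collections import defaultdict

def correlate_by_ingestion_time(frames, annotations):
    """Attach to each frame the annotation records with the same ingestion_time."""
    by_time = defaultdict(list)
    for record in annotations:
        by_time[record["ingestion_time"]].append(record)
    return [
        {
            "ingestion_time": frame["ingestion_time"],
            "frame_id": frame["frame_id"],
            # .get avoids creating empty entries for frames with no metadata
            "annotations": by_time.get(frame["ingestion_time"], []),
        }
        for frame in frames
    ]

# Hypothetical sample data: two frames and three metadata records.
frames = [
    {"ingestion_time": "2022-10-11T09:00:00Z", "frame_id": "f1"},
    {"ingestion_time": "2022-10-11T09:00:01Z", "frame_id": "f2"},
]
annotations = [
    {"ingestion_time": "2022-10-11T09:00:00Z", "node": "object-detector", "label": "car"},
    {"ingestion_time": "2022-10-11T09:00:00Z", "node": "occupancy", "count": 2},
    {"ingestion_time": "2022-10-11T09:00:01Z", "node": "object-detector", "label": "person"},
]

joined = correlate_by_ingestion_time(frames, annotations)
```

Because every processor output carries the same key, results from different nodes can be lined up against a frame without any other shared identifier.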

The pre-built models include:

We also provide an advanced analytical processor called the Occupancy Analytics processor, which not only detects people and vehicles but also sends the count information to the sink (Warehouse and BigQuery).

We additionally provide a model that filters out inactive video frames so that they are not sent to the application.
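To give a feel for what filtering inactive frames means, here is a deliberately simple sketch based on frame differencing. This is a swapped-in technique chosen for illustration, not the model Vertex AI Vision actually uses; the function names, the flat-list frame representation, and the threshold value are all assumptions.

```python
def mean_abs_diff(a, b):
    """Average absolute per-pixel difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def drop_inactive_frames(frames, threshold=5.0):
    """Keep the first frame, then only frames that differ enough from the last kept one."""
    kept = []
    last = None
    for frame in frames:
        if last is None or mean_abs_diff(frame, last) >= threshold:
            kept.append(frame)
            last = frame
    return kept

# Hypothetical 4-pixel grayscale frames: a static scene and one with movement.
static = [10, 10, 10, 10]
moved = [10, 60, 60, 10]

active = drop_inactive_frames([static, static, moved, moved, static])
```

Here the repeated `static` and `moved` frames are dropped because nothing changed since the last kept frame, which reduces the amount of data that downstream processors and sinks have to handle.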

Steps to Build an Application include:

Creating a Stream

Creating a Warehouse

Creating an Application

In the application UI

Drag the Stream component and register the above stream

Attach the right processor for your stream

Store the output of the processor in Warehouse, BigQuery or both

Deploy the application

Resources for developers to get started

As a developer, you want to write great code that is both useful and used. You want to use the tools you are already familiar with, and you want to leverage other assets (code, libraries, frameworks, etc.) wherever it makes sense. In the case of video analytics, you want to focus on what you do best, building great apps, and not on the vagaries of data engineering or the complexities of model building and tuning.

For detailed steps, follow the Vertex AI Vision docs. The security prerequisites are listed here. Once the application is deployed, you can start streaming your camera feed or a video file using the vaictl command. The syntax of the command is provided in the documentation.

Once you start the stream, you can view it inside the application and search for video clips in Vision AI Warehouse. Have fun!

Important resources for you to get started with Vertex AI Vision include:

Tutorials

Create a face blur app with warehouse storage

Create an occupancy count app with remote streaming

Create occupancy analytics app with BigQuery forecasting

Documentation

Videos and Demos

Free tier / Cloud credits
