Database audit logs are records of activities and events that occur within a database system. These logs capture details about user interactions, system changes, and data modifications, providing a comprehensive trail of actions performed on the database. By maintaining a meticulous record of these activities, organizations gain valuable insights into who accessed the database, what actions were taken, and when these actions occurred.

Database audit logs serve as evidence that organizations are adhering to compliance requirements by demonstrating that appropriate security measures are in place and being actively monitored. In regulated industries such as pharmaceuticals, biotechnology, and healthcare, maintaining compliance with Good Practice (GxP) guidelines is of the utmost importance. One critical aspect of GxP compliance is ensuring the security and integrity of data. In this post, we explore the significance of Amazon Relational Database Service (Amazon RDS) security audit logs in GxP environments and discuss how automating the log export process can enhance security while streamlining compliance efforts.

Challenges

In a large organization with multiple AWS accounts hosting various types of workloads, a central governance team is responsible for putting together an RDS audit log process across all the AWS accounts managed by their organization. Enabling RDS audit logs on all the databases hosted in an AWS organization brings up the following additional challenges:

Diverse database engines and versions – Different engines require varying approaches to audit log configuration. The central governance team faces the task of harmonizing these diverse configurations, ensuring that audit logs align with security standards across all database environments.

Automatic enforcement of enabling database audit logs – Enabling audit logs automatically across various databases requires careful orchestration to avoid disruptions. The central team must design automated processes that consider the intricacies of each database while ensuring that the enforcement doesn’t inadvertently impact critical operations.

Log retention and storage cost – With audit logs enabled, log accumulation is inevitable, and storage expenses grow with the logs. Retention periods must satisfy compliance mandates and business needs while keeping storage costs under control.

In this post, we present a solution to automate enabling, capturing, and archiving RDS audit logs on Amazon Aurora MySQL-Compatible Edition, Amazon Aurora PostgreSQL-Compatible Edition, Amazon RDS for MySQL, Amazon RDS for PostgreSQL, Amazon RDS for SQL Server, Amazon RDS for MariaDB, and Amazon RDS for Oracle.

Solution overview

This post, in conjunction with the linked open-source repository, presents a comprehensive solution for achieving fully automated RDS audit log enablement using AWS serverless services.

An AWS CloudFormation template for workload accounts is rolled out across all workload accounts using CloudFormation StackSets. Deploying the same stack everywhere keeps the configuration uniform and reduces administrative complexity.

At the core of this architecture lies the RDS Audit Log Custom API. The governance team deploys this key component to the central (governance) account. It consists of a collection of AWS Lambda functions orchestrated by AWS Step Functions.

The governance team uses the RDS Audit Log Custom API to manage workload accounts, which are the accounts that host Amazon RDS instances. These workload accounts are usually grouped under a specific set of organizational units (OUs). To deploy the solution, specify the governance account number and the set of OUs.

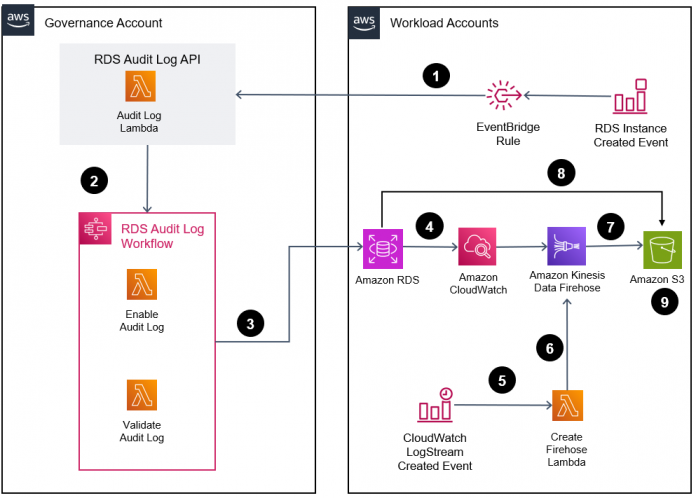

The following diagram illustrates the solution architecture.

Figure: RDS Audit Log Solutions Architecture

The workflow steps are as follows:

An Amazon EventBridge rule, in the workload account, triggers the custom Audit Log API, developed as part of this solution, whenever an RDS instance is created.

The API starts a Step Functions workflow that enables the database audit log and waits for successful enablement.

The Enable Audit Log Lambda function describes the provisioned database instance, reads the master user password from the secret that RDS manages in AWS Secrets Manager, and connects to the database instance. The function also reads the parameter groups and option groups associated with the DB instance and updates the parameters that enable the audit log.

Database engines log user activities into an Amazon CloudWatch log as a database audit log.

When the database engine writes its first audit entry to CloudWatch Logs, a new log stream is created. This log stream creation event triggers a Lambda function.

The function creates a new Firehose delivery stream to stream logs from CloudWatch to Amazon S3.

Amazon Kinesis Data Firehose reads the audit log from the CloudWatch log stream and writes the data to Amazon Simple Storage Service (Amazon S3).

Amazon RDS for SQL Server can upload the audit logs to Amazon Simple Storage Service (Amazon S3) by using built-in SQL Server audit mechanisms.
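The CloudWatch-to-S3 part of the workflow above can be sketched with two Boto3 calls: `create_delivery_stream` on a Firehose client and `put_subscription_filter` on a CloudWatch Logs client. This is a minimal illustration and not the repository's implementation; the function name, the stream-naming scheme, and the two IAM role ARNs are assumptions. The clients are passed in as arguments (for example `boto3.client("firehose")` and `boto3.client("logs")`).

```python
from typing import Any


def stream_log_group_to_s3(
    firehose: Any,
    logs: Any,
    log_group_name: str,
    bucket_arn: str,
    firehose_role_arn: str,
    subscription_role_arn: str,
) -> str:
    """Create a Firehose delivery stream and subscribe a log group to it.

    Hypothetical helper: names and roles are illustrative assumptions.
    """
    # Firehose stream names may not contain '/', and are limited to
    # 64 characters, so derive a safe name from the log group name.
    stream_name = log_group_name.strip("/").replace("/", "-")[:64]

    response = firehose.create_delivery_stream(
        DeliveryStreamName=stream_name,
        DeliveryStreamType="DirectPut",
        ExtendedS3DestinationConfiguration={
            "RoleARN": firehose_role_arn,   # role Firehose assumes to write to S3
            "BucketARN": bucket_arn,
            "Prefix": f"{stream_name}/",
        },
    )

    # A real function would wait here until the stream reports ACTIVE;
    # the subscription can be rejected while the stream is still creating.
    logs.put_subscription_filter(
        logGroupName=log_group_name,
        filterName=f"{stream_name}-to-firehose",
        filterPattern="",                   # empty pattern forwards every event
        destinationArn=response["DeliveryStreamARN"],
        roleArn=subscription_role_arn,      # role CloudWatch Logs assumes
    )
    return stream_name
```

Passing the clients in keeps the helper easy to exercise with stand-in objects before it is pointed at a real account.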

By default, CloudWatch logs are kept indefinitely and never expire. You can adjust the retention policy for each log group by choosing a retention period between one day and 10 years. To reduce cost, this solution sets the CloudWatch Logs retention period to one day. To optimize the cost of logs stored in Amazon S3, you can configure S3 Lifecycle policies or use the S3 Intelligent-Tiering storage class.
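As a rough illustration of these two settings, the sketch below sets a one-day retention on a log group with `put_retention_policy` and applies an S3 Lifecycle rule with `put_bucket_lifecycle_configuration`. The rule ID, the helper names, and the `expire_after_days` parameter are assumptions made for the example; the expiry value in particular has to come from your own compliance requirements.

```python
from typing import Any, Dict


def build_lifecycle_rules(expire_after_days: int) -> Dict[str, Any]:
    """Build a lifecycle configuration for the audit log bucket (illustrative)."""
    return {
        "Rules": [
            {
                "ID": "audit-log-tiering-and-expiry",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},              # apply to every object
                # Move objects to S3 Intelligent-Tiering right away.
                "Transitions": [
                    {"Days": 0, "StorageClass": "INTELLIGENT_TIERING"}
                ],
                # Delete objects once the retention period has passed.
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


def apply_retention(
    logs: Any, s3: Any, log_group_name: str, bucket: str, expire_after_days: int
) -> None:
    """Keep CloudWatch logs for one day and let S3 manage long-term storage."""
    logs.put_retention_policy(logGroupName=log_group_name, retentionInDays=1)
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=build_lifecycle_rules(expire_after_days),
    )
```

Here `logs` and `s3` would be `boto3.client("logs")` and `boto3.client("s3")`. The one-day CloudWatch retention is safe only because the logs have already been delivered to S3 by then.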

The key components within this architectural design (as labeled in the preceding diagram) are as follows:

RDS Audit Log Custom API – A Lambda function is designed to gather essential details about the RDS instance or cluster. It serves as the initiator for the audit log enablement workflow by triggering the necessary steps.

RDS Audit Log Workflow – This Step Functions state machine orchestrates the steps between the Enable Audit Log Lambda function and the Validate Audit Log Lambda function. Because enabling audit logs requires a database restart, the workflow waits for the next maintenance window to restart the database instances. This can lead to a long wait between the invocation of the Enable and Validate functions. The state machine reports successful and failed audit log enablement through an Amazon Simple Notification Service (Amazon SNS) topic.

Because the options for enabling audit logs differ by database engine type, the Enable Audit Log Lambda function automatically detects the engine and applies the appropriate settings. For example, RDS for MySQL 5.7 requires adding the MARIADB_AUDIT_PLUGIN option and configuring its SERVER_AUDIT_EVENTS setting.

Figure: RDS MySQL 5.7 Option Group

Validate Audit Log – This Lambda function verifies that the parameter group and option group changes have been applied to the database after the restart or maintenance window.

Enable Audit Log – This Lambda function configures parameter groups or option groups and runs a set of SQL statements so that RDS database instances stream audit logs to CloudWatch Logs or Amazon S3. To run SQL statements against databases, the function reads the database admin credentials stored in AWS Secrets Manager. Amazon RDS for SQL Server sends logs directly to the S3 bucket, whereas the other database engines send logs to CloudWatch log groups, from which Kinesis Data Firehose exports them to Amazon S3.

Audit Log Automation CloudFormation Template – The CloudFormation template consists of the following two resources:

RDS Instance Created EventBridge rule – This rule serves as the trigger mechanism for the RDS Audit Log API Lambda function, housed in the governance account. It activates whenever a new RDS instance or cluster is created within the workload account.

Create Firehose Lambda function – This Lambda function creates a Kinesis Data Firehose delivery stream in the workload account, enabling the streaming of audit logs from CloudWatch log groups to an S3 bucket. Activation of this function is triggered by the creation of a new log group following a specific naming pattern, as handled by the Enable Audit Log Lambda function.
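To make the engine-specific part of the Enable Audit Log function more concrete, here is a minimal sketch of the RDS for MySQL case mentioned above: adding the MARIADB_AUDIT_PLUGIN option to an option group and turning on the `audit` log export to CloudWatch Logs. It uses the Boto3 `modify_option_group` and `modify_db_instance` calls. The helper names and the default event list are assumptions, and the code is not taken from the solution's repository.

```python
from typing import Any, Dict


def build_mysql_audit_option(events: str = "CONNECT,QUERY") -> Dict[str, Any]:
    """Describe the audit plugin option for an RDS for MySQL option group."""
    return {
        "OptionName": "MARIADB_AUDIT_PLUGIN",
        "OptionSettings": [
            # Which activity classes the plugin records.
            {"Name": "SERVER_AUDIT_EVENTS", "Value": events},
        ],
    }


def enable_mysql_audit(
    rds: Any,
    db_instance_identifier: str,
    option_group_name: str,
    events: str = "CONNECT,QUERY",
) -> None:
    """Add the audit option and publish the audit log to CloudWatch Logs.

    Assumes the instance already uses a custom option group, because a
    default option group cannot be modified.
    """
    rds.modify_option_group(
        OptionGroupName=option_group_name,
        OptionsToInclude=[build_mysql_audit_option(events)],
        ApplyImmediately=True,
    )
    rds.modify_db_instance(
        DBInstanceIdentifier=db_instance_identifier,
        CloudwatchLogsExportConfiguration={"EnableLogTypes": ["audit"]},
        ApplyImmediately=True,
    )
```

Other engines would need their own branch (for example, parameter group changes for PostgreSQL), which is the kind of dispatch the function description above implies.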

In the following sections, we detail the two-step process to implement this solution in your environment.

Prerequisites

This solution requires a Lambda function to be deployed on the governance account to run audit enablement SQL queries in the RDS database instances hosted on the workload account. The following configuration should be in place for the RDS Audit Log API to function correctly:

The governance account’s VPC hosting the audit enablement Lambda function must have connectivity to all workload accounts’ VPCs hosting RDS database instances via VPC peering or AWS Transit Gateway.

All RDS database instances should have a security group that allows inbound connections on appropriate ports from the Lambda functions hosted on the governance account.
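The security group prerequisite can be illustrated with a short sketch that maps an RDS engine name to its default listener port and opens that port to the CIDR range of the governance account's Lambda subnets through `authorize_security_group_ingress`. The helper names and the use of a CIDR range are assumptions; instances configured with a non-default port would need the port read from the instance endpoint instead.

```python
from typing import Any, Dict

# Default listener ports, keyed by the prefix of the RDS engine name
# (for example "sqlserver-se" or "oracle-ee").
_DEFAULT_PORTS = (
    ("aurora-mysql", 3306),
    ("aurora-postgresql", 5432),
    ("mysql", 3306),
    ("mariadb", 3306),
    ("postgres", 5432),
    ("sqlserver", 1433),
    ("oracle", 1521),
)


def default_port(engine: str) -> int:
    """Return the default port for an RDS engine name."""
    for prefix, port in _DEFAULT_PORTS:
        if engine.startswith(prefix):
            return port
    raise ValueError(f"unsupported engine: {engine}")


def build_ingress_permission(engine: str, lambda_cidr: str) -> Dict[str, Any]:
    """Build one inbound rule for the governance Lambda subnets."""
    port = default_port(engine)
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [
            {"CidrIp": lambda_cidr, "Description": "RDS Audit Log API Lambda functions"}
        ],
    }


def allow_governance_lambda(
    ec2: Any, security_group_id: str, engine: str, lambda_cidr: str
) -> Any:
    """Add the inbound rule to the database security group."""
    return ec2.authorize_security_group_ingress(
        GroupId=security_group_id,
        IpPermissions=[build_ingress_permission(engine, lambda_cidr)],
    )
```

Here `ec2` would be `boto3.client("ec2")` in the workload account that owns the security group.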

Install the RDS Audit Log API

To install the RDS Audit Log API in your designated governance account, complete the following steps:

Make sure that both Docker and the Serverless Framework are installed in your deployment environment. Both are required to deploy this solution.

Clone the source code located in the GitHub repo.

Modify the deployment-config.yml file in the root folder to have the appropriate resource values for VPCs, security groups, subnets, and S3 bucket names.

Run the following command to install the API in your governance account:

Configure workload accounts

This step involves creating a CloudFormation StackSet with the provided Audit Log Automation CloudFormation template. Use the following command:
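As a rough Boto3 equivalent of that deployment, the sketch below creates a service-managed StackSet and then deploys stack instances to a set of OUs, using `create_stack_set` and `create_stack_instances`. The function name, the `GovernanceAccountId` parameter key, and the capability value are assumptions made for illustration; check the template in the repository for the parameters it actually expects.

```python
from typing import Any, Iterable


def deploy_stack_set(
    cfn: Any,
    stack_set_name: str,
    template_body: str,
    governance_account_id: str,
    ou_ids: Iterable[str],
    regions: Iterable[str],
) -> Any:
    """Create a StackSet and roll it out to the given OUs (illustrative)."""
    cfn.create_stack_set(
        StackSetName=stack_set_name,
        TemplateBody=template_body,
        Parameters=[
            # Hypothetical parameter key; the real template may differ.
            {
                "ParameterKey": "GovernanceAccountId",
                "ParameterValue": governance_account_id,
            }
        ],
        Capabilities=["CAPABILITY_NAMED_IAM"],
        # Service-managed permissions allow targeting OUs directly.
        PermissionModel="SERVICE_MANAGED",
        AutoDeployment={"Enabled": True, "RetainStacksOnAccountRemoval": False},
    )
    return cfn.create_stack_instances(
        StackSetName=stack_set_name,
        DeploymentTargets={"OrganizationalUnitIds": list(ou_ids)},
        Regions=list(regions),
    )
```

With auto-deployment enabled, accounts that later join the targeted OUs receive the stack without a separate rollout.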

Test the solution

To validate the functionality of the RDS audit log solution, use the following Boto3 code snippet. This code initiates the creation of an RDS for MySQL instance within a designated workload account. This action serves as the catalyst that triggers the audit log workflow.
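A minimal sketch of such a snippet, assuming a small test instance, could look like the following. The instance class, storage size, and master user name are illustrative assumptions. `ManageMasterUserPassword=True` asks RDS to keep the master password in AWS Secrets Manager, which matches how the Enable Audit Log function is described as obtaining credentials.

```python
from typing import Any, Dict


def build_test_instance_request(db_instance_identifier: str) -> Dict[str, Any]:
    """Build create_db_instance arguments for a small RDS for MySQL test instance."""
    return {
        "DBInstanceIdentifier": db_instance_identifier,
        "Engine": "mysql",
        "DBInstanceClass": "db.t3.micro",   # assumed size, adjust as needed
        "AllocatedStorage": 20,             # GiB
        "MasterUsername": "admin",          # assumed user name
        # Let RDS generate the password and store it in Secrets Manager.
        "ManageMasterUserPassword": True,
        "PubliclyAccessible": False,
    }


def create_test_instance(rds: Any, db_instance_identifier: str) -> Any:
    """Create the test instance in the workload account."""
    return rds.create_db_instance(
        **build_test_instance_request(db_instance_identifier)
    )
```

Call `create_test_instance(boto3.client("rds"), "audit-log-test")` with credentials for a workload account; the instance name is only an example.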

The following screenshot illustrates the successful initiation of the Step Functions state machine within the governance account. This activation facilitates the enabling of the audit log for the recently established database instance.

After the audit log is activated for the target database instance, the Step Functions state machine returns a success response as its output. This outcome confirms that audit logging was enabled successfully.

The following screenshot confirms the audit log activation completion by showing an option group’s association with the recently established database.
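The same check can be made programmatically. The sketch below, which is an illustration and not the solution's Validate Audit Log function, uses `describe_db_instances` and `describe_option_groups` to confirm that the instance has an in-sync option group containing the MARIADB_AUDIT_PLUGIN option. The function name is an assumption.

```python
from typing import Any


def audit_option_group_in_sync(rds: Any, db_instance_identifier: str) -> bool:
    """Return True if an in-sync option group on the instance has the audit plugin."""
    instance = rds.describe_db_instances(
        DBInstanceIdentifier=db_instance_identifier
    )["DBInstances"][0]

    for membership in instance.get("OptionGroupMemberships", []):
        # Skip option groups whose changes have not been applied yet.
        if membership["Status"] != "in-sync":
            continue
        group = rds.describe_option_groups(
            OptionGroupName=membership["OptionGroupName"]
        )["OptionGroupsList"][0]
        if any(
            option["OptionName"] == "MARIADB_AUDIT_PLUGIN"
            for option in group.get("Options", [])
        ):
            return True
    return False
```

A status other than `in-sync`, such as `pending-apply`, usually means the change is still waiting to be applied.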

The RDS for MySQL database has initiated the process of exporting its audit logs to the designated CloudWatch log groups. You can navigate to the log group on the CloudWatch console for details.
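If you prefer to check from code, the following sketch looks up the audit log group with `describe_log_groups`. It assumes the standard RDS log group naming of `/aws/rds/instance/<instance-id>/audit`; the helper names are illustrative, and the solution may use its own naming pattern.

```python
from typing import Any


def audit_log_group_name(db_instance_identifier: str) -> str:
    """Return the log group name RDS uses for an instance's audit log."""
    return f"/aws/rds/instance/{db_instance_identifier}/audit"


def audit_log_group_exists(logs: Any, db_instance_identifier: str) -> bool:
    """Return True once the audit log group has been created."""
    name = audit_log_group_name(db_instance_identifier)
    response = logs.describe_log_groups(logGroupNamePrefix=name)
    # The prefix search can return similarly named groups, so match exactly.
    return any(
        group["logGroupName"] == name
        for group in response.get("logGroups", [])
    )
```

Here `logs` would be `boto3.client("logs")` in the workload account and Region of the database instance.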

The log stream shows the details of the log events.

On the Amazon S3 console, you can confirm that Kinesis Data Firehose has successfully transferred logs from the CloudWatch log groups to an S3 bucket.

Cleanup

Follow these instructions to delete the resources created by this solution. Configure the Serverless Framework to point to the governance account and run the following command.

This command will remove the RDS Audit Log Custom API deployed on the governance account.

Run the following AWS CLI command against the Organization’s root account.

This command will remove the resources created in the workload accounts.

If you created any Amazon RDS instances for testing in the workload accounts, delete them as well.

Conclusion

GxP-compliant organizations must stay proactive in safeguarding their sensitive information in the ever-evolving landscape of data security and regulatory compliance. Automating the RDS security audit log export process is a practical and valuable step toward achieving secure GxP compliance. In this post, we presented a solution for automating audit logs using AWS serverless services. With this automation, organizations can achieve real-time log collection, accurate data analysis, and streamlined compliance reporting. As an alternative to this solution, you could use managed Database Activity Streams, for supported RDS databases, by integrating activity streams with your monitoring tool. With enhanced visibility into security events and threats, organizations can confidently focus on their core mission of delivering safe and high-quality products to consumers while maintaining the highest standards of data protection.

Try the solution, and if you have any questions or feedback about this post, submit them in the comments section.

About the Authors

Suresh Poopandi is a Senior Solutions Architect at AWS, based in Chicago, Illinois, helping Healthcare Life Science customers with their cloud journey by providing architectures utilizing AWS services to achieve their business goals. He is passionate about building home automation and AI/ML solutions.

Abhay Kumar is a Lead Cloud Consultant with Amazon Web Services based in Bengaluru, India. Abhay enables customers to modernize and migrate their application workloads to AWS to achieve their business goals.

Mansoor Khan is a Senior Solutions Architect at Amazon Web Services. He works with customers to achieve business outcomes by developing well-architected solutions, modernization strategies, and incorporating customer requirements as feedback to internal service teams.

Read more: AWS Database Blog