The Google Cloud CLI makes it quick and easy for engineers to start developing on Google Cloud Platform and to perform many common cloud tasks. Much of the initial development experience happens on the command line, using tools such as gsutil and gcloud, but getting that work into production typically requires writing boilerplate code or building an API-level integration.

Developers often need to run simple commands in their production environment on a schedule. To do this, they typically have to write code and create schedules in an orchestration tool such as Cloud Data Fusion or Cloud Composer.

One such scenario is copying objects from one bucket to another (for example, GCS to GCS or S3 to GCS), which is commonly done with gsutil. gsutil is a Python application for interacting with Google Cloud Storage from the command line. It handles a wide range of bucket and object management tasks, including creating and deleting buckets and uploading, downloading, deleting, copying, and moving objects.
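
To make the list of gsutil tasks concrete, here is a small Python sketch that maps each task to the gsutil command line you would run. The task names and bucket URLs are hypothetical placeholders; the subcommands (`mb`, `rb`, `cp`, `mv`, `rm`) and the `-m` (parallel) and `-r` (recursive) options are standard gsutil ones. An `s3://` source works the same way once AWS credentials are configured in gsutil's boto configuration file.

```python
from __future__ import annotations

import shlex


def gsutil_command(task: str, *urls: str) -> list[str]:
    """Return the gsutil argv for a common bucket or object task."""
    # Task names are hypothetical labels; the subcommands are standard gsutil ones.
    subcommands = {
        "create_bucket": ["mb"],        # gsutil mb gs://BUCKET
        "delete_bucket": ["rb"],        # gsutil rb gs://BUCKET (bucket must be empty)
        "upload": ["cp"],               # gsutil cp LOCAL_FILE gs://BUCKET
        "download": ["cp"],             # gsutil cp gs://BUCKET/OBJECT LOCAL_DIR
        "copy": ["-m", "cp", "-r"],     # -m runs transfers in parallel, -r recurses
        "move": ["mv"],                 # gsutil mv SRC_URL DST_URL
        "delete_object": ["rm"],        # gsutil rm gs://BUCKET/OBJECT
    }
    if task not in subcommands:
        raise ValueError(f"unknown task: {task!r}")
    return ["gsutil", *subcommands[task], *urls]


if __name__ == "__main__":
    # Bucket-to-bucket copy from GCS and from S3 (placeholder bucket names).
    for src in ("gs://source-bucket/*", "s3://source-bucket/*"):
        print(shlex.join(gsutil_command("copy", src, "gs://destination-bucket")))
```

gsutil expands cloud wildcards such as `gs://source-bucket/*` itself, so the argument can be passed without a shell.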

In this post, we describe a simple and efficient way to schedule commands such as gsutil using Cloud Run and Cloud Scheduler. This approach saves time and reduces the setup effort that building an API-level integration would otherwise require.

The complete source code for this solution is available in our GitHub repository.

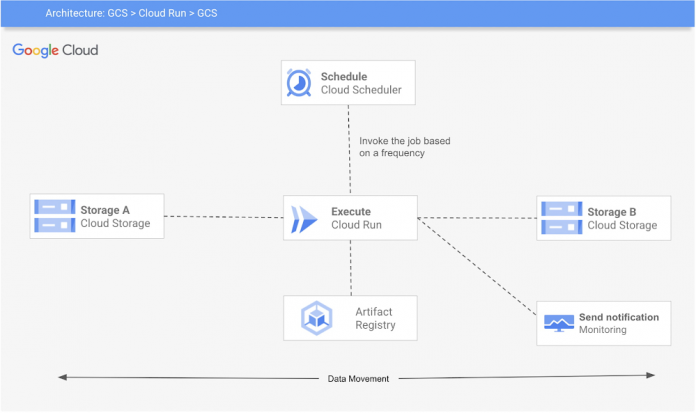

Here’s a look at the architecture of this process:

The solution uses three Google Cloud Platform (GCP) services:

- Cloud Run: The code is wrapped in a container with the gcloud SDK installed (alternatively, you can use a base image that already includes the gcloud SDK).
- Cloud Scheduler: A Cloud Scheduler job invokes the Cloud Run job on a recurring schedule.
- Cloud Storage: Google Cloud Storage (GCS) stores and retrieves any amount of data; here it holds the source and destination buckets.
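
What runs inside the Cloud Run container can be as small as a single gsutil call. The sketch below is a hypothetical Python entrypoint, not the code from the repository: it assumes the job receives bucket names in `GCS_SOURCE` and `GCS_DESTINATION` environment variables and that gsutil is on the image's `PATH` (as it is in a Google Cloud CLI base image). Returning gsutil's exit status matters because Cloud Run treats a task that exits non-zero as failed.

```python
"""Hypothetical entrypoint for a gcs-to-gcs Cloud Run job (sketch)."""
from __future__ import annotations

import os
import subprocess
import sys
from typing import Callable, Mapping, Optional, Sequence


def build_copy_command(source: str, destination: str) -> list[str]:
    # gsutil expands the cloud wildcard itself, so no shell is needed.
    return ["gsutil", "-m", "cp", "-r", f"gs://{source}/*", f"gs://{destination}"]


def _run(argv: Sequence[str]) -> int:
    # Output goes to stdout/stderr, which Cloud Run forwards to Cloud Logging.
    return subprocess.run(list(argv)).returncode


def main(env: Optional[Mapping[str, str]] = None,
         run: Callable[[Sequence[str]], int] = _run) -> int:
    env = os.environ if env is None else env
    missing = [k for k in ("GCS_SOURCE", "GCS_DESTINATION") if not env.get(k)]
    if missing:
        print(f"missing environment variables: {', '.join(missing)}", file=sys.stderr)
        return 2
    argv = build_copy_command(env["GCS_SOURCE"], env["GCS_DESTINATION"])
    print("running:", " ".join(argv))
    # A non-zero exit code makes Cloud Run mark the task as failed.
    return run(argv)


if __name__ == "__main__":
    sys.exit(main())
```

Injecting `env` and `run` keeps the logic testable without calling gsutil.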

This example requires you to set up your environment for Cloud Run and Cloud Scheduler, package the code into a container image, upload the image to Container Registry, create a Cloud Run job from that image, and schedule the job. You can also build monitoring and alerting for the job. Follow the steps below:

Step 1: Enable services (Cloud Scheduler, Cloud Run) and create a service account

To deploy a Cloud Run service or job that runs as a user-managed service account, you must have permission to act as (iam.serviceAccounts.actAs) that service account. You can grant this permission through the roles/iam.serviceAccountUser IAM role.
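
Step 1 can be expressed as three gcloud commands. This Python sketch only builds and prints them; the project ID, service account name, and deployer email are placeholders you would replace. It enables the Cloud Run and Cloud Scheduler APIs, creates the service account the job will run as, and grants the deploying user roles/iam.serviceAccountUser on that account.

```python
from __future__ import annotations

import shlex


def step1_commands(project_id: str, sa_name: str, deployer: str) -> list[list[str]]:
    """Enable the APIs, create the job's service account, and let the
    deployer act as it (roles/iam.serviceAccountUser grants actAs)."""
    sa_email = f"{sa_name}@{project_id}.iam.gserviceaccount.com"
    return [
        ["gcloud", "services", "enable", "run.googleapis.com",
         "cloudscheduler.googleapis.com", f"--project={project_id}"],
        ["gcloud", "iam", "service-accounts", "create", sa_name,
         f"--project={project_id}"],
        ["gcloud", "iam", "service-accounts", "add-iam-policy-binding", sa_email,
         f"--member=user:{deployer}", "--role=roles/iam.serviceAccountUser",
         f"--project={project_id}"],
    ]


if __name__ == "__main__":
    # Placeholder values; substitute your own project and accounts.
    for argv in step1_commands("my-project", "gsutil-job-sa", "dev@example.com"):
        print(shlex.join(argv))
```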

Step 2: Build a Docker image and push it to Container Registry (GCR). Navigate to the gcs-to-gcs folder, then build and push the image.
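
A minimal sketch of Step 2, assuming a `gcr.io/PROJECT_ID/gcs-to-gcs:latest` image path (the project ID and tag are placeholders). `gcloud auth configure-docker` registers gcloud as a Docker credential helper for the gcr.io hosts, after which `docker build` and `docker push` work as usual from the gcs-to-gcs folder.

```python
from __future__ import annotations

import shlex


def step2_commands(project_id: str, image: str = "gcs-to-gcs",
                   tag: str = "latest") -> list[list[str]]:
    """Build the image from the current folder and push it to GCR."""
    ref = f"gcr.io/{project_id}/{image}:{tag}"
    return [
        ["gcloud", "auth", "configure-docker"],  # lets docker authenticate to gcr.io
        ["docker", "build", "-t", ref, "."],     # run from the gcs-to-gcs folder
        ["docker", "push", ref],
    ]


if __name__ == "__main__":
    for argv in step2_commands("my-project"):
        print(shlex.join(argv))
```

Alternatively, `gcloud builds submit --tag IMAGE_REF` builds and pushes in one step with Cloud Build. Container Registry is now deprecated in favor of Artifact Registry, so for new projects you may prefer an image path of the form `REGION-docker.pkg.dev/PROJECT_ID/REPOSITORY/gcs-to-gcs`.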

Step 3: Create a Cloud Run job from the gcs-to-gcs image, setting GCS_SOURCE and GCS_DESTINATION to the source and destination buckets. Make sure the job's service account has roles/storage.legacyObjectReader on the GCS_SOURCE bucket and roles/storage.legacyBucketWriter on the GCS_DESTINATION bucket.
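
The sketch below builds the Step 3 commands: two bucket IAM bindings for the job's service account, then `gcloud run jobs create`. It assumes GCS_SOURCE and GCS_DESTINATION are passed as environment variables holding bucket names; the job name, region, image reference, and service account are placeholders. Note that roles/storage.legacyObjectReader grants object reads but not object listing, so if the job lists the source bucket (as a wildcard copy does), it may also need roles/storage.legacyBucketReader on that bucket.

```python
from __future__ import annotations

import shlex


def step3_commands(project_id: str, region: str, job: str, image_ref: str,
                   sa_email: str, source_bucket: str, dest_bucket: str) -> list[list[str]]:
    """Grant bucket access to the job's service account, then create the job."""
    member = f"--member=serviceAccount:{sa_email}"
    return [
        ["gcloud", "storage", "buckets", "add-iam-policy-binding",
         f"gs://{source_bucket}", member, "--role=roles/storage.legacyObjectReader"],
        ["gcloud", "storage", "buckets", "add-iam-policy-binding",
         f"gs://{dest_bucket}", member, "--role=roles/storage.legacyBucketWriter"],
        # Assumption: the container reads its buckets from these environment variables.
        ["gcloud", "run", "jobs", "create", job, f"--image={image_ref}",
         f"--region={region}", f"--service-account={sa_email}",
         f"--set-env-vars=GCS_SOURCE={source_bucket},GCS_DESTINATION={dest_bucket}",
         f"--project={project_id}"],
    ]


if __name__ == "__main__":
    for argv in step3_commands("my-project", "us-central1", "gcs-to-gcs",
                               "gcr.io/my-project/gcs-to-gcs:latest",
                               "gsutil-job-sa@my-project.iam.gserviceaccount.com",
                               "source-bucket", "destination-bucket"):
        print(shlex.join(argv))
```

Before scheduling, you can run the job once by hand with `gcloud run jobs execute JOB_NAME --region=REGION --wait` to confirm the copy succeeds.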

Step 4: Create a Cloud Scheduler job that runs the Cloud Run job on a schedule.
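
One way to do Step 4 is an HTTP Cloud Scheduler job that POSTs to the Cloud Run Admin API's `jobs/JOB_NAME:run` endpoint, authenticating with an OAuth token for a service account. This sketch builds that URI and the `gcloud scheduler jobs create http` command; all names are placeholders, and the service account needs permission to run the job (for example, roles/run.invoker). The schedule uses unix-cron syntax and is interpreted in Etc/UTC unless you pass `--time-zone`.

```python
from __future__ import annotations

import shlex


def run_job_uri(project_id: str, region: str, job: str) -> str:
    """Cloud Run Admin API (v1) endpoint that starts an execution of a job."""
    return (f"https://{region}-run.googleapis.com/apis/run.googleapis.com/v1/"
            f"namespaces/{project_id}/jobs/{job}:run")


def step4_command(project_id: str, region: str, job: str, scheduler_job: str,
                  schedule: str, sa_email: str) -> list[str]:
    return ["gcloud", "scheduler", "jobs", "create", "http", scheduler_job,
            f"--location={region}", f"--schedule={schedule}",
            f"--uri={run_job_uri(project_id, region, job)}", "--http-method=POST",
            f"--oauth-service-account-email={sa_email}", f"--project={project_id}"]


if __name__ == "__main__":
    # "0 2 * * *" runs the copy every day at 02:00.
    print(shlex.join(step4_command(
        "my-project", "us-central1", "gcs-to-gcs", "gcs-to-gcs-daily", "0 2 * * *",
        "gsutil-job-sa@my-project.iam.gserviceaccount.com")))
```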

Step 5: Set up monitoring and alerting to detect failed Cloud Run job executions.

Cloud Run integrates with Cloud Monitoring automatically, with no setup or configuration required, so metrics for your Cloud Run services and jobs are captured while they run.

You can view these metrics either in Cloud Monitoring or on the Cloud Run page of the Google Cloud console; Cloud Monitoring offers more charting and filtering options. Follow these steps to create and view metrics on Cloud Run.
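
For the alerting half of Step 5, a threshold alert policy can fire whenever a job execution fails. The Python sketch below builds such a policy as JSON; the metric type `run.googleapis.com/job/completed_execution_count`, its `result` label, and the `cloud_run_job` resource labels are assumptions to verify against the Cloud Run metrics list before use, and the job name and notification channel are placeholders.

```python
from __future__ import annotations

import json


def failed_execution_alert(job: str, channel: str | None = None) -> dict:
    """Alert policy that fires when a Cloud Run job reports a failed execution."""
    # Assumption: metric, resource, and label names below must be checked
    # against the Cloud Run metrics documentation.
    metric_filter = (
        'resource.type = "cloud_run_job" '
        f'AND resource.labels.job_name = "{job}" '
        'AND metric.type = "run.googleapis.com/job/completed_execution_count" '
        'AND metric.labels.result = "failed"'
    )
    policy = {
        "displayName": f"Cloud Run job {job} failed",
        "combiner": "OR",
        "conditions": [{
            "displayName": "Failed executions > 0",
            "conditionThreshold": {
                "filter": metric_filter,
                "comparison": "COMPARISON_GT",
                "thresholdValue": 0,
                "duration": "0s",
                # Count failures per 5-minute window.
                "aggregations": [{"alignmentPeriod": "300s",
                                  "perSeriesAligner": "ALIGN_DELTA"}],
            },
        }],
    }
    if channel:
        policy["notificationChannels"] = [channel]
    return policy


if __name__ == "__main__":
    with open("policy.json", "w") as f:
        json.dump(failed_execution_alert("gcs-to-gcs"), f, indent=2)
```

The resulting file can then be loaded with `gcloud alpha monitoring policies create --policy-from-file=policy.json`, or the same settings can be entered in the Cloud Monitoring alerting UI.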

The steps in this post present a simple way to run commonly used, developer-friendly CLI commands on a schedule in a production setup. The code and example are easy to adapt and avoid the need for an API-level integration to schedule commands such as gsutil and gcloud.

Cloud Blog