Editor’s Note: Established in 2021, Character.ai is a pioneer in the design and development of open-ended conversational applications. The platform uses an advanced neural language model to generate human-like text responses and hold contextually relevant conversations. Character.ai relies on Google Cloud’s portfolio of managed databases, including AlloyDB for PostgreSQL and Spanner, as a solid foundation for its platform, providing reliability, scalability, and price performance for workloads ranging from engagement and operations to AI and analytics. Google Cloud’s integrated environment lets these services work together to serve users as they engage with their favorite characters.

Do you ever wish you could sit down and talk physics with Albert Einstein or playwriting with William Shakespeare? With Character.ai, you can experience the next best thing.

At Character.ai, we’re on a mission to deliver lifelike interactions using artificial intelligence (AI). Our platform lets users hold believable conversations with their favorite characters. Characters can be inspired by historical figures such as Abraham Lincoln, real people such as Taylor Swift, or fictional personas such as Wonder Woman, among many others. Users can also join dynamic group chats in which multiple characters converse with the user and with one another. Character.ai makes this possible with neural language models that learn from extensive text data and generate intelligent responses based on that knowledge.

Soaring growth requires a scalable solution

We’re a digital-native business end to end, from training our distinctive models on supercomputers to delivering our service as a web-based chatbot. That, combined with the growing momentum of the generative AI market, means we must be able to scale and modernize without missing a beat.

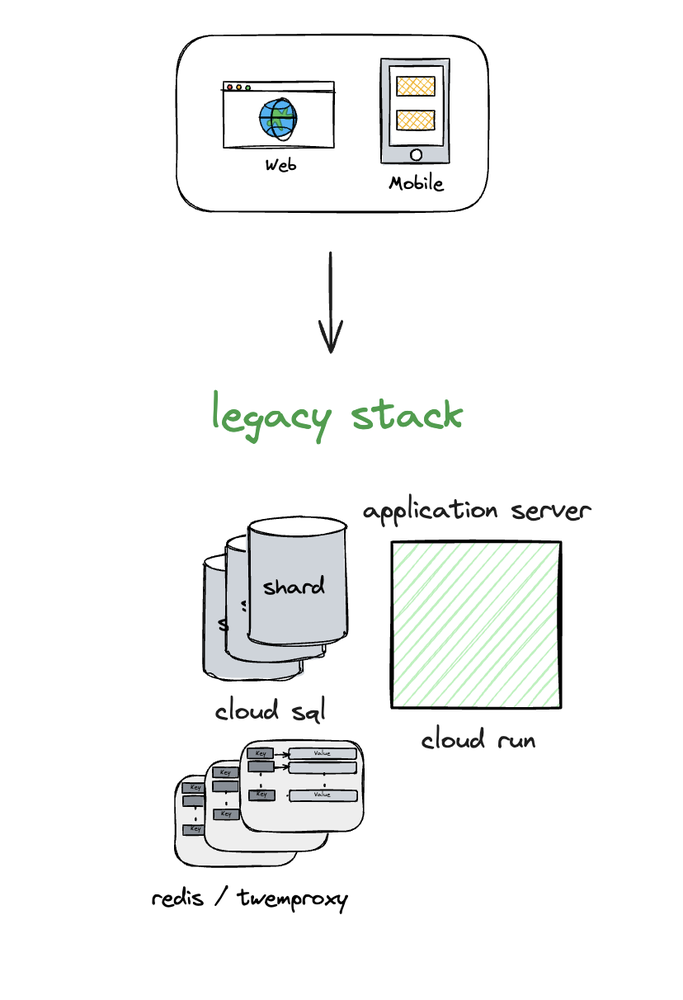

The database layer of our application stores data essential to the platform’s functionality. Since we released our first beta model in September 2022, Character.ai’s popularity has soared, causing database load to grow exponentially, and that growth shows no signs of slowing down. Consequently, we needed to scale our operations, and fast. The database we had initially been using was limited both by the maximum capacity of a single instance and by how many smaller instances we could patch together to distribute the workload. We didn’t have the resources to refactor onto a more scalable database engine within our timeframe, so we needed a solution that could deliver immediate scale and performance gains without extensive code changes.

Figure 1: Previous architecture

Enhancing data performance with Google Cloud and AlloyDB

As an AI company, we depend on processing large amounts of data efficiently, both for time to market and for our ability to build differentiated algorithms. That’s why we were initially drawn to Google Cloud’s distinctive Tensor Processing Units (TPUs) and to graphics processing units (GPUs) such as NVIDIA L4 GPUs. Then, as we prototyped our service and started building our consumer application, Google Cloud’s managed database solutions became critical in helping us scale our applications with a skeleton crew.

When we found AlloyDB for PostgreSQL, we were stuck between a rock and a hard place. Usage of our service had grown exponentially, putting unusual stress on many parts of our infrastructure, especially our databases. At first, we met the increased demand by scaling up to larger machines, but within a few weeks even the largest machines couldn’t serve our customer demand reliably, and we were running out of headroom. Under that time pressure, we needed a solution we could deploy in days. Major refactoring, such as moving to a sharded architecture or a proprietary database engine, was out of the question. AlloyDB promised better performance and higher scalability with full PostgreSQL compatibility, but could we migrate in the required timeframe?

Achieving 150% growth with AlloyDB’s increased scalability

To simplify the migration, we chose a replication strategy. We ran two replication sets from the source database to the destination AlloyDB database in change data capture (CDC) mode for 10 days, which gave us time to prepare our environment for the cutover. As a precaution, we also provisioned a fallback instance on the source side in case we needed to roll back the migration. The migration went smoothly and required no application code changes, thanks to AlloyDB’s full PostgreSQL compatibility.
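A cutover like this usually ends with a go/no-go check. The sketch below is a hypothetical illustration of such a gate, not Character.ai’s actual procedure: the `ReplicationStatus` fields, the one-second lag threshold, and the row-count comparison are all assumptions. It models the two CDC replication sets and the fallback instance described above and refuses the cutover if any set is lagging or out of sync.

```python
from dataclasses import dataclass


@dataclass
class ReplicationStatus:
    """Snapshot of one CDC replication set (hypothetical fields)."""

    name: str
    lag_seconds: float  # how far the AlloyDB destination trails the source
    source_rows: int  # row count sampled on the source database
    destination_rows: int  # row count sampled on AlloyDB


def ready_for_cutover(sets, max_lag_seconds=1.0, fallback_ready=True):
    """Return (ok, reasons) for a go/no-go cutover decision.

    Assumes application writes to the source are paused, so replication lag
    should drain toward zero and row counts should match exactly.
    """
    reasons = []
    if not fallback_ready:
        reasons.append("fallback instance is not provisioned")
    for s in sets:
        if s.lag_seconds > max_lag_seconds:
            reasons.append(f"{s.name}: lag {s.lag_seconds}s exceeds {max_lag_seconds}s")
        if s.source_rows != s.destination_rows:
            reasons.append(
                f"{s.name}: row counts differ ({s.source_rows} vs {s.destination_rows})"
            )
    return (not reasons, reasons)


if __name__ == "__main__":
    statuses = [
        ReplicationStatus("set-a", 0.2, 1_000, 1_000),
        ReplicationStatus("set-b", 0.4, 2_500, 2_500),
    ]
    ok, reasons = ready_for_cutover(statuses)
    print(ok, reasons)
```

Returning the list of reasons, rather than a bare boolean, makes a failed check actionable: the operator sees which replication set is behind before deciding whether to wait or roll back to the fallback.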

Since migrating to AlloyDB, we’ve been able to confidently route read traffic to read pools so that we can keep pace with growing user activity. Because AlloyDB’s replication lag is consistently under 100 milliseconds, reads served from the pools are nearly as fresh as reads from the primary, which lets us scale reads to 20 times our previous capacity. This has allowed us to absorb a surge in demand and process a larger volume of queries, increasing queries processed per second by 150%, to 2.5 times the previous rate.
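In a Django application like the monolith described later in this post, one common way to send reads to a read pool is a database router. The sketch below assumes two hypothetical connection aliases, `default` for the AlloyDB primary and `read_pool` for a read pool endpoint; the hosts are placeholders, and the source doesn’t say how Character.ai actually routes queries. The method names follow Django’s documented router interface, so the class needs no Django import to define.

```python
# Hypothetical connection settings; hosts and aliases are placeholders.
# In a Django project this dict would live in settings.py as DATABASES.
DATABASES = {
    "default": {  # AlloyDB primary instance: all writes go here
        "ENGINE": "django.db.backends.postgresql",
        "HOST": "10.0.0.2",
        "NAME": "app",
    },
    "read_pool": {  # AlloyDB read pool endpoint, load-balanced across nodes
        "ENGINE": "django.db.backends.postgresql",
        "HOST": "10.0.0.3",
        "NAME": "app",
    },
}


class PrimaryReadPoolRouter:
    """Django database router: writes to the primary, reads to the read pool."""

    def db_for_read(self, model, **hints):
        return "read_pool"

    def db_for_write(self, model, **hints):
        return "default"

    def allow_relation(self, obj1, obj2, **hints):
        # Both aliases hold the same replicated data, so relations are safe.
        return True

    def allow_migrate(self, db, app_label, model_name=None, **hints):
        # Run schema migrations only on the primary; the pool gets them via replication.
        return db == "default"


# settings.py would register the router by dotted path, for example:
# DATABASE_ROUTERS = ["myproject.routers.PrimaryReadPoolRouter"]
```

Because replicas trail the primary slightly, code that must read a row it has just written can pin that query to the primary with Django’s `Model.objects.using("default")`.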

As a direct result, our service has improved markedly, delivering an exceptional user experience with outstanding uptime. With AlloyDB’s full PostgreSQL compatibility and low-lag read pools, we had a robust foundation to continue scaling. But we didn’t stop there.

Scaling our infrastructure with AlloyDB and Spanner

We knew that at our current rate of growth, we would eventually run into scaling issues with our original monolithic architecture. We also wanted headroom to scale toward 1 billion daily active users.

To address this, we identified the fastest-growing part of our Django monolith and refactored it into a standalone microservice. This let us isolate that part’s growth and manage it independently of the rest of the monolith, which we had already migrated to AlloyDB.
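Extracting one piece of a monolith at a time is often called the strangler fig pattern: a routing layer sends the extracted paths to the new service and everything else to the monolith. The source doesn’t describe how Character.ai split its traffic, so the sketch below is a minimal illustration under assumptions; the `/api/chat/` prefix and both upstream URLs are hypothetical.

```python
# Hypothetical route table for a strangler-fig extraction: request paths
# under these prefixes are served by the new microservice; everything else
# still goes to the Django monolith. Prefixes and URLs are placeholders.
EXTRACTED_PREFIXES = ("/api/chat/",)
MONOLITH_URL = "http://django-monolith.internal"
CHAT_SERVICE_URL = "http://chat-service.internal"


def upstream_for(path: str) -> str:
    """Return the backend base URL that should serve this request path."""
    # str.startswith accepts a tuple, so extracting another area later
    # only means adding its prefix to EXTRACTED_PREFIXES.
    if path.startswith(EXTRACTED_PREFIXES):
        return CHAT_SERVICE_URL
    return MONOLITH_URL


if __name__ == "__main__":
    for p in ("/api/chat/42/messages", "/api/profile/7"):
        print(p, "->", upstream_for(p))
```

The trailing slash in the prefix matters: it keeps an unrelated path such as `/api/chatrooms` on the monolith.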

AlloyDB now plays a crucial role in powering the system of engagement, particularly the frontend chat. There, real-time performance is vital for a responsive user interface, and the data needs the highest levels of consistency and availability while the user interacts with the chatbot.

However, that interactivity is mostly ephemeral, and profile and reference data are relatively small and bounded by our business model (for example, one profile per user). Chat history, by contrast, grows with every message. With this in mind, we refactored the second piece, the chat stack, into a microservice written in Go and backed it with Spanner. Spanner’s industry-leading high availability and virtually unlimited scale allowed us to significantly improve the scalability and performance of the refactored chat stack.

Spanner now powers the system of record for chat history. The frontend sends each chat request to the backend, where it is recorded and forwarded to the AI model. The backend then asynchronously receives the model’s response, logs it, and sends it back to the frontend for the user. In this two-way flow, both databases work together behind the frontend chat to deliver the highest levels of data consistency and availability and a great experience for our end users. With Spanner, we now process terabytes of data daily without worrying about site stability.
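The request flow above can be sketched as follows. The real service is written in Go and backed by Spanner; this Python sketch swaps in a hypothetical in-memory `ChatHistoryStore` and a placeholder `call_model` coroutine, purely to show the ordering: record the request, await the model asynchronously, log the response, and return it.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class ChatHistoryStore:
    """In-memory stand-in for the Spanner chat-history table (hypothetical).

    Each chat maps to a list of (sequence, role, text) rows, mirroring a
    table keyed by (chat_id, sequence).
    """

    chats: dict = field(default_factory=dict)

    def append(self, chat_id: str, role: str, text: str) -> int:
        messages = self.chats.setdefault(chat_id, [])
        seq = len(messages)
        messages.append((seq, role, text))
        return seq

    def history(self, chat_id: str) -> list:
        return list(self.chats.get(chat_id, []))


async def call_model(prompt: str) -> str:
    """Placeholder for the model-serving call; sleeps to simulate latency."""
    await asyncio.sleep(0.01)
    return f"echo: {prompt}"


async def handle_chat_request(store: ChatHistoryStore, chat_id: str, user_text: str) -> str:
    # 1. Record the incoming message in the system of record.
    store.append(chat_id, "user", user_text)
    # 2. Await the model's reply; other chats keep running meanwhile.
    reply = await call_model(user_text)
    # 3. Log the reply, then hand it back to the frontend.
    store.append(chat_id, "character", reply)
    return reply


async def main() -> None:
    store = ChatHistoryStore()
    replies = await asyncio.gather(
        handle_chat_request(store, "chat-1", "Hello, Einstein"),
        handle_chat_request(store, "chat-2", "Hello, Shakespeare"),
    )
    print(replies)
    print(store.history("chat-1"))


if __name__ == "__main__":
    asyncio.run(main())
```

Writing the user’s message before calling the model means the request survives in the system of record even if the model call fails. In a Spanner-backed version, the two `append` calls would become writes to the chat-history table.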

We’ve future-proofed our chat application and are confident that it can handle any spikes in user activity. We’ve also reduced our operational costs by moving to a managed database service. Right now, our biggest cost is our opportunity cost.

We are continuing to evolve our architecture as we grow, looking for ways to improve scalability, performance, and reliability. We believe that an architecture that draws on the strengths of both AlloyDB and Spanner can meet our users’ needs and handle our growth, which is currently projected at 10x over the next 12 months.
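To put that projection in monthly terms, assume (as a simplification the source doesn’t state) that growth compounds evenly month over month at a rate $r$. Then 10x in 12 months implies roughly 21% growth per month, or a doubling about every three and a half months:

```latex
(1 + r)^{12} = 10
\;\Longrightarrow\;
r = 10^{1/12} - 1 \approx 0.212,
\qquad
t_{2\times} = \frac{\ln 2}{\ln(1 + r)} \approx \frac{0.693}{0.192} \approx 3.6 \text{ months}
```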

Figure 2: Current architecture

Fueling Character.ai’s journey with Google Cloud

As Google Cloud customers, we use a variety of products and services, from infrastructure such as VMs, Kubernetes, TPUs, and GPUs to managed databases and analytics services including Cloud SQL, AlloyDB, Spanner, Datastream, and BigQuery. Together, Google Cloud’s infrastructure and managed services form a coherent set of tools designed to work efficiently together and provide unmatched scale and resilience.

But we’re not done: we have ambitious growth plans for Character.ai. In the short term, AlloyDB will enable us to meet current demand without the outages that lock-wait spikes so often cause in traditional databases. In the long term, we aim to scale our platform to become the largest consumer media company in the world, serving over 1 billion daily active users (DAU).

Figure 3: Aspirational architecture

For more, discover how Google Cloud’s AlloyDB, Spanner and other managed services help us deliver an exceptional and reliable experience to millions of users across countless use cases, from gaming and entertainment to life coaching and more.

Ready to get started?

Check out Google Cloud’s portfolio of managed services, offering industry-leading reliability, global scale, and open standards to any size organization. Find out more about AlloyDB and start a free trial today. Get started with a 90-day Spanner free trial instance.
