As AI adoption accelerates across the industry, customers are building sophisticated models that take advantage of new scientific breakthroughs in deep learning. These next-generation models allow you to achieve state-of-the-art, human-like performance in natural language processing (NLP), computer vision, speech recognition, medical research, cybersecurity, protein structure prediction, and many other fields. For instance, large language models like GPT-3, OPT, and BLOOM can translate, summarize, and write text with human-like nuances. In the computer vision space, text-to-image diffusion models like DALL-E and Imagen can create photorealistic images from natural language, drawing on a higher level of visual and language understanding of the world around us. These multi-modal models provide richer features for various downstream tasks, can be fine-tuned for specific domains, and bring powerful business opportunities to our customers.

These deep learning models keep growing in size and typically contain billions of parameters to scale model performance across a wide variety of tasks, such as image generation, text summarization, and language translation. There is also a need to customize these models to deliver a hyper-personalized experience to individuals. As a result, a growing number of models are being developed by fine-tuning these base models for various downstream tasks. To meet the latency and throughput goals of AI applications, GPU instances are preferred over CPU instances because of the computational power GPUs offer. However, GPU instances are expensive, and costs can add up if you’re deploying more than 10 models. Although these models can power impactful AI applications, scaling them cost-effectively can be challenging because of their size and number.

Amazon SageMaker multi-model endpoints (MMEs) provide a scalable and cost-effective way to deploy a large number of deep learning models. MMEs are a popular choice for hosting hundreds of CPU-based models among customers like Zendesk, Veeva, and AT&T. Previously, you had limited options for deploying hundreds of deep learning models that needed accelerated compute with GPUs. Today, we announce MME support for GPU. Now you can deploy thousands of deep learning models behind one SageMaker endpoint. MMEs can now run multiple models on a GPU core, share GPU instances behind an endpoint across multiple models, and dynamically load and unload models based on incoming traffic. With this, you can significantly reduce cost and achieve the best price performance.

In this post, we show how to run multiple deep learning models on GPU with SageMaker MMEs.

SageMaker MMEs

SageMaker MMEs enable you to deploy multiple models behind a single inference endpoint that may contain one or more instances. With MMEs, each instance is managed to load and serve multiple models. MMEs enable you to break the linearly increasing cost of hosting multiple models and reuse infrastructure across all the models.

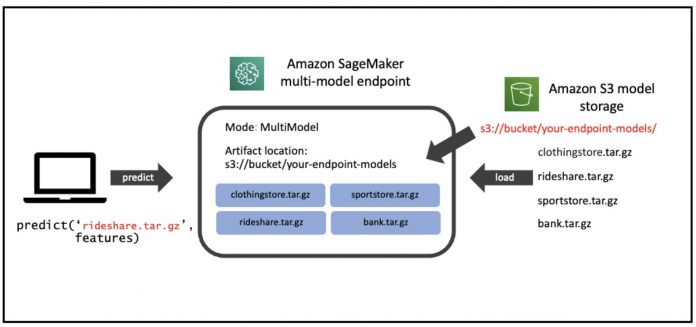

The following diagram illustrates the architecture of a SageMaker MME.

The SageMaker MME dynamically downloads models from Amazon Simple Storage Service (Amazon S3) when invoked, instead of downloading all the models when the endpoint is first created. As a result, an initial invocation to a model might see higher inference latency than the subsequent inferences, which are completed with low latency. If the model is already loaded on the container when invoked, then the download and load step is skipped and the model returns the inferences with low latency. For example, assume you have a model that is only used a few times a day. It is automatically loaded on demand, whereas frequently accessed models are retained in memory and invoked with consistently low latency.

SageMaker MMEs with GPU support

SageMaker MMEs with GPU work using NVIDIA Triton Inference Server. NVIDIA Triton Inference Server is open-source inference serving software that simplifies the inference serving process and provides high inference performance. Triton supports all major training and inference frameworks, such as TensorFlow, NVIDIA® TensorRT, PyTorch, MXNet, Python, ONNX, XGBoost, Scikit-learn, RandomForest, OpenVINO, custom C++, and more. It offers dynamic batching, concurrent runs, post-training quantization, and optimal model configuration to achieve high-performance inference. Additionally, NVIDIA Triton Inference Server has been extended to implement the MME API contract so that it integrates with MMEs.

The following diagram illustrates an MME workflow.

The workflow steps are as follows:

The SageMaker MME receives an HTTP invocation request for a particular model using TargetModel in the request along with the payload.

SageMaker routes traffic to the right instance behind the endpoint where the target model is loaded. SageMaker understands the traffic pattern across all the models behind the MME and smartly routes requests.

SageMaker takes care of model management behind the endpoint: it dynamically loads models into the container’s memory and unloads them from the shared fleet of GPU instances as needed to give the best price performance.

SageMaker dynamically downloads models from Amazon S3 to the instance’s storage volume. If the invoked model isn’t available on the instance storage volume, the model is downloaded onto the instance storage volume. If the instance storage volume reaches capacity, SageMaker deletes any unused models from the storage volume.

SageMaker loads the model into the NVIDIA Triton container’s memory on a GPU-accelerated instance and serves the inference request. The GPU core is shared by all the models in an instance. If the model is already loaded in the container memory, subsequent requests are served faster because SageMaker doesn’t need to download and load it again.

SageMaker takes care of traffic shaping to the MME endpoint and maintains optimal model copies on GPU instances for best price performance. It continues to route traffic to the instance where the model is loaded. If the instance resources reach capacity due to high utilization, SageMaker unloads the least-used models from the container to free up resources to load more frequently used models.

SageMaker MMEs can horizontally scale using an auto scaling policy, and provision additional GPU compute instances based on metrics such as invocations per instance and GPU utilization to serve any traffic surge to MME endpoints.
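The load, reuse, and unload behavior in the workflow steps above can be pictured with a small toy cache. This is only an illustrative sketch and not SageMaker's implementation: the `ModelCache` class, its `capacity` limit, and the least-recently-used eviction rule are assumptions chosen to keep the example simple.

```python
from collections import OrderedDict


class ModelCache:
    """Toy model cache: loads on first use, evicts the least recently used."""

    def __init__(self, capacity):
        self.capacity = capacity      # hypothetical limit on loaded models
        self.loaded = OrderedDict()   # model name -> stand-in for a loaded model

    def invoke(self, model_name):
        """Return "hit" if the model was already loaded, otherwise "miss"."""
        if model_name in self.loaded:
            self.loaded.move_to_end(model_name)  # mark as most recently used
            return "hit"
        if len(self.loaded) >= self.capacity:
            self.loaded.popitem(last=False)      # unload least recently used
        self.loaded[model_name] = object()       # stand-in for download + load
        return "miss"


cache = ModelCache(capacity=2)
results = [cache.invoke(name) for name in ["a", "b", "a", "c", "b"]]
print(results)
```

The first request for each model is a miss (it pays the download and load cost), a repeated request is a hit, and a model that was evicted to make room must be loaded again on its next request.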

Solution overview

In this post, we show you how to use the new features of SageMaker MMEs with GPU with a computer vision use case. For demonstration purposes, we use a ResNet-50 convolutional neural network pre-trained model that can classify images into 1,000 categories. We discuss how to do the following:

Use an NVIDIA Triton inference container on SageMaker MMEs, using different Triton model framework backends such as PyTorch and TensorRT

Convert ResNet-50 models to optimized TensorRT engine format and deploy them with a SageMaker MME

Set up auto scaling policies for the MME

Get insights into instance and invocation metrics using Amazon CloudWatch

Create model artifacts

This section walks through the steps to prepare a ResNet-50 pre-trained model to be deployed on a SageMaker MME using Triton Inference Server model configurations. You can reproduce all the steps using the step-by-step notebook on GitHub.

For this post, we demonstrate deployment with two models. However, you can prepare and deploy hundreds of models. The models may or may not share the same framework.

Prepare a PyTorch model

First, we load a pre-trained ResNet-50 model using the torchvision models package. We save the model as a model.pt file in TorchScript optimized and serialized format. TorchScript tracing runs a forward pass of the eager-mode ResNet-50 model with example inputs and records the operations, so we pass one instance of an RGB image with three color channels of dimension 224 x 224.
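A minimal sketch of this export step might look like the following. It assumes PyTorch and torchvision 0.13 or later (for the `ResNet50_Weights` enum); the function name `export_torchscript` is hypothetical, and the imports are deferred so the helper can be defined on a machine without PyTorch.

```python
INPUT_SHAPE = (1, 3, 224, 224)  # one RGB image: batch, channels, height, width


def export_torchscript(output_path="model.pt"):
    """Trace a pre-trained ResNet-50 and save it in TorchScript format."""
    # Imported lazily so this module can be loaded without PyTorch installed.
    import torch
    from torchvision import models

    # Assumption: torchvision >= 0.13, which provides the weights enums.
    model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)
    model.eval()  # inference behavior for batch norm layers

    example = torch.randn(*INPUT_SHAPE)       # random stand-in for an image
    traced = torch.jit.trace(model, example)  # records one forward pass
    traced.save(output_path)
    return output_path
```

Calling `export_torchscript()` downloads the pre-trained weights on first use and writes `model.pt` to the current directory.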

Then we need to prepare the models for Triton Inference Server. The following code shows the model repository for the PyTorch framework backend. Triton uses the model.pt file placed in the model repository to serve predictions.
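As a rough illustration, the layout Triton expects is a directory named after the model, containing a numbered version subdirectory that holds the model file, with config.pbtxt placed next to the version directory. The sketch below builds that layout with a placeholder file; the directory name `triton-serve-pt` and the helper names are hypothetical.

```python
from pathlib import Path
import shutil
import tempfile


def make_model_repository(root, model_name, model_file, version="1"):
    """Create <root>/<model_name>/<version>/ and copy the model file into it."""
    model_dir = Path(root) / model_name
    version_dir = model_dir / version
    version_dir.mkdir(parents=True, exist_ok=True)
    shutil.copy(model_file, version_dir / Path(model_file).name)
    return model_dir


def list_repository(model_dir):
    """Return the repository's files as sorted paths relative to its parent."""
    base = Path(model_dir).parent
    return sorted(
        p.relative_to(base).as_posix()
        for p in Path(model_dir).rglob("*")
        if p.is_file()
    )


# Demonstration with a placeholder file standing in for a real model.pt.
workdir = Path(tempfile.mkdtemp())
placeholder = workdir / "model.pt"
placeholder.write_bytes(b"placeholder")
repo = make_model_repository(workdir / "triton-serve-pt", "resnet", placeholder)
layout = list_repository(repo)
print(layout)  # config.pbtxt would sit at resnet/config.pbtxt
```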

The model configuration file config.pbtxt must specify the name of the model (resnet), the platform and backend properties (pytorch_libtorch), max_batch_size (128), and the input and output tensors along with the data type (TYPE_FP32) information. Additionally, you can specify instance_group and dynamic_batching properties to achieve high performance inference. See the following code:
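A configuration along these lines could be written from Python as shown in this sketch. The tensor names `INPUT__0` and `OUTPUT__0` follow Triton's naming convention for the PyTorch backend, while the `instance_group` count and the `dynamic_batching` queue delay are illustrative values rather than recommendations.

```python
from pathlib import Path
import tempfile

# Triton model configuration in protobuf text format.
PYTORCH_CONFIG = """\
name: "resnet"
platform: "pytorch_libtorch"
max_batch_size: 128
input [
  {
    name: "INPUT__0"
    data_type: TYPE_FP32
    dims: [ 3, 224, 224 ]
  }
]
output [
  {
    name: "OUTPUT__0"
    data_type: TYPE_FP32
    dims: [ 1000 ]
  }
]
instance_group [
  {
    count: 1
    kind: KIND_GPU
  }
]
dynamic_batching {
  max_queue_delay_microseconds: 100
}
"""

# Write the file where Triton looks for it: <model_name>/config.pbtxt.
model_dir = Path(tempfile.mkdtemp()) / "resnet"
model_dir.mkdir()
config_path = model_dir / "config.pbtxt"
config_path.write_text(PYTORCH_CONFIG)
print(config_path.read_text())
```

Because `max_batch_size` is greater than zero, the `dims` entries omit the batch dimension; Triton adds it implicitly.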

Prepare the TensorRT model

NVIDIA TensorRT is an SDK for high-performance deep learning inference, and includes a deep learning inference optimizer and runtime that delivers low latency and high throughput for inference applications. We use the command line tool trtexec to generate a TensorRT serialized engine from an ONNX model format. Complete the following steps to convert a ResNet-50 pre-trained model to NVIDIA TensorRT:

Export the pre-trained ResNet-50 model into ONNX format using torch.onnx. This step runs the model one time to trace its run with a sample input and then exports the traced model to the specified file model.onnx.

Use trtexec to create a TensorRT engine plan from the model.onnx file. You can optionally reduce the precision of floating-point computations, either by simply running them in 16-bit floating point, or by quantizing floating point values so that calculations can be performed using 8-bit integers.
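The two steps above can be sketched as follows. This is a hedged outline, not the exact commands from the notebook: the tensor names `input` and `output`, the dynamic batch axis, and the shape ranges passed to trtexec are assumptions, and running the conversion requires TensorRT and an NVIDIA GPU on the machine.

```python
import subprocess


def export_onnx(output_path="model.onnx"):
    """Export a pre-trained ResNet-50 to ONNX by tracing one forward pass."""
    import torch  # lazy imports: only needed when the export actually runs
    from torchvision import models

    model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT).eval()
    dummy_input = torch.randn(1, 3, 224, 224)
    torch.onnx.export(
        model,
        dummy_input,
        output_path,
        input_names=["input"],    # assumed tensor names
        output_names=["output"],
        dynamic_axes={"input": {0: "batch_size"}, "output": {0: "batch_size"}},
    )
    return output_path


def build_trtexec_command(onnx_path, plan_path, max_batch=128, fp16=True):
    """Build the trtexec argument list for converting ONNX to a TensorRT plan."""
    shape = "3x224x224"
    command = [
        "trtexec",
        f"--onnx={onnx_path}",
        f"--saveEngine={plan_path}",
        f"--minShapes=input:1x{shape}",
        f"--optShapes=input:{max_batch}x{shape}",
        f"--maxShapes=input:{max_batch}x{shape}",
    ]
    if fp16:
        command.append("--fp16")  # allow 16-bit floating point kernels
    return command


def convert_to_tensorrt(onnx_path="model.onnx", plan_path="model.plan"):
    """Run trtexec; requires TensorRT and an NVIDIA GPU on this machine."""
    subprocess.run(build_trtexec_command(onnx_path, plan_path), check=True)
    return plan_path


command = build_trtexec_command("model.onnx", "model.plan")
print(" ".join(command))
```

A TensorRT engine is specific to the GPU type and TensorRT version it was built with, so the conversion is best run on the same instance type and container version used for serving.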

The following code shows the model repository structure for the TensorRT model:

For the TensorRT model, we specify tensorrt_plan as the platform and provide the input tensor specification for an image of dimension 224 x 224 with three color channels. The output tensor with 1,000 dimensions is of type TYPE_FP32, corresponding to the different object categories. See the following code:
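One way to produce such a configuration is with a small rendering helper, sketched here. The helper `render_config` is hypothetical, and the tensor names `input` and `output` are assumptions that must match the names baked into the TensorRT engine.

```python
def render_config(name, platform, input_name, output_name, max_batch_size=128):
    """Render a minimal Triton config.pbtxt for a 1,000-class image classifier."""
    return f"""\
name: "{name}"
platform: "{platform}"
max_batch_size: {max_batch_size}
input [
  {{
    name: "{input_name}"
    data_type: TYPE_FP32
    dims: [ 3, 224, 224 ]
  }}
]
output [
  {{
    name: "{output_name}"
    data_type: TYPE_FP32
    dims: [ 1000 ]
  }}
]
"""


tensorrt_config = render_config("resnet", "tensorrt_plan", "input", "output")
print(tensorrt_config)
```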

Store model artifacts in Amazon S3

SageMaker expects the model artifacts in .tar.gz format. They should also satisfy Triton container requirements such as model name, version, config.pbtxt files, and more. Package the folder containing the model files as a .tar.gz archive and upload it to Amazon S3:
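A minimal sketch of packaging and uploading, using the standard-library `tarfile` module and a Boto3 S3 client passed in by the caller, might look like this. The archive name `resnet_pt_v0.tar.gz`, the bucket, and the prefix are placeholders.

```python
from pathlib import Path
import tarfile
import tempfile


def package_model(model_dir, archive_path):
    """Create a .tar.gz whose top-level entry is the model directory itself."""
    model_dir = Path(model_dir)
    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(model_dir, arcname=model_dir.name)
    return Path(archive_path)


def upload_model(s3_client, archive_path, bucket, prefix):
    """Upload the archive with a boto3 S3 client and return its S3 URI."""
    key = f"{prefix.strip('/')}/{Path(archive_path).name}"
    s3_client.upload_file(str(archive_path), bucket, key)
    return f"s3://{bucket}/{key}"


# Demonstration with placeholder files instead of a real model.
workdir = Path(tempfile.mkdtemp())
(workdir / "resnet" / "1").mkdir(parents=True)
(workdir / "resnet" / "1" / "model.pt").write_bytes(b"placeholder")
(workdir / "resnet" / "config.pbtxt").write_text('name: "resnet"\n')
archive = package_model(workdir / "resnet", workdir / "resnet_pt_v0.tar.gz")
with tarfile.open(archive) as tar:
    members = sorted(tar.getnames())
print(members)

# With AWS credentials configured, the upload would be:
#   import boto3
#   upload_model(boto3.client("s3"), archive, "<bucket>", "<prefix>")
```

The archive file name matters later: it is the value you pass as TargetModel when invoking the endpoint.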

Now that we have uploaded the model artifacts to Amazon S3, we can create a SageMaker MME.

Deploy models with an MME

We now deploy a ResNet-50 model with two different framework backends (PyTorch and TensorRT) to a SageMaker MME.

Note that you can deploy hundreds of models, and the models can use the same framework. They can also use different frameworks, as shown in this post.

We use the AWS SDK for Python (Boto3) APIs create_model, create_endpoint_config, and create_endpoint to create an MME.

Define the serving container

In the container definition, define the model_data_url to specify the S3 directory that contains all the models that the SageMaker MME uses to load and serve predictions. Set Mode to MultiModel to indicate that SageMaker creates the endpoint with MME container specifications. We set the container with an image that supports deploying MMEs with GPU. See the following code:
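The container definition is a plain dictionary, sketched below. The image URI and S3 location are placeholders to replace with the Triton image for your Region and your own bucket; the helper name `build_container` is hypothetical.

```python
def build_container(image_uri, model_data_url):
    """Container definition for a multi-model endpoint."""
    return {
        "Image": image_uri,              # Triton image with MME GPU support
        "ModelDataUrl": model_data_url,  # S3 prefix holding all .tar.gz models
        "Mode": "MultiModel",            # tells SageMaker this is an MME
    }


# Placeholder values; substitute the image URI and bucket for your account.
container = build_container(
    image_uri="<account>.dkr.ecr.<region>.amazonaws.com/<triton-image>:<tag>",
    model_data_url="s3://<bucket>/<prefix>/",
)
print(container["Mode"])
```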

Create a multi-model object

Use the SageMaker Boto3 client to create the model using the create_model API. We pass the container definition to the create model API along with ModelName and ExecutionRoleArn:
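As a hedged sketch, the call can be wrapped in a small helper that takes the Boto3 SageMaker client as a parameter. The helper name `create_mme_model` and all argument values are illustrative.

```python
def create_mme_model(sm_client, model_name, role_arn, container):
    """Call create_model on a boto3 SageMaker client and return the model ARN."""
    response = sm_client.create_model(
        ModelName=model_name,
        ExecutionRoleArn=role_arn,
        PrimaryContainer=container,  # the MultiModel container definition
    )
    return response["ModelArn"]


# With AWS credentials configured, usage would look like:
#   import boto3
#   sm_client = boto3.client("sagemaker")
#   create_mme_model(sm_client, "<model-name>", "<role-arn>", container)
```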

Define MME configurations

Create MME configurations using the create_endpoint_config Boto3 API. Specify an accelerated GPU computing instance in InstanceType (we use the g4dn.4xlarge instance type). We recommend configuring your endpoints with at least two instances. This allows SageMaker to provide a highly available set of predictions across multiple Availability Zones for the models.
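The request for create_endpoint_config can be assembled as in the following sketch. The variant name `AllTraffic` and the helper name are assumptions; the instance type is written with the `ml.` prefix that SageMaker instance types use.

```python
def build_endpoint_config(config_name, model_name,
                          instance_type="ml.g4dn.4xlarge", instance_count=2):
    """Build keyword arguments for the create_endpoint_config API."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",       # assumed variant name
                "ModelName": model_name,
                "InstanceType": instance_type,     # single-GPU instance type
                "InitialInstanceCount": instance_count,
                "InitialVariantWeight": 1,
            }
        ],
    }


endpoint_config = build_endpoint_config("<config-name>", "<model-name>")
print(endpoint_config["ProductionVariants"][0]["InstanceType"])

# With a boto3 SageMaker client:
#   sm_client.create_endpoint_config(**endpoint_config)
```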

Based on our findings, you can get better price performance on ML-optimized instances with a single GPU core. Therefore, the MME support for GPU feature is only enabled for single-GPU core instances. For a full list of supported instances, refer to Supported GPU Instance types.

Create an MME

With the preceding endpoint configuration, we create a SageMaker MME using the create_endpoint API. SageMaker creates the MME, launches the ML compute instance g4dn.4xlarge, and deploys the PyTorch and TensorRT ResNet-50 models on it. See the following code:
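A minimal sketch of creating the endpoint and waiting for it to come into service follows. It uses the Boto3 `endpoint_in_service` waiter; the helper name `create_endpoint_and_wait` is hypothetical.

```python
def create_endpoint_and_wait(sm_client, endpoint_name, config_name):
    """Create the endpoint, block until it is in service, and return its status."""
    sm_client.create_endpoint(
        EndpointName=endpoint_name,
        EndpointConfigName=config_name,
    )
    # The waiter polls describe_endpoint until the endpoint is InService
    # and raises an error if creation fails.
    waiter = sm_client.get_waiter("endpoint_in_service")
    waiter.wait(EndpointName=endpoint_name)
    description = sm_client.describe_endpoint(EndpointName=endpoint_name)
    return description["EndpointStatus"]


# With AWS credentials configured, usage would look like:
#   import boto3
#   status = create_endpoint_and_wait(
#       boto3.client("sagemaker"), "<endpoint-name>", "<config-name>")
```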

Invoke the target model on the MME

After we create the endpoint, we can send an inference request to the MME using the invoke_endpoint API. We specify the TargetModel in the invocation call and pass in the payload for each model type. The following code is a sample invocation for the PyTorch model and TensorRT model:
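An invocation could be sketched as follows. The payload follows the JSON inference request format that Triton accepts; the tensor name, the `application/octet-stream` content type, the all-zeros image data, and the archive name are assumptions for illustration.

```python
import json


def build_payload(input_name, data, shape=(1, 3, 224, 224)):
    """Build a Triton JSON inference request for one FP32 input tensor."""
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": list(shape),
                "datatype": "FP32",
                "data": data,  # flattened values in row-major order
            }
        ]
    }


def invoke(runtime_client, endpoint_name, target_model, payload):
    """Send the request to one model behind the MME and parse the JSON reply."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/octet-stream",  # assumed content type
        Body=json.dumps(payload),
        TargetModel=target_model,  # archive name relative to the S3 prefix
    )
    return json.loads(response["Body"].read())


# A blank image stands in for real preprocessed pixel data.
pixels = [0.0] * (3 * 224 * 224)
pt_payload = build_payload("INPUT__0", pixels)
print(pt_payload["inputs"][0]["shape"])

# With AWS credentials configured, usage would look like:
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   invoke(runtime, "<endpoint-name>", "resnet_pt_v0.tar.gz", pt_payload)
```

To target the TensorRT model, change only the TargetModel value and the input tensor name; the endpoint name stays the same.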

Set up auto scaling policies for the GPU MME

SageMaker MMEs support automatic scaling for your hosted models. Auto scaling dynamically adjusts the number of instances provisioned for a model in response to changes in your workload. When the workload increases, auto scaling brings more instances online. When the workload decreases, auto scaling removes unnecessary instances so that you don’t pay for provisioned instances that you aren’t using.

In the following scaling policy, we use the custom metric GPUUtilization in the TargetTrackingScalingPolicyConfiguration configuration and set its TargetValue to 60.0. This auto scaling policy provisions additional instances, up to MaxCapacity, when GPU utilization exceeds 60%.
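Such a policy can be sketched with the Application Auto Scaling API as follows. The capacity limits, cooldown values, policy name, and variant name `AllTraffic` are illustrative assumptions; only the GPUUtilization metric and the target value of 60.0 come from the text above.

```python
def resource_id(endpoint_name, variant_name="AllTraffic"):
    """Resource ID format that Application Auto Scaling uses for variants."""
    return f"endpoint/{endpoint_name}/variant/{variant_name}"


def build_scalable_target(endpoint_name, min_capacity=1, max_capacity=5):
    """Keyword arguments for register_scalable_target."""
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id(endpoint_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }


def build_scaling_policy(endpoint_name, target_value=60.0):
    """Keyword arguments for put_scaling_policy tracking GPUUtilization."""
    return {
        "PolicyName": "GPUUtil-ScalingPolicy",  # hypothetical policy name
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id(endpoint_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_value,
            "CustomizedMetricSpecification": {
                "MetricName": "GPUUtilization",
                "Namespace": "/aws/sagemaker/Endpoints",
                "Dimensions": [
                    {"Name": "EndpointName", "Value": endpoint_name},
                    {"Name": "VariantName", "Value": "AllTraffic"},
                ],
                "Statistic": "Average",
                "Unit": "Percent",
            },
            "ScaleInCooldown": 600,   # seconds; illustrative values
            "ScaleOutCooldown": 200,
        },
    }


target = build_scalable_target("<endpoint-name>")
policy = build_scaling_policy("<endpoint-name>")
print(policy["TargetTrackingScalingPolicyConfiguration"]["TargetValue"])

# With AWS credentials configured:
#   import boto3
#   autoscaling = boto3.client("application-autoscaling")
#   autoscaling.register_scalable_target(**target)
#   autoscaling.put_scaling_policy(**policy)
```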

We recommend using GPUUtilization or InvocationsPerInstance to configure auto scaling policies for your MME. For more details, see Set Autoscaling Policies for Multi-Model Endpoint Deployments.

CloudWatch metrics for GPU MMEs

SageMaker MMEs provide the following instance-level metrics to monitor:

LoadedModelCount – Number of models loaded in the containers

GPUUtilization – Percentage of GPU units that are used by the containers

GPUMemoryUtilization – Percentage of GPU memory used by the containers

DiskUtilization – Percentage of disk space used by the containers

These metrics allow you to plan for effective utilization of GPU instance resources. In the following graph, we see GPUMemoryUtilization was 38.3% when more than 16 ResNet-50 models were loaded in the container. The sum of each individual CPU core’s utilization (CPUUtilization) was 60.9%, and percentage of memory used by the containers (MemoryUtilization) was 9.36%.
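These instance-level metrics can also be read programmatically. The sketch below builds a request for the CloudWatch get_metric_statistics API; the `/aws/sagemaker/Endpoints` namespace, the variant name, and the one-minute period are assumptions to verify against your endpoint's metrics in the CloudWatch console.

```python
from datetime import datetime, timedelta, timezone


def build_metric_query(endpoint_name, metric_name, minutes=60,
                       variant_name="AllTraffic"):
    """Keyword arguments for get_metric_statistics over the last N minutes."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "/aws/sagemaker/Endpoints",  # assumed namespace
        "MetricName": metric_name,
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,                 # one datapoint per minute
        "Statistics": ["Average"],
    }


query = build_metric_query("<endpoint-name>", "GPUMemoryUtilization")
print(query["MetricName"])

# With AWS credentials configured:
#   import boto3
#   cloudwatch = boto3.client("cloudwatch")
#   datapoints = cloudwatch.get_metric_statistics(**query)["Datapoints"]
```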

SageMaker MMEs also provide model loading metrics to get model invocation-level insights:

ModelLoadingWaitTime – Time interval for the model to be downloaded or loaded

ModelUnloadingTime – Time interval to unload the model from the container

ModelDownloadingTime – Time to download the model from Amazon S3

ModelCacheHit – Number of invocations to the model that are already loaded onto the container

In the following graph, we can observe that it took 8.22 seconds for a model to respond to an inference request (ModelLatency), and 24.1 milliseconds was added to end-to-end latency due to SageMaker overheads (OverheadLatency). We can also see error metrics from calls to the invoke_endpoint API, such as Invocation4XXErrors and Invocation5XXErrors.

For more information about MME CloudWatch metrics, refer to CloudWatch Metrics for Multi-Model Endpoint Deployments.

Summary

In this post, you learned about the new SageMaker multi-model support for GPU, which enables you to cost-effectively host hundreds of deep learning models on accelerated compute hardware. You learned how to create a model repository configuration for NVIDIA Triton Inference Server with different framework backends, and how to deploy an MME with auto scaling. This feature allows you to scale hundreds of hyper-personalized models that are fine-tuned to cater to unique end-user experiences in AI applications. You can also use this feature to achieve the price performance your inference application needs using fractional GPUs.

To get started with MME support for GPU, see Multi-model endpoint support for GPU.

About the authors

Dhawal Patel is a Principal Machine Learning Architect at AWS. He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and artificial intelligence. He focuses on deep learning, including NLP and computer vision domains. He helps customers achieve high-performance model inference on Amazon SageMaker.

Vikram Elango is a Senior AI/ML Specialist Solutions Architect at Amazon Web Services, based in Virginia, US. Vikram helps global financial and insurance industry customers with design, implementation and thought leadership to build and deploy machine learning applications at scale. He is currently focused on natural language processing, responsible AI, inference optimization, and scaling ML across the enterprise. In his spare time, he enjoys traveling, hiking, cooking, and camping with his family.

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He is passionate about working with customers and is motivated by the goal of democratizing machine learning. He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making deployment of deep learning models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Deepti Ragha is a Software Development Engineer in the Amazon SageMaker team. Her current work focuses on building features to host machine learning models efficiently. In her spare time, she enjoys traveling, hiking and growing plants.

Nikhil Kulkarni is a software developer with AWS Machine Learning, focusing on making machine learning workloads more performant on the cloud and is a co-creator of AWS Deep Learning Containers for training and inference. He’s passionate about distributed Deep Learning Systems. Outside of work, he enjoys reading books, fiddling with the guitar, and making pizza.

Jiahong Liu is a Solution Architect on the Cloud Service Provider team at NVIDIA. He assists clients in adopting machine learning and AI solutions that leverage NVIDIA accelerated computing to address their training and inference challenges. In his leisure time, he enjoys origami, DIY projects, and playing basketball.

Eliuth Triana Isaza is a Developer Relations Manager on the NVIDIA-AWS team. He connects Amazon and AWS product leaders, developers, and scientists with NVIDIA technologists and product leaders to accelerate Amazon ML/DL workloads, EC2 products, and AWS AI services. In addition, Eliuth is a passionate mountain biker, skier, and poker player.
