Dataproc is a fully managed service for hosting open-source distributed processing platforms such as Apache Hive, Apache Spark, Presto, Apache Flink, and Apache Hadoop on Google Cloud. Dataproc provides flexibility to provision and configure clusters of varying sizes on demand. In addition, Dataproc has powerful features to enable your organization to lower costs, increase performance and streamline operational management of workloads running on the cloud.

Dataproc is an important service in any data lake modernization effort. Many customers begin their journey to the cloud by migrating their Hadoop workloads to Dataproc and continue to modernize their solutions by incorporating the full suite of Google Cloud’s data offerings.

This guide demonstrates how you can optimize Dataproc job stability, performance, and cost-effectiveness. You can achieve this by using a workflow template to deploy a configured ephemeral cluster that runs a Dataproc job with calculated application-specific properties.

Before you begin

Pre-requisites

A 100-level understanding of Dataproc (FAQ)

Experience with shell scripting, YAML templates, Hadoop ecosystem

An existing Dataproc application, referred to as “the job” or “the application”

Sufficient project quotas (CPUs, Disks, etc.) to create clusters

Consider Dataproc Serverless or BigQuery

Before getting started with Dataproc, determine whether your application is suitable for (or portable to) Dataproc Serverless or BigQuery. These managed services will save you time spent on maintenance and configuration.

This blog assumes you have identified Dataproc as the best choice for your scenario. For more information about other solutions, check out some of our other guides, such as Migrating Apache Hive to BigQuery and Running an Apache Spark Batch Workload on Dataproc Serverless.

Separate data from computation

Consider the advantages of using Cloud Storage. Using this persistent storage for your workflows has the following advantages:

It’s a Hadoop Compatible File System (HCFS), so it’s easy to use with your existing jobs.

Cloud Storage can be faster than HDFS. In HDFS, a MapReduce job can’t start until the NameNode is out of safe mode—a process that can take a few seconds to many minutes, depending on the size and state of your data.

It requires less maintenance than HDFS.

It enables you to easily use your data with the whole range of Google Cloud products.

It’s considerably less expensive than keeping your data in replicated (3x) HDFS on a persistent Dataproc cluster.

Pricing Comparison Examples (North America, as of 11/2022):

GCS: $0.004 – $0.02 per GB, depending on the tier

Persistent Disk: $0.04 – $0.34 per GB + compute VM costs

For more detail, see the guides Migrating On-Premises Hadoop Infrastructure to Google Cloud and HDFS vs. Cloud Storage: Pros, cons, and migration tips. Google Cloud has also developed an open-source tool for migrating data from HDFS to GCS.

Optimize your Cloud Storage

When using Dataproc, you can create external tables in Hive, HBase, etc., where the schema resides in Dataproc, but the data resides in Google Cloud Storage. Separating compute and storage enables you to scale your data independently of compute power.

In on-premises HDFS / Hive setups, compute and storage are closely tied together, either on the same machine or on a nearby machine. When you use Google Cloud Storage instead of HDFS, you separate compute and storage at the expense of latency: it takes time for Dataproc to retrieve files from Google Cloud Storage. Many small files (e.g. millions of files smaller than 1 MB) can negatively affect query performance, as can the choice of file type and compression.

When performing data analytics on Google Cloud, it is important to be deliberate in choosing your Cloud Storage file strategy.

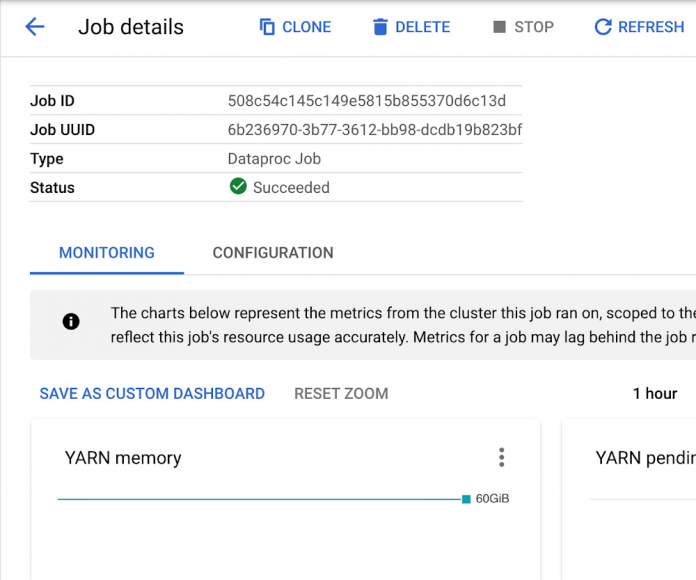

Monitoring Dataproc Jobs

As you navigate through the following guide, you’ll submit Dataproc Jobs and continue to optimize runtime and cost for your use case. Monitor the Dataproc Jobs console during/after job submissions to get in-depth information on the Dataproc cluster performance. Here you will find specific metrics that help identify opportunities for optimization, notably YARN Pending Memory, YARN NodeManagers, CPU Utilization, HDFS Capacity, and Disk Operations. Throughout this guide you will see how these metrics influence changes in cluster configurations.

Guide: Run Faster and Cost-Effective Dataproc Jobs

1. Getting started

This guide demonstrates how to optimize the performance and cost of applications running on Dataproc clusters. Because Dataproc supports many big data technologies – each with its own intricacies – this guide is intended as trial-and-error experimentation. It begins with a generic Dataproc cluster that uses default settings. As you proceed through the guide, you’ll increasingly customize the Dataproc cluster configuration to fit your specific workload.

Plan to separate Dataproc Jobs into different clusters – each data processing platform uses resources differently, and platforms can degrade each other’s performance when run simultaneously. Even better, isolating single jobs to single clusters can set you up for ephemeral clusters, where jobs can run in parallel on their own dedicated resources.

Once your job is running successfully, you can safely iterate on the configuration to improve runtime and cost, falling back to the last successful run whenever experimental changes have a negative impact.

You can export an existing cluster’s configuration to a file during experimentation. Use this configuration to create new clusters through the import command.

Keep these files as a reference to the last successful configuration in case drift occurs.
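The export and import steps described above can be sketched as a pair of small Python helpers that only assemble the `gcloud dataproc clusters export` and `gcloud dataproc clusters import` command lines. The cluster names, region, and file name are hypothetical placeholders, and the helpers print the commands rather than run them, so treat this as an illustration, not a finished script.

```python
import shlex


def export_cluster_cmd(cluster, region, destination):
    """Build the command that writes a cluster's configuration to a YAML file."""
    return [
        "gcloud", "dataproc", "clusters", "export", cluster,
        f"--region={region}",
        f"--destination={destination}",
    ]


def import_cluster_cmd(new_cluster, region, source):
    """Build the command that creates a new cluster from an exported file."""
    return [
        "gcloud", "dataproc", "clusters", "import", new_cluster,
        f"--region={region}",
        f"--source={source}",
    ]


if __name__ == "__main__":
    # Hypothetical names: substitute your own cluster, region, and file.
    print(shlex.join(export_cluster_cmd("my-cluster", "us-central1", "last-good.yaml")))
    print(shlex.join(import_cluster_cmd("my-cluster-v2", "us-central1", "last-good.yaml")))
```

Keeping the exported file under version control is one way to make the "last successful configuration" easy to recover.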

2. Calculate Dataproc cluster size

a. Via on-prem workload (if applicable)

View the YARN UI

If you’ve been running this job on-premises, you can identify the resources it used in the YARN UI. The image below shows a Spark job that ran successfully on-prem.

The table below lists the key performance indicators for the job.

For the above job you can calculate the following:

Now that you have the cluster sizing on-prem, the next step is to identify the initial cluster size on Google Cloud.

Calculate initial Dataproc cluster size

For this exercise assume you are using n2-standard-8, but a different machine type might be more appropriate depending on the type of workload. n2-standard-8 has 8 vCPUs and 32 GiB of memory. View other Dataproc-supported machine types here.

Calculate the number of machines required based on the number of vCores required.

Recommendations based on the above calculations:

Take note of the calculations for your own job/workload.
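As a rough illustration of the vCore-based sizing described above, the sketch below divides a required vCore count by the 8 vCPUs of an n2-standard-8 and rounds up, then reports how much memory that many workers would provide (32 GiB each). The 250-vCore input is a hypothetical figure, not a value taken from the job above; substitute the numbers you read from your own YARN UI.

```python
import math


def workers_for_vcores(required_vcores, vcpus_per_worker=8):
    """Number of workers needed to supply the required vCores (rounded up)."""
    if required_vcores <= 0 or vcpus_per_worker <= 0:
        raise ValueError("vCore counts must be positive")
    return math.ceil(required_vcores / vcpus_per_worker)


def cluster_memory_gib(workers, memory_gib_per_worker=32):
    """Total memory provided by the workers, to compare against the job's needs."""
    return workers * memory_gib_per_worker


if __name__ == "__main__":
    required_vcores = 250  # hypothetical value read from the on-prem YARN UI
    workers = workers_for_vcores(required_vcores)
    print(f"{workers} x n2-standard-8 -> {cluster_memory_gib(workers)} GiB memory")
```

If the memory total falls short of what the job used on-prem, that is a hint to look at a higher-memory machine type rather than simply adding workers.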

b. Via an autoscaling cluster

Alternatively, an autoscaling cluster can help determine the right number of workers for your application. This cluster will have an autoscaling policy attached. Set the autoscaling policy min/max values to whatever your project/organization allows. Run your jobs on this cluster. Autoscaling will continue to add nodes until the YARN pending memory metric is zero. A perfectly sized cluster minimizes the amount of YARN pending memory while also minimizing excess compute resources.

Deploying a sizing Dataproc cluster

Example:

2 primary workers (n2-standard-8)

0 secondary workers (n2-standard-8)

pd-standard 1000GB

Autoscaling policy: 0 min, 100 max.

No application properties set.

sample-autoscaling-policy.yml
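The sketch below renders one possible version of such a policy file from Python. It assumes that the "0 min, 100 max" range applies to secondary workers and that the two primary workers stay fixed; the cooldown, scaling factors, and decommission timeout are illustrative values, not recommendations from this guide. The field names follow the Dataproc autoscaling policy schema.

```python
def render_autoscaling_policy(primary_workers=2, secondary_min=0, secondary_max=100,
                              scale_up_factor=1.0, scale_down_factor=1.0,
                              cooldown="4m", graceful_timeout="1h"):
    """Return the YAML text of a sizing policy (values here are assumptions)."""
    lines = [
        "workerConfig:",
        # Same min and max: primary workers are not autoscaled.
        f"  minInstances: {primary_workers}",
        f"  maxInstances: {primary_workers}",
        "secondaryWorkerConfig:",
        f"  minInstances: {secondary_min}",
        f"  maxInstances: {secondary_max}",
        "basicAlgorithm:",
        f"  cooldownPeriod: {cooldown}",
        "  yarnConfig:",
        f"    scaleUpFactor: {scale_up_factor}",
        f"    scaleDownFactor: {scale_down_factor}",
        f"    gracefulDecommissionTimeout: {graceful_timeout}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_autoscaling_policy(), end="")
```

After saving the output to a file, you could register it with `gcloud dataproc autoscaling-policies import POLICY_ID --source=FILE --region=REGION` and reference it at cluster creation with `--autoscaling-policy`.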

Submitting Jobs to Dataproc Cluster

Monitoring Worker Count / YARN NodeManagers

Observe the peak number of workers required to complete your job.

To calculate the number of required cores, multiply the machine size (2, 8, 16, etc.) by the number of NodeManagers.
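The multiplication above is simple, but writing it down helps when you later re-express the result in a different machine size. In this sketch, the peak of 25 NodeManagers is a hypothetical observation; read the real value from the YARN NodeManagers metric of your sizing cluster.

```python
def required_cores(peak_node_managers, vcpus_per_machine):
    """Cores in use at peak: NodeManager count times vCPUs per machine."""
    return peak_node_managers * vcpus_per_machine


def workers_for_cores(cores, vcpus_per_machine):
    """Workers of a given size needed to supply the cores (ceiling division)."""
    return -(-cores // vcpus_per_machine)


if __name__ == "__main__":
    cores = required_cores(peak_node_managers=25, vcpus_per_machine=8)
    print(cores)                         # cores at peak on n2-standard-8
    print(workers_for_cores(cores, 16))  # same capacity with 16-vCPU machines
```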

3. Optimize Dataproc cluster configuration

Using a non-autoscaling cluster during this experimentation phase can lead to the discovery of more accurate machine-types, persistent disks, application properties, etc. For now, build an isolated non-autoscaling cluster for your job that has the optimized number of primary workers.

Example:

N primary workers (n2-standard-8)

0 secondary workers (n2-standard-8)

pd-standard 1000GB

No autoscaling policy

No application properties set

Deploying a non-autoscaling Dataproc cluster
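A cluster matching the example above could be created with a command along the following lines. The helper only assembles the `gcloud dataproc clusters create` arguments; the cluster name, region, and worker count of 30 are hypothetical, and no autoscaling policy or application properties are passed.

```python
import shlex


def create_cluster_cmd(cluster, region, primary_workers,
                       machine_type="n2-standard-8",
                       disk_type="pd-standard", disk_size_gb=1000):
    """Build a create command for a fixed-size cluster with no secondary workers."""
    return [
        "gcloud", "dataproc", "clusters", "create", cluster,
        f"--region={region}",
        f"--num-workers={primary_workers}",
        f"--worker-machine-type={machine_type}",
        f"--worker-boot-disk-type={disk_type}",
        f"--worker-boot-disk-size={disk_size_gb}GB",
        "--num-secondary-workers=0",
    ]


if __name__ == "__main__":
    # Hypothetical values: use the worker count you measured in step 2.
    print(shlex.join(create_cluster_cmd("tuning-cluster", "us-central1", 30)))
```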

Choose the right machine type and machine size

Run your job on this appropriately sized non-autoscaling cluster. If the CPU is maxing out, consider using a C2 machine type. If memory is maxing out, consider using an N2D-highmem machine type.
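The rule of thumb above can be written as a small decision helper. What counts as "maxing out" is not defined in this guide, so the 90% threshold below is an assumption to adjust, and the utilization inputs are hypothetical peak values taken from the cluster's monitoring charts.

```python
def suggest_machine_families(peak_cpu_utilization, peak_memory_utilization,
                             saturation_threshold=0.9):
    """Return the machine families worth trying, given peak utilization (0.0-1.0)."""
    suggestions = []
    if peak_cpu_utilization >= saturation_threshold:
        suggestions.append("C2")           # CPU-bound: compute-optimized family
    if peak_memory_utilization >= saturation_threshold:
        suggestions.append("N2D-highmem")  # memory-bound: high-memory family
    return suggestions


if __name__ == "__main__":
    print(suggest_machine_families(0.97, 0.55))
    print(suggest_machine_families(0.40, 0.95))
```

An empty result means neither resource was saturated, in which case the current machine type is probably not the bottleneck.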

Prefer using smaller machine types (e.g. switch n2-highmem-32 to n2-highmem-8). It’s okay to have clusters with hundreds of small machines. For Dataproc clusters, choose the smallest machine with maximum network bandwidth (32 Gbps). Typically these machines are n2-standard-8 or n2d-standard-16.

On rare occasions you may need to increase machine size to 32 or 64 cores. Increasing your machine size can be necessary if your organization is running low on IP addresses or you have heavy ML or processing workloads.

Refer to the Machine families resource and comparison guide in the Compute Engine documentation for more information.

Submitting Jobs to Dataproc Cluster

Monitoring Cluster Metrics

Monitor memory to determine machine-type:

Monitor CPU to determine machine-type:

Choose the right persistent disk

If you’re still observing performance issues, consider moving from pd-standard to pd-balanced or pd-ssd.

Standard persistent disks (pd-standard) are best for large data processing workloads that primarily use sequential I/Os. For pd-standard without local SSDs, we strongly recommend provisioning 1 TB (1000 GB) or larger to ensure consistently high I/O performance.

Balanced persistent disks (pd-balanced) are an alternative to SSD persistent disks that balance performance and cost. With the same maximum IOPS as SSD persistent disks and lower IOPS per GB, a balanced persistent disk offers performance levels suitable for most general-purpose applications at a price point between that of standard and SSD persistent disks.

SSD persistent disks (pd-ssd) are best for enterprise applications and high-performance database needs that require lower latency and more IOPS than standard persistent disks provide.

For similar costs, pd-standard 1000GB == pd-balanced 500GB == pd-ssd 250 GB. Be certain to review performance impact when configuring disk. See Configure Disks to Meet Performance Requirements for information on disk I/O performance. View Machine Type Disk Limits for information on the relationships between machine types and persistent disks. If you are using 32 core machines or more, consider switching to multiple Local SSDs per node to get enough performance for your workload.
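The cost equivalence quoted above (1000 GB pd-standard, 500 GB pd-balanced, 250 GB pd-ssd) implies a simple 1 : 0.5 : 0.25 size ratio. The sketch below applies that ratio to other sizes; it is only as accurate as the rule of thumb itself, so check current pricing before relying on it.

```python
# Size ratios implied by "pd-standard 1000GB == pd-balanced 500GB == pd-ssd 250GB".
COST_EQUIVALENT_RATIO = {"pd-standard": 1.0, "pd-balanced": 0.5, "pd-ssd": 0.25}


def cost_equivalent_size_gb(pd_standard_gb, target_type):
    """Size of target_type disk that costs roughly the same as the pd-standard disk."""
    return pd_standard_gb * COST_EQUIVALENT_RATIO[target_type]


if __name__ == "__main__":
    for disk_type in COST_EQUIVALENT_RATIO:
        print(disk_type, cost_equivalent_size_gb(2000, disk_type))
```

Remember that a smaller disk also means less capacity, so compare the result against the HDFS and shuffle space your job needs.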

You can monitor HDFS Capacity to determine disk size. If HDFS Capacity ever drops to zero, you’ll need to increase the persistent disk size.

If you observe any throttling of Disk bytes or Disk operations, you may need to consider changing your cluster’s persistent disk to balanced or SSD:

Choose the right ratio of primary workers vs. secondary workers

Your cluster must have primary workers. If you create a cluster and you do not specify the number of primary workers, Dataproc adds two primary workers to the cluster. Then you must determine if you prioritize performance or cost optimization.

If you prioritize performance, utilize 100% primary workers. If you prioritize cost optimization, specify the remaining workers to be secondary workers.

Primary worker machines are dedicated to your cluster and provide HDFS capacity. Secondary worker machines, on the other hand, come in three types: spot VMs, standard preemptible VMs, and non-preemptible VMs. By default, secondary workers are created with the smaller of 100GB or the primary worker boot disk size. This disk space is used for local caching of data; secondary workers do not run HDFS. Be aware that secondary workers may not be dedicated to your cluster and may be removed at any time. Ensure that your application is fault-tolerant when using secondary workers.
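The default boot disk size for secondary workers described above is just the smaller of two numbers, which the following one-line helper makes explicit. The function name is hypothetical and the sizes are in GB.

```python
def default_secondary_boot_disk_gb(primary_boot_disk_gb):
    """Default secondary-worker boot disk: the smaller of 100 GB or the primary size."""
    return min(100, primary_boot_disk_gb)


if __name__ == "__main__":
    print(default_secondary_boot_disk_gb(1000))  # primary disk larger than 100 GB
    print(default_secondary_boot_disk_gb(50))    # primary disk smaller than 100 GB
```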

Consider attaching Local SSDs

Some applications may require higher throughput than what Persistent Disks provide. In these scenarios, experiment with Local SSDs. Local SSDs are physically attached to the cluster and provide higher throughput than persistent disks (see the Performance table). Local SSDs are available at a fixed size of 375 gigabytes, but you can add multiple SSDs to increase performance.
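Because each Local SSD has a fixed size of 375 GB, the number of disks follows directly from the capacity you want per node. The sketch below does that division; the 1000 GB target is hypothetical, and the counts a given machine type actually permits are an additional constraint that this helper does not check.

```python
import math

LOCAL_SSD_GB = 375  # fixed size of one Local SSD, as stated above


def local_ssds_needed(required_gb):
    """Number of Local SSDs needed per node to reach the required capacity."""
    if required_gb <= 0:
        return 0
    return math.ceil(required_gb / LOCAL_SSD_GB)


if __name__ == "__main__":
    count = local_ssds_needed(1000)
    print(count, "Local SSDs ->", count * LOCAL_SSD_GB, "GB per node")
```

When creating the cluster, the count for worker nodes is passed with the `--num-worker-local-ssds` flag of `gcloud dataproc clusters create`.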

Local SSDs do not persist data after a cluster is shut down. If persistent storage is desired, you can use SSD persistent disks, which provide higher throughput for their size than standard persistent disks. SSD persistent disks are also a good choice if partition size will be smaller than 8 KB (however, avoid small partitions).

As with persistent disks, continue to monitor any throttling of Disk bytes or Disk operations to determine whether Local SSDs are appropriate:

Consider attaching GPUs

For even more processing power, consider attaching GPUs to your cluster. Dataproc provides the ability to attach graphics processing units (GPUs) to the master and worker Compute Engine nodes in a Dataproc cluster. You can use these GPUs to accelerate specific workloads on your instances, such as machine learning and data processing.

GPU drivers are required to utilize any GPUs attached to Dataproc nodes. You can install GPU drivers by following the instructions for this initialization action.

Creating Cluster with GPUs

Sample cluster for compute-heavy workload:

4. Optimize application-specific properties

If you’re still observing performance issues, you can begin to adjust application properties. Ideally these properties are set on the job submission, isolating properties to their respective jobs. View the best practices for your application below.

Performance and Efficiency in Apache Pig

Submitting Dataproc jobs with properties
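One way to keep properties scoped to a single job is to pass them with the `--properties` flag of `gcloud dataproc jobs submit`. The sketch below assembles such a command for a Spark job; the class name, jar location, and property values are hypothetical placeholders rather than tuning advice.

```python
import shlex


def submit_spark_job_cmd(cluster, region, main_class, jar_uri, properties, job_args=()):
    """Build a Spark job submission that carries its own application properties."""
    cmd = [
        "gcloud", "dataproc", "jobs", "submit", "spark",
        f"--cluster={cluster}",
        f"--region={region}",
        f"--class={main_class}",
        f"--jars={jar_uri}",
    ]
    if properties:
        # gcloud expects comma-separated key=value pairs; values that contain
        # commas need gcloud's alternate-delimiter escaping (not handled here).
        cmd.append("--properties=" + ",".join(f"{k}={v}" for k, v in properties.items()))
    if job_args:
        cmd.append("--")  # everything after "--" is passed to the application
        cmd.extend(job_args)
    return cmd


if __name__ == "__main__":
    print(shlex.join(submit_spark_job_cmd(
        "tuning-cluster", "us-central1",
        "com.example.MyJob", "gs://my-bucket/my-job.jar",  # hypothetical
        {"spark.executor.cores": "5", "spark.executor.memory": "16g"},
        ["gs://my-bucket/input/"],
    )))
```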

5. Handle edge-case workload spikes via an autoscaling policy

Now that you have an optimally sized, configured, tuned cluster, you can choose to introduce autoscaling. Autoscaling should not be viewed as a cost-optimization technique because aggressive up/down scaling can lead to Dataproc job instability. However, conservative autoscaling can improve Dataproc cluster performance during edge-cases that require more worker nodes.

Use ephemeral clusters (see next step) to allow clusters to scale up, and delete them when the job or workflow is complete.

Ensure primary workers make up >50% of your cluster.

Avoid autoscaling primary workers. Primary workers run HDFS DataNodes, while secondary workers are compute-only workers. The HDFS NameNode has multiple race conditions that can leave HDFS in a corrupted state, which causes decommissioning to get stuck indefinitely.

Primary workers are more expensive but provide job stability and better performance. The ratio of primary workers vs. secondary workers is a tradeoff you can make; stability versus cost.

Note: Having too many secondary workers can create job instability. As a best practice, avoid having a majority of secondary workers.

Prefer ephemeral, non-autoscaled clusters where possible.

Allow these to scale up and delete them when jobs are complete.

As stated earlier, you should avoid scaling down workers because it can lead to job instability.

Set scaleDownFactor to 0.0 for ephemeral clusters.
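The recommendations above can be turned into a quick sanity check to run before attaching a policy. This is a minimal sketch: the function and its parameters are hypothetical, and it evaluates the primary-worker share at the maximum secondary worker count, which is the most conservative reading of the ">50%" guideline.

```python
def check_cluster_plan(primary_workers, max_secondary_workers,
                       scale_down_factor, primary_autoscaled=False):
    """Return a list of guideline violations (empty if the plan follows them)."""
    problems = []
    total = primary_workers + max_secondary_workers
    if total == 0 or primary_workers / total <= 0.5:
        problems.append("primary workers should make up more than 50% of the cluster")
    if primary_autoscaled:
        problems.append("avoid autoscaling primary workers")
    if scale_down_factor != 0.0:
        problems.append("set scaleDownFactor to 0.0 for ephemeral clusters")
    return problems


if __name__ == "__main__":
    print(check_cluster_plan(10, 8, 0.0))   # follows the guidelines
    print(check_cluster_plan(10, 10, 1.0))  # violates two guidelines
```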

Creating and attaching autoscaling policies

sample-autoscaling-policy.yml

6. Optimize cost and reusability via ephemeral Dataproc clusters

There are several key advantages of using ephemeral clusters:

You can use different cluster configurations for individual jobs, eliminating the administrative burden of managing tools across jobs.

You can scale clusters to suit individual jobs or groups of jobs.

You only pay for resources when your jobs are using them.

You don’t need to maintain clusters over time, because they are freshly configured every time you use them.

You don’t need to maintain separate infrastructure for development, testing, and production. You can use the same definitions to create as many different versions of a cluster as you need when you need them.

Build a custom image

Once you have satisfactory cluster performance, you can begin to transition from a non-autoscaling cluster to an ephemeral cluster.

Does your cluster have init scripts that install various software? Use Dataproc Custom Images. This will allow you to create ephemeral clusters with faster startup times. Google Cloud provides an open-source tool to generate custom images.

Generate a custom image

Using Custom Images

Create a Workflow Template

To create an ephemeral cluster, you’ll need to set up a Dataproc workflow template. A Workflow Template is a reusable workflow configuration. It defines a graph of jobs with information on where to run those jobs.

Use the gcloud dataproc clusters export command to generate YAML for your cluster config.

Use this cluster config in your workflow template. Point to your newly created custom image, your application, and add your job specific properties.

Sample Workflow Template (with custom image)

Deploying an ephemeral cluster via a workflow template
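As a minimal sketch of what such a deployment might look like, the helper below renders a small workflow template with a managed (ephemeral) cluster, a custom image, and one Spark job with its properties, and builds the `gcloud dataproc workflow-templates instantiate-from-file` command that runs it. The project, image, bucket, class, and property values are all hypothetical; the field names follow the Dataproc WorkflowTemplate schema, and a real template would normally carry the full exported cluster config.

```python
import shlex


def render_workflow_template(cluster_name, image_uri, num_workers,
                             main_class, jar_uri, properties):
    """Return YAML text for a one-job template that runs on a managed cluster."""
    lines = [
        "jobs:",
        "- stepId: run-application",
        "  sparkJob:",
        f"    mainClass: {main_class}",
        "    jarFileUris:",
        f"    - {jar_uri}",
    ]
    if properties:
        lines.append("    properties:")
        for key, value in properties.items():
            lines.append(f"      {key}: '{value}'")  # property values are strings
    lines += [
        "placement:",
        "  managedCluster:",  # created for this run, deleted when the workflow ends
        f"    clusterName: {cluster_name}",
        "    config:",
        "      masterConfig:",
        f"        imageUri: {image_uri}",
        "      workerConfig:",
        f"        imageUri: {image_uri}",
        "        machineTypeUri: n2-standard-8",
        f"        numInstances: {num_workers}",
    ]
    return "\n".join(lines) + "\n"


def instantiate_cmd(template_file, region):
    """Build the command that runs the template file as a one-off workflow."""
    return [
        "gcloud", "dataproc", "workflow-templates", "instantiate-from-file",
        f"--file={template_file}",
        f"--region={region}",
    ]


if __name__ == "__main__":
    print(render_workflow_template(
        "ephemeral-cluster",
        "projects/my-project/global/images/my-custom-image",  # hypothetical
        30, "com.example.MyJob", "gs://my-bucket/my-job.jar",
        {"spark.executor.cores": "5"},
    ), end="")
    print(shlex.join(instantiate_cmd("workflow-template.yaml", "us-central1")))
```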

Dataproc Workflow Templates provide a Dataproc orchestration solution for use cases such as:

Automation of repetitive tasks

Transactional fire-and-forget API interaction model

Support for ephemeral and long-lived clusters

Granular IAM security

For broader data orchestration strategies, consider a more comprehensive data orchestration service like Cloud Composer.

Next steps

This post demonstrates how you can optimize Dataproc job stability, performance, and cost-effectiveness. Use Workflow templates to deploy a configured ephemeral cluster that runs a Dataproc job with calculated application-specific properties.

Finally, there are many ways to keep improving performance. Review the guidance published on the Google Cloud Blog: for general best practices, see “Dataproc best practices”; for guidance on running in production, see “7 best practices for running Cloud Dataproc in production”.
