Database administrators and developers traditionally schedule scripts to run against databases using the system cron on the host where the database is running or using database schedulers, resulting in a tight coupling with the batch scripts and the database. Many Oracle database applications use files stored in a shared file system volume that is mounted on both the application and Oracle database server to load data into the database. As a managed database service, Amazon Relational Database Service (Amazon RDS) doesn’t provide access to the underlying infrastructure, so if you migrate such workloads, you must re-architect these jobs and ingestion pipelines.

To avoid the tightly coupled batch scheduling with database architecture, you can use AWS native solutions for batch scheduling as described in this post. This allows you to take advantage of fully managed Amazon RDS offerings like Amazon RDS for Oracle to reduce database management overhead and operational risk. After you decouple the batch scheduling, to reduce the dependency on database file storage, you can use Amazon S3 Integration with Amazon RDS as an alternative to shared file systems to easily, efficiently, and securely transfer data between Amazon RDS and Amazon Simple Storage Service (Amazon S3).

In this post, we present a solution that provides extensibility and portability across databases by parameterizing your batch requirements and scale without additional development efforts. You can use this solution to run various SQL scripts, shell or bash scripts, AWS Command Line Interface (AWS CLI) commands, or scripts built using programming languages such as Python, PySpark, Scala, or Java. This solution can help developers and scheduling teams decouple batch scheduling from your database and reuse it across different use cases. One additional benefit of this solution is the reusability of the script—you can have jobs that run across multiple databases, for example a tablespace utilization job.

Solution overview

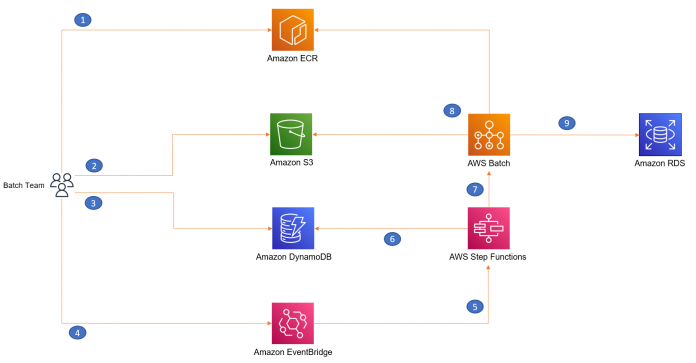

The following diagram illustrates the solution architecture.

You can create reusable container images specific to your batch script and keep those images in Amazon Elastic Container Registry (Amazon ECR). Amazon ECR helps you store, share, and deploy your container software anywhere. You can upload SQL (or other kind of) batch scripts to an S3 bucket. To make the solution parameterized and reusable for different use cases, you can keep the input parameters and metadata (for example, the location of the batch script you uploaded to Amazon S3) in Amazon DynamoDB. Amazon EventBridge invokes AWS Step Functions, a low-code, visual workflow service that you can use to orchestrate AWS Batch jobs. AWS Batch uses the container image stored in Amazon ECR. Step Functions fetches the metadata stored in a DynamoDB table to initiate an AWS Batch job and run the batch script against the target RDS database instance.

The walkthrough consists of the following high-level steps:

Store Amazon RDS credentials in AWS Secrets Manager.

Clone the GitHub repository with the sample codes to your AWS Cloud9 instance.

Create an S3 bucket and upload the batch script.

Create a Docker image to run the batch script against the database.

Build, tag, and push the Docker image to Amazon ECR.

Set up the AWS Batch environment and AWS Batch job definition.

Orchestrate running the AWS Batch job via Step Functions.

Set up an EventBridge schedule to invoke the Step Functions state machine.

The steps in this post are intended to provide general guidance, rather than specific procedures. Alternatively, you can past the sections walking through these manual steps to the section Deploy the solution using a CloudFormation template to simplify the setup through AWS CloudFormation.

Overview of AWS Batch

AWS Batch enables developers, scientists, and engineers to run hundreds of thousands of batch computing jobs on AWS. AWS Batch dynamically provisions the optimal quantity and type of compute resources based on the CPU and memory requirements of your batch jobs.

With AWS Batch, you don’t need to install and manage batch computing software or servers that you use to run your jobs, allowing you to focus on analyzing results and solving problems. For more information, watch the video How AWS Batch Works.

Prerequisites

Before you get started, complete the following prerequisites:

Have your Amazon Virtual Private Cloud (Amazon VPC) ready. Refer to the AWS CloudFormation VPC template to quickly build one.

Have your RDS instance created in the VPC above and have your database credentials handy.

Have your AWS Cloud9 instance ready in the VPC above. You can select a VPC using the Network settings (advanced) while creating the AWS Cloud9 instance.

Costs

AWS services used in this post may result some usage charges. Refer to AWS Pricing page to review the cost of those services used in this post.

Store Amazon RDS credentials in Secrets Manager

To store your Amazon RDS credentials, complete the following steps:

On the Amazon RDS console, choose Databases in the navigation pane.

Choose your database and on the Connectivity & security tab, capture the database endpoint URL and port.

On the Secrets Manager console, choose Secrets in the navigation pane.

Store a new secret as Other type of secret to store all the database login details as key-value pairs. Use the secret name as rds-secret.

If you use a different name, update the sample batch script with the correct name.

Instead of storing the database credentials as the secret type Other type of secret, you have the option to choose Credentials for Amazon RDS database or Credentials for other database. You can update the file rds_batch_sql1.sh that is referred to in the section Create an S3 bucket and upload the batch script based on your selection and refer to the SecretManager CLI commands. By using the secret type Other type of secret, we’re trying to keep the solution to be more generic. For example, you can use it for databases installed on an Amazon Elastic Compute Cloud (Amazon EC2) instance or have a different port number or SID than the default ones.

Clone the GitHub repository to AWS Cloud9

In this step, you clone the GitHub repository with the sample scripts that you can use.

Set up your AWS Cloud9 instance within the same VPC as your RDS instance.

Run the git clone command from the AWS Cloud9 terminal:

Create an S3 bucket and upload the batch script

In this step, you create an S3 bucket and upload our sample batch SQL script.

On the Amazon S3 console, choose Create bucket.

Name the bucket; for example, rdsbatch-123-useast1.

Select your Region; for example, US East (N. Virginia) us-east-1.

Choose Create bucket.

You can change the default settings.

Go to your AWS Cloud9 instance.

Change the directory to the Step1 folder of the GitHub repo you cloned:

Review the sample script script rds_batch_sql1.sh that you can run against the Amazon RDS database.

If you change the order of secret key-value pairs while storing the Amazon RDS credentials in Secrets Manager, adjust the print variable numbers in the shell script accordingly to get the right string. In the example code, the first string to be extracted is the host name, and the last is the password. If you change the name of the secret key to something other than rds-secret, update the new name in the script. Also check the Region used in the aws secretsmanager get-secret-value command. Update it according to your Region.

If you get any errors, check the Troubleshooting section.

To upload your AWS Batch files to your Amazon S3 bucket, run the following command from your AWS Cloud9 instance:

If you’re using a Windows machine to drag and drop the file to Amazon S3 using the browser, it may result in some issues running the Windows shell files on a Unix/Linux Docker image. Therefore, you should upload the script from a Unix/Linux instance (AWS Cloud9 in this case), using the AWS CLI s3 cp option.

Copy the Amazon S3 URI of the uploaded script (for example, s3://rdsbatch-123-useast1/rds_batch_sql1.sh) for future use.

Create a Docker image to run the batch script against the database

In this step, you create a Docker image that you can use as a runtime environment to run the SQL script.

To create a Docker image that downloads and runs the SQL script against the database, complete the following steps:

Go to your AWS Cloud9 instance.

Change the directory to the Step2 folder of the GitHub repo you cloned:

Edit the Dockerfile to use the right S3 bucket and object name, using the Amazon S3 URI copied in the previous section (for example, s3://rdsbatch-123-useast1/rds_batch_sql1.sh). This Docker image performs the following actions:

Installs the Oracle SQL*Plus client

Installs Python and pip

Installs the AWS CLI and which

Copies fetch_and_run_s3script.sh to the Docker image directory /usr/local/bin/

Invokes /usr/local/bin/fetch_and_run_s3script.sh when Docker starts

The script fetch_and_run_s3script.sh is a shell script that uses the AWS CLI to copy the AWS Batch file to the container and run it.

You use oraclelinux:8-slim Oracle Linux Docker build that is publicly available. If you prefer, you can download your preferred image from the Oracle site by logging in using Oracle your account.

Build the Docker image:

For more information about Docker, see What is Docker? For instructions on Creating a container image for use on Amazon ECS, see Creating a container image for use on Amazon ECS

Run the Docker image:

For a successful run, you should see the date printed as part of the output. If you get any errors, check the Troubleshooting section.

Change the Docker image and comment out or remove the line that contains ENV BATCH_FILE_S3_URL.

Update the Docker image:

Now you have the final version of the Docker image tested and ready to be uploaded to Amazon ECR, because AWS Batch requires the Docker image to be available in Amazon ECR.

Build, tag, and push the Docker image to Amazon ECR

To build, tag and push Docker image to Amazon ECR, follow these steps:

On the Amazon ECR console, create an Amazon ECR private repository (for this post, we call it myrdsbatch).

Select the repository and choose View push commands to run those from the AWS Cloud9 instance.

Retrieve an authentication token and authenticate your Docker client to your registry and run it.

If you receive an error using the AWS CLI, make sure that you have the latest version of the AWS CLI and Docker installed.

Build your Docker image.

Tag your image.

Push the image to your Amazon ECR repository.

The following screenshot shows that the image is pushed to Amazon ECR successfully.

Open the Amazon ECR image and capture the URI (for example, xxxxxx.dkr.ecr.us-east-1.amazonaws.com/myrdsbatch:latest).

Set up the AWS Batch environment and AWS Batch job definition

In this step, you set up an AWS Batch environment that you can use to run the database and SQL scripts. For more information, see Getting Started with AWS Batch.

On the AWS Batch console, choose Compute environment and then choose Create.

Select Compute environment type as Managed.

Enter a name for the environment, for example test-batch.

Select the following instance configuration options:

On-Demand (you have options to use AWS Fargate and Spot)

Minimum vCPUs: 4

Maximum vCPUs: 48

DesiredvCpus: 12

Under Networking, select the VPC settings to use your VPC and subnets, and Security group.

Create the compute environment.

In the navigation pane, choose Job queues and then choose Create.

Enter a name for the job queue, for example rds-batch-1.

Select the compute environment you just created (test-batch).

Create the job queue.

In the navigation pane, choose Job definitions and then choose Create.

Set Job Type as Single-Node.

Enter a name for the job definition, for example rds-batch-job-def-1.

Set the Platform type as EC2.

Choose an execution role.

Refer to the Troubleshooting section regarding role permissions to learn more about the permissions needed for the role.

For the job configuration image, enter the Amazon ECR image URI you copied earlier (xxx.dkr.ecr.us-east-1.amazonaws.com/myrdsbatch:latest).

Empty the Command field.

Enable Job role configuration and select your execution role.

Create the job definition.

On the AWS Batch console, choose Jobs and then Submit job.

Enter a name for the job, for example myrds-test-job1.

For Job definition, choose the job definition you created (rds-batch-job-def-1:<version#>).

For Job queue, choose the job queue you created (rds-batch-1).

Enable Environment variables configuration.

Add the BATCH_FILE_S3_URL parameter with the S3 URI as a key-value pair.

Choose Submit job.

Under Jobs, choose the job ID link to see the job Status. You can view the CloudWatch logs to make sure the job completed successfully.

Refer to the Troubleshooting section regarding how to monitor AWS Batch jobs, and fix failures or errors.

Orchestrate the AWS Batch job run via Step Functions

In this step, you orchestrate your AWS Batch job using Step Functions. Step Functions is a serverless function orchestrator that makes it easy to sequence AWS Lambda functions and multiple AWS services into business-critical applications.

In this example, you use three inputs to invoke an AWS Batch job: the S3 URI of your batch (SQL) script, the AWS Batch job definition, and AWS Batch queue ARN. You can save those values in a DynamoDB table for different scripts, and you have the option to add additional parameters such as CPU or memory if you want. The Step Functions state machine can connect to DynamoDB and use those parameters to invoke AWS Batch jobs.

On the Amazon DynamoDB console, in the navigation pane on the left side of the console, choose Dashboard. On the right side of the console, choose Create Table.

Enter the table details as follows:

For the table name, enter rds-batch.

For the partition key, enter JobName.

Leave Default settings selected.

Click on Create table to create the table.

On the Amazon DynamoDB console, in the navigation pane on the left side of the console, choose Tables. In the table list, choose the rds-batch table.

You can refer Create a table to know how to create a DynamoDB table.

Select Explore table items.

In the Items view, choose Create item.

For Attribute JobName enter value as Test123.

Choose Add new attribute, and then choose String. Name the field JobDefinition and paste the JobDefinition value of the AWS Batch job created in the previous section.

Repeat this process to create JobQueue and ScriptLocation fields of type String and enter the values for those fields.

JobName – The test job name, for example Test123

JobDefinition – The AWS Batch job definition ARN

JobQueue – The AWS Batch job queue ARN

ScriptLocation – The S3 URI of the sample batch script

You can refer Write data to a table using the console or AWS CLI to know how to write data to a DynamoDB table.

Create two more items in your DynamoDB table with deferent job names, for example Test124 and Test125, but keep the rest of the values the same.

If you select one item from the table, you have an option to duplicate the item by changing the job name. After you create all three entries, the table items look like the following screenshot.

Next, you create a Step Functions state machine to orchestrate running the AWS Batch job. You can use the sample state machine code state-machine-sample2.json, which extracts the AWS Batch job container parameters from the rds-batch DynamoDB table and runs three AWS Batch jobs (Test123, Test124, and Test125).

In case of any failures, the state machine can notify an Amazon Simple Notification Service (Amazon SNS) topic. So let’s create an SNS topic.

On the Amazon SNS console, choose Topics and then choose Create topic.

Set Standard as Type.

Name the topic Batch-Notify and create the topic.

Go to AWS Cloud9 instance and change the directory to the Step3 folder of the GitHub repo you cloned.

Copy the contents of state-machine-sample2.json or download it using the option you get when you right-click on the file explorer in AWS Cloud9.

On the Step Functions console, choose State Machines and then choose Create state machine.

Select Design your Workflow visually and choose Next.

Choose Import to import the state machine definition file.

Select the state named SNS Publish.

On the right side, you have options under API Parameters to re-select the newly created SNS topic ARN from the drop down.

Check the API Parameters values for Batch: Submit Job states where you get the AWS Batch job input from DynamoDB.

Go through the prompts to save the state machine.

Refer to Getting started with AWS Step Functions and Manage AWS Batch with Step Functions for more details.

Test the state machine by choosing Start execution to run it manually.

You can check the run details and monitor the AWS Batch logs via Amazon CloudWatch.

If you get any errors, refer to the Troubleshooting section regarding errors to see how to fix those issues.

Set up an EventBridge schedule to invoke the Step Functions state machine

To set up an EventBridge schedule to run your state machine, complete the following steps:

On the Amazon EventBridge console, choose Rules.

Choose Create a new rule.

Enter a name (for example, rds-batch-Daily10AM) and a description.

For the pattern, choose Schedule, and choose either a fixed rate or cron schedule.

To know more about EventBridge scheduling, check Creating an Amazon EventBridge rule that runs on a schedule.

Under Target, choose Step Functions state machine and choose the state machine you created.

You can create a new role or use an existing role. Go through the prompts and create the EventBridge rule.

Wait for the event to run and monitor the run details of the state machine and AWS Batch job.

Deploy the solution using a CloudFormation template

You can use the CloudFormation template provided in this post to simplify the setup.

Run the git clone command from your AWS Cloud9 instance to clone the GitHub repository:

Change the working directory to the CloudFormation folder of the GitHub repo you cloned:

Run the CloudFormation script using the following AWS CLI command by passing your parameter values:

This template creates the following resources:

A Secrets Manager key

An S3 bucket

A DynamoDB table

An Amazon ECR repository

AWS Batch resources: the compute environment, queue, and job definition

An SNS topic to notify of AWS Batch job failures

A Step Functions state machine

A CloudWatch Events rule

A AWS KMS key

Roles and permissions associated to these resources

Review the sample script rds_batch_sql1.sh kept under the same directory. This will be the batch script that you run against the Amazon RDS database.

To upload your AWS Batch files to your S3 bucket, run the following command from your AWS Cloud9 instance and copy the Amazon S3 URI of the uploaded script (for example, s3://rdsbatch-123-useast1/rds_batch_sql1.sh) for future use:

Get the push commands from Amazon ECR created by the CloudFormation script and run the push commands from your AWS Cloud9 instance working directory (cd Cloudformation) to upload the Docker image to Amazon ECR.

The script fetch_and_run_s3script.sh is a shell script that uses the AWS CLI to copy the AWS Batch file to the container and run the file.

The next step is to update DynamoDB with the AWS Batch parameters.

Open the JSON file named dynamodb_items.json under the same working directory and replace the job definition, job queue, and batch script file location in the JSON file. You can find the job definition and job queue from Resources tab of your CloudFormation Stack executed.

You can create different SQL scripts, upload them to Amazon S3, and change the script location S3 URI for jobs in the DynamoDB table.

Use AWS Cloud9 to create items in DynamoDB using the following script and verify the items in the DynamoDB table:

On the EventBridge console, choose Rules.

Edit the rule rds-batch-every10minutes and enable it.

You can monitor the scheduled run via the Step Functions state machine, AWS Batch monitoring, or the CloudWatch logs for the AWS Batch jobs. If you encounter failures such as Unable to locate credentials or TNS:Connect timeout occurred, check the Troubleshooting section regarding script errors.

Clean up

To clean up your resources, complete the following steps:

Empty the S3 bucket using the AWS Cloud9 instance:

Empty the Amazon ECR repository using the AWS Cloud9 instance:

On the CloudFormation console, delete the stacks that you created using the template rds-batch-scheduler.yaml.

Troubleshooting

In this section, we offer tips regarding SQL script errors, checking the AWS Batch job status, and AWS Batch role permissions.

Failures

If you get the error Unable to locate credentials, it means that your instance doesn’t have access to Secrets Manager. In that case, go to your AWS Cloud9 EC2 instance or AWS Batch compute instance, and on the Actions menu, choose Modify IAM role to update the AWS Identity and Access Management (IAM) role to one with access to Secrets Manager to use the secret.

If you get the error TNS:Connect timeout occurred, it means the instance can’t reach the RDS instance. In that case, change the security group of Amazon RDS to have traffic from the your instance security group flow through port 1521.

If you get the error An error occurred (403) when calling the HeadObject operation: Forbidden – Failed to download S3 script, it means that the docker runtime can’t access the S3 object uploaded. In that case, add list and read permission on S3 bucket and object to your role, check Bucket Policy Examples.

If you get the error An error occurred (AccessDeniedException) when calling the GetSecretValue operation: User: arn:aws:sts::xxxx:assumed-role/yyyy/i-abcd1234 is not authorized to perform: secretsmanager:GetSecretValue on resource: rds-secret because no identity-based policy allows the secretsmanager:GetSecretValue action, it means that the docker runtime can’t access the Secret Manager secret created. In that case, add read permission on Secret Manager secret to your role, check IAM policy examples for secrets in AWS Secrets Manager.

AWS Batch job status

The following screenshot shows the successful completion of the job.

You can validate the jobs using the CloudWatch logs that AWS Batch produced. The following screenshot shows the job details on the AWS Batch console. The details contain the CloudWatch logs for the job, the SQL script run against the database, and the date.

AWS Batch execution role permissions

Your AWS Batch execution role needs the following settings and permissions:

Trusted entities – ecs-tasks.amazonaws.com

Policies – AmazonEC2ContainerServiceRole, AWSBatchServiceRole, CloudWatchEventsFullAccess, AmazonEC2ContainerRegistryReadOnly

To grant access to the S3 bucket and object, check how to set up S3 bucket policy. To grant access to the Secret Manager secret, check IAM policy examples for secrets in AWS Secrets Manager. Also, you can create an inline policy for your role using the sample JSON policy given below:

If you made any changes to the settings and notice if the Batch Job is stuck is RUNNABLE state, see Why is my AWS Batch job stuck in RUNNABLE status?

Additional references

The post Using AWS CloudFormation to Create and Manage AWS Batch Resources highlights the native resources supported in AWS CloudFormation and demonstrates how to create AWS Batch compute environments using AWS CloudFormation.

The post Scheduling and running Amazon RDS jobs with AWS Batch and Amazon CloudWatch rules demonstrates how to use AWS Batch and CloudWatch rules to dynamically provision resources and schedule and run functions or stored procedures on a PostgreSQL database.

The post Creating a Simple “Fetch & Run” AWS Batch Job walks you through the steps to create and run a simple fetch and run job in AWS Batch.

Conclusion

In this post, we demonstrated how you can use EventBridge, Step Functions, and AWS Batch to schedule and orchestrate your batch scripts.

You can use this solution to run database and SQL scripts, bash scripts, Python scripts, and AWS service-specific tasks using the AWS CLI. You can also extend the solution to run scripts built using programming languages such as PySpark, Scala, or Java. Additionally, you can enable alerting using CloudWatch Logs and Amazon SNS, and enable monitoring using CloudWatch Logs, Amazon S3, Amazon Athena, and Amazon QuickSight.

If you have questions or suggestions, leave a comment.

About the Authors

Dilin Joy is a Partner Solutions Architect at Amazon Web Services. He works with a leading Global System Integrator (GSI) to provide architectural guidance and support them in building strategic industry solutions on AWS.

Roshin Babu is a Data Analytics Specialist Solutions Architect with Amazon Web Services. He works with the Sales Specialists team supporting AWS Public Sector customers.

Read MoreAWS Database Blog