Data scientists often train their models locally and look for a proper hosting service to deploy their models. Unfortunately, there’s no one set mechanism or guide to deploying pre-trained models to the cloud. In this post, we look at deploying trained models to Amazon SageMaker hosting to reduce your deployment time.

SageMaker is a fully managed machine learning (ML) service. With SageMaker, you can quickly build and train ML models and directly deploy them into a production-ready hosted environment. Additionally, you don’t need to manage servers. You get an integrated Jupyter notebook environment with easy access to your data sources. You can perform data analysis, train your models, and test them using your own algorithms or use SageMaker-provided ML algorithms that are optimized to run efficiently against large datasets spread across multiple machines. Training and hosting are billed by minutes of usage, with no minimum fees and no upfront commitments.

Solution overview

Data scientists sometimes train models locally using their IDE and either ship those models to the ML engineering team for deployment or just run predictions locally on powerful machines. In this post, we introduce a Python library that simplifies the process of deploying models to SageMaker for hosting on real-time or serverless endpoints.

This Python library gives data scientists a simple interface to quickly get started on SageMaker without needing to know any of the low-level SageMaker functionality.

If you have models trained locally using your preferred IDE and want to benefit from the scale of the cloud, you can use this library to deploy your model to SageMaker. With SageMaker, in addition to all the scaling benefits of a cloud-based ML platform, you have access to purpose-built training tools (distributed training, hyperparameter tuning), experiment management, model management, bias detection, model explainability, and many other capabilities that can help you in any aspect of the ML lifecycle. You can choose from the three most popular frameworks for ML: Scikit-learn, PyTorch, and TensorFlow, and can pick the type of compute you want. Defaults are provided along the way so users of this library can deploy their models without needing to make complex decisions or learn new concepts. In this post, we show you how to get started with this library and optimize deploying your ML models on SageMaker hosting.

The library can be found in the GitHub repository.

The SageMaker Migration Toolkit

The SageMakerMigration class is available through a Python library published to GitHub. Installation instructions are provided in the repository; follow the README to set up your environment properly. The rest of this post covers how to use the library after you install it.

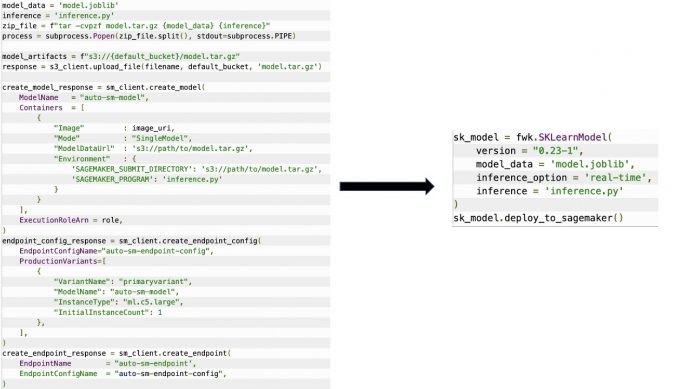

The SageMakerMigration class consists of high-level abstractions over SageMaker APIs that significantly reduce the steps needed to deploy your model to SageMaker, as illustrated in the following figure. This is intended for experimentation so developers can quickly get started and test SageMaker. It is not intended for production migrations.

For Scikit-learn, PyTorch, and TensorFlow models, this library supports deploying trained models to a SageMaker real-time endpoint or serverless endpoint. To learn more about the inference options in SageMaker, refer to Deploy Models for Inference.

Real-time vs. serverless endpoints

Real-time inference is ideal for inference workloads where you have real-time, interactive, low latency requirements. You can deploy your model to SageMaker hosting services and get an endpoint that can be used for inference. These endpoints are fully managed and support auto scaling.

SageMaker Serverless Inference is a purpose-built inference option that makes it easy for you to deploy and scale ML models. Serverless Inference is ideal for workloads that have idle periods between traffic spurts and can tolerate cold starts. Serverless endpoints automatically launch compute resources and scale them in and out depending on traffic, eliminating the need to choose instance types or manage scaling policies. This takes away the undifferentiated heavy lifting of selecting and managing servers.

Depending on your use case, you may want to quickly host your model on SageMaker without actually having an instance always on and incurring costs, in which case a serverless endpoint is a great solution.
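To make the difference between the two endpoint types concrete, the following sketch builds the production variant dictionaries that the Boto3 create_endpoint_config call accepts. It is an illustration under assumptions, not the toolkit's internals: the helper names, the variant name, and the default instance type, memory size, and concurrency values are all hypothetical.

```python
def real_time_variant(model_name, instance_type="ml.m5.xlarge", instance_count=1):
    """Variant for an always-on endpoint backed by instances you choose."""
    return {
        "VariantName": "AllTraffic",  # hypothetical variant name
        "ModelName": model_name,
        "InstanceType": instance_type,  # assumed default, pick your own
        "InitialInstanceCount": instance_count,
    }


def serverless_variant(model_name, memory_mb=2048, max_concurrency=5):
    """Variant for a serverless endpoint: no instance type or count is set."""
    return {
        "VariantName": "AllTraffic",  # hypothetical variant name
        "ModelName": model_name,
        "ServerlessConfig": {
            "MemorySizeInMB": memory_mb,  # assumed default
            "MaxConcurrency": max_concurrency,  # assumed default
        },
    }
```

Either dictionary could be passed as an item in the ProductionVariants list of create_endpoint_config. The serverless variant carries only memory and concurrency settings, which reflects the point above that you don’t choose instance types.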

Prepare your trained model and inference script

After you’ve identified the model you want to deploy on SageMaker, you must ensure the model is presented to SageMaker in the right format. SageMaker endpoints generally consist of two components: the trained model artifact (.pth, .pkl, and so on) and an inference script. The inference script is not always mandatory, but if not provided, the default handlers for the serving container that you’re using are applied. It’s essential to provide this script if you need to customize your input/output functionality for inference.

The trained model artifact is simply a saved Scikit-learn, PyTorch, or TensorFlow model. For Scikit-learn, this is typically a pickle file; for PyTorch, it’s a .pt or .pth file; and for TensorFlow, it’s a folder containing assets, .pb files, and variables.

Generally, you need to be able to control how your model processes input and performs inference, and control the output format for your response. With SageMaker, you can provide an inference script to add this customization. Any inference script used by SageMaker must have one or more of the following four handler functions: model_fn, input_fn, predict_fn, and output_fn.

Note that these four functions apply specifically to the PyTorch and Scikit-learn containers. TensorFlow uses slightly different handlers because its container is integrated with TensorFlow Serving. An inference script for TensorFlow has two handlers: input_handler and output_handler. They serve the same preprocessing and postprocessing purposes as the functions above, but they’re configured slightly differently to integrate with TensorFlow Serving. For PyTorch models, model_fn is compulsory in the inference script.
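The following is a rough sketch of the two TensorFlow handlers. It assumes the (data, context) signature used by the SageMaker TensorFlow Serving container, and it assumes the client sends a bare JSON array of rows; the payload shape is an illustrative choice, not something the toolkit prescribes.

```python
import json


def input_handler(data, context):
    """Preprocess the request before it is forwarded to TensorFlow Serving."""
    if context.request_content_type == "application/json":
        # Assumption: the client sends a JSON array of rows, e.g. [[1.0, 2.0]].
        rows = json.loads(data.read().decode("utf-8"))
        # The TensorFlow Serving REST API accepts a body with an "instances" key.
        return json.dumps({"instances": rows})
    raise ValueError(f"Unsupported content type: {context.request_content_type}")


def output_handler(response, context):
    """Postprocess the TensorFlow Serving response before returning it."""
    if response.status_code != 200:
        raise ValueError(response.content.decode("utf-8"))
    # Return the raw prediction bytes with the content type the client asked for.
    return response.content, context.accept_header
```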

model_fn

This is the first of the handler functions to run. The serving container calls it to load the model, typically when the model server starts rather than on every request, so this is where you write your model-loading code. For example:
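The following is a minimal sketch of model_fn for a Scikit-learn model saved as a pickle file. The file name model.pkl is an assumption for illustration; use whatever name your artifact actually has.

```python
import os
import pickle


def model_fn(model_dir):
    """Load the trained model from the directory SageMaker passes in."""
    # Assumption: the artifact was saved as "model.pkl" with the pickle module.
    model_path = os.path.join(model_dir, "model.pkl")
    with open(model_path, "rb") as model_file:
        return pickle.load(model_file)
```

Only unpickle artifacts that you created yourself or otherwise trust, because loading a pickle file can execute arbitrary code.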

Depending on the framework and type of model, this code may change, but the function must return an initialized model.

input_fn

This is the second function that is called when your endpoint is invoked. This function takes the data sent to the endpoint for inference and parses it into the format required for the model to generate a prediction. For example:
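As a minimal sketch, the following input_fn handles JSON requests. It assumes the client sends a JSON array of numeric rows; that payload shape and the decision to reject other content types are illustrative choices.

```python
import json


def input_fn(request_body, request_content_type):
    """Parse the raw request into the structure the model expects."""
    if request_content_type == "application/json":
        # Assumption: the body is a JSON array of rows, e.g. [[1, 2], [3, 4]].
        rows = json.loads(request_body)
        # Coerce every value to float so the model receives numeric features.
        return [[float(value) for value in row] for row in rows]
    raise ValueError(f"Unsupported content type: {request_content_type}")
```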

The request_body contains the data to be used for generating inference from the model and is parsed in this function so that it’s in the required format.

predict_fn

This is the third function that is called when your model is invoked. This function takes the preprocessed input data returned from input_fn and uses the model returned from model_fn to make the prediction. For example:
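A minimal predict_fn could look like the following sketch. It assumes the object returned by model_fn exposes a Scikit-learn style predict method; a PyTorch model would instead be called directly, usually inside torch.no_grad().

```python
def predict_fn(input_data, model):
    """Run inference with the loaded model on the parsed input."""
    # Assumption: the model follows the Scikit-learn convention of a
    # predict() method that accepts a batch of rows.
    return model.predict(input_data)
```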

You can optionally add output_fn to parse the output of predict_fn before returning it to the client. The function signature is def output_fn(prediction, content_type).
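The following sketch shows one possible output_fn using that signature. Wrapping the result under a "predictions" key and supporting only JSON are assumptions made for illustration.

```python
import json


def output_fn(prediction, content_type):
    """Serialize the prediction into the response format for the client."""
    if content_type == "application/json":
        # tolist() covers NumPy arrays and tensors; plain lists pass through.
        values = prediction.tolist() if hasattr(prediction, "tolist") else prediction
        # Assumption: the response wraps results under a "predictions" key.
        return json.dumps({"predictions": values})
    raise ValueError(f"Unsupported content type: {content_type}")
```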

Move your pre-trained model to SageMaker

After you have your trained model file and inference script, you must put these files in a folder as follows:
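The exact layout the toolkit expects is described in the repository README. As a hedged sketch of the general idea, the following hypothetical helper copies a single-file model artifact and an inference script into one folder. The flat layout and the function name are assumptions, and a TensorFlow SavedModel, which is itself a folder, would need a directory copy instead.

```python
import shutil
from pathlib import Path


def stage_model_folder(model_path, script_path, dest_dir):
    """Copy a model file and an inference script into one folder."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    for source in (Path(model_path), Path(script_path)):
        if not source.is_file():
            raise FileNotFoundError(f"Expected a file at {source}")
        # Keep the original file names inside the destination folder.
        shutil.copy2(source, dest / source.name)
    # Return the folder contents so the caller can check what was staged.
    return sorted(path.name for path in dest.iterdir())
```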

After your model and inference script have been prepared and saved in this folder structure, your model is ready for deployment on SageMaker. See the following code:

After deployment of your endpoint, make sure to clean up any resources you won’t utilize via the SageMaker console or through the delete_endpoint Boto3 API call.
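The cleanup can be sketched with Boto3 as follows. The helper name is hypothetical, and removing the endpoint configuration and model along with the endpoint is an assumption about what you want to delete; skip those calls if other endpoints share them.

```python
def delete_endpoint_resources(sm_client, endpoint_name):
    """Delete an endpoint together with its endpoint config and models."""
    # Look up the config and model names before deleting anything.
    endpoint = sm_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sm_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [variant["ModelName"] for variant in config["ProductionVariants"]]

    sm_client.delete_endpoint(EndpointName=endpoint_name)
    # Assumption: the config and models are not shared with other endpoints.
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for model_name in model_names:
        sm_client.delete_model(ModelName=model_name)

    return {
        "endpoint": endpoint_name,
        "endpoint_config": config_name,
        "models": model_names,
    }
```

You would call it with a SageMaker client, for example delete_endpoint_resources(boto3.client("sagemaker"), "my-endpoint"), where the endpoint name is a placeholder.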

Conclusion

The goal of the SageMaker Migration Toolkit project is to make it easy for data scientists to onboard their models onto SageMaker to take advantage of cloud-based inference. The repository will continue to evolve and support more options for migrating workloads to SageMaker. The code is open source and we welcome community contributions through pull requests and issues.

Check out the GitHub repository to explore more on utilizing the SageMaker Migration Toolkit, and feel free to also contribute examples or feature requests to add to the project!

About the authors

Kirit Thadaka is an ML Solutions Architect working in the Amazon SageMaker Service SA team. Prior to joining AWS, Kirit spent time working in early stage AI startups followed by some time in consulting in various roles in AI research, MLOps, and technical leadership.

Ram Vegiraju is an ML Architect with the SageMaker Service team. He focuses on helping customers build and optimize their AI/ML solutions on Amazon SageMaker. In his spare time, he loves traveling and writing.

Read more: AWS Machine Learning Blog