Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. Customers often have objects in S3 buckets that need further processing before consuming applications can use them effectively. Data engineers must support these application-specific data views by choosing between persisting derived copies and transforming data at the consumer level. Neither option is ideal: derived copies add operational complexity and data consistency challenges, while consumer-side transformation spends more expensive compute resources on the work.

These trade-offs apply broadly to many machine learning (ML) pipelines that train on unstructured data, such as audio, video, and free-form text. In each case, the training job must download data from S3 buckets, prepare an application-specific view, and then run the AI algorithm. This post demonstrates a design pattern for managing this second step centrally while reducing its cost and complexity. It uses image processing as a concrete example, though the approach applies broadly to other workloads. The economic benefits are most pronounced when the transformation step doesn't require a GPU but the AI algorithm does.

The proposed solution also centralizes data transformation code and enables just-in-time (JIT) transformation. Furthermore, the approach uses a serverless infrastructure to reduce operational overhead and undifferentiated heavy lifting.

Solution overview

When ML algorithms process unstructured data such as images and video, the data requires various normalization tasks (such as grayscaling and resizing). This step exists to accelerate model convergence, avoid overfitting, and improve prediction accuracy. You often perform these preprocessing steps on the same instances that later run the AI training. That approach is inefficient, because those instances typically have more expensive processors (for example, GPUs) than these tasks require. Instead, this solution offloads those operations to economical, horizontally scalable Amazon S3 Object Lambda functions.
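To make the two normalization tasks concrete, the following dependency-free sketch converts a tiny image, stored as nested lists of (B, G, R) pixels, to grayscale with the ITU-R BT.601 luminance weights that OpenCV documents for its `COLOR_BGR2GRAY` conversion, then shrinks it by averaging non-overlapping blocks (a rough stand-in for `cv2.INTER_AREA` at integer scale factors). The nested-list format and the function names are illustrative assumptions; a real pipeline would use NumPy arrays and OpenCV directly.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (B, G, R), the channel order OpenCV uses


def to_grayscale(image: List[List[Pixel]]) -> List[List[float]]:
    """Collapse each BGR pixel to one luminance value (BT.601 weights)."""
    return [[0.114 * b + 0.587 * g + 0.299 * r for (b, g, r) in row] for row in image]


def downsample(gray: List[List[float]], factor: int) -> List[List[float]]:
    """Shrink an image by averaging non-overlapping factor x factor blocks.

    Rows and columns that don't fill a complete block are dropped.
    """
    height, width = len(gray), len(gray[0])
    out = []
    for y in range(0, height - height % factor, factor):
        out_row = []
        for x in range(0, width - width % factor, factor):
            block = [gray[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


if __name__ == "__main__":
    # A 2x2 image in BGR order: white, black, pure red, pure blue
    img = [[(255, 255, 255), (0, 0, 0)], [(0, 0, 255), (255, 0, 0)]]
    gray = to_grayscale(img)
    print(gray)
    print(downsample(gray, 2))
```

Neither step needs a GPU, which is why this work can move off the training instances.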

This design pattern has three key benefits. First, it centralizes shared data transformation steps, such as image normalization, and removes code duplication across ML pipelines. Second, because S3 Object Lambda functions convert data just in time, there are no derived copies that can become inconsistent with the source data. Third, the serverless infrastructure reduces operational overhead and limits costs to the milliseconds your code actually runs.

S3 Object Lambda offers an elegant way to centralize these data preprocessing and conversion operations. It lets you add your own code that modifies data from Amazon S3 before it is returned to an application. The code runs in an AWS Lambda function. Lambda is a serverless compute service that can scale to tens of thousands of parallel runs and supports many programming languages through managed and custom runtimes, as well as container images. For more information, see Introducing Amazon S3 Object Lambda – Use Your Code to Process Data as It Is Being Retrieved from S3.

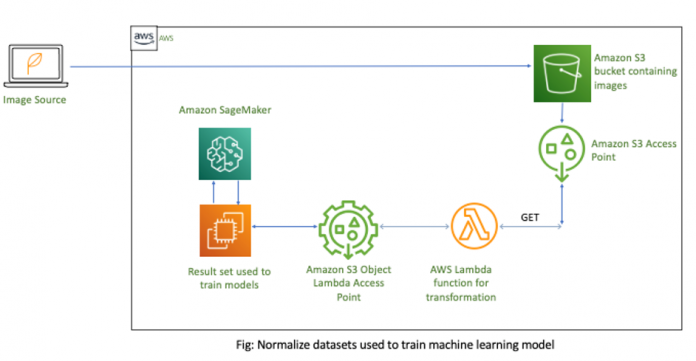

The following diagram illustrates the solution architecture.

In this solution, an S3 bucket contains the raw images to be processed. You create an S3 Access Point for these images. If you build multiple ML models, you can create separate S3 Access Points for each model; alternatively, AWS Identity and Access Management (IAM) policies for access points support sharing reusable functions across ML pipelines. Next, you attach a Lambda function that contains your preprocessing business logic to the S3 Access Point by creating an S3 Object Lambda Access Point. When an application requests an object through the S3 Object Lambda Access Point, the function performs the JIT data transformation before the data is returned. Finally, you update your ML training code to retrieve data from Amazon S3 through the new S3 Object Lambda Access Point.
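When each model has its own S3 Object Lambda Access Point, training code only needs to know which access point belongs to its model. The following sketch builds Object Lambda Access Point ARNs, which follow the format `arn:aws:s3-object-lambda:<region>:<account-id>:accesspoint/<name>`, from a hypothetical model-to-access-point mapping. The model names, access point names, Region, and account ID are placeholders, not values from this solution.

```python
# Hypothetical mapping from each ML model to its dedicated Object Lambda Access Point
MODEL_ACCESS_POINTS = {
    "image-classifier": "image-normalizer",
    "thumbnail-detector": "thumbnail-normalizer",
}


def object_lambda_access_point_arn(region: str, account_id: str, name: str) -> str:
    """Build an S3 Object Lambda Access Point ARN from its parts."""
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("AWS account IDs are 12 digits")
    return f"arn:aws:s3-object-lambda:{region}:{account_id}:accesspoint/{name}"


def access_point_for_model(model: str, region: str, account_id: str) -> str:
    """Look up the Object Lambda Access Point ARN that a given model reads from."""
    return object_lambda_access_point_arn(region, account_id, MODEL_ACCESS_POINTS[model])
```

Keeping the mapping in one place means a model can switch to a different transformation by pointing at another access point, without touching the training loop itself.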

Create the normalization access point

This section walks through the steps to create the S3 Object Lambda access point.

Raw data is stored in an S3 bucket. To give users the right set of permissions to access this data, while avoiding complex bucket policies that can unexpectedly affect other applications, create an S3 Access Point. S3 Access Points are unique hostnames that you can use to reach S3 buckets. Each access point has its own access control policy, so you can control access to shared datasets easily and securely.
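As an illustration of a per-access-point policy, the following sketch builds a policy document that lets a single IAM role read objects through a single access point. The access point name, role ARN, Region, and account ID are hypothetical placeholders. The object resource uses the `arn:aws:s3:<region>:<account-id>:accesspoint/<name>/object/*` form, and the JSON string is what the S3 Control `put_access_point_policy` call accepts as its `Policy` argument.

```python
import json


def read_only_access_point_policy(region: str, account_id: str,
                                  access_point: str, role_arn: str) -> dict:
    """Policy that allows one IAM role to read objects through one access point."""
    object_arn = f"arn:aws:s3:{region}:{account_id}:accesspoint/{access_point}/object/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": "s3:GetObject",
                "Resource": object_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only
    policy = read_only_access_point_policy(
        "us-west-2", "123456789012", "raw-images",
        "arn:aws:iam::123456789012:role/TrainingRole",
    )
    print(json.dumps(policy, indent=2))
```

Permissions in an access point policy take effect only if the underlying bucket also permits the same access, for example through a bucket policy that delegates access control to access points.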

1. Create your access point.

2. Create a Lambda function that performs the image resizing and grayscale conversion. See the following Python code:

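```python
# requests and OpenCV (cv2) aren't included in the Lambda Python runtime;
# package them with the function, for example in a layer or container image.
import boto3
import cv2
import numpy as np
import requests

# Create the client once so warm invocations reuse it
s3 = boto3.client("s3")


def lambda_handler(event, context):
    print(event)

    # S3 Object Lambda passes a presigned URL for the original object,
    # plus the route and token used to return the transformed response
    object_get_context = event["getObjectContext"]
    request_route = object_get_context["outputRoute"]
    request_token = object_get_context["outputToken"]
    s3_url = object_get_context["inputS3Url"]

    # Get the original object from S3
    response = requests.get(s3_url)
    response.raise_for_status()

    # Decode the raw bytes into a BGR image
    nparr = np.frombuffer(response.content, np.uint8)
    img = cv2.imdecode(nparr, cv2.IMREAD_COLOR)
    if img is None:
        raise ValueError("Unable to decode the source object as an image")

    # Transform object: resize to 256x256, then convert to grayscale
    new_shape = (256, 256)
    resized = cv2.resize(img, new_shape, interpolation=cv2.INTER_AREA)
    gray_scaled = cv2.cvtColor(resized, cv2.COLOR_BGR2GRAY)

    # Encode the transformed image as JPEG
    is_success, buffer = cv2.imencode(".jpg", gray_scaled)
    if not is_success:
        raise ValueError("Unable to imencode()")
    transformed_object = buffer.tobytes()

    # Write the transformed object back to S3 Object Lambda
    s3.write_get_object_response(
        Body=transformed_object,
        ContentType="image/jpeg",
        RequestRoute=request_route,
        RequestToken=request_token,
    )

    return {"status_code": 200}
```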

3. Create an Object Lambda access point that uses the supporting access point from Step 1 and the Lambda function from Step 2.

The Lambda function uses the supporting access point to download the original objects.

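4. Update your Amazon SageMaker training code to retrieve data from Amazon S3 through the new S3 Object Lambda access point. For example, the following AWS CLI command retrieves a normalized image and saves it to a local file:

```bash
aws s3api get-object --bucket arn:aws:s3-object-lambda:us-west-2:123456789012:accesspoint/image-normalizer --key images/test.png test-normalized.jpg
```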
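The console steps above can also be scripted. The following sketch uses the boto3 S3 Control operations `create_access_point` and `create_access_point_for_object_lambda` to create the supporting access point (Step 1) and the Object Lambda access point (Step 3), and shows how training code could read a transformed object by passing the Object Lambda access point ARN as `Bucket` to `get_object` (Step 4). The bucket name, access point names, and function ARN are hypothetical, and the clients are passed in as parameters so the helpers can be exercised without AWS credentials.

```python
from typing import Any


def make_clients(region: str):
    """Create real boto3 clients. boto3 is imported here, not at module level,
    so the helpers below can also be exercised with stand-in clients."""
    import boto3

    return boto3.client("s3control", region_name=region), boto3.client("s3", region_name=region)


def create_normalization_access_points(s3control: Any, account_id: str, bucket: str,
                                       function_arn: str,
                                       supporting_name: str = "raw-images",
                                       object_lambda_name: str = "image-normalizer") -> str:
    """Create the supporting and Object Lambda access points; return the latter's ARN."""
    supporting = s3control.create_access_point(
        AccountId=account_id, Name=supporting_name, Bucket=bucket
    )
    response = s3control.create_access_point_for_object_lambda(
        AccountId=account_id,
        Name=object_lambda_name,
        Configuration={
            "SupportingAccessPoint": supporting["AccessPointArn"],
            "TransformationConfigurations": [
                {
                    # Route GetObject requests through the normalization function
                    "Actions": ["GetObject"],
                    "ContentTransformation": {"AwsLambda": {"FunctionArn": function_arn}},
                }
            ],
        },
    )
    return response["ObjectLambdaAccessPointArn"]


def read_normalized_image(s3: Any, object_lambda_arn: str, key: str) -> bytes:
    """Fetch an object through the Object Lambda access point; the bytes
    returned are the ones the Lambda function wrote back."""
    response = s3.get_object(Bucket=object_lambda_arn, Key=key)
    return response["Body"].read()
```

In a real deployment, the function's execution role also needs permission for `s3-object-lambda:WriteGetObjectResponse`, and the training role needs access through both access points; those IAM details are omitted from this sketch.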

Cost savings analysis

Traditionally, ML pipelines copy images and other files from Amazon S3 to SageMaker instances and then perform normalization. However, performing these transformations on training instances is inefficient. Lambda functions, by contrast, scale horizontally to handle bursts and then shrink elastically, and you're charged per millisecond only while your code runs. Many preprocessing steps don't require GPUs and can even run on ARM64. That creates an incentive to move that processing to more economical compute, such as Lambda functions powered by AWS Graviton2 processors.
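Lambda's compute charge is the allocated memory in GB multiplied by run time in seconds (GB-seconds), times a per-GB-second price, plus a per-request charge. The following sketch encodes that billing model for a batch of preprocessing invocations. The prices are assumptions, taken as the published us-east-1 on-demand rates of $0.0000166667 per GB-second for x86, $0.0000133334 per GB-second for Arm, and $0.20 per million requests; check current pricing for your Region. The sketch ignores the free tier and volume discounts.

```python
from typing import Dict

# Assumed on-demand prices in USD; verify against current Lambda pricing.
PRICE_PER_GB_SECOND: Dict[str, float] = {"x86_64": 0.0000166667, "arm64": 0.0000133334}
PRICE_PER_REQUEST = 0.20 / 1_000_000


def lambda_cost(memory_mb: int, duration_ms: float, invocations: int,
                architecture: str = "x86_64") -> float:
    """Estimate Lambda cost as GB-seconds x price plus per-request charges."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000) * invocations
    return gb_seconds * PRICE_PER_GB_SECOND[architecture] + invocations * PRICE_PER_REQUEST


if __name__ == "__main__":
    # One million 1-second invocations at 256 MB, one image per invocation
    for arch in ("x86_64", "arm64"):
        print(arch, round(lambda_cost(256, 1000, 1_000_000, arch), 2))
```

Because billing stops when each invocation ends, idle time between bursts costs nothing, unlike an always-on training instance.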

Using the Lambda pricing calculator, you can configure a function with 256 MB of memory and compare the costs for x86 and Graviton2 (ARM64). We chose this size because it's sufficient for many single-image data preparation tasks. Next, use the SageMaker pricing calculator to compute the cost of an ml.p2.xlarge instance, the smallest supported SageMaker training instance with GPU support. These results show more than 90% compute savings for operations that don't use GPUs and can shift to Lambda. The following table summarizes these findings.

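| | Lambda with x86 | Lambda with Graviton2 (ARM) | SageMaker ml.p2.xlarge |
|---|---|---|---|
| Memory (GB) | 0.25 | 0.25 | 61 |
| vCPUs | Proportional to memory | Proportional to memory | 4 |
| GPUs | None | None | 1 |
| Cost/hour | $0.061 | $0.049 | $0.90 |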
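The savings figure follows directly from the hourly costs in the table: savings = 1 − (Lambda cost/hour ÷ instance cost/hour). The following sketch reproduces that arithmetic with the table's values; it compares hourly rates only, as the table does, and doesn't model how many function-hours a given training job would actually need.

```python
# Hourly costs (USD) copied from the comparison table
HOURLY_COST = {
    "Lambda with x86": 0.061,
    "Lambda with Graviton2 (ARM)": 0.049,
    "SageMaker ml.p2.xlarge": 0.90,
}


def savings_vs(baseline: float, alternative: float) -> float:
    """Fraction saved by paying `alternative` instead of `baseline` per hour."""
    return 1 - alternative / baseline


if __name__ == "__main__":
    base = HOURLY_COST["SageMaker ml.p2.xlarge"]
    for name in ("Lambda with x86", "Lambda with Graviton2 (ARM)"):
        print(f"{name}: {savings_vs(base, HOURLY_COST[name]):.1%} lower cost per hour")
```

With these inputs, x86 comes out about 93.2% and Graviton2 about 94.6% cheaper per hour than the ml.p2.xlarge instance.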

Conclusion

You can build modern applications to unlock insights into your data. These applications have unique data view requirements, such as formatting and preprocessing actions. Addressing each of these use cases separately can result in data duplication, higher costs, and more complexity to maintain consistency. This post offers a solution for handling these situations efficiently using S3 Object Lambda functions.

Not only does this remove the need for duplication, but it also provides a path to scale these actions horizontally across less expensive compute. Even well-optimized transformation code running on an ml.p2.xlarge instance would still cost significantly more because of the idle GPU.

For more ideas on using serverless and ML, see Machine learning inference at scale using AWS serverless and Deploying machine learning models with serverless templates.

About the Authors

Nate Bachmeier is an AWS Senior Solutions Architect who nomadically explores New York, one cloud integration at a time. He specializes in migrating and modernizing customers' workloads. Besides this, Nate is a full-time student and has two kids.

Marvin Fernandes is a Solutions Architect at AWS, based in the New York City area. He has over 20 years of experience building and running financial services applications. He is currently working with large enterprise customers to solve complex business problems by crafting scalable, flexible, and resilient cloud architectures.

Read more: AWS Machine Learning Blog