We are excited to announce that the open source Pub/Sub Lite Apache Spark connector is now compatible with Apache Spark 3.X.X distributions, and the connector is officially GA.

What is the Pub/Sub Lite Apache Spark Connector?

Pub/Sub Lite is a Google Cloud messaging service that allows users to send and receive messages asynchronously between independent applications. Publisher applications send messages to Pub/Sub Lite topics, and subscriber applications receive those messages through Pub/Sub Lite subscriptions.

Pub/Sub Lite offers both zonal and regional topics, which differ only in the way that data is replicated. Zonal topics store data in a single zone, while regional topics replicate data to two zones in a single region.

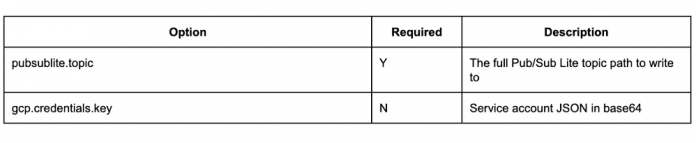

The Pub/Sub Lite Spark connector supports the use of Pub/Sub Lite as both an input source and an output sink for Apache Spark Structured Streaming. When writing to Pub/Sub Lite, the connector supports the following configuration options:

When reading from Pub/Sub Lite, the connector supports the following configuration options:

The connector works in all Apache Spark distributions, including Databricks and Google Cloud Dataproc. The first GA release of the Pub/Sub Lite Spark connector is v1.0.0, and it is compatible with Apache Spark 3.X.X versions.

Getting Started with Pub/Sub Lite and Spark Structured Streaming on Dataproc

The Pub/Sub Lite Spark connector makes it simple to use Pub/Sub Lite as a source with Spark Structured Streaming.

To get started, first create a Google Cloud Dataproc cluster.

The cluster image version determines the Apache Spark version that is installed on the cluster. The Pub/Sub Lite Spark connector currently supports Spark 3.X.X, so choose a 2.X.X image version.

Enable API access to Google Cloud services by providing the ‘https://www.googleapis.com/auth/cloud-platform’ scope.
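
The two cluster settings above (a 2.X.X image and the cloud-platform scope) can also be expressed programmatically. The following is a minimal sketch that assumes the `google-cloud-dataproc` Python client library is installed; the default image version `2.0-debian10` and all project, region, and cluster names are illustrative assumptions, not values from this post.

```python
# Sketch: describe a Dataproc cluster with a 2.x image and the cloud-platform scope.
CLOUD_PLATFORM_SCOPE = "https://www.googleapis.com/auth/cloud-platform"


def build_cluster(project_id, cluster_name, image_version="2.0-debian10"):
    """Return a cluster spec as a dict in the shape the Dataproc API expects."""
    return {
        "project_id": project_id,
        "cluster_name": cluster_name,
        "config": {
            # 2.x images ship Spark 3.x, which the connector requires.
            # The default image version here is an assumed example.
            "software_config": {"image_version": image_version},
            # Lets jobs on the cluster call Google Cloud APIs such as Pub/Sub Lite.
            "gce_cluster_config": {
                "service_account_scopes": [CLOUD_PLATFORM_SCOPE]
            },
        },
    }


def create_cluster(project_id, region, cluster_name):
    """Create the cluster and block until the operation completes."""
    # Imported lazily so build_cluster works without the client library.
    from google.cloud import dataproc_v1

    client = dataproc_v1.ClusterControllerClient(
        client_options={"api_endpoint": f"{region}-dataproc.googleapis.com:443"}
    )
    operation = client.create_cluster(
        request={
            "project_id": project_id,
            "region": region,
            "cluster": build_cluster(project_id, cluster_name),
        }
    )
    return operation.result()
```

Calling `create_cluster("my-project", "us-central1", "psl-spark")` with real values would create the cluster; the same settings are available in the Cloud Console and the gcloud CLI.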

Next, create a Spark script. To write to Pub/Sub Lite, use the writeStream API in a Python script.
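
As a rough illustration of the write path, the sketch below publishes rows from Spark's built-in `rate` test source to a Pub/Sub Lite topic. It assumes the connector registers the `pubsublite` format with a `pubsublite.topic` option and accepts `key`, `data`, and `event_timestamp` columns; confirm these names against the connector documentation for the version in use. The helper names, checkpoint path, and run time are hypothetical.

```python
import uuid


def topic_path(project_number, location, topic_id):
    """Build the full resource path of a Pub/Sub Lite topic."""
    return f"projects/{project_number}/locations/{location}/topics/{topic_id}"


def write_rate_stream(spark, topic, seconds=60):
    """Publish one generated row per second to `topic` for about `seconds` seconds."""
    # Imported lazily so topic_path can be used without Spark installed.
    from pyspark.sql.functions import col, lit
    from pyspark.sql.types import BinaryType, StringType

    # The built-in "rate" source emits (timestamp, value) rows for testing.
    sdf = spark.readStream.format("rate").option("rowsPerSecond", 1).load()

    # Reshape the rows into the assumed message columns: binary key and data,
    # plus an event timestamp.
    sdf = (
        sdf.withColumn("key", lit("example").cast(BinaryType()))
        .withColumn("data", col("value").cast(StringType()).cast(BinaryType()))
        .withColumnRenamed("timestamp", "event_timestamp")
        .drop("value")
    )

    query = (
        sdf.writeStream.format("pubsublite")
        .option("pubsublite.topic", topic)
        # Streaming sinks need a checkpoint directory; use a unique one per job.
        .option("checkpointLocation", "/tmp/app" + uuid.uuid4().hex)
        .outputMode("append")
        .trigger(processingTime="1 second")
        .start()
    )
    # Let the stream run for the requested time, then shut it down.
    query.awaitTermination(seconds)
    query.stop()
```

In a job script, create a session with `SparkSession.builder.getOrCreate()` and pass it to `write_rate_stream` together with the result of `topic_path` for your own project number, location, and topic ID.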

To read from Pub/Sub Lite, create a script that uses the readStream API.
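
A matching sketch for the read path is shown here. It assumes the connector exposes a `pubsublite.subscription` option and delivers the message payload in a binary `data` column; both should be checked against the connector documentation. The helper names and the console sink are illustrative choices.

```python
def subscription_path(project_number, location, subscription_id):
    """Build the full resource path of a Pub/Sub Lite subscription."""
    return (
        f"projects/{project_number}/locations/{location}"
        f"/subscriptions/{subscription_id}"
    )


def read_to_console(spark, subscription, seconds=120):
    """Stream messages from `subscription` to the console for about `seconds` seconds."""
    # Imported lazily so subscription_path can be used without Spark installed.
    from pyspark.sql.types import StringType

    sdf = (
        spark.readStream.format("pubsublite")
        .option("pubsublite.subscription", subscription)
        .load()
    )

    # Payloads are assumed to arrive as bytes; cast them to strings so the
    # console output is readable.
    sdf = sdf.withColumn("data", sdf.data.cast(StringType()))

    query = (
        sdf.writeStream.format("console")
        .outputMode("append")
        .trigger(processingTime="1 second")
        .start()
    )
    # Let the stream run for the requested time, then shut it down.
    query.awaitTermination(seconds)
    query.stop()
```

The console sink is convenient for a first test; a production job would typically replace it with a durable sink and its own checkpoint location.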

Finally, submit the job to Dataproc. When submitting the job, the Pub/Sub Lite Spark connector must be included in the job’s JAR files. All versions of the connector are publicly available from the Maven Central repository. Choose the latest version (1.0.0 or later for GA releases), and download the ‘with-dependencies.jar’. Upload this JAR to the Dataproc job, and submit!
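
Job submission can also be scripted. The sketch below assumes the `google-cloud-dataproc` Python client library and that both the script and the connector JAR have already been uploaded to Cloud Storage; every URI and name passed in is a hypothetical placeholder.

```python
def build_pyspark_job(cluster_name, script_uri, connector_jar_uri):
    """Return a PySpark job spec that puts the connector JAR on the classpath."""
    return {
        "placement": {"cluster_name": cluster_name},
        "pyspark_job": {
            "main_python_file_uri": script_uri,
            # The connector's with-dependencies JAR, staged in Cloud Storage.
            "jar_file_uris": [connector_jar_uri],
        },
    }


def submit_job(project_id, region, job):
    """Submit the job to Dataproc and block until it finishes."""
    # Imported lazily so build_pyspark_job works without the client library.
    from google.cloud import dataproc_v1

    client = dataproc_v1.JobControllerClient(
        client_options={"api_endpoint": f"{region}-dataproc.googleapis.com:443"}
    )
    operation = client.submit_job_as_operation(
        request={"project_id": project_id, "region": region, "job": job}
    )
    return operation.result()
```

For example, `submit_job("my-project", "us-central1", build_pyspark_job("psl-spark", "gs://my-bucket/read_job.py", "gs://my-bucket/connector-with-dependencies.jar"))` would run a script on the cluster, with those values replaced by real ones.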

Further reading

Get started with the Pub/Sub Lite Spark connector Quick Start

Check out our how-to guides to ‘Write to Pub/Sub Lite from Spark’ and ‘Read from Pub/Sub Lite from Spark’.

Read ‘Pub/Sub Lite as a source with Spark Structured Streaming on Databricks’.
