Migrating self-managed Oracle databases running on-premises or on Amazon Elastic Compute Cloud (Amazon EC2) to Amazon Relational Database Service (Amazon RDS) for Oracle is a common migration path for customers looking to adopt a managed database solution from AWS. This managed service appeals to customers who are looking to reduce the cost and overhead of database infrastructure management by using the service’s orchestration of backups, upgrades, monitoring, and capacity management capabilities.

Amazon RDS for Oracle migration strategies vary depending on your tolerance for cutover downtime. The database size, table sizes, data types and redo log generation rates play key factors in determining the appropriate migration path. For Oracle to Amazon RDS for Oracle homogenous migrations, when possible it’s best to utilize native tools such as Oracle Data Pump and RMAN for overall best compatibility. When native tools are not an option, you can use AWS Database Migration Service (AWS DMS) for either the initial full extract of the data or for replicating change data capture (CDC) changes to the target database.

The following table summarizes the most common recommended approaches for migrating Oracle to Amazon RDS for Oracle with minimal downtime. All of the approaches listed below can utilize the AWS Snow Family storage devices to transfer the initial datasets to AWS. AWS Snowcone or AWS Snowball portable devices can be a suitable alternative when network transfers aren’t an option.

Migration Approach

Best Fit

Oracle Data Pump export and DMS CDC

Oracle consistent export and DMS CDC

Single to multi-terabyte databases with redo log generation rates in the 10s to 100s of GBs per hour.

Oracle Transportable tablespaces

RMAN full and incremental(s) tablespace backup and restore.

Larger multi-terabyte databases where physical migration is more appropriate including migrating across architectures or operating systems.

AWS Database Migration Service (DMS) only

DMS full export and DMS change data capture (CDC)

Smaller databases with redo log generation rates in the 10s to 100s of GBs hour. Good option when Oracle Data Pump is not possible.

AWS DMS to an AWS Snow family device and DMS CDC

DMS Schema Conversion Tool agents perform initial export to an AWS Snow device followed by DMS CDC

Good option when Oracle Data Pump or Oracle Transportable Tablespaces is not a viable option and database is too large to transfer over the network.

This post discusses the planning considerations when using Oracle Data Pump and DMS CDC to migrate to Amazon RDS for Oracle. This method offers a flexible migration strategy that minimizes impact on the source database and supports a manageable cutover window. For a step-by-step example of how to perform this migration method, refer to Migrating Oracle databases with near-zero downtime using AWS DMS.

Solution overview

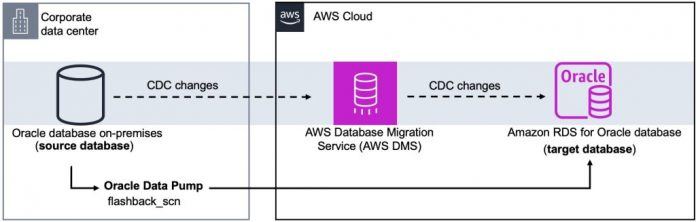

The following diagram illustrates the architecture related to the planning considerations in this post.

Migration Overview

The following are the high level steps in migrating Oracle databases to Amazon RDS for Oracle:

An initial consistent Oracle Data Pump export is taken on the source database.

The export is transferred to Amazon Simple Storage Service (Amazon S3) via the network or through an AWS Snow Family device.

The export is download from S3 onto the RDS for Oracle instance and imported into the target database.

A DMS CDC task is created to capture delta changes from the source database off of a starting flashback_scn or flashback_time.

Once the DMS CDC task is fully caught up with little to no replication lag, a cutover window can be scheduled.

Licensing

Amazon RDS for Oracle supports two licensing models. You can “Bring-Your-Own-License (BYOL)” if you are looking to deploy either Enterprise or Standard Edition Oracle databases. Alternatively, you can purchase Amazon RDS for Oracle instances with “License Included (LI)” if deploying Oracle Standard Edition One or Standard Edition Two. Oracle licensing contracts can vary from customer to customer so it is always best to check with Oracle or with a licensing specialist.

Planning

Using Oracle Data Pump and AWS DMS CDC as a migration strategy is beneficial when the source database is in the terabyte size range and the initial export can be transferred and imported into the target database within the source database’s archive log retention window. Transferring the initial Oracle Data Pump export files to AWS can be done over a high-speed network or via one of the AWS Snow Family devices that can be shipped and connected to your network in a few days.

The amount of redo log generation per hour on the source database is another important factor that could impact DMS CDC replication lag and extend a cutover window. Source database workloads with constant redo generation rates typically generate a predictable replication lag that can be more readily planned. Workloads with heavy batch cycles cause more variability in the replication lag, requiring more planning and timing of the cutover window. When the source and target databases are properly indexed and change rates are in the tens to hundreds of gigabytes per hour, AWS DMS CDC replication latency should be manageable and a short cutover window is possible. The following are some key areas to review in order to verify a successful migration:

AWS provides an AWS DMS Oracle support script that outputs all the relevant information for a migration of this type. Running this script early in the evaluation process can help you build confidence in your migration approach. We strongly recommend that you run the script and familiarize yourself with all the important areas, including source data types in use, most frequently modified tables, and LOB usage. Review this output and compare it against the limitations on using Oracle as a source for AWS DMS.

Running a pre-migration assessment AWS DMS task is also critically important because it can help reveal potential issues with the migration approach as shown in the following screenshot.

Review the Network-to-Amazon VPC connectivity options to check that your network connectivity is able to support your migration strategy. A Site-to-Site VPN option may be suitable for smaller database migrations, but an AWS Direct Connect will provide faster transfer speeds and be more resilient to network interruptions. Transferring multi-terabyte Oracle Data Pump export files and replicating gigabytes of log changes per hour can be challenging on low bandwidth networks.

Source database configuration

For details on the necessary permissions and configuration required for AWS DMS CDC to capture changes on the source database, refer to Working with a self-managed Oracle database as a source for AWS DMS. The following are some key areas to pay attention to:

Adjust the source database’s archive log retention to verify the archive logs are available for AWS DMS CDC. This means that if it takes 5 days to transfer and load the initial export, the archive log retention needs to be at least 5 days for AWS DMS CDC to start reading archive and redo logs from the time the export was created. Note that supplemental logging will only add to the amount of redo log generation.

When exporting data either through the use of the expdp utility or the DBMS_DATAPUMP API, use the PARALLEL option to speed up the export. If you own the Oracle Advanced Compression License, use the COMPRESS option to reduce the size of the dump files and speed up the transfer to AWS.

When using the PARALLEL option, use the DUMPFILE wild card character (%U or similar) to enable Data Pump to write to multiple concurrent dump files. Use the FILESIZE parameter to limit the export file sizes as Amazon Simple Storage Service (Amazon S3) has a max file size of 5 TB and multiple smaller files are easier to manage.

Review the Setting up supplemental logging requirements for AWS DMS CDC. Tables without a primary key or a unique index on the source table can be problematic. On the source database, supplemental logging can be added to all columns of a table to circumvent the lack of a unique identifier.

AWS DMS configuration

In this migration scenario, the AWS DMS replication instance and AWS DMS tasks will only be replicating log changes based off of a starting FLASHBACK_SCN or FLASHBACK_TIME. The replication instance needs to be sized with enough CPU and memory for the tasks that are running on it. Replication instances can be provisioned instance-based or serverless when Oracle is both the source and target engine. The following are some key areas to focus on:

Consider using AWS DMS Serverless to simplify the instance sizing. As of this writing, AWS DMS Serverless can be configured with up to 16 DMS capacity units (DCUs), which is equivalent to 32 GB of memory. Check the AWS DMS Serverless limitations to check that none apply in your scenario.

If AWS DMS instance-based sizing is more appropriate, refer to Choosing the right AWS DMS replication instance for your migration for guidance on how to choose the right instance type and size. AWS DMS tries to process the CDC log stream in memory when possible, but there may be cases where logs are written to the AWS DMS instance and you want to have enough disk space configured.

The AWS DMS CDC task can be configured to read from the source database via LogMiner or a Binary Reader configuration. Review Using Oracle LogMiner or AWS DMS Binary Read for CDC to determine the most appropriate option.

A single AWS DMS task can be configured to process the source database’s log stream and apply the changes on the target. However, when a few key tables are responsible for the vast majority of DML changes, it’s possible to create multiple AWS DMS tasks and use table selection rules to split a group of tables across multiple tasks and parallelize the processing of these changes. The AWS DMS Oracle support script has a section that identifies the tables with the most modifications and as long as the tables don’t have a primary or foreign key dependency, they can be configured into multiple separate AWS DMS tasks.

Monitor AWS DMS metrics such as CPUUtilization and FreeableMemory to verify that AWS DMS has enough resources if using an instance-based replication instance. For AWS DMS Serverless, review the serverless replication metrics such as CapacityUtilization.

Higher than expected AWS DMS CDC latency can occur due to a number of reasons. When investigating latency, the goal should be to first determine if the latency is due to a problem on the source database, the DMS replication instance or task, or the target database. On the source database, common root causes are slow or unstable network connectivity to AWS, heavy updates to large binary objects (LOBs) or excessive redo log generation confined to a few tables. During the DMS CDC phase, updates to a single LOB record may require multiple network roundtrips impacting latency. Refer to Migrating large binary objects (LOBs) for additional information on how to handle LOBs with DMS. If there are a few tables that are responsible for the majority of the DML changes, configuring dedicated DMS tasks for these tables may be an option to improve throughput and reduce latency. When the DMS instance is under sized or a single CDC DMS task is not enough to keep up with the volume, this can add to the replication latency. Last but not least on the target database, the lack of indexes, an undersized RDS instance or an instance without enough IO throughput can lead to increases in replication lag. You can monitor the Replication task metrics and the metric CDCLatencySource and CDCLatencyTarget to determine which component of the migration is responsible for the delay.

Target database configuration

The migration clock typically starts from when a consistent Data Pump export using a FLASHBACK_SCN or FLASHBACK_TIME parameter is executed on the source database. At this point, you need to migrate the data and synchronize CDC changes as quickly as possible. The following are some important factors to prepare the target database for this type of migration:

Create a non-default database parameter and option group before creating the target RDS for Oracle database. When creating the target database, use these non-default groups so that later on, parameter and option changes can be made more easily.

Improve the performance of the initial import by configuring the target database in NOARCHIVELOG mode. Setting the backup retention to 0 days will achieve this.

During the initial import, configure the database in Single-AZ. Multi-AZ enables a two-phase commit at the storage level and could slow down the initial data import.

Every RDS for Oracle database by default is configured with four 128 MB redo log files. If you’re trying to import multi-terabyte databases, these values are too small and will cause slowness due to excessive log switching and checkpointing. You can use the rdsadmin.rdsadmin_util.add_logfile and drop_logfile procedures to add larger log files and remove the default smaller logs.

The redo log_buffer parameter can be configured to a maximum of 256 MB. During the initial migration process, increasing this value may help improve performance. For example, on a db.r5.2xlarge (64 GB RAM), the log_buffer is preconfigured to about 111 MB, and is set to 50 MB on an db.r5.xlarge (32 GB RAM). These values may be fine for normal everyday workloads, but for the initial load and CDC, higher values may offer a small boost in performance.

Consider overprovisioning your Oracle environment for the initial full import and CDC. AWS offers a broad range of RDS for Oracle instance classes with different cpu clock speeds and different cpu to memory ratios. Your RDS instance can be downsized when the environment is in a steady replication state or after cutover.

It’s important to test and review the CPUUtilization, ReadIOPS, and WriteIOPS metrics of the database during the initial import, once CDC has been on-going and in the application test phase prior to cutover. If CPU utilization peaks over 80% and the sum of the read and write IOPS is close to what was provisioned, it may be worth exploring a larger instance type or a larger IOPS configuration.

General Purpose SSD (gp3) and Provisioned IOPS SSD (io1) are the most common storage options for RDS Oracle environments. GP3 storage is common for lower environments where io1 storage is more suitable for production environments. Refer to the Amazon RDS DB Instance storage documentation for additional performance details and the Testing Amazon RDS for Oracle: Plotting Latency and IOPS for OLTP I/O Pattern blog post for specifics on how to test RDS storage. In the context of Oracle migrations, if the log file sync is a top wait event on the source database, then careful I/O testing should be performed against the target database to determine redo commit latency.

Performance testing should also be completed for common operational activities such as backups, restores and read replica creation due to the way EBS volumes are hydrated from S3 snapshots.

When performing the initial Data Pump import into the target database, consider setting the following Data Pump import parameters to improve performance and visibility into the import itself:

Set the DBMS_DATAPUMP.SET_PARALLEL(hdnl, 8) to increasing the number of parallel workers to improve import performance. It’s important to verify that the RDS instance has enough CPU to support the parallelism setting.

Set the DBMS_DATAPUMP.SET_PARAMETER(hdnl, ‘METRICS’, 1) parameter to give you more visibility into object creation times in the Data Pump import log.

Set the DBMS_DATAPUMP.SET_PARAMETER(hdnl, ‘LOGTIME’, ‘ALL’) parameter to have all the log entries timestamped in the Data Pump import log.

It’s not uncommon for the Data Pump index creation to take as long or longer than importing the table data. Although adding the PARALLEL setting allows for tables and indexes to be created in parallel, by default there is no intra-object parallelism. This means that even for a large index, only one worker is assigned to create it. For this reason, you may opt to generate the indexes separately from the import where you can control the intra-index parallelism with the PARALLEL (DEGREE, N) syntax of the CREATE INDEX statement. In either case, the settings mentioned above will help you determine the best approach:

For example, in the following screenshot, we see eight Data Pump workers (W-N). The table data imported in about 73 seconds (22:17:32–22:18:45), and the index creation took longer at 113 seconds (22:18:45–22:20:38). With this level of detail, you can make an informed decision about going to a larger RDS instance, increasing the PARALLEL parameter setting, or building the indexes outside of the Data Pump import process.

Conclusion

This post covered the most common overlooked topics when migrating to Amazon RDS for Oracle using a combination of Oracle’s native Data Pump utility and AWS DMS for CDC. Understanding the source database’s table, index, and data type structures, and the redo generation rates, are key to determining if this hybrid migration method fits your migration and cutover expectations.

We encourage you to explore the documentation about migrating Oracle databases with AWS DMS and running the assessment and support scripts mentioned in the Planning section of this post. For more information and configuration specifics on using DMS and Oracle Data Pump see Using an Oracle database as a source for AWS DMS and Oracle Data Pump Best Practices.

About the author

Peter Santos is a Sr. Data Specialist Architect Manager with Amazon Web Services. Prior to joining Amazon in 2020, he worked in various Cloud and database engineering roles helping companies derive value from data solutions.

Read MoreAWS Database Blog