Okta is an identity and access management company. It has established itself as a key player in the digital security landscape. The Okta cloud platform powers thousands of businesses and applications worldwide and offers access management solutions for both workforce and customer identity use cases.

The Okta Customer Identity Cloud (CIC) provides robust access management solutions, such as Fine Grained Authorization (FGA) to power customer-facing applications and websites. FGA is a multi-Region software as a service (SaaS) offering for flexible, fine-grained authorization at any scale. It’s based on relationship-based access control (ReBAC), which is an evolution of role-based access control (RBAC) and attribute-based access control (ABAC) authorization models.

In this post, we discuss how Okta uses Amazon DynamoDB as a key-value data store for building highly scalable, performant, and reliable business-critical integrations in FGA. DynamoDB is a serverless, NoSQL, fully managed database with single-digit millisecond performance at any scale. We also discuss how Okta designed DynamoDB tables to achieve millions of requests per second (RPS) with multi-active replication across AWS Regions.

What is Okta FGA?

Okta FGA is a permission engine that accommodates the flexibility of complex authorization policies without hindering the development and enforcement of such policies. FGA makes it possible for platforms to add fine-grained authorization to their apps. It comes with an ecosystem of tooling, including APIs, a CLI, and SDKs for many languages.

Apps delegate authorization decisions to FGA in critical code paths, so availability and latency are critical characteristics of FGA. Our availability target is four nines (99.99% uptime). FGA strives for a p99 latency of 50 milliseconds or less, so that clients see low latency even under the most demanding performance requirements. Applications integrating with FGA should be able to enforce access policies across Regions. For example, if someone revokes permission to a resource in us-east-1, the revocation should be enforceable in us-west-2, and vice versa. At the same time, each Region must serve reads and writes locally so that they stay fast.
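To make the four-nines target concrete, the following minimal sketch converts an availability percentage into a downtime budget. The function name and the 30-day and 365-day periods are illustrative assumptions, not part of FGA.

```python
def downtime_budget_minutes(availability_pct: float, period_days: float) -> float:
    """Return the downtime, in minutes, permitted by an availability target."""
    unavailable_fraction = 1.0 - availability_pct / 100.0
    return unavailable_fraction * period_days * 24 * 60


# Illustrative periods: a 30-day month and a 365-day year.
for label, days in [("30-day month", 30), ("365-day year", 365)]:
    print(f"{label}: {downtime_budget_minutes(99.99, days):.2f} minutes")
```

At 99.99%, the budget works out to roughly 4.32 minutes per 30-day month, or about 52.56 minutes per year.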

Given the characteristics of FGA, we considered several factors when choosing a database to support FGA:

High availability

Scalability, with the ability to manage millions of RPS and billions of rows of data

Multi-active replication across Regions

Low latency

Fully managed

Our team is familiar with open source relational databases, and we evaluated them as a starting point when building FGA. We determined that, at our scale, they would require application-level sharding, which adds operational complexity. DynamoDB provides seamless scalability for workloads of virtually any size, so we don’t have to worry about performance degradation as our user base grows, or about maintenance interruptions from scaling, version upgrades, and patching. DynamoDB also offers a write-through cache, which helps us meet our performance goals. With DynamoDB global tables, a fully managed, multi-Region, and multi-active database option, we can provide high availability and seamless disaster recovery for our users.

FGA design overview

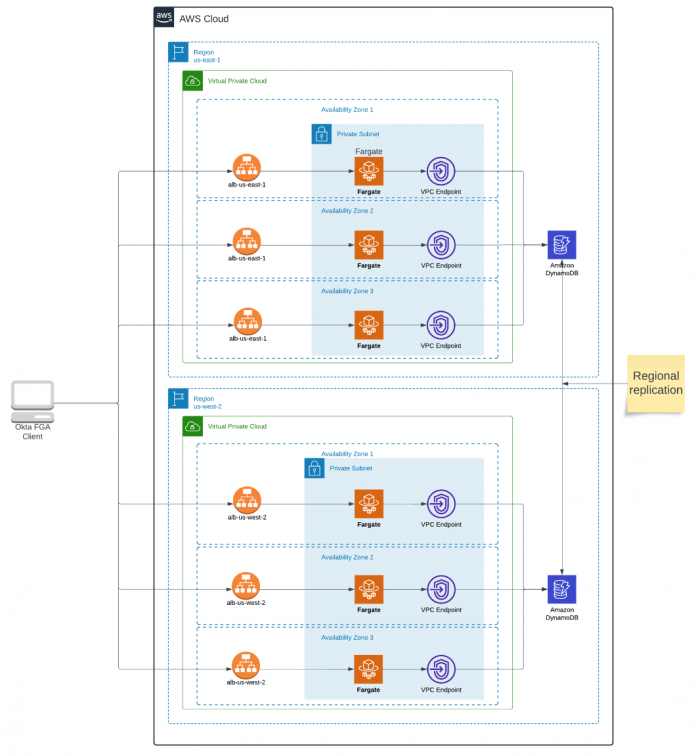

FGA is built on AWS infrastructure and is composed of Application Load Balancers (ALB), AWS Fargate, and Amazon DynamoDB.

Application Load Balancer is a fully managed layer 7 load balancing service that load balances incoming traffic across multiple targets, such as Amazon Elastic Compute Cloud (Amazon EC2) instances.

Fargate is a serverless, pay-as-you-go compute engine that lets you focus on building applications without managing servers.

The following diagram illustrates the solution architecture.

We have multi-Region deployments of FGA services. These deployments are backed by DynamoDB global tables, which provide an active-active architecture, so a write in us-east-1 is visible to readers in us-west-2 and vice versa. This architecture provides high availability and allows us to enforce access controls across Regions. Because FGA is deployed per Region, we’re also able to serve client apps from their own Region, which minimizes the latency of the authorization queries they make to FGA.

We achieve this design with minimal infrastructure. From an operations perspective, it’s straightforward to deploy and manage a multi-Region architecture with DynamoDB because the data plane can be viewed as a single logical database, which removes the burden of managing cross-Region replication ourselves.

FGA queries and DynamoDB table design

The FGA API includes various relationship queries that can be used to make authorization decisions:

Check – Can a particular user access a specific resource?

ListObjects – What list of resources can a particular user access?

Evaluating FGA queries involves an iterative traversal algorithm that is governed by two things: an authorization model, which is authored by developers, and relationship tuples, which establish relationships between resources (objects) and subjects (users). Evaluating FGA queries is similar to evaluating paths in a tree-based data structure. We iteratively evaluate the model’s relationship rules and follow the relationship tuples (analogous to edges in a graph). FGA evaluates various branches in an evaluation tree in a highly parallel manner, and if there is a resolution path that leads to a relationship in the tree, then we know the user has the correct permission.

An FGA model is a declarative specification, written in the FGA domain-specific language (DSL), that describes the authorization model for an app. It is specific to that app and the entities defined in it. Relationship tuples are facts that the app writes to FGA when events and actions that impact authorization decisions occur. For example, imagine you were building a project management app; your FGA model may look like the following code:

The app has entities such as Epic, Story, and Task. When an epic entity is created, a relationship tuple can be written establishing the relationship between the user and the epic they created. For example, the relationship tuple (object: epic:someepic, relation: creator, user: user:jon) establishes the relationship that user:jon is the creator of the epic entity with ID someepic. By virtue of being the creator of an epic entity, you can edit it (line 9), and if you can edit an epic, you can view it (line 10). You can define hierarchies between entities, which allows for relationships to be inherited. If you can view the parent object of some task, then you can view the task itself (line 24). For example, the relationship tuples (task:a, parent, story:somestory) and (story:somestory, viewer, user:jon) allow user:jon to view task:a because user:jon can view the parent story somestory, as shown in the following figure.

By combining these modeling rules and relationship tuples, you can express complex policies that allow developers to model their authorization in a very flexible and fine-grained way.
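The traversal described above can be sketched in a few lines of Python. This is a minimal, sequential illustration under stated assumptions, not the FGA engine: the `RULES` dictionary is a hypothetical stand-in for an authorization model (it is not the FGA DSL) and encodes only the three rules mentioned in the text, the tuples live in an in-memory set rather than in DynamoDB, and branches are explored one after another, whereas FGA evaluates them in parallel.

```python
from typing import Dict, List, Set, Tuple

# Relationship tuples from the example above, stored as (object, relation, user).
TUPLES: Set[Tuple[str, str, str]] = {
    ("epic:someepic", "creator", "user:jon"),
    ("task:a", "parent", "story:somestory"),
    ("story:somestory", "viewer", "user:jon"),
}

# Hypothetical rule encoding (NOT the FGA DSL). For each (type, relation):
#   ("computed", r) -> anyone holding relation r on the same object
#   ("from", p, r)  -> anyone holding relation r on the object's p (e.g. parent)
# A direct tuple match is always tried first.
RULES: Dict[Tuple[str, str], List[tuple]] = {
    ("epic", "editor"): [("computed", "creator")],
    ("epic", "viewer"): [("computed", "editor")],
    ("task", "viewer"): [("from", "parent", "viewer")],
}


def check(obj: str, relation: str, user: str, seen=None) -> bool:
    """Return True if a resolution path links user to obj via relation."""
    seen = set() if seen is None else seen
    if (obj, relation) in seen:  # guard against cyclic rules or tuples
        return False
    seen.add((obj, relation))

    if (obj, relation, user) in TUPLES:  # direct relationship tuple
        return True

    obj_type = obj.split(":", 1)[0]
    for rule in RULES.get((obj_type, relation), []):
        if rule[0] == "computed":
            if check(obj, rule[1], user, seen):
                return True
        elif rule[0] == "from":
            # Follow tuples such as (task:a, parent, story:somestory).
            parents = [u for (o, r, u) in TUPLES if o == obj and r == rule[1]]
            if any(check(parent, rule[2], user, seen) for parent in parents):
                return True
    return False


print(check("epic:someepic", "viewer", "user:jon"))  # True: creator -> editor -> viewer
print(check("task:a", "viewer", "user:jon"))         # True: viewer of the parent story
print(check("task:a", "viewer", "user:ann"))         # False: no resolution path
```

Each recursive call corresponds to one branch of the evaluation tree, and in a DynamoDB-backed system each tuple lookup would be a database query, which is why a single Check can fan out into several reads.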

FGA DynamoDB table schema design

Designing a table schema for DynamoDB is different from designing one for a relational database. When designing a relational database schema, you typically start by defining the data model and then derive the SQL queries needed for your query patterns. Developing an efficient DynamoDB schema, however, starts by defining the query patterns the application needs, and from there the table schema can be derived. It’s important to follow the best practices for designing and architecting with DynamoDB during this phase, because schema decisions directly affect the performance of the overall system. These considerations were particularly important because of the performance goals that we wanted to achieve. A single FGA query may require multiple queries to DynamoDB to resolve various branches of a relationship tree, so the queries that we make to DynamoDB have to be highly optimized.

We avoid table scans and inefficient filters at all costs, and we avoid throughput capacity planning for multiple tables by using a single-table design. A single-table schema also enables better burst capacity and distributes keys across more partitions, which decreases throttling due to under-provisioned capacity. These requirements made a single-table design a good fit for our use case.

Based on our table design considerations, the team started by defining data query patterns to use the partition key (PK), sort key (SK), and global secondary index (GSI) characteristics in the most efficient way. The following are the primary query patterns governing FGA queries that we came up with (we have others, but these are the main focus).

For relationship tuples, we had the following patterns:

Direct lookup of an individual relationship tuple by store_id, object, relation, and user (PK, SK)

List all tuples in a store_id matching a specific object (GSI1)

List all objects and relations in a store_id filtered by user and object_type (GSI2)

List all tuples for a specific store_id (GSI3)

List all tuples within a store_id filtered by object_type, relation, and user (GSI4)

For authorization models, we used the following pattern:

Direct lookup of a single model by store_id and model_id (PK, SK)

The following table summarizes the fields and values for these query patterns.

| Entity/Item | Field | Value Template |
| --- | --- | --- |
| Tuple | PK | TUPLE_{store_id}\|{object}#{relation}\|{user_type} |
| | SK | {user} |
| | object | {object} |
| | relation | {relation} |
| | user | {user} |
| | GSI1PK | TUPLE_{store_id}\|{object} |
| | GSI1SK | {relation}#{user} |
| | GSI2PK | TUPLE_{store_id}\|{user}\|{object_type} |
| | GSI2SK | {relation}#{object} |
| | GSI3PK | TUPLE_{store_id} |
| | GSI3SK | {object}#{relation}@{user} |
| | GSI4PK | TUPLE_{store_id}\|{object_type}\|{relation} |
| | GSI4SK | {user}\|{object} |
| Authorization Model | PK | AUTHZMODEL_{store_id} |
| | SK | {model_id} |
| | serialized_model | {serialized model} |

With this schema design, we’re able to query the FGA relationship tuples from various angles to serve both forward-query (Check) and reverse-query (ListObjects) patterns. Check queries use the direct PK and SK (direct lookup) of the tuple item, whereas ListObjects does a reverse lookup using GSI4. The other GSIs (GSI1, GSI2, GSI3) are used for the Read API, which allows developers to look up tuples in the system with various filters.
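As an illustration of how the value templates translate into item attributes, the following sketch builds the key attributes for one relationship tuple. It is a hedged example, not Okta's implementation: the helper names, the `store1` store ID, and the assumption that `user_type` and `object_type` are the text before the colon in an identifier (for example, `epic` in `epic:someepic`) are all hypothetical. The ListObjects condition at the end assumes a sort-key prefix match on GSI4SK, which the templates suggest but the post does not state.

```python
from typing import Dict


def type_of(identifier: str) -> str:
    """Return the type prefix of an identifier such as 'epic:someepic' (assumed format)."""
    return identifier.split(":", 1)[0]


def tuple_item(store_id: str, obj: str, relation: str, user: str) -> Dict[str, str]:
    """Build the attributes for one relationship tuple from the value templates."""
    prefix = f"TUPLE_{store_id}"
    return {
        "PK": f"{prefix}|{obj}#{relation}|{type_of(user)}",
        "SK": user,
        "object": obj,
        "relation": relation,
        "user": user,
        "GSI1PK": f"{prefix}|{obj}",
        "GSI1SK": f"{relation}#{user}",
        "GSI2PK": f"{prefix}|{user}|{type_of(obj)}",
        "GSI2SK": f"{relation}#{obj}",
        "GSI3PK": prefix,
        "GSI3SK": f"{obj}#{relation}@{user}",
        "GSI4PK": f"{prefix}|{type_of(obj)}|{relation}",
        "GSI4SK": f"{user}|{obj}",
    }


item = tuple_item("store1", "epic:someepic", "creator", "user:jon")

# Check (forward query): a direct lookup by the full primary key.
check_key = {"PK": item["PK"], "SK": item["SK"]}

# ListObjects (reverse query): GSI4 partition for the object type and relation,
# plus an assumed sort-key prefix that selects one user's objects.
list_objects_condition = {"GSI4PK": item["GSI4PK"], "GSI4SK_prefix": "user:jon|"}

print(check_key)
print(list_objects_condition)
```

Because every access pattern maps to a partition key plus an exact or prefix match on the sort key, none of these lookups needs a scan or a filter expression.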

Benchmarks

We tested our system against real-life large-scale traffic by running benchmark tests with 1 million Check requests per second and with upwards of 100 billion FGA relationship tuples. We synthesized an FGA model based on a social network where users can post content and other users can interact with it.

We were able to complete high-scale benchmark testing in only 2 days. The FGA workload is primarily CPU bound, so horizontal scaling of the Fargate workloads allowed us to achieve high throughput. No adjustments to our DynamoDB schema were necessary because the primary key design distributed writes evenly across partitions, and the single-table design and GSI schemas we developed allowed us to achieve constant-time, low-latency query performance regardless of table size and throughput.

FGA is able to serve large-scale traffic while maintaining low latency. It was able to serve 1.05 million Check requests per second with p95 latency under 20 milliseconds, even when we disabled internal caching mechanisms. Each FGA Check query involves multiple DynamoDB queries to evaluate different branches of the Check resolution tree, and so at peak throughput, we were serving several million RPS to DynamoDB and the observed error rate was 0%.

The following figure shows the benchmark request rates (per second).

The following figure shows the benchmark check latency results. A check request consists of multiple calls to DynamoDB and processing results from DynamoDB queries.

Lessons learned

One important lesson concerned database connection management. Establishing new database connections is expensive and affects p95 and p99 latency. Fine-tuning the maximum number of connections per host avoided connection churn and improved latency.

For these kinds of demanding workloads, we learned that we needed to pre-warm the DynamoDB table when using on-demand capacity mode. This is especially important when benchmarking because the ramp-up period is short (less than 8 minutes in our case). To pre-warm, we switched the table to provisioned capacity mode, provisioned our desired maximum RCUs and WCUs, and then switched back to on-demand mode. Without pre-warming, DynamoDB queries would have been throttled because throughput climbed to more than double the table’s previous peak. Understanding the peak demand of the query workload is important for knowing how to react to throughput spikes in production applications.
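The pre-warming decision comes down to a simple comparison, sketched below. The function name and the traffic figures are hypothetical; the factor of two reflects the "more than double the previous peak" condition described above.

```python
def needs_prewarm(previous_peak_rps: float, target_rps: float,
                  burst_factor: float = 2.0) -> bool:
    """Return True when the target exceeds what on-demand mode absorbs right away.

    burst_factor=2.0 encodes the "double the previous peak" rule of thumb.
    """
    return target_rps > previous_peak_rps * burst_factor


# Hypothetical numbers: a table that previously peaked at 100,000 requests
# per second, and a benchmark that ramps to 3,000,000 within minutes.
print(needs_prewarm(previous_peak_rps=100_000, target_rps=3_000_000))  # True
print(needs_prewarm(previous_peak_rps=100_000, target_rps=150_000))    # False
```

When the check returns True and the ramp-up window is short, raising the table's previous peak ahead of time (as we did by temporarily provisioning the maximum capacity) avoids throttling during the ramp.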

Finally, we learned to account for GSI costs when doing budget planning. The provisioned throughput settings of a GSI are separate from those of its base table. A query against a GSI consumes read capacity units from the index, not from the base table, and every base-table write that changes an indexed item also consumes write capacity units on the index. Therefore, write-heavy activity on the base table can be throttled when a GSI has too little write capacity, even if the base table itself has enough capacity provisioned. During the pre-warm period, we were consuming RCUs on the primary table as well as on its GSIs.
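A rough estimate shows why GSIs matter for budgeting. The sketch below counts write units for inserting one new item into a table with several GSIs. It is a simplification under stated assumptions: one write unit per 1 KB of item size (rounded up), every GSI stores an index entry as large as the item itself, and the item sizes are hypothetical.

```python
import math


def write_units_per_put(item_size_bytes: int, gsi_count: int) -> int:
    """Estimate write units for inserting one new item: base table plus GSIs.

    Assumes one write unit per 1 KB (rounded up) and that each GSI entry is
    the same size as the item, which overstates cost for sparse projections.
    """
    units_per_write = max(1, math.ceil(item_size_bytes / 1024))
    return units_per_write * (1 + gsi_count)


# Hypothetical item sizes with the four GSIs from the tuple schema.
print(write_units_per_put(item_size_bytes=300, gsi_count=4))   # 5
print(write_units_per_put(item_size_bytes=1500, gsi_count=4))  # 10
```

Under these assumptions, a tuple write costs about five times what the base table alone would suggest, so index capacity needs to be planned alongside the table's.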

Conclusion

In this post, we saw how Okta implemented FGA with DynamoDB to provide multi-Region authorization as a service with low latency and high scalability, requiring no additional operational overhead. Availability, durability, and fault tolerance are built into DynamoDB, eliminating the need to architect your applications for these capabilities. Building on these capabilities, our development team saved several months of effort compared to the traditional approach of deploying, configuring, and fine-tuning a self-managed database service. The time saved allowed us to reinvest our engineers’ expertise in core development rather than dedicating resources to maintaining the service infrastructure.

To learn more about FGA, see the whitepaper Evolve your Authorization Strategy with Fine Grained Authorization. To get started with FGA, sign up for free at fga.dev. Consider evaluating DynamoDB when building applications that require high throughput, consistent low latency, and serverless scalability. To learn more about how to achieve the best performance and lowest cost, see Best practices for designing and architecting with DynamoDB.

About the authors

Jonathan Whitaker is a Staff Software Engineer and core maintainer on the Okta FGA and OpenFGA projects. In his role, he oversees the development and design of the various services of the Okta FGA platform, including oversight of the open source engine behind Okta FGA, OpenFGA (a CNCF Sandbox project). His professional passion and emphasis over the last 8 years has been to bring flexible and high-performance authorization to the larger developer community so that we can build more secure applications without as much difficulty. In his spare time, he loves anything outdoors and learning new and exciting avenues of engineering disciplines.

Adrian Tam is a seasoned Senior Software Engineer, known for their talent in diving deep into code and crafting remarkable software solutions. Currently, Adrian works at Okta, where they support both Okta FGA and OpenFGA. With a passion for exploring complex problems, Adrian is known for their ability to unravel challenges and create elegant solutions that stand the test of time.

Jakub Hertyk is a Senior Engineering Manager at Okta, known for leading with a People First approach, prioritizing the well-being and growth of their team members. With two decades of experience in the software industry, Jakub has been focusing on identity and access management in the last five years. They bring a wealth of knowledge and expertise to the table, leading by example, taking ownership, and delivering simple solutions that delight customers. Jakub has a passion for leveraging cloud technologies to drive innovation and deliver scalable and efficient systems.

Sahil Thapar is Principal Solutions Architect at AWS. He works with ISV customers to help them build highly available, scalable, and resilient applications on the AWS Cloud.

Read more: AWS Database Blog