In recent times, there has been a growing interest in using foundation models (FMs) to build generative AI applications. These models are trained on vast amounts of data and are capable of performing tasks that were previously thought to be the exclusive domain of humans, such as creating art and music. However, when it comes to using FMs in enterprise-level applications, there is a significant limitation. These models are trained on publicly available data and lack awareness of enterprise-specific contexts, such as customer segments, product inventory, and individual customer histories. This means that the applications developed with FMs may only offer generic responses that don’t consider the crucial enterprise-specific data necessary for a more tailored and valuable user experience.

To overcome this limitation, you can customize a foundation model using techniques such as Prompt engineering, Fine-tune and Retrieval Augmented Generation (RAG). RAG allows for the augmentation of the knowledge contained in FMs with domain and enterprise context, enabling the application to generate more contextualized and personal responses. To add enterprise context, enterprise data must first be converted to vector embeddings. This is achieved by using FMs to convert the input data to vectors embeddings. The resulting vector embeddings are then stored in a vector database such as PostgreSQL with the open-source pgvector extension, which provides the ability to store and retrieve vectors as high-dimensional points and facilitates efficient search operations.

pgvector is an open-source PostgreSQL extension, and as of version 0.5.0, it supports both Inverted File with Flat Compression (IVFFlat) and Hierarchical Navigable Small World (HNSW) index algorithms to effectively search the vectors. pgvector is available in Amazon Aurora PostgreSQL-Compatible Edition and Amazon Relational Database Service (Amazon RDS) for PostgreSQL.

In this post, we explore the architecture and implementation of both index types (IVFFlat and HNSW) and examine their search performances. We also discuss the benefits of using pgvector to store and search vector embeddings in PostgreSQL, and how it can help improve the performance of generative AI applications.

Indexing options for Amazon Aurora PostgreSQL and Amazon RDS for PostgreSQL with the pgvector extension

Before we delve further into the indexes that pgvector supports, it’s essential to understand the concept of an exact nearest neighbor search, or k-NN search. To identify the exact match (100% recall), you can do the search on the PostgreSQL vector column without an index. K-NN searches by taking a query vector and calculating the distance between it and every vector stored in the database to identify the shortest distances, also known as the nearest neighbors.

It can be expensive to calculate the distance because you have to compare every dimension in a vector to another vector. For example, if you’re using a model like Amazon Titan Embeddings with a 1,536-dimension output, and you have to find the smallest distance between 1,000,000 vectors, you’ll have to perform this operation across the entire dataset. For larger datasets, the performance of an exact similarity search may not be acceptable for the requirements of an applications. It’s still a valid option for smaller datasets or when you need 100% recall and accuracy (for example, a fingerprint match use case).

In some applications, perfect recall is not necessary. For example, when making product recommendations based on similarity searches, you only need products similar to a given product. In scenarios like these, approximate nearest neighbor (ANN) offers significant performance improvements at the expense of a slight decrease in accuracy, making it a favored choice for search algorithms. Let’s look at two implementations of ANN search: IVFFlat and HNSW.

pgvector offers three different distance operations that these indexes can use. PostgreSQL uses a mechanism called an operator class to define operators that are used in indexes. The operator class provides operators (such as distance functions) and tells the index that it should use them when building the index:

L2 distance – The straight-line distance between two vectors. For L2 distance (Euclidean distance), use the vector_l2_ops operator class.

Cosine distance – This is the angular distance, or how close two vectors are based on the direction they are pointing. For cosine similarity search, use the vector_cosine_ops operator class.

Inner product – The product of the magnitudes of two vectors and the cosine of the angle between them. For inner product similarity, use the vector_ip_ops operator class.

Let’s dive deeper into each type of index.

IVFFlat algorithm

The IVFFlat algorithm is an approximate nearest neighbor search algorithm that works by dividing the vector space into regions based on a K-means algorithm. The user provides an index build time parameter list to control the number of regions and clusters. Given a query vector, the algorithm first identifies the region it belongs to by calculating the distance between the query vector and the centroids of each region. It then performs a local search within that region to find the closest match. The algorithm is efficient because it only needs to search a subset of the vector space, rather than the entire space. This is especially useful for large datasets with high dimensions.

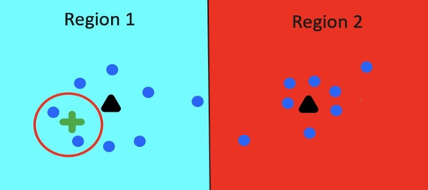

In the following examples, we show a two-dimensional vector space that is divided in two regions with the centroid marked (black triangle). Centroids are identified using the K-means algorithm, and the number of regions is controlled by the lists parameter provided during the index creation. After we build the IVFFlat index, let’s review how search works.

Example 1

In the first Example 1, the query vector is closest to the centroid of Region 1, so the algorithm only needs to search within that region to find the two closest neighbors.

Example 2

In the second Example 2, the query vector is closer to the edge of Region 2, but the algorithm still searches within Region 2 first. However, this time, there is a vector in Region 1 that is closer to the query vector than any vector in Region 2. This illustrates a limitation of the algorithm, which is that it may not always find the closest match if the query vector falls near the edge of a region.

However, to overcome this loss of accuracy and strike a balance between performance and recall, IVFFlat offers two adjustable parameters:

ivfflat.probes – This is a search parameter that governs the number of nearest regions the IVFFlat algorithm searches. The default value is 1, implying a search for the region with the shortest centroid distance from the query vector. Increasing this value can potentially improve search accuracy (recall) by exploring more than one region and reducing the accuracy loss issues that you observed in the second example we discussed. However, this improvement to recall comes at the cost of search performance.

lists – This is an index building parameter that allows you to control the number of clusters or regions created by the IVFFlat index during the index build time. A higher list parameter leads to the creation of more clusters, resulting in a faster search due to fewer vectors within each cluster. However, this also increases the likelihood of reduced recall, because with more clusters, there is a higher probability of a query vector falling closer to the edge of a cluster.

Therefore, it’s important to find the balance between recall and performance using the lists and ivfflat.probes parameters for your workload.

The following is an example of creating an IVFFlat index on PostgreSQL (using pgvector) with each distance operator.

For L2 distance, use the following code:

For cosine distance, use the following code:

For inner product, use the following code:

When creating an IVFFlat index, make sure you’re using the same distance operator that you plan to use for your search query. If you intend to perform searches with multiple distance operators, create separate indexes for each operator. Refer to the implementation section later in this post for more detailed guidance.

HNSW algorithm

HNSW is an algorithm used for an approximate search of nearest neighbors by constructing optimized graph structures. HNSW constructs a graph where a path between any pair of vertices could be traversed in a small number of steps. Before we understand HNSW and how it works, we need to first discuss skip lists and the navigable small words algorithm, which are the motivation behind the HNSW implementation.

Skip lists

A skip list is a type of data structure that stores elements in linked lists, with pointers to elements at different levels of the list. This allows for efficient access to elements at the top of the list, while still maintaining a good average access time for elements lower down in the list. Skip lists are often used in applications where fast access to elements near the top of a list is important, but the cost of accessing elements lower down in the list is not a major concern. It is a probabilistic data structure, meaning that its average time complexity is determined through a probabilistic analysis.

The following figure shows how in a skip list, elements are organized in layers, and each layer has a smaller number of elements than the one below it. The bottom layer is a regular linked list, whereas the layers above it contain skipping links that allow for fast navigation to elements that are far apart in the bottom layer. The idea behind this is to allow for quick traversal to the desired element, reducing the average number of steps needed to reach it. Skip lists are more resilient to changes, because they can be easily updated by inserting new elements or deleting existing ones.

As you can see, this process is much faster than the normal linear search with a linked list. In fact, HNSW inherits the same idea but instead of linked lists, it uses graphs.

NSW algorithm

Navigable Small World (NSW) is a graph algorithm that uses greedy routing to find the nearest neighbor to a query vertex in polylogarithmic time. The following figure shows that the algorithm starts by searching from low-degree vertices to high-degree vertices, then gradually zooms in to find the nearest neighbor among the vertices in that region. However, the efficiency of NSW breaks down for larger networks (1–10,000 or more vertices) when a graph is not navigable. In this case, the algorithm may not be able to accurately find the nearest neighbor and may cancel the search procedure prematurely.

Searching with the HNSW algorithm

Let’s understand how search works in the HNSW algorithm.

HNSW is based on similar principles as a skip list and the NSW algorithm. Its structure represents a multi-layered graph with fewer connections on the top layers and more dense regions on the bottom layers.

The following figure shows how the search starts from the highest layer and proceeds to one level below every time the local nearest neighbor is greedily found among the layer nodes. Ultimately, the found nearest neighbor on the lowest layer is the answer to the query. Similar to NSW, the search quality of HNSW can be improved by using several entry points. Instead of finding only one nearest neighbor on each layer, the hnsw.ef_search parameter limits the number of nearest neighbors maintained in the list, and each of these neighbors is used as the entry point on the next layer.

To explain how HNSW handles data inserts and construction is out of scope for this post; we recommend reading the published paper Efficient and robust approximate nearest neighbor search using Hierarchical Navigable Small World graphs for more a detailed explanation of HNSW.

The following are examples of creating an HNSW index in PostgreSQL (using pgvector) with each distance operator.

For L2 distance, use the following code:

For cosine distance, use the following code:

For inner product, use the following code:

For index build, you can tune the following parameters:

m – This specifies the maximum number of edges a vector will get in a graph. The higher this number is, the more memory the graph consume, but the better the search approximation may be. The default value is 16.

ef_construction – When a node is to be inserted into the graph, the algorithm will find its m edges by querying the graph with the new node as the query vector. This parameter controls the candidate queue size for this traversal. A larger value increases index latency, but may provide a better search approximation. The default value is 64.

For search, you can tune the following parameter:

hnsw.ef_search – This specifies the size of the queue of the candidate nodes to visit during traversal. When a node is visited, its neighbors are added to the queue to be visited in the future. When this queue is empty, the traversal ends. A larger value increases search latency, but may provide better search approximation.

Implementation and performance results using sequential scan, IVFFlat, and HNSW indexes

To perform the index test, we built a real-world use case of a product catalog search. We used multiple PDF documents from AWS product catalog such as Amazon Aurora, Amazon RDS, Amazon DynamoDB, and few others as dataset input. The user can send a prompt asking different product-related questions without mentioning the product name, and the application should return the relevant result to the user.

To create the vector embeddings, we used the Titan Embeddings G1 – Text (amazon.titan-embed-text-v1) large language model (LLM) available on Amazon Bedrock. This model can intake up to 8,000 tokens and outputs a vector of 1,536 dimensions. We also used the LangChain framework and text splitter with chunk size 1,000 to split the input data into smaller chunks. After the split, we had 58,634 documents. We stored these documents in a table called langchain_pg_embedding in Amazon RDS for PostgreSQL. You can also use a knowledge base in Amazon Bedrock to split the documents, create embeddings, and store it in Amazon Aurora PostgreSQL.

During the search, we sent prompts similar to “What is Aurora fast cloning, blue green deployment, zero downtime patching?” and measured how the search performs with IVFFlat, HNSW, and sequential scan. Before you can run the similarity search on vectors, these prompts must be converted to embeddings using the same embedding model employed for the conversion of documents. We don’t cover the implementation details of building such an application in this post, and are more focused on the performance aspects of IVVFlat and HNSW. If you are interested in building such an application, refer to Leverage pgvector and Amazon Aurora PostgreSQL for Natural Language Processing, Chatbots and Sentiment Analysis.

Let’s review the langchain_pg_embedding table, as shown in the following screenshot.

The total number of documents after the document split was approximately 58,000. We inserted the raw text data in the document column, and vector embeddings with 1,536 dimensions in the embedding column of the langchain_pg_embedding table. You can get the count with the following command:

The size of the table is 364 MB.

The following tests were initially done on the RDS instance type (db.r5.large with 2 vCPUs and 16 GiB of memory), with PostgreSQL version 15.4 and pgvector version 0.5.0. At the time of publication, pgvector 0.6.0 had been released, featuring several optimizations for HNSW index build time. We re-ran the HNSW index build test with pgvector 0.6.0 on an r7g.large instance, and saw a 2.7x speedup.

Sequential scan

Let’s see how a sequential scan performs compared to an index scan on this dataset. Currently, there is no index on embedding columns. We use a moderately sized RDS instance type (db.r5.large, with 2 vCPUs and 16 GiB of memory) because the focus of this post is to assess the efficiency of ANN and k-NN queries using various indexes, rather than conduct a benchmarking test. The following is the sample query we run to analyze the k-NN and ANN query performance:

We use the cosine operator (<=>) for measuring the vector distance. First, we convert the prompt “what is aurora” to vector embeddings using the same LLM (amazon.titan-embed-text-v1) that we used for embedding the document. Next, we use the exact value of this vector for a similarity search. During the search, we find the two vectors that are most similar (ORDER BY LIMIT 2) to the given prompt (“what is aurora”).

The following screenshot shows the output of the explain analyze command and reviews the query plan. The expected query runs a sequential scan on the langchain_pg_embedding table. Note that the screenshots don’t display the entire query or query plan. However, they do present the essential information we are interested in.

Search using an IVFFlat index

Now we create an IVFFlat index on the embedding column. Because we’re using the cosine distance for vector distance measurement during the search, we must use the cosine distance operator during index creation. This is required for the optimizer to use an index scan.

You can also increase maintenance_work_mem for faster index build time. In some cases, along with giving more memory, you can speed up index build time further by building an index in parallel (only available with IVFFlat as of this writing). We use the default setting because we only have a 2 vCPU instance and a small dataset:

Now, let’s run the identical query previously employed for the sequential scan. Upon reviewing the query plan, you can see that the query optimizer opts for an index scan using the IVFFlat index this time. Notably, the search time is significantly reduced (650 milliseconds to 2.4 milliseconds), even when dealing with such a small dataset.

HNSW index search

The tests below show the experiment results with building a HNSW index first with pgvector 0.5.0, and then with pgvector 0.6.0 using the same cosine distance operation.

With pgvector 0.5.0

With pgvector 0.6.0

Once again, we run the same query. With the HNSW index in place, the query runs an index scan using the HNSW index. The runtime is further reduced from the IVFFlat search time.

Test results

During the build phase, you may have noticed that IVFFlat index creation with pgvector 0.5.0 is much faster compared to HNSW. With approximately 58,000 records, IVFFlat takes around 15 seconds, while HNSW index creation took 81 seconds. However, with optimizations around HNSW build time in pgvector 0.6.0, we observed that HNSW index build time was reduced to 30 seconds. Considering the improved HNSW index build time from pgvector 0.6.0 coupled with low latency queries, we expect builders to start utilizing the HNSW indexing when creating their indexes.

During the search phase, we ran identical queries for all implementations and noticed that HNSW performs the best with approximately 1.5 milliseconds, IVFFlat with approximately 2.4 milliseconds, and sequential scan with approximately 650 milliseconds runtime. We also tried different prompts and queries and different orders with similar results. Although you can further optimize your sequential scan search time by using a parallel sequential scan, the difference in performance between a sequential scan and index scan is quite significant and will only be more prominent with larger datasets.

For more details on performance numbers, refer to Accelerate HNSW indexing and searching with pgvector on Amazon Aurora PostgreSQL-compatible edition and Amazon RDS for PostgreSQL.

Key takeaways

These tests yielded the following takeaways:

The primary design decision about the index must be based on query performance and recall.

Based on the filtering, PostgreSQL may choose to not use the index.

IVFFlat has the following considerations:

Increasing ivfflat.probes increases recall but decreases performance.

You can choose the value of lists to maximize recall but minimize effort of search:

Less than 1 million vectors: # vectors/1000

More than 1 million vectors: √(# vectors)

It may be necessary to rebuild when adding or modifying vectors in the index.

You can use parallelism to accelerate build times.

The IVFFlat index requires data to identify the centroid and build regions. You must load the data in the table first before creating this kind of index, in contrast to other indexes that are built on empty tables.

HNSW has the following considerations:

Index building has the biggest impact on performance and recall.

Increasing hnsw.ef_search increases recall but decreases performance.

Default values (m=16, ef_construction=64) usually work.

This is still a rapidly evolving space, and recommendations may change over time as we learn more about how to optimize storage and retrieval of vectors in databases.

Conclusion

In this post, we discussed different indexes that you can use to accelerate ingestion and query performance when using pgvector on Amazon RDS for PostgreSQL and Amazon Aurora PostgreSQL. We also did performance tests on IVFFlat and HNSW indexes on a dataset to observe the differences.

Choosing the right index can help you optimize your database configuration and design your applications so that you can take full advantage of the index’s functionality. You can use the guidance in this post to help you start building your generative AI applications with pgvector on Amazon RDS for PostgreSQL and Amazon Aurora PostgreSQL.

We invite you to leave feedback in the comments.

About the Authors

Vishal Srivastava is a Senior Partner Solutions Architect specializing in databases at AWS. In his role, Vishal works with AWS Partners to provide guidance and technical assistance on database projects, helping them improve the value of their solutions when using AWS.

Abhinav Sarin is a Principal Partner Solutions Architect at AWS. His core interests include databases, data analytics, and machine learning. He works with AWS customers and partners to provide guidance and technical assistance on database projects, helping them improve the value of their solutions when using AWS.

Read MoreAWS Database Blog