In this post, we explain why you should consider moving your self-managed open source software (OSS) Redis workloads to managed Amazon ElastiCache for Redis. OSS Redis is a powerful, in-memory data store designed to meet the demands of modern high-performance applications. With OSS Redis, you can tackle a wide range of use cases, including caching, real-time analytics, leaderboards, feature stores, messaging, and session management. While OSS Redis is a great choice for many applications, managing it can come with significant costs and operational overhead. We take a closer look at the expenses and management challenges associated with self-managed OSS Redis and explore how Amazon ElastiCache can help reduce the burden. We also discuss the features of ElastiCache that enable you to optimize costs without compromising on functionality, so that you can make an informed decision for your application needs.

Developers often opt for self-managed OSS Redis deployments because hosting OSS Redis on their own infrastructure provides flexibility and control. As application usage and adoption grow, scaling and sharding become vital to ensure resiliency and avoid performance degradation. Initially, a simple cache could be deployed on a single medium-sized server. However, as the dataset size grows to tens of gigabytes and the throughput requirements increase, developers are required to set up an OSS Redis cluster to handle throughput with read replicas and sharding. As the cache becomes a key architecture component, ensuring availability with Redis Sentinel or Redis Cluster becomes critical.
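To make the sharding step concrete, the following is a minimal sketch of how OSS Redis Cluster maps a key to one of its 16,384 hash slots (CRC16 of the key, or of a non-empty `{hash tag}` inside it, modulo 16384). The `shard_for_slot` helper is a hypothetical even split added only for illustration; a real cluster assigns slot ranges to shards explicitly.

```python
import binascii

HASH_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def key_slot(key: bytes) -> int:
    """Return the Redis Cluster hash slot for a key."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        # Only a non-empty {tag} is hashed in place of the full key.
        if end != -1 and end != start + 1:
            key = key[start + 1:end]
    # crc_hqx with an initial value of 0 is the CRC16/XMODEM variant.
    return binascii.crc_hqx(key, 0) % HASH_SLOTS


def shard_for_slot(slot: int, num_shards: int) -> int:
    """Hypothetical even split of the slot range across shards."""
    return slot * num_shards // HASH_SLOTS


if __name__ == "__main__":
    for name in (b"product:42", b"{user1000}.cart", b"{user1000}.profile"):
        slot = key_slot(name)
        print(name.decode(), slot, shard_for_slot(slot, num_shards=3))
```

Keys that share a hash tag, such as the two `{user1000}` keys above, land in the same slot, which is what allows multi-key operations on them in a sharded deployment.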

Self-managing OSS Redis on Amazon Elastic Compute Cloud (Amazon EC2) or in a Kubernetes deployment using Amazon Elastic Kubernetes Service (Amazon EKS) can partially reduce infrastructure costs. This allows you to provision what you need and scale the instances according to traffic patterns. Nevertheless, the complexity of managing an OSS Redis cluster, with the additional overhead of monitoring, alerting, patching, and ensuring high availability, still poses challenges for organizations. When managing an OSS Redis cluster, key metrics such as latency, CPU usage, and memory usage need to be closely monitored, and specific thresholds need to be set up that generate alerts to avoid potential downtime scenarios. Replication and failover mechanisms, with configurations for automated or manual recovery, are needed to ensure high availability and reduce overall downtime windows. As adoption of OSS Redis expands to other use cases such as leaderboards, the deployment becomes increasingly intricate. Any unplanned outage can have significant consequences for user experience and revenue. To ensure they are prepared for peak loads, organizations often opt to provision additional resources, leading to higher maintenance costs and an elevated Total Cost of Ownership (TCO). For reference, Coupa Company, a leader in Business Spend Management (BSM), was able to reduce operations time and effort significantly by launching Amazon ElastiCache for Redis for all existing use cases while growing dataset size 5x. For more details on their migration, check out this blog post.

Fully managed cache in the cloud – Amazon ElastiCache

Amazon ElastiCache is a fully managed service that makes it easy to deploy, operate, and scale a cache in the cloud. The service improves the performance of web applications by allowing you to retrieve information from a fast, managed, in-memory data store, instead of relying entirely on slower disk-based databases. A managed service can offload maintenance work and provide performance and functionality at scale. It allows businesses to focus on core competencies to drive innovation while ensuring security, flexibility, and efficiency. According to a recent IDC whitepaper, moving to a managed database can result in 39% lower cost of operations, while accelerating deployment of new databases by 86% and reducing unplanned downtime by 97%.

Amazon ElastiCache for Redis is a Redis-compatible in-memory service that delivers ease-of-use and the power of Redis along with the availability, reliability and performance suitable for the most demanding applications. ElastiCache clusters can support up to 500 shards, enabling scalability to up to 310 TiB of in-memory data and serving hundreds of millions of requests per second.

Reduction of TCO

By moving their workloads to ElastiCache, businesses can leverage online scaling, automated backups, resiliency with automatic failover, and built-in monitoring and alerting, without the overhead of managing the underlying resources. This can significantly reduce the time and resources needed to manage OSS Redis workloads while improving availability.

Example workload: Let's take a look at a workload and compare the cost of implementing it with a self-managed, on-premises deployment of OSS Redis versus ElastiCache. AnyCompany operates a retail e-commerce application, and their product catalog plays a crucial role in driving sales. To improve performance and user experience, they need to cache the product catalog and user data for quicker responses.

Infrastructure overhead: As the company expanded its product offerings, the cache size grew, which required scaling their infrastructure to a clustered Redis implementation with read replicas. This allowed them to meet throughput needs that varied from a few hundred requests per second to tens of thousands during holidays, while ensuring availability. It also drove the need to provision the environment upfront to handle peak traffic, resulting in unused capacity during off-peak periods and an unnecessary increase in total cost.
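The cost of provisioning for peak can be illustrated with some simple arithmetic. The sketch below compares a fleet sized for peak all year against one that runs at peak size only during peak hours. Every figure in it (hourly rate, node counts, peak duration) is a hypothetical value chosen for illustration, not AnyCompany data or AWS pricing.

```python
HOURS_PER_YEAR = 24 * 365


def annual_cost(schedule, hourly_rate):
    """Cost of a schedule given as [(node_count, hours), ...]."""
    return sum(nodes * hours for nodes, hours in schedule) * hourly_rate


# All figures below are hypothetical and chosen only for illustration.
HOURLY_RATE = 0.50            # cost per node-hour
PEAK_NODES, BASE_NODES = 12, 4
PEAK_HOURS = 30 * 24          # roughly one month of holiday traffic

# Provisioned upfront for peak: every node runs all year.
static_cost = annual_cost([(PEAK_NODES, HOURS_PER_YEAR)], HOURLY_RATE)

# Scaled with demand: peak capacity only during peak hours.
elastic_cost = annual_cost(
    [(PEAK_NODES, PEAK_HOURS), (BASE_NODES, HOURS_PER_YEAR - PEAK_HOURS)],
    HOURLY_RATE,
)

# Share of the peak-provisioned spend that pays for idle capacity.
idle_share = 1 - elastic_cost / static_cost
print(f"static={static_cost:.0f} elastic={elastic_cost:.0f} idle={idle_share:.0%}")
```

Under these assumed numbers, about 61% of the peak-provisioned spend goes to capacity that sits idle outside the holiday period.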

Infrastructure security, compliance and auditing overhead: As the improved user experience and growth led to the adoption of OSS Redis across other services such as cart management and checkout, Anycompany needed to implement strong security practices to safeguard sensitive user information and credit data. The increased compliance requirements on infrastructure for these audits and certifications resulted in additional costs related to compliance and auditing.

Overhead of monitoring and alerting: When the OSS Redis cluster had intermittent or prolonged node failures, AnyCompany had to rebuild the instances and rehydrate the cache, resulting in revenue losses. This drove additional costs for monitoring and alerting software, and for operations setup and support resources to handle failures.

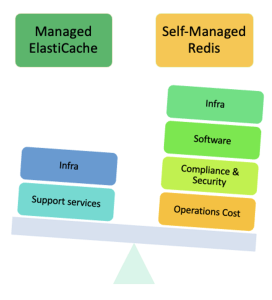

When self-managing workloads, enterprises need to consider infrastructure and core software, infrastructure compliance, security, and operational costs. With managed ElastiCache, the costs mainly include the cost of the cache instances and cloud support services. ElastiCache helped AnyCompany scale in or out in sync with usage patterns. As a result, they decided to move their workloads to ElastiCache, which removed the additional infrastructure, compliance, and monitoring costs and simplified their operations.

Key ElastiCache features that help with cost optimization

ElastiCache offers several features that can enable companies to efficiently scale their applications, reduce costs and drive growth.

High Availability: ElastiCache is highly available (99.99% SLA when running Multi-AZ) and resilient, with built-in failover and data replication capabilities. This reduces the risk of downtime and its associated costs, such as lost revenue and damage to reputation. With a Multi-AZ enabled cluster, ElastiCache places the primary and at least one replica node of each shard in different Availability Zones. In the event of primary node impairment, ElastiCache automatically promotes the read replica with the least replication lag to be the new primary within a few seconds. The service then self-heals the impaired primary node or replaces it with a new replica node, and synchronizes the data from the new primary. For more information, see Failure scenarios with Multi-AZ responses.
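The promotion rule described above (pick the replica with the least replication lag) can be sketched in a few lines. This is an illustrative model only, not the service's implementation; the `Replica` type, its fields, and the node names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Replica:
    """Hypothetical view of a read replica during failover."""
    node_id: str
    az: str
    lag_seconds: float
    healthy: bool = True


def pick_new_primary(replicas: List[Replica]) -> Replica:
    """Choose the healthy replica with the least replication lag."""
    candidates = [r for r in replicas if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy replica available for promotion")
    return min(candidates, key=lambda r: r.lag_seconds)


if __name__ == "__main__":
    shard = [
        Replica("replica-a", "us-east-1b", lag_seconds=0.8),
        Replica("replica-b", "us-east-1c", lag_seconds=0.2),
        Replica("replica-c", "us-east-1c", lag_seconds=0.1, healthy=False),
    ]
    print(pick_new_primary(shard).node_id)
```

Choosing the least-lagged replica minimizes the writes that were acknowledged by the old primary but not yet replicated when it failed.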

Scalability: With Amazon ElastiCache for Redis, you can start small and easily scale your clusters as your application grows. ElastiCache offers a large variety of instance types and configurations, including support for Graviton instances. It is designed to support online cluster resizing, so you can scale out, scale in, and rebalance your Redis clusters without downtime. ElastiCache Auto Scaling deploys resources and nodes in accordance with traffic patterns, so the same workload consumes fewer resources and capacity is provisioned as needed. This avoids the cost of provisioning resources upfront to handle future peaks in demand.
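As a rough illustration of how scaling to a traffic pattern works, the sketch below applies a generic target-tracking rule: size the cluster so that the observed metric returns to a target value, within configured bounds. It is a simplified model under stated assumptions, not the algorithm ElastiCache uses; the function name, parameters, and the proportional formula are all illustrative.

```python
import math


def desired_shards(current: int, metric: float, target: float,
                   min_shards: int, max_shards: int) -> int:
    """Proportional target tracking, clamped to [min_shards, max_shards].

    Assumes load spreads evenly, so the per-shard metric scales
    inversely with the shard count.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    raw = math.ceil(current * metric / target)
    return max(min_shards, min(max_shards, raw))


if __name__ == "__main__":
    # Hypothetical readings of average CPU utilization (percent).
    for cpu in (15, 60, 90, 300):
        print(cpu, desired_shards(current=4, metric=cpu, target=60,
                                  min_shards=2, max_shards=10))
```

The minimum and maximum bounds keep the cluster from shrinking below a safe baseline or growing past a cost ceiling.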

Monitoring: ElastiCache provides enhanced visibility via Amazon CloudWatch into key performance metrics associated with your resources at no additional cost. CloudWatch alarms allow you to set thresholds on metrics and trigger notifications when preventive action is needed. Monitoring trends over time can help you detect workload growth. Datapoints are available for up to 455 days (15 months), and observing CloudWatch metrics over this extended time range can help you forecast your resource utilization. Using the large range of metrics together with CloudWatch alarms enables you to manage your ElastiCache cluster tightly, with good utilization and minimal waste.
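The idea behind a threshold alarm can be sketched as follows: the alarm fires only when the metric stays above the threshold for a number of consecutive periods, which filters out short spikes. This is a simplified model written for illustration, not the CloudWatch API; the function name and the sample readings are assumptions.

```python
from typing import Sequence


def alarm_state(datapoints: Sequence[float], threshold: float,
                evaluation_periods: int) -> str:
    """Evaluate the most recent datapoints against a threshold."""
    if evaluation_periods <= 0:
        raise ValueError("evaluation_periods must be positive")
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    # Fire only when every recent datapoint breaches the threshold.
    return "ALARM" if all(v > threshold for v in recent) else "OK"


if __name__ == "__main__":
    # Hypothetical memory usage readings (percent), one per period.
    memory_pct = [62.0, 71.5, 83.0, 86.2, 91.4]
    print(alarm_state(memory_pct, threshold=80.0, evaluation_periods=3))
```

Requiring several consecutive breaches trades a slightly slower alert for fewer false alarms.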

Extreme Performance (price/performance): ElastiCache’s Enhanced I/O multiplexing feature is ideal for throughput-bound workloads with multiple client connections, and its benefits scale with the level of workload concurrency. In March 2019, we introduced enhanced I/O, which delivered up to an 83% increase in throughput and up to a 47% reduction in latency per node. In November 2021, we improved the throughput of TLS-enabled clusters by offloading encryption to non-Redis engine threads. Enhanced I/O multiplexing further improves the throughput and reduces latency without changing your applications. As an example, when using an r6g.xlarge node and running 5,200 concurrent clients, you can achieve up to 72% increased throughput (read and write operations per second).

Data Tiering: When workloads have a large memory footprint, data tiering can help reduce the cost of keeping the entire dataset in memory. ElastiCache automatically and transparently tiers data between DRAM and locally attached NVMe solid state drives (SSDs). It can help achieve over 60% storage cost savings when running at maximum utilization compared to R6g nodes (memory only), with minimal performance impact on applications.
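To show what tiering between a small fast tier and a larger slow tier looks like, here is a toy two-tier cache. It assumes a least-recently-used policy: when the fast tier is full, the coldest item is demoted, and a demoted item is promoted again on access. The `TieredCache` class is hypothetical and holds both tiers in memory; it illustrates the concept and is not how the service is implemented.

```python
from collections import OrderedDict


class TieredCache:
    """Toy two-tier store: a bounded 'dram' tier and an 'ssd' tier."""

    def __init__(self, dram_capacity: int):
        if dram_capacity < 1:
            raise ValueError("dram_capacity must be at least 1")
        self.dram_capacity = dram_capacity
        self.dram = OrderedDict()  # ordered from coldest to hottest
        self.ssd = {}

    def _admit(self, key, value):
        """Place an item in DRAM, demoting the coldest items if full."""
        self.dram[key] = value
        self.dram.move_to_end(key)
        while len(self.dram) > self.dram_capacity:
            cold_key, cold_value = self.dram.popitem(last=False)
            self.ssd[cold_key] = cold_value

    def set(self, key, value):
        self.ssd.pop(key, None)  # avoid keeping a stale copy on SSD
        self._admit(key, value)

    def get(self, key):
        if key in self.dram:
            self.dram.move_to_end(key)  # mark as recently used
            return self.dram[key]
        if key in self.ssd:
            value = self.ssd.pop(key)
            self._admit(key, value)     # promote back to DRAM
            return value
        return None
```

For example, with `dram_capacity=2`, setting keys `a`, `b`, and `c` in order demotes `a` to the slow tier; a later `get("a")` promotes it back and demotes `b` instead.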

Security and Compliance: ElastiCache is a PCI compliant, HIPAA eligible, FedRAMP authorized service, and offers encryption in transit and at rest to help keep sensitive data safe. This can significantly reduce cost overhead of obtaining and maintaining certifications on infrastructure and ensuring that critical compliance and data security requirements are met. You can leverage hardened security that lets you isolate your cluster within Amazon Virtual Private Cloud (Amazon VPC).

Reserved Instances (RIs): Lastly, ElastiCache provides a cost-effective pricing model with Reserved Instances. Pricing is based on committed usage of Redis nodes, allowing businesses to pay a discounted rate for the resources they need. RIs are most beneficial for stable, predictable workloads, with commitment terms of 1 or 3 years.

In summary, the features below highlight the heavy lifting that ElastiCache takes on to help customers optimize costs:
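Whether a reservation pays off depends on how much of the term the node is actually in use. The sketch below computes the break-even utilization and the savings for a given usage level. The rates are hypothetical placeholders, not AWS prices, and the model assumes the reserved cost can be expressed as an effective hourly rate paid for every hour of the term.

```python
def break_even_utilization(on_demand_hourly: float,
                           reserved_effective_hourly: float) -> float:
    """Fraction of the term a node must run for the reservation to pay off."""
    return reserved_effective_hourly / on_demand_hourly


def reservation_savings(on_demand_hourly: float,
                        reserved_effective_hourly: float,
                        utilization: float, term_hours: int) -> float:
    """Positive when reserving is cheaper than paying on demand."""
    on_demand_total = on_demand_hourly * term_hours * utilization
    reserved_total = reserved_effective_hourly * term_hours  # paid regardless of use
    return on_demand_total - reserved_total


if __name__ == "__main__":
    # Hypothetical rates per node-hour, chosen only for illustration.
    on_demand, reserved = 0.50, 0.30
    one_year = 24 * 365
    print(f"break-even at {break_even_utilization(on_demand, reserved):.0%} utilization")
    for used in (0.4, 1.0):
        saved = reservation_savings(on_demand, reserved, used, one_year)
        print(f"utilization={used:.0%} savings={saved:.2f}")
```

A node that runs below the break-even utilization costs more reserved than on demand, which is why reservations fit the stable baseline of a workload rather than its peaks.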

| Feature | Self-managed OSS Redis | Amazon ElastiCache |
| --- | --- | --- |
| Autoscaling | No | Yes |
| Data tiering | No | Yes |
| Availability | Redis Sentinel or Cluster with manual intervention | 99.99% with fast failover and automatic recovery |
| Compliance | Additional price and effort | SOC1, SOC2, SOC3, ISO, MTCS, C5, PCI-DSS, HIPAA |
| Enhanced security | No | Yes |
| Online horizontal scaling | No | Yes |
| Monitoring | External tools | Native CloudWatch integration and aggregated metrics |
| Enhanced I/O multiplexing | No | Yes |

Conclusion

Amazon ElastiCache for Redis is fully compatible with open source Redis and provides security, compliance, high availability, and reliability while optimizing costs. As explained in this post, managing OSS Redis can be tedious. ElastiCache eliminates the undifferentiated heavy lifting associated with administrative tasks (including monitoring, patching, backups, and automatic failover). You get the ability to automatically scale and resize your cluster to terabytes of data. The benefits of managed ElastiCache allow you to optimize overall TCO and focus on your business and your data instead of your operations. To learn more about ElastiCache, visit aws.amazon.com/elasticache/.

About the authors

Sashi Varanasi is a Worldwide leader for Specialist Solutions architecture, In-Memory and Blockchain Data services. She has 25+ years of IT Industry experience and has been with AWS since 2019. Prior to AWS, she worked in Product Engineering and Enterprise Architecture leadership roles at various companies including Sabre Corp, Kemper Insurance and Motorola.

Lakshmi Peri is a Sr. Solutions Architect specialized in NoSQL databases. She has more than a decade of experience working with various NoSQL databases and architecting highly scalable applications with distributed technologies. In her spare time, she enjoys traveling to new places and spending time with family.
