We are excited to announce the availability of AWS Database Migration Service (AWS DMS) replication engine version 3.5.1. This version provides improvements covering data validation, support for new endpoints, and endpoint settings that were requested by many of our customers. In this post, we highlight a few key features. For the entire list of improvements, refer to AWS DMS release notes.

We dive deep into the following improvements in AWS DMS 3.5.1:

Use PostgreSQL target endpoint to migrate to Babelfish

Use PostgreSQL 15 as a source

Use an Amazon Redshift Serverless clusters as target

Use an Amazon DocumentDB (with MongoDB compatibility) elastic clusters as a target for sharded collections

Use PostgreSQL target endpoint to migrate to Babelfish

Babelfish for Aurora PostgreSQL is a capability for Amazon Aurora PostgreSQL-Compatible Edition that enables Aurora to understand commands from applications written for Microsoft SQL Server.

Starting in AWS DMS 3.5.1, we have added the new DatabaseMode and BabelfishDatabaseName settings to the PostgreSQL target endpoint to simplify migrations to Babelfish. We also improved support for LOB, BINARY, VARBINARY, and IMAGE data types.

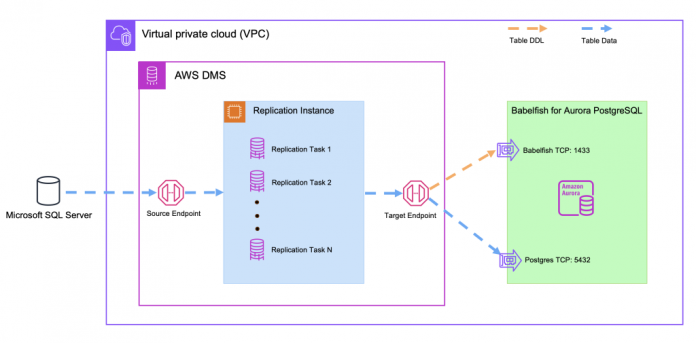

Additionally, we improved the performance of SQL Server to Babelfish migrations by loading the data directly to Aurora PostgreSQL-Compatible and bypassing Babelfish conversion. As shown in the following diagram, the t-SQL endpoint is used to run the DDL commands during the initial creation of the target schema, and the PostgreSQL target endpoint is used to migrate data.

For more information about Babelfish for Aurora PostgreSQL, see Using Babelfish for Aurora PostgreSQL.

In this section, we walk through the process of migrating from SQL Server to Babelfish for Aurora PostgreSQL.

Prerequisites

Make sure you have the following prerequisites:

An AWS account with AWS Identity and Access Management (IAM) user that allows you to create to Amazon Relational Database Service (Amazon RDS) and AWS DMS resources in your Region. For information about creating IAM user, see Create an IAM User.

A general understanding of using SQL Server as a source and Babelfish for Aurora PostgreSQL as a target endpoint in an AWS DMS-based migration. For information about working with SQL Server as a source, see Using a Microsoft SQL Server database as a source for AWS DMS. For information about working with Aurora PostgreSQL-Compatible as a target, see Using a PostgreSQL database as a target for AWS Database Migration Service.

Use Babelfish Compass to create an assessment report depending on your operating system.

Generate all the tables you want to migrate to Babelfish for Aurora PostgreSQL using SQL Server Management Studio (SSMS). Then connect to your Babelfish database using SSMS and run those scripts to create the tables on the target. For more information, see Prepare and create your tables prior to migration.

Migrate from SQL Server to Babelfish for Aurora PostgreSQL

Complete the following high-level steps to migrate from SQL Server to Babelfish for Aurora PostgreSQL using AWS DMS:

Configure your SQL Server database and tables for AWS DMS replication or MS change data capture (CDC).

For more information, see Prerequisites for using ongoing replication (CDC) from a SQL Server source. For more information on setting up a distribution database and enabling AWS DMS CDC to support your AWS DMS replication task, see Setting up ongoing replication on a cloud SQL Server DB instance. For more information about using AWS DMS replication and AWS DMS CDC, see Configuring a Microsoft SQL Server Database as a Replication Source.

Create an AWS DMS replication instance.

For more information concerning how to select the right instance class to support your data migration, see Choosing the right AWS DMS replication instance class for your migration. For the formula to size your Amazon Elastic Compute Cloud (Amazon EC2) instance, see How to size your AWS DMS replication instance.

Create an AWS DMS source endpoint for SQL Server.

For more information about AWS DMS source endpoint settings, such as providing support for SQL Server Always On read-only replicas, see Working with self-managed SQL Server AlwaysOn availability groups. For source endpoint limitations, see Limitations on using SQL Server as a source for AWS DMS.

Configure and verify the Babelfish for Aurora PostgreSQL database AWS DMS user account.

All target tables should be already created as part of the prerequisites. After your user account is created, verify that the target objects exist by connecting to the babelfish_db database on Aurora PostgreSQL-Compatible. The AWS DMS user account should have sufficient SELECT, INSERT, UPDATE, DELETE, and REFERENCES permissions on the target objects.

Configure an AWS DMS target endpoint for Aurora PostgreSQL-Compatible. Add endpoint settings DatabaseMode=Babelfish; BabelfishDatabaseName=<user’s babelfish database>.

BabelfishDatabaseName should be set to the database name used when connecting to the old SQL Server database. For information about other AWS DMS endpoint settings for a PostgreSQL target, see Endpoint Settings for PostgreSQL as target endpoint.

Create an AWS DMS full load and CDC migration task.

Because target tables were pre-created in the previous steps, we set targetTablePrepMode to Do_Nothing. As a result, the target tables created as part of the prerequisites in the Babelfish database will not be altered by AWS DMS. Keep in mind that the object names in the PostgreSQL database are case-sensitive. Make sure your AWS DMS task table mappings include a schema transformation rule to rename the schema and convert table names to lower-case. See Adding transformation rules to your migration task and Specifying task settings for AWS Database Migration Service tasks for more information.

Verify the AWS DMS replication task status.

For more information on monitoring AWS DMS task status and metrics, see Monitoring AWS DMS tasks. For more information regarding viewing AWS DMS error log events, see Task Logs.

Best practices

When using AWS DMS to replicate data to Babelfish for Aurora PostgreSQL, consider the following best practices:

Consider creating foreign keys after migrating all of your data but before cutting over your application traffic to Babelfish for Aurora PostgreSQL. If you want to import data without dropping foreign keys on the target table, make sure your table mappings include load_order according to the relational integrity enforced by the foreign keys. When data is migrated in the wrong order, which violates foreign keys, you will notice errors in the AWS DMS log with information about your constraint or foreign key violation, and the task will fail.

When replicating large tables or large amounts of data containing LOB columns, you should consider dropping the indexes on target tables before starting the migration. Indexes can be recreated after the full load stage of the migration is complete. Dropping indexes before loading the target tables can speed up the AWS DMS data load process significantly.

Use PostgreSQL 15 as a source

PostgreSQL is one of the most commonly used open-source databases. With AWS DMS 3.5.1, we’ve launched support for PostgreSQL 15.x as source and target. You can now migrate your workloads to and from PostgreSQL 15.x.

PostgreSQL 15 contains many new features and enhancements. Refer to the PostgreSQL community announcement for more details about PostgreSQL 15 release and Impactful features in PostgreSQL 15.

AWS DMS supports replication of the SQL MERGE command, which has been introduced in PostgreSQL 15. For a list of AWS DMS limitations with PostgreSQL, refer to Limitations on using a PostgreSQL database as a DMS source.

Use a Redshift Serverless cluster as target

Amazon Redshift is a cloud data warehouse that enables you to gain new insights from your data. With Redshift Serverless, you can run and scale analytics without having to provision and manage data warehouse clusters.

Starting from AWS DMS 3.5.1, AWS DMS has added a new target endpoint to support Redshift Serverless. You can now use AWS DMS to migrate your workloads to Redshift Serverless.

Note that AWS DMS supports Redshift Serverless as a target in the Regions where Redshift Serverless is generally available.

In this section, we walk through the process of creating a Redshift Serverless target endpoint for AWS DMS.

Create a Redshift Serverless target endpoint

To create a Redshift Serverless target endpoint, complete the following steps:

On the AWS DMS console, choose Endpoints in the navigation pane.

Choose Create endpoint.

Select Target endpoint.

For Endpoint identifier, enter a name, such as redshift-serverless.

Enter an optional Descriptive Amazon Resource Name (ARN).

For Target engine, choose Amazon Redshift Serverless.

For Server name, enter the name of the data server.

Provide the workgroup endpoint as the server name along with rest of the connection details. See the following screenshot for an example.

Enter your port and connection details.

Choose Create endpoint. Refer to the screenshot for sample fields in the “Create Endpoint” page.

Create an AWS DMS migration task

To migrate the data from the source to target database, you need to create an AWS DMS task. Complete the following steps:

On the AWS DMS console, choose Database migration tasks.

Choose Create task.

For Task identifier, enter a name, such as redshiftserverless-migration.

Enter an optional description.

For Replication instance, choose the target database endpoint you created.

For Source database endpoint, choose the existing source endpoint.

For Migration type, choose the type as required (for this post, we choose Migrate existing data).

Choose a replication instance running AWS DMS 3.5.1.

Specify your task settings, table mappings, and other options as required.

Create your task.

We will continue enhancing support for this endpoint in future releases. For the list of all AWS DMS limitations with this endpoint, refer to Limitations when using Amazon Redshift Serverless as a target. To learn more about using Redshift Serverless endpoints, refer to Using AWS DMS with Amazon Redshift Serverless as a Target.

Use an Amazon DocumentDB elastic cluster as a target for sharded collections

AWS DMS now supports Amazon DocumentDB elastic clusters as a target endpoint. With elastic clusters, you can distribute your workloads across shards, which support millions of reads and writes per second. Elastic clusters also simplify scaling Amazon DocumentDB by eliminating the need to choose, manage, or upgrade instances.

In this section, we share an overview of using an Amazon DocumentDB elastic cluster target endpoint with AWS DMS.

Prerequisites

Make sure you have the following prerequisites:

An Amazon DocumentDB elastic cluster with the required number of shards. Refer to Create an elastic cluster for more details.

All the required collections on the target Amazon DocumentDB elastic cluster. Specify the shard key as appropriate and choose either the existing source DB shard key or an alternative key of your choice.

For example, in the following code, we migrate the bulkload collection present in shardtestdb from Amazon DocumentDB to an Amazon DocumentDB elastic cluster using user_id as the shard key:

Create source and target endpoints

Create your source and target endpoints after your replication instance is created. For this post, we create a source endpoint for Amazon DocumentDB and a target endpoint for an Amazon DocumentDB elastic cluster. Refer to Amazon DocumentDB elastic cluster target for more details. Complete the following steps to create the endpoints:

On the AWS DMS console, choose Endpoints in the navigation pane.

Choose Create endpoint.

For Endpoint type, choose Source endpoint and enter the following information:

For Endpoint identifier, enter a name for your endpoint.

For Source engine, choose Amazon DocumentDB (with MongoDB compatibility).

Specify your database details to allow AWS DMS to access the database.

Specify the database to migrate.

For Metadata mode, choose Document.

Add the ECA for Amazon DocumentDB.

Create another endpoint.

For Endpoint type, choose Target endpoint and enter the following information:

For Endpoint identifier, enter a name for your endpoint.

For Target engine, choose Amazon DocumentDB (with MongoDB compatibility).

Specify your elastic cluster details to allow AWS DMS to access the database.

Specify the database to migrate.

Add the ECA replicateShardCollections.

Create a database migration task using the source and target endpoints you created, and specify the rules for tables to be migrated.

Start the migration task.

Review the table statistics to verify the migration progress.

Summary

In this post, we shared with you the latest enhancements in AWS DMS 3.5.1. AWS DMS takes your SQL Server data migration to Babelfish for Aurora PostgreSQL to the next level, all without the need for traditional, legacy methods like bcp, SSIS, or custom scripting. Additionally, we’re excited to announce AWS DMS support for PostgreSQL 15, further expanding the capabilities of AWS DMS for your data migration needs. AWS DMS also now offers support for migrating your workloads to Redshift Serverless and Amazon DocumentDB elastic clusters, making the process more streamlined and efficient.

If you have any questions, comments, or suggestions, leave a comment.

About the authors

Sanyam Jain is a Database Engineer with the AWS Database Migration Service team. He collaborates closely with customers, offering technical guidance for migrating on-premises workloads to the AWS Cloud. Additionally, he plays a pivotal role in enhancing the quality and functionality of AWS data migration products.

Abhishek Mehta is a database engineer with the AWS Database Migration Service team. He works on improving the AWS DMS service to better suit the needs of AWS customers.

Don Tam is a Database Engineer with the AWS DMS team. He works with engineers to migrate customers’ database platforms to the AWS Cloud. He also assists developers in continuously improving the functionality of AWS Cloud services.

Read MoreAWS Database Blog