When moving from monoliths to microservices, you often need to propagate the same data from the monolith into multiple downstream data stores. These can include purpose-built databases serving microservices as part of a decomposition project, Amazon Simple Storage Service (Amazon S3) for hydrating a data lake, or the data stores in a long-running command query responsibility segregation (CQRS) architecture, where reads and writes are segregated to separate the highly transactional write requests from the analytical read requests.

Event sourcing facilitates all these use cases by providing an immutable, ordered series of events representing the state of the source system.

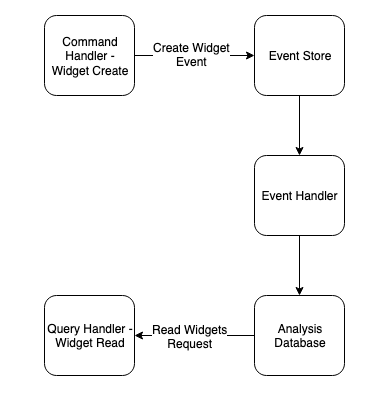

Take, for example, a software application that tracks the production of widgets. An example of implementing event sourcing and a CQRS architecture in this system is shown in the following diagram.

The application’s commands (writes) are statements that mutate the state of the Online Transactional Processing (OLTP) data store. The event stream then sends events as they occur to an event store. Finally, these events are replayed into downstream systems such as an Online Analytical Processing (OLAP) database, purpose-built databases backing microservices, or a data lake.
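The replay step can be illustrated with a minimal in-memory sketch. The `WidgetEvent` shape, the I/U/D operation codes, and the sample data are assumptions made for illustration; they are not the format used by the sample application.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class WidgetEvent:
    """One immutable entry in the event store (hypothetical shape)."""
    sequence: int      # position in the ordered log
    operation: str     # "I" insert, "U" update, "D" delete
    widget_id: int
    attributes: dict


def replay(events: Iterable[WidgetEvent]) -> Dict[int, dict]:
    """Rebuild a read model by applying events in sequence order."""
    state: Dict[int, dict] = {}
    for event in sorted(events, key=lambda e: e.sequence):
        if event.operation == "I":
            state[event.widget_id] = dict(event.attributes)
        elif event.operation == "U":
            state.setdefault(event.widget_id, {}).update(event.attributes)
        elif event.operation == "D":
            state.pop(event.widget_id, None)
        else:
            raise ValueError(f"unknown operation: {event.operation!r}")
    return state


# Hypothetical event log for the widget example.
event_store: List[WidgetEvent] = [
    WidgetEvent(1, "I", 101, {"name": "sprocket", "status": "queued"}),
    WidgetEvent(2, "I", 102, {"name": "gear", "status": "queued"}),
    WidgetEvent(3, "U", 101, {"status": "built"}),
    WidgetEvent(4, "D", 102, {}),
]

read_model = replay(event_store)
print(read_model)
```

Because the log is never modified, any new downstream store can be built the same way: replay the events from the beginning into whatever shape that store needs.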

There are multiple ways to implement this architecture in AWS. One approach is to use a message broker such as Amazon Managed Streaming for Apache Kafka (Amazon MSK) to send the events to the consumers (for more information, refer to Capture changes from Amazon DocumentDB via AWS Lambda and publish them to Amazon MSK). The downside is that the system emitting the events must be event sourcing aware: you have to change the application to capture all the events and send them to the event bus or event stream. This can be complex and error-prone, and it diverts development effort from moving away from the monolith. It also creates extra work for any features developed while the refactoring takes place, because you must make sure the new services emit events as well.

This is where we can use AWS Database Migration Service (AWS DMS). AWS DMS allows you to migrate from any supported data source to any supported destination, on both a one-off and an ongoing basis. The ongoing part is what matters here: ongoing change data capture (CDC) is not only suitable for migration, it is also well suited for hydrating event stores as part of an event-sourcing architecture. In this post, we explore two patterns to implement this approach. The first uses AWS DMS only. The second uses AWS DMS in conjunction with Amazon MSK.

Solution overview

The first pattern uses an AWS DMS-only implementation. This uses an AWS DMS task to replicate from the monolith (source) database to the event store, in this case Amazon Simple Storage Service (Amazon S3). AWS DMS then uses this intermediate event store as the marshalling point for replicating to other databases. This approach is great when there is no immediate consistency requirement. Examples could be a reporting and analytics database that only requires a daily aggregated view of the data, or for retrospective auditing of the data where real-time replication isn’t expected. This pattern is shown in the following diagram.

The second pattern uses AWS DMS as the connector to extract the data from the source without having to make changes to the source system, but it uses Kafka (in this case Amazon MSK) as the event store and for streaming to downstream consumers. This has the advantage of typically being faster than the two-hop method described in the first pattern, and allows applications to consume from the stream directly. This pattern is shown in the following diagram.

Prerequisites

To test the solution, you need to have an AWS account. You also need AWS Identity and Access Management (IAM) permissions to create AWS DMS, Amazon MSK, AWS Lambda, and Amazon DynamoDB resources.

Deploy the examples

For examples of each solution, we provide AWS Serverless Application Model (AWS SAM) templates that you can deploy in your own account. You can also use these examples as a reference for your own implementations.

To deploy the AWS SAM template, clone the GitHub repository and follow the instructions it contains.

Solution 1: AWS DMS only

We start by exploring the simpler, AWS DMS-only solution. With the example template deployed, navigate to the AWS DMS console and verify that two tasks have been created.

The first task is the initial full load and ongoing CDC of the source database, in this case an Amazon Relational Database Service (Amazon RDS) for PostgreSQL instance. This task loads the data into the destination event store S3 bucket.
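In the example, the template creates this task for you. For reference, a task of this kind could be defined with boto3 roughly as follows. This is a sketch under assumptions: the task identifier, the `public` schema, and the helper names are hypothetical, and the endpoint and replication instance ARNs must come from your own account.

```python
import json


def build_table_mappings(schema: str, table: str = "%") -> str:
    """Return a DMS table-mapping document with one selection rule."""
    rules = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-source-tables",
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
            }
        ]
    }
    return json.dumps(rules)


def create_event_store_task(dms_client, source_arn, target_arn, instance_arn):
    """Create a full-load-plus-CDC task.

    dms_client is expected to be boto3.client("dms"); it is passed in so the
    function can be exercised without AWS credentials.
    """
    return dms_client.create_replication_task(
        ReplicationTaskIdentifier="monolith-to-event-store",  # hypothetical name
        SourceEndpointArn=source_arn,
        TargetEndpointArn=target_arn,
        ReplicationInstanceArn=instance_arn,
        MigrationType="full-load-and-cdc",  # initial load, then ongoing CDC
        TableMappings=build_table_mappings("public"),  # schema name is assumed
    )


print(build_table_mappings("public"))
```

The `full-load-and-cdc` migration type is what lets a single task handle both the initial hydration and the ongoing replication.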

The second task is the event store (Amazon S3) to DynamoDB task. In our example scenario, we’re decomposing our monolith into microservices and picking the correct purpose-built database for the job. The first microservice we decompose, our “get widgets” function, is migrated to DynamoDB. This task manages the initial hydration and ongoing replication of our event store into this new microservice.

Run the replication tasks

After some sample data is generated (1–2 minutes), you can run the replication tasks. Complete the following steps:

On the AWS DMS console, locate the first task, which is the initial load and ongoing replication to Amazon S3.

Confirm that the task is in the Ready state.

Select the task and on the Actions menu, choose Restart/resume to run the task.

While the first task is running, repeat these steps to run the second task, which is the initial load and ongoing replication to DynamoDB.

Choose each task to view its progress and verify that records have been transferred.
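The console steps above can also be scripted. The following sketch assumes you already know the task ARN and pass in a `boto3.client("dms")`; the function name is hypothetical. It starts a task only when it reports the `ready` status, which mirrors the check in the steps above.

```python
def start_task_if_ready(dms_client, task_arn: str) -> str:
    """Start a DMS task that is in the ready state; return the status seen."""
    response = dms_client.describe_replication_tasks(
        Filters=[{"Name": "replication-task-arn", "Values": [task_arn]}]
    )
    tasks = response["ReplicationTasks"]
    if not tasks:
        raise LookupError(f"no replication task found for {task_arn}")
    status = tasks[0]["Status"]
    if status == "ready":
        # "start-replication" suits a task that has not run before; a stopped
        # task would typically use "resume-processing" instead.
        dms_client.start_replication_task(
            ReplicationTaskArn=task_arn,
            StartReplicationTaskType="start-replication",
        )
    return status


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   start_task_if_ready(boto3.client("dms"), "<your task ARN>")
```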

In this sample application, an Amazon EventBridge scheduled rule invokes a Lambda function every minute to write a new record to the source, simulating production traffic hitting our existing monolith. This forms the “command” part of our command and query segregation—throughout the migration and testing phase, the writes continue to be persisted to the legacy data store with reads being served from the new microservice data store.

The following screenshot confirms that data is being replicated to Amazon S3.

After a few seconds, we see that the task is replicating the data from the event store to DynamoDB, as shown in the following screenshot.

Verify data in Amazon S3

Let’s follow this data through the various components involved, starting with the event store. We can use this immutable, append-only log of the events in our system to hydrate future downstream microservices, or serve analytics functions in place.

On the Amazon S3 console, navigate to the bucket created by the AWS CloudFormation stack. There are two folders in the bucket: an initial load folder and a CDC folder.

Choose the CDC folder and observe the files containing the events transmitted from the source system.

Download one of the CSV files and open it in your preferred text editor.

Confirm that the inserts coming from the source system are present here (represented by the letter ‘I’ in the first column).

If our entities were mutable, we would also see update (U) and delete (D) type events present.
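If you prefer to inspect a downloaded file programmatically, a short script can tally the operation flags. The sample rows below are hypothetical: only the position of the flag in the first column comes from the description above, and the remaining columns are assumed.

```python
import csv
import io
from collections import Counter

# Hypothetical sample: the operation flag comes first, followed by the source
# table's columns (the column order here is an assumption).
SAMPLE_CDC_FILE = """\
I,101,sprocket,2023-01-01 10:00:00
I,102,gear,2023-01-01 10:01:00
I,103,cog,2023-01-01 10:02:00
"""

OPERATIONS = {"I": "insert", "U": "update", "D": "delete"}


def count_operations(csv_text: str) -> Counter:
    """Count CDC rows by the operation flag in the first column."""
    counts: Counter = Counter()
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue  # skip blank lines
        flag = row[0]
        if flag not in OPERATIONS:
            raise ValueError(f"unexpected operation flag: {flag!r}")
        counts[OPERATIONS[flag]] += 1
    return counts


print(count_operations(SAMPLE_CDC_FILE))
```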

The following screenshot shows a sample event file.

Validate data in DynamoDB

To view the data in DynamoDB, complete the following steps:

On the DynamoDB console, navigate to Explore items and select the table created by the CloudFormation stack.

In the Scan/Query items section, select Scan and choose Run.

Confirm that all the widgets from the source system are being replicated via the event store to the microservice data store.
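The same check can be scripted. The sketch below assumes a `table` object that behaves like `boto3.resource("dynamodb").Table(name)`; the function name is hypothetical. It follows `LastEvaluatedKey` so that tables larger than one scan page are read in full.

```python
def scan_all_items(table) -> list:
    """Return every item in a table, following scan pagination."""
    items = []
    kwargs = {}
    while True:
        page = table.scan(**kwargs)
        items.extend(page.get("Items", []))
        last_key = page.get("LastEvaluatedKey")
        if last_key is None:
            return items
        # Continue from where the previous page stopped.
        kwargs["ExclusiveStartKey"] = last_key


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   table = boto3.resource("dynamodb").Table("<your table name>")
#   print(len(scan_all_items(table)))
```

A full scan is fine for verifying a small sample table, but it reads every item, so it is not a pattern to use for serving application reads.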

Now our new microservice consumes the events directly from DynamoDB, and any new functionality consuming these events is developed against the new data store without adding more code to the monolith.

Solution benefits

Why have two steps rather than replicate from the source to destination directly?

Firstly, the event store is designed to be the canonical source of truth for events generated within our system. Even in the fullness of time when we have migrated away from the monolith, we still use the event store as the core of our system. This source of truth means that multiple downstream consumers can be hydrated from this single data store, for example multiple microservices that may need access to subsets of the same data with different access patterns (think a key-value lookup of a single widget vs. fuzzy searching over a range of widgets).

Secondly, the most common of these additional access patterns is analytics. Some systems have a dedicated reporting service with a data warehouse and carefully designed star schema, but for some analytics workloads this may not be required. Having access to the raw data in a semi-structured format in Amazon S3 to be consumed for ad hoc analysis by a querying tool such as Amazon Athena or for ingestion into a visualization and business intelligence platform such as Amazon QuickSight may be sufficient. The event-sourcing architecture has this functionality built in.

Solution 2: AWS DMS and Amazon MSK

Now we explore the solution using AWS DMS and Amazon MSK. As mentioned in the solution overview, this pattern is closer to real-time replication, so it is more appropriate for applications that require a more up-to-date view of the data. There is still some latency because the replication is asynchronous, so the application must still tolerate eventual consistency.

Run the replication task

To start the ongoing replication task, complete the following steps:

Deploy the CloudFormation template from the sample repository. Follow the instructions in the repository for how to deploy the template.

The template will take around 15 minutes to deploy. Once the template is deployed, wait 1–2 minutes for some sample data to be generated.

On the AWS DMS console, start the task created by the template. This task performs both the full load and the ongoing replication.

Select the task to verify that rows are now being replicated.

This data is being replicated into a Kafka topic within an MSK cluster. We then have two consumers of that topic; the first is our DynamoDB table. To hydrate this, we have a Lambda function reading the data from Kafka and writing it to DynamoDB. If we navigate to the DynamoDB console, there are now rows in our new table, as shown in the following screenshot.
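A consumer function of this kind might look like the following sketch. It is not the function from the sample repository. It assumes the Lambda event shape for Kafka sources (a `records` map whose entries carry base64-encoded values) and assumes each message is a JSON envelope with `data` and `metadata` keys; the operation names and helper names are likewise assumptions for illustration.

```python
import base64
import json

# Operations treated as upserts in this sketch (assumed envelope values).
UPSERT_OPERATIONS = {"load", "insert", "update"}


def extract_rows(event: dict) -> list:
    """Decode Kafka records from a Lambda event into row dicts."""
    rows = []
    for records in event.get("records", {}).values():
        for record in records:
            message = json.loads(base64.b64decode(record["value"]))
            metadata = message.get("metadata", {})
            # Keep data changes only; skip control messages and deletes.
            if metadata.get("record-type") != "data":
                continue
            if metadata.get("operation") not in UPSERT_OPERATIONS:
                continue
            rows.append(message["data"])
    return rows


def make_handler(table):
    """Build a Lambda handler that writes decoded rows to a DynamoDB table.

    `table` is expected to behave like boto3.resource("dynamodb").Table(name).
    """
    def handler(event, context):
        rows = extract_rows(event)
        for row in rows:
            table.put_item(Item=row)
        return {"written": len(rows)}

    return handler
```

Deletes are skipped here because the example entities are immutable; a consumer for mutable entities would also need to remove items.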

The second consumer of the topic is an MSK Connect connector. For our connector, we’re using the Confluent S3 Sink plugin. For more details on configuring Amazon MSK using this plugin, refer to Examples. This connector provides a managed service for replicating the event stream into our S3 event store bucket. If we navigate to the Amazon S3 console and open the bucket created by the template, we see that we have the raw events from Kafka being streamed directly to the bucket.
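As a rough guide to what such a connector needs, the sketch below assembles a property map for the Confluent S3 Sink plugin. The property names are the plugin's own, but the values (JSON output, the default partitioner, a flush size of 100, one task) are illustrative assumptions and not necessarily what the sample template uses.

```python
def s3_sink_config(topic: str, bucket: str, region: str, flush_size: int = 100) -> dict:
    """Build a connector configuration as a map of string keys to string values."""
    config = {
        "connector.class": "io.confluent.connect.s3.S3SinkConnector",
        "tasks.max": 1,
        "topics": topic,
        "s3.region": region,
        "s3.bucket.name": bucket,
        "flush.size": flush_size,  # records written per S3 object
        "storage.class": "io.confluent.connect.s3.storage.S3Storage",
        "format.class": "io.confluent.connect.s3.format.json.JsonFormat",
        "partitioner.class": "io.confluent.connect.storage.partitioner.DefaultPartitioner",
        "schema.compatibility": "NONE",
    }
    # Connector configurations are passed as strings, so convert every value.
    return {key: str(value) for key, value in config.items()}


# Hypothetical topic, bucket, and Region names.
print(s3_sink_config("widgets", "my-event-store-bucket", "eu-west-1"))
```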

From here we could use this event stream in the same way as described earlier. We can provide the staging area for an extract, transform, and load (ETL) process, facilitate in-place analytics via Athena and QuickSight, or hydrate further downstream consumers such as additional microservices.

Solution benefits

Why not default to this solution rather than the AWS DMS-only solution? Although the implementation using Amazon MSK has lower latency, it introduces the additional cost of running an MSK cluster perpetually, along with the complexity of this additional moving part. For more sophisticated or latency-sensitive operations, this is a worthy trade-off. For some applications, such as reporting and analytics where an eventual consistency of seconds is acceptable, the AWS DMS-only solution may present a simpler and more cost-effective solution.

Clean up

Several of the services discussed in this post fall within the AWS Free Tier, so you only incur charges for those services after you exceed the Free Tier usage limits. You can find detailed pricing on the Lambda, DynamoDB, Amazon RDS, and Amazon MSK pricing pages.

To avoid incurring any unexpected charges, you should delete any unused resources. If you deployed the example AWS SAM template, delete the CloudFormation stack via the AWS CloudFormation console.
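If you would rather script the cleanup, the following sketch deletes a stack and waits for the deletion to finish. It assumes a `boto3.client("cloudformation")` is passed in and that you know the stack name you chose at deployment; the function name is hypothetical.

```python
def delete_stack_and_wait(cfn_client, stack_name: str) -> None:
    """Delete a CloudFormation stack and block until deletion completes."""
    cfn_client.delete_stack(StackName=stack_name)
    # The waiter polls the stack status until deletion succeeds or fails.
    waiter = cfn_client.get_waiter("stack_delete_complete")
    waiter.wait(StackName=stack_name)


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   delete_stack_and_wait(boto3.client("cloudformation"), "<your stack name>")
```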

Conclusion

In this post, we have explored two ways to implement an event-sourced system using AWS DMS. Event sourcing and CQRS are becoming increasingly common as more companies implement them to move away from legacy and monolithic solutions and design future-proof solutions. The key part of both solutions is the immutable, append-only event store in Amazon S3. This forms the foundation from which new microservices, analytics solutions, and alternate access patterns are served.

But perhaps most crucially of all, it future-proofs the architecture. The best data store for your application today might not be the best data store for your application in 5 or 10 years’ time. Having data portability and the ability to replay these events into any data store in the future gives you tremendous flexibility. It also gives you the ability to create data lakes or data mesh architectures from each service’s in-situ event store, giving greater discoverability, and therefore greater value, to your data.

Try out the solution today by cloning the sample repository. Share any thoughts in the comments on these and any other implementations of CQRS and event sourcing on AWS.

About the author

Josh Hart is a Senior Solutions Architect at Amazon Web Services. He works with ISV customers in the UK to help them build and modernize their SaaS applications on AWS.
