Many organizations are benefiting from the performance gains and ease of administration of the Amazon Aurora database platform. The idea of breaking free from commercial licenses, backup administration, and data center maintenance is always a welcome thought. But where to begin, and how complex is the journey to migrate from SQL Server to Amazon Aurora PostgreSQL-Compatible Edition are common questions. AWS offers different paths for your migration journey, such as the AWS Schema Conversion Tool (AWS SCT), which makes conversions to PostgreSQL easier, and Babelfish, which allows Aurora to understand the SQL Server TDS protocol and language.

In this post, we focus on the approach of re-architecture directly into the PostgreSQL language without using Babelfish. It uses the AWS SCT and AWS Database Migration Service (AWS DMS) to convert code from SQL Server to PostgreSQL. For more information about migrating using Babelfish, visit Using Babelfish to migrate to PostgreSQL.

We also discuss lessons learned and best practices captured directly from real migration scenarios in the field.

Database migration challenges

One of the most time-consuming and technically challenging tasks for organizations and DBAs when deciding to migrate from one database platform to another is the planning phase, including both schema conversion and the physical migration of the data. We can break this phase down into five main components:

Cost – How expensive will this migration initiative be?

Complexity – Does my organization have the skills and time to ensure a successful migration?

Application downtime – How do I architect the migration to fall within my downtime SLA?

Engine-specific database code – What tool or process should I use to modify my platform?

Migration mechanisms – What tools will I use to perform the physical migration?

This post focuses on migration mechanisms and engine-specific database code.

Common database migration patterns

Database migrations often happen in phases to minimize risk and associated downtime. Typically, companies choose from three common migration strategies when migrating to AWS. We briefly describe each of these in this section.

Rehost (homogeneous)

Rehosting (otherwise known as lift and shift) involves migrating a database to the cloud in its current form. This reduces the time you spend managing database infrastructure such as the data center and physical servers. Other than the physical migration of the data to a cloud-managed virtual instance, all other aspects of the database remain the same.

This is the easiest migration approach because it lets organizations move quickly, with smaller risk or business impact, while removing the burden of maintaining a data center and other physical infrastructure. This approach does have drawbacks, however, because you’re still carrying many aspects of the operational management burden that you had on premises, such as managing backups, commercial licenses, and operating system patching and maintenance.

An example of rehosting is migrating an on-premises PostgreSQL database instance to Amazon EC2.

Replatform (homogeneous)

Replatforming a database allows you to move to a cloud-based fully managed database service while keeping the source database engine unchanged (homogeneous migration). Keeping the database engine the same minimizes complexity and risk of the overall migration while still benefiting from all the features included with a fully managed database service offering.

An example of replatforming is migrating an on-premises SQL Server to Amazon Relational Database Service (Amazon RDS) for SQL Server.

Re-architect (heterogeneous)

Re-architecting (otherwise known as rebuilding or refactoring) a database can involve many changes, which may include moving from a relational engine such as on-premises SQL Server to Aurora PostgreSQL. This may also include decomposing a database schema into smaller and more defined subsets that lend well to a microservices model. This may also lead to utilizing several different database platforms and models. Re-architecting provides the right data platform for your workload. This is the most time-consuming and difficult approach, but also yields the most long-term rewards.

The following examples of re-architecting include decoupling a database into several separate entities based on application need:

NoSQL key-value (Amazon DynamoDB)

NoSQL graph (Amazon Neptune)

Relational (Amazon Aurora or Amazon RDS)

For this post, we focus on the re-architect migration pattern, specifically the migration from on-premises SQL Server to Aurora PostgreSQL.

Convert schema and migrate using AWS purpose-built tools

The complexities of converting and migrating a database (including constraints, tables, indexes, stored procedures, and views) from one database platform to another can be involved and time-consuming, especially when coupled with strict uptime requirements. Fortunately, when re-architecting SQL Server to Aurora PostgreSQL, you have access to purpose-built tools such as AWS SCT and AWS DMS. In addition, we have developed particular prescriptive guidance through a comprehensive migration playbook. This playbook guides you through the complete journey of converting a SQL Server database schema to Aurora PostgreSQL, implementing the schema changes on the new instance, and finally migrating the data.

AWS SCT

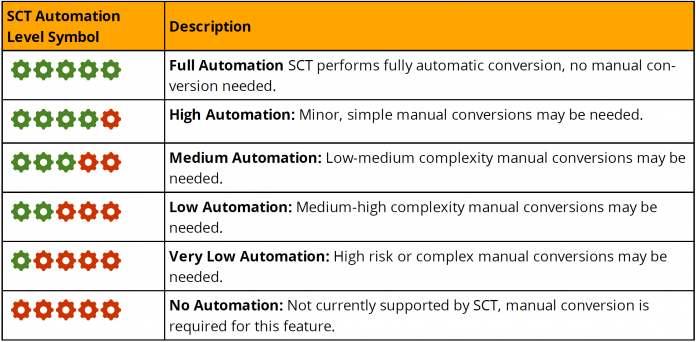

AWS SCT provides a project-based user interface to automatically convert the database schema of your source database into a format compatible with your target RDS instance. The following table indicates the level of automation AWS SCT gives for each type of SQL Server database object. This also gives you an idea of how much manual work is required for each object when converting to Aurora PostgreSQL. For example, converting SQL Server tables, views, and stored procedures has an automation rating of 4/5, which implies a higher level of automation versus manual work. In contrast, converting objects like cursors have a conversion automation rating of 3/5, which requires a bit more manual work.

For a deep dive into the AWS SCT, refer to the migration playbook.

AWS DMS

AWS DMS helps you migrate databases to AWS quickly and securely. The source database can remain fully operational during the migration, minimizing downtime to applications when failover occurs. AWS DMS supports homogeneous migrations such as on-premises Microsoft SQL Server to Amazon RDS for SQL Server as well as heterogeneous migrations between different database platforms such as on-premises Oracle to Amazon DynamoDB and Microsoft SQL Server to Amazon DynamoDB.

An example use case is mapping a relational SQL Server table schema to a NoSQL DynamoDB-compatible key-valued table and then migrating the data.

Lessons learned

Now that we’re equipped with the necessary tools and processes, we go a bit deeper into some of the lessons we learned when migrating from SQL Server to Aurora PostgreSQL. In this section, we discuss a relevant use case involving a well-known customer from the telecommunications sector.

The challenge

This customer generated $126 billion in 2017 revenues. The company operates reliable wireless and all-fiber networks, and delivers integrated solutions to businesses worldwide. One of its subsidiaries reaches about one billion people around the world with dynamic media and technology brands. They are currently operating a mission critical application that supports a customer ordering interface used by call representatives (users) to attend to its customers. The application is comprised of 40 Microsoft SQL instances, totaling 97 databases, 47 TB of storage, and a large schema footprint that includes 1,919 stored procedures and 3,021 tables. Given its business criticality, the application has millisecond latency SLAs for all APIs and the customer wanted to preserve similar performance in Aurora PostgreSQL.

The solution

AWS Professional Services enabled the customer to perform an in-depth analysis of data layout in the SQL Server database and implemented a migration strategy for its complex schema from SQL Server to Aurora PostgreSQL with no impact on source using AWS SCT and AWS DMS. AWS SCT released five versions for its schema conversions from Microsoft SQL Server to PostgreSQL to ensure the automatic conversion rate went up from 65% to 85%. The PostgreSQL design schema was fine-tuned by understanding the SQL Server’s data flow patterns.

The result

As part of the modernization initiative, the application successfully migrated to a microservices architecture.

The customer re-architected 40 in-house SQL Server database servers to nine Amazon Aurora instances, significantly reducing operational, licensing, and hardware costs. End to end, the customer completed the database schema migration for its over 8,000 database objects and functional code testing on the converted schema in 12 months. Currently, the application is in production meeting the defined SLAs of its business needs.

Migration best practices

In this section, we share best practices we uncovered when converting SQL Server to Aurora PostgreSQL. These are lessons learned directly from real database migration engagements performed for customers around the world. Each category includes a table that summarizes each scenario with corresponding links to relevant documentation and further reading.

SQL Server management

The SQL Server Agent is a Microsoft Windows service that runs scheduled administrative tasks, which are called jobs in SQL Server. PostgreSQL doesn’t have the equivalent of the SQL Server Agent, and any processes that rely on the agent need to be replaced. There are basically two kinds of jobs: backups and everything else.

Backup jobs – With Aurora PostgreSQL, you no longer have to concern yourself with backing up the database. The equivalent of SQL Server full, differential, and transaction log backups are taken care of for you, right out of the box and available to restore within a 5-minute RPO. If you need to hold on to backups for longer than the default 35 days provided by Aurora, you have the option to take Aurora database snapshots, which you can place on a schedule and hold in Amazon Simple Storage Service (Amazon S3) for as long as required. For all other jobs, you have the ability to replace them with AWS Lambda functions.

Non-backup SQL Server Agent jobs – You can replace SQL Server Agent jobs using AWS Lambda, a compute service that lets you run code without provisioning or managing servers. However, this is not a simple mapping activity in which you will find an appropriate equivalent for each SQL Server Agent job. Each use case has to be analyzed in detail to verify feasibility of the migration and determine the appropriate design pattern to use. For additional details, refer to the links in the following table.

Issue

Resolution

Reference

Replace SQL Server Agent jobs used to back up databases

Utilize Aurora backup/restore processes

Automated continuous backups (Amazon S3)

Point-in-time database restores

Database cloning

Overview of backing up and restoring an Aurora DB cluster

Replace non-backup SQL Server Agent jobs

Replace with Lambda functions

Schedule jobs for Aurora PostgreSQL using Lambda and Secrets Manager

SQL Server security

In this section, we share tips and resources for securing Aurora PostgreSQL when migrating from SQL Server:

Database schema – Both SQL Server and PostgreSQL utilize one or more named schemas, which in turn contain database objects such as tables, views, functions, operators, and so on. PostgreSQL default behavior automatically puts newly created databases in a schema named public and allows open access to any PostgreSQL user account. In contrast, SQL Server default behavior assigns ownership of the schema to the security principal that created it, and it can’t be accessed by anyone else unless explicitly granted appropriate permissions. When migrating to PostgreSQL, you need to modify the public schema so that it becomes secure. For a deeper look into migrating the database schema, see Converting SQL Server to PostgreSQL.

Linked servers – Foreign Data Wrappers are PostgreSQL’s equivalent of SQL Server linked servers. Use this PostgreSQL feature to create an equivalent solution on the target platform.

Issue

Resolution

Reference

Automatic object permission inheritance with schema-based security

Create a custom pgSQL script

Lock down Postgres public schema

Assign required permissions

Run on schema-create or modification

Ownership and User-Schema Separation in SQL Server

Schema-Based Access Control for SQL Server Databases

PostgreSQL Privileges and Security – Locking Down the Public Schema

Linked servers not supported

Use Foreign Data Wrappers

SQL Server performance

In this section, we share tips and resources for converting and optimizing Aurora PostgreSQL performance when migrating from SQL Server:

Extensive use of triggers – Even though triggers can be very handy and enable a lot of data-driven functionality, given the amount of overhead they introduce to tables (especially those with high transaction rates), we recommend reducing the number of triggers used in your database solution to a minimum. The migration to a new data platform could be an opportunity to rethink triggers.

Use of nested triggers – Given what we stated about triggers, using nested triggers only compounds the overhead problems they introduce. Again, in this case, the recommendation is to re-architect this portion of your database solution without using nested triggers.

Heavy use of TempDB (joins, sorts) – With Aurora PostgreSQL, temporary log files and temporary tables are persisted locally, on each instance node. Therefore, in use cases with heavy use of TempDB, special attention needs to be paid to local storage availability. For additional details, refer to the links included in the following table.

Extensive use of linked tables – Based on previous experiences from other migrations, we have found that consolidating all linked tables into a single target PostgreSQL database renders improved management and performance.

Converted PostgreSQL functions – To deliver optimal performance under the new data platform, closely monitor PostgreSQL functions, make sure that all read-only functions are set as IMMUTABLE, and consider using the PARALLE_SAFE option when appropriate.

Embedded multiple inline queries in application – If you encounter applications with embedded multiple inline queries, an effective workaround in PostgreSQL is batching these queries together inside a single PL/pgSQL anonymous block.

Issue

Resolution

Reference

Extensive use of triggers

Reduce number of triggers to minimum

Use of nested triggers

Reimplement and remove recursive triggers

(pg_trigger_depth() = 0)

SQL Server Nested Triggers

System Information Functions

Heavy use of TempDB (joins, sorts)

How can I troubleshoot local storage issues in Aurora PostgreSQL instances?

Extensive use of linked tables

Consolidate all linked tables into a single database

Converted PostgreSQL functions

Set read-only functions to IMMUTABLE

Consider using the PARALLEL SAFE option

Monitor functions’ performance

Function Volatility Categories

Boost your User-Defined Functions in PostgreSQL

Embedded multiple inline queries in application

Batch queries into single PL/pgSQL anonymous block

How to use block structure to write anonymous blocks

SQL Server Entity Framework integration

Entity Framework is one of the most pervasive object-relational mappers (ORMs) for ASP.NET. An ORM maps an application’s object entities to relational entities in a database, and allows developers to build and edit the database schema from the code. Support for Microsoft Entity Framework isn’t available out of the box as of this writing, but you can implement it using Entity Framework Core (EFCore), a more lightweight and flexible version that specifically enables .NET objects. It also reduces the amount of data access code developers need to write, and offers higher-performance APIs.

Issue

Resolution

Reference

Application uses Microsoft Entity Framework table inheritance feature

Manually add primary or foreign key to simulate inheritance behavior

Babelfish for Aurora PostgreSQL is planning to support Entity Framework

PostgreSQL Table Inheritance in entity framework

Npgsql Entity Framework Core Provider

Get to Know Entity Framework and PostgreSQL

Transact SQL conversion to PL/pgSQL

The following are tips and resources for converting SQL Server stored procedures and general T-SQL when migrating from SQL Server to Aurora PostgreSQL:

MERGE statement –If you encounter MERGE statements in your database or application code, the only alternative available as of this writing is to rewrite the query using the PL/pgSQL INSERT statement in combination with the ON CONFLICT DO UPDATE clause. This achieves similar functionality.

PIVOT/UNPIVOT statement – If you encounter PIVOT/UNPIVOT statements in your database or application code, manual conversion is required. However, using the crosstab function contained in the tablefunc Postgres module provides the functionality required to produce equivalent results as the original query.

IIF statement – Even though the IIF statement isn’t supported out of the box in PL/pgSQL, this can be easily implemented as an immutable function.

Table-valued user-defined functions – Table-valued functions are supported in PostgreSQL. However, there are implementation and syntactical differences to be considered.

TRY/CATCH block – If you encounter TRY/CATCH block statements in your database or application code, manual conversion is required. However, if you can upgrade to PostgreSQL version 11 or later, a very similar implementation is available.

System-versioned table not supported in PostgreSQL – The functionality equivalent to SQL Server system-version tables needs to be manually implemented. The link included in the following table references a community-based PostgreSQL extension that implements this feature. Caution and thorough testing are strongly encouraged.

Stored procedures not supported in PostgreSQL – By design, native support for .NET CLR stored procedures isn’t offered in PostgreSQL. If CLR stored procedures are part of the database solution being migrated, the two available options are to rewrite the stored procedure using PL/pgSQL or re-implement the stored procedure using AWS Lambda. Depending on the specific technical or business purpose of these stored procedures, different alternatives can be considered, as suggested in the migration playbook referenced in the following table.

MD5 hash for special characters doesn’t match with PostgreSQL MD5 hash – Based on previous experiences from other migrations, we have found that if special characters are included in the columns being hashed, the PostgreSQL MD5 hash function generates different results than the same hash in SQL Server.

Issue

Resolution

Reference

MERGE statement

Requires manual conversion

INSERT … ON CONFLICT DO UPDATE

PIVOT/UNPIVOT statement

Requires manual conversion

Consider tablefunc module or crosstab function

Table functions

Pivot Tables in PostgreSQL Using the Crosstab Function

IIF statement

Requires manual conversion

Write a replacement function in pgSQL

Table-valued user defined functions

Rewrite the function

How to Develop a PL/pgSQL Function That Returns a Table

TRY/CATCH block

Requires manual conversion

Postgres 11 has a close implementation

Try catch equivalent in Postgres

System-versioned table not supported in PostgreSQL

Manually set up triggers to update the corresponding history table (extension)

Temporal Tables Part 2: Setup Database and Create Tables

CLR Stored procedures not supported in PostgreSQL

Rewrite stored procedure using PL/pgSQL or Lambda

P. 124 of Microsoft SQL Server To Amazon Aurora with PostgreSQL Compatibility: Migration Playbook

MD5 hash for special characters don’t match with PostgreSQL MD5 hash

Avoid special characters in columns being hashed

Conclusion

In this post, we discussed the process to successfully re-architect and migrate SQL Server to Aurora PostgreSQL by using AWS purpose-built tools. We also shared lessons learned and best practices captured directly from real migration scenarios in the field. You can begin your migration journey by planning a proof of concept with Amazon Aurora and incorporate the above best practices.

If you have any questions, comments, or suggestions, please leave a comment below.

About the Authors

Camilo Leon is a Senior Database Solutions Architect at AWS. He works with AWS customers to provide guidance and technical assistance on relational database services, helping them improve the value of their solutions when using AWS. In his spare time he enjoys mountain biking, hiking with his Beagle, photography, and movies.

Dustin Brown is a Senior Database Solutions Architect at AWS who provides customers with prescriptive guidance for database architecture and migration patterns. Prior to joining AWS, he held DBA and technical leadership roles for varying companies in the airline, real estate, and genealogy industries to name a few. Outside of work he enjoys spending time with his wife and 5 children, and when he gets the chance he loves to sing.

Read MoreAWS Database Blog