In a relational database management system, a unique key is a set of one or more attributes (columns) that uniquely identifies each row in a table. It ensures that no two rows in the table can have the same values for the specified attributes. The following are the features of a unique key:

Uniqueness – A unique key ensures that each value in the specified attributes is unique within the table. No two rows can have the same combination of values for the attributes defined as the unique key.

Indexing – A unique key is typically indexed by the database system to enhance the performance of data retrieval operations. Indexing allows for faster searching and retrieval of data based on the unique key.

Constraint enforcement – The unique key constraint enforces the uniqueness of the specified attributes. If an attempt is made to insert or update a row with duplicate values for the unique key, the database system will generate an error and prevent the operation.

Relation to primary key – A unique key can be used as an alternative to a primary key in a table. Although a primary key uniquely identifies each row and serves as a foreign key reference in other tables, a unique key provides uniqueness – unlike a primary key, which doesn’t allow NULL values.

In a relational database management system (RDBMS), a unique key is a candidate key. All the candidate keys of a relation can uniquely identify the records of the relation, but only one of them is used as the primary key of the relation. The remaining candidate keys are called unique keys because they can uniquely identify a record in a relation. Unique keys can consist of multiple columns forming a multicolumn unique key. This means that the combination of values in the specified attributes must be unique for each row in the table, rather than considering each attribute individually.

In this post, we show you how unique keys are handled in various database engines. We also demonstrate how you can handle the data integrity challenges while migrating to Amazon Relational Database Service (Amazon RDS) for PostgreSQL or Amazon Aurora PostgreSQL-Compatible Edition.

Unique keys in PostgreSQL

In PostgreSQL, there are two more ways to define the uniqueness (other than a primary key) of the data in a column (or multicolumn) for a given table. It can be either via a unique index or a unique constraint, which are implemented in PostgreSQL as follows:

Currently, only B-tree indexes can be declared unique.

Two NULL values are not considered equal. Note that PostgreSQL 15.x introduced NULLS NOT DISTINCT, which we discuss later in this post.

A multicolumn unique index will only reject records where all indexed columns have equal values in multiple rows.

PostgreSQL automatically creates a unique index when a unique constraint or primary key is defined for a table.

Users can’t apply functions (such as COALESCE) in a unique constraint defined at the time of CREATE TABLE, because it throws error. However, if you create a unique index separately, then the same function works without any error.

Let’s consider a payroll table where the employee is not assigned to any department during the initial probation period and a unique key is defined on the columns employee_name and department.

In this case, PostgreSQL allows you to store more than one record with same employee_name and NULL values in the department column, which is valid because every NULL value is considered different.

In the following sections, we look how NULL values are treated within the unique keys across different database engines.

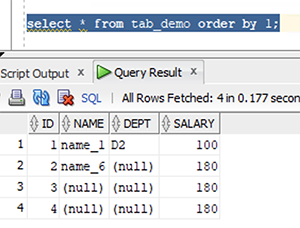

Null values in Oracle

In Oracle, if all columns of unique-key are null, then the table can have multiple such rows. However, if a few columns are not null, then only one combination of null is allowed. In the following example, if you try to enter the multiple values of (‘name_6’, null), only one is allowed. This behavior is now achievable in PostgreSQL 15.x with newly introduced NULLS NOT DISTINCT; the other throws an error:

The following insert statement will result in a duplicate key violation error:

Null values in Microsoft SQL Server and SAP ASE/Sybase ASE

SQL Server (and SAP ASE/Sybase ASE) doesn’t allow more than one record if one or all columns defined as unique are inserted with a null value:

The following statement with null values in both the name and dept columns throws a duplicate key error because the combination already exists in the table with ID value 3:

Similarly, the following statement with a null value only in the dept column throws a duplicate key error because the combination already exists in the table with ID value 2:

Null values in IBM Db2 LUW

In Db2 LUW, null values are not allowed in the columns defined as unique or the columns used in a composite unique constraint. Db2 expects that you explicitly enforce a NOT NULL constraint for the columns defined as unique.

For example, the following table definition would fail in Db2:

To resolve this, the NOT NULL constraint must be enforced as shown in the following code so that no null values are allowed:

This makes sure that there is no duplication of data based on the unique key. However, this behavior will be different if you define a unique index, which allows one NULL value.

Null values in PostgreSQL

PostgreSQL allows null values in columns defined as unique or the columns used in a multicolumn unique constraint. By default, in PostgreSQL, two null values are not considered equal; however, PostgreSQL 15.x introduced NULLS NOT DISTINCT (which we discuss later in this post). That means that even in the presence of a unique constraint, it’s possible to store duplicate rows that contain a null value in at least one of the constraint columns.

This behavior can result in a data discrepancy after migrating from other commercial database engines like Oracle, SQL Server, or Db2.

See the following example code:

PostgreSQL will accept the following insert statements, even though a similar record already exists in the table:

In the following sections, we discuss various solutions or workarounds that are available to mitigate this behavior in PostgreSQL.

Using a function-based unique index

In this method, you allow only one null value combination in columns defined as a unique index using a built-in function. For example, you can use the COALESCE function, as shown in the following code:

When you try to insert the following record, it fails with a duplicate key violation:

In this solution, only one combination of the null values in columns defined as unique or the columns used in composite unique keys are allowed.

Using an array-based unique index

This is similar to the previous solution. In this option, you create a unique index using the ARRAY data type, as shown in the following code:

When you try to insert the following record, it fails with a duplicate key violation:

In this solution, only one combination of the null values in columns defined as unique or the columns used in composite unique keys is allowed, which is similar to the first solution.

In both methods, we were able to restrict data to only one null combination. However, this isn’t yet in line with the Oracle structure, where null values in all of the columns of unique-key can still be a valid scenario. To achieve the same behavior, we examine another solution.

Using a unique index using the case structure

In this solution, you create a unique index using a case structure, as shown in the following code. This allows you to store more than one record with null values in the columns defined as unique or the columns used in a composite unique constraint.

The following insert statements would succeed:

However, the following statement would fail with a duplicate key violation:

In this solution, you can achieve Oracle-like behavior to store null columns where, if all columns of unique-key are null, then the table can have multiple such rows. However, if a few columns are not null, then only one combination of null is allowed.

If your columns have different data types, you can still use this solution with some adjustments as per your use case. For example, in the following table definition, the column name is VARCHAR and dept is an integer:

Using the NULLS NOT DISTINCT clause

From PostgreSQL 15 onwards, you can include the clause NULLS NOT DISTINCT for the columns defined as unique or the columns used in a composite unique constraint in a table definition to make sure that only one such combination is allowed to be stored. See the following code:

The following insert fails with a duplicate key violation:

Comparison summary

The following table summarizes the solutions discussed in this post.

Solution

Supported PostgreSQL Version

Description

Function-based unique index

>=11

Supports SQL Server and SAP ASE/Sybase ASE unique key behavior

Array-based unique index

>=11

Supports SQL Server and SAP ASE/Sybase ASE unique key behavior

Unique index using the case structure

>=11

Supports Oracle unique key behavior

NULLS NOT DISTINCT clause

>=15

Supports SQL Server and SAP ASE/Sybase ASE unique key behavior

Conclusion

In this post, we discussed how null values are handled by unique keys across various database engines and how you can mimic or achieve the source database functionality while migrating to PostgreSQL. When inserting or updating records with more than one null value for the columns defined as unique keys, PostgreSQL doesn’t raise warnings or errors because two null values are not the same. This may have an adverse impact on business functionality after migrating to PostgreSQL. Therefore, it’s important to validate and apply the solutions described in the post as part of your schema migration phase.

Let us know if you have any comments or questions. We value your feedback!

About the authors

Sai Parthasaradhi is a Database Migration Specialist with AWS Professional Services. He works closely with customers to help them migrate and modernize their databases on AWS.

Vikas Gupta is a Lead Database Migration Consultant with AWS Professional Services. He loves to automate manual processes and enhance the user experience. He helps customers migrate and modernize workloads in the AWS Cloud, with a special focus on modern application architectures and development best practices.

Read MoreAWS Database Blog