Amazon Relational Database Service (Amazon RDS) for Oracle is a fully managed commercial database that makes it easy to set up, operate, and scale an Oracle database in the cloud. One of its features is storage auto scaling, which allows the database storage to grow automatically, as needed, up to the maximum capacity (64 TiB) currently supported by Amazon RDS for Oracle. We use a high-water mark model to determine storage allocation, and you only pay for the allocated storage. The high-water mark is the maximum amount allocated to the instance volume at any time during its existence. Because the allocated storage of the instance may not be decreased, it’s beneficial to avoid expanding the database size without need.

However, over time, you may engage in data purging or cleanup activities, resulting in less than full utilization of your allocated storage capacity. Following a significant data purge or reduction, you need a strategy to reclaim the storage allocated to the database and eventually reduce costs.

In this post, we provide a mechanism to reduce the storage footprint during migration of an RDS for Oracle instance using Oracle Data Pump and AWS Database Migration Service (AWS DMS).

Solution overview

The solution uses Oracle Data Pump over a database link to copy the data from a source Oracle database to a target RDS for Oracle instance. For migrations that require minimal downtime, or using database links isn’t feasible, an alternative option is to use AWS DMS. With this solution, you don’t have to allocate additional storage for Data Pump files for importing into Amazon RDS for Oracle. After the data is copied, applications can use the connection string for the new RDS for Oracle instance. In the case where both the source and target are RDS for Oracle instances, you may also rename the new instance to the original instance name to avoid any changes to the connection string used by the application. Also note that the solution requires that the source and target Oracle databases can connect to each other.

You can also use this solution in the following use cases:

Migrating data from an Oracle database located on premises or an Amazon Elastic Compute Cloud (Amazon EC2) instance to or from Amazon RDS for Oracle and Amazon RDS Custom for Oracle without allocating additional storage to hold logical export files.

Performing an out-of-place upgrade of your RDS for Oracle instance with low downtime.

In this post, we discuss two options to perform the migration, each with advantages and trade-offs:

Option 1 – Perform a one-time data load with the applications down throughout the migration

Option 2 – Perform an initial data load and migrate all incremental changes using AWS DMS, with the application running

The following table summarizes the benefits and disadvantages of each option.

.

Pros

Cons

Option 1: Increased downtime

Simple and fewer steps involved in migration

No additional services required to migrate data

Lower cost

Greater application downtime that depends on database size, power of source and target instances, and process optimizations

Perform schema object validation, stats capture, and more in the target instance before cutover, thereby extending the application downtime

Option 2: Minimal downtime

Minimal application downtime

Perform schema object validation, stats capture, and more in the target instance while the application is running in the source database and incremental changes are being replicated by AWS DMS

Flexible to choose a cutover window

More steps involved in migration

Additional cost for AWS DMS to migrate incremental data

Option 1: Perform a one-time data load with the applications down throughout the migration

The first option uses Oracle Data Pump export/import over a database link to populate the target database when the application can afford a longer downtime. The downtime starts from the time the application is brought down to perform the database export until the application reconnects to the target database after import.

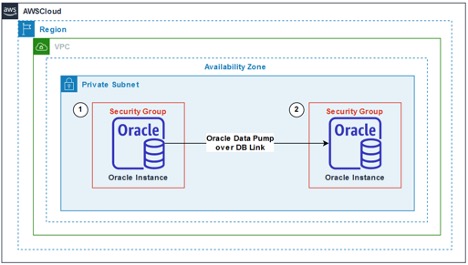

In the following diagram, we show a migration from Amazon RDS for Oracle (1) to Amazon RDS for Oracle (2) as an example. You can apply a similar method if the source Oracle database (1) is an RDS for Oracle instance, an Oracle database hosted on Amazon EC2, or hosted on premises.

Option 2: Perform an initial data load and migrate all incremental changes using AWS DMS, with the application running

The second option uses Oracle Data Pump over a database link and change data capture (CDC) replication with AWS DMS. This scenario is suitable when the application requires minimal downtime.

In the following diagram, the target RDS for Oracle DB instance (2) runs an Oracle Data Pump job to extract data from the source RDS for Oracle DB instance (1). After the Oracle Data Pump import using the source system change number (SCN) for consistent copy is complete, we use an AWS DMS task (3) to start the CDC replication, using the same SCN that was used for the Oracle Data Pump import as the start point of replication. Refer to Performing replication starting from a CDC start point to learn more.

Data migration steps for both options

To perform the data migration, complete the following steps:

Create a new RDS for Oracle instance with automated backups disabled and in Single-AZ mode.

If migrating from Amazon RDS for Oracle or Amazon EC2, create the DB instance in the same Availability Zone as the source instance. Also, pre-allocate storage close to what the database size will be once populated and set storage auto scaling on with a reasonable maximum capacity.

Set up the instance with the following DB parameter group settings to prevent any database jobs (dba_jobs or dbms_scheduler_jobs) from running before cutover:

Change the redo log file from its default of 128 MiB to match that of the source instance or as determined to be appropriate for your use case. In the following example, a new redo log group is added with size 1 GiB after logging in to the database using a client tool like sqlplus or SQL Developer:

Log in to the target instance and create a database link to the source instance. Choose a user name in the source instance that has DATAPUMP_EXP_FULL_DATABASE permission.

If using Option 1 for migration, make sure that application connected to database is down and there is no activity in the database while migrating data from the source instance to the target instance. Run the following command to help identify users or application sessions still connected to the database.

Run the following script from the target instance to load the data over the database link:

You can use the preceding script to migrate the entire database to the target DB instance. If you need to migrate only a specific list of schemas, refer to the following code:

Note the Flashback SCN and Datapump Job Name values that are displayed as outputs in the previous step.

Monitor the status of the import job and review the import log by running the following commands in the target instance:

After the import is complete, compare the database schema object count and status between the source and target instance. Recompile objects if any are invalid by running the following commands in the target instance:

If necessary, grant permission to system objects using the Amazon RDS procedure rdsadmin.rdsadmin_util.grant_sys_object.

Gather dictionary-level and schema-level statistics for optimal performance.

You can now enable automated backups and the Multi-AZ option for the target instance.

Cutover

If you chose Option 1 for data migration, you can now cut over the application to the target RDS for Oracle instance.

For Option 2, complete the following additional steps in the target instance, after you have completed the import and compared the database objects:

Generate the commands to disable triggers and foreign key constraints in the target instance:

Run the output commands from Step 1 to disable triggers and foreign key constraints in the target instance.

Generate commands to enable triggers and foreign key constraints in the target instance.

To migrate incremental changes from the source instance to the target instance, follow the sections Provisioning the AWS DMS instances, Creating the AWS DMS migration task, and Data validation in the post Migrating Oracle databases with near-zero downtime using AWS DMS to complete the migration and cutover. Use Flashback SCN while configuring the AWS DMS task (in the System change number field). Be sure to retain archive logs for an extended period to include the captured Flashback SCN.

Wait until the desired cutover time while monitoring replication lag using Amazon CloudWatch.

Stop applications when the CDCLatencySource and CDCLatencyTarget is close to 0 and allow final changes to flow through.

Run the output commands of Step 3, to enable triggers and foreign key constraints in the target instance.

Run the following SQL in the source Oracle database to generate commands to reset the sequence values before cutover:

Monitor the target instance by configuring appropriate CloudWatch alerts (see Monitoring Amazon RDS metrics with Amazon CloudWatch) and Amazon RDS events.

Modify the target instance parameter job_queue_processes with the appropriate value from the source instance.

This completes the migration of data from source to target RDS for Oracle DB instance. You can now cut over applications to the target instance.

Clean up

After you cut over to the target instance, you can stop the source instance to avoid instance charges. After the migration is confirmed to be successful, you can delete the instance. For instructions, refer to Deleting a DB instance.

Conclusion

In this post, we demonstrated how you can reduce the storage allocated to an RDS for Oracle DB instance by copying the data to a new RDS for Oracle DB instance using Oracle Data Pump over a database link, and optionally with minimal downtime by using AWS DMS for CDC replication. You can use this methodology for source Oracle databases in Amazon RDS for Oracle, self-managed Oracle databases on Amazon EC2, and Oracle databases hosted outside of the AWS Cloud.

We encourage you to try this solution. For more information about using AWS DMS, see Getting started with AWS Database Migration Service and Best Practices for AWS Database Migration Service.

Reach out with questions or requests in the comments. Happy migrating!

About the authors

Santhosh Kumar Adapa is a Sr. Database Consultant with the AWS Professional Services team at AWS. He works as a database migration specialist to help Amazon customers in design and implementing scalable, secure, performant, and robust database solutions in the cloud.

Bhanu Ganesh Gudivada is a Database Consultant with the AWS Professional Services team at AWS based out of Hyderabad, India and specializes in database migrations. He is helping and enabling customers to build high-available, cost-effective database solutions and migrate their commercial engines to AWS cloud. Curious to learn and implement new technologies around the databases and orchestrate migrations through automation.

Sushant Deshmukh is a Database Consultant with AWS Professional Services. He works with AWS customers and partners to build highly available, scalable and secured database architectures on AWS. Also, helping customers migrate and modernize their databases to AWS Cloud. Outside of work, he enjoys traveling, exploring new places, playing volleyball and spending time with his family and friends.

Jeevan Shetty is a Sr. Database Consultant with the AWS Professional Services Team. He has been supporting and enabling customers to migrate their database from OnPrem data center to AWS cloud and also in migration from commercial database engines to open source database in Amazon.

Read MoreAWS Database Blog