Migrating databases from Oracle to either Amazon Aurora PostgreSQL-Compatible Edition or Amazon Relational Database Service (Amazon RDS) for PostgreSQL presents a unique challenge when migrating JSON data. Oracle CLOB data type can contain both well-formed and invalid JSON, whereas the PostgreSQL JSON and JSONB data type requires JSON data to be correctly formatted according to RFC 8259. Therefore, transferring data stored in Oracle’s CLOB data type to JSON and JSONB data type in Amazon Aurora PostgreSQL-Compatible Edition and Amazon RDS for PostgreSQL requires a meticulous examination of data quality and integrity. This migration is dealing with both well-formed JSON and invalid JSON data present within the Oracle CLOB data type columns. Invalid JSON often has syntax or semantic errors like missing braces, omitted commas, or structurally incorrect formats, and PostgreSQL will raise an error if you try to insert it into JSON and JSONB column. Successfully migrating these tables means addressing and rectifying these data inconsistencies.

In this post, we demonstrate how to migrate JSON data from Oracle to Amazon Aurora PostgreSQL-Compatible Edition or Amazon RDS for PostgreSQL by using AWS Database Migration Service (AWS DMS) in conjunction with a PL/pgSQL procedure. You can identify and isolate the range of stored invalid JSON data in CLOB columns along with their corresponding primary key values using the method mentioned in this post.

You can use AWS DMS to streamline the migration process; however, you must exercise caution when migrating invalid JSON records stored in the source Oracle’s CLOB column, as the PostgreSQL JSON or JSONB data types will reject invalid JSON. Therefore, the PL/pgSQL procedure highlighted in this post will be a complemented solution. AWS DMS streamlines the migration process, capturing changes from the Oracle source database and replicating them in near-real time to the target Aurora PostgreSQL-Compatible Edition or RDS for PostgreSQL database. This ensures a smooth transition by transforming and mapping the data between the two platforms.

Solution overview

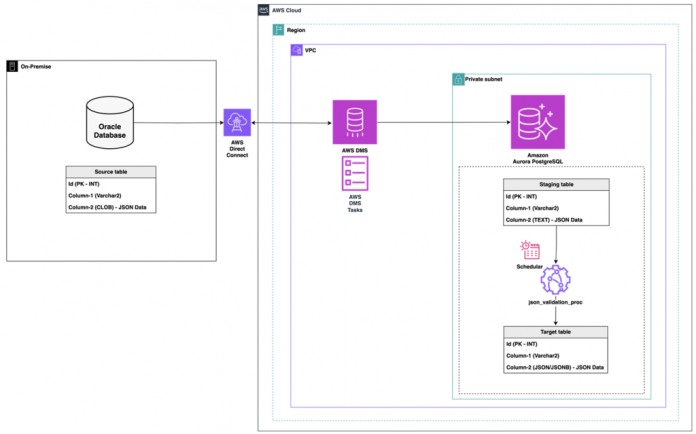

The following architecture depicts the overall solution in migrating JSON data stored in Oracle CLOB data type columns to Aurora PostgreSQL-Compatible Edition or RDS for PostgreSQL JSON or JSONB data type columns.

In this scenario, we assume an application or user populates bulk records into the Oracle table. We create a staging table in Amazon Aurora PostgreSQL-Compatible Edition or Amazon RDS for PostgreSQL where Oracle’s CLOB columns are replaced with PostgreSQL’s TEXT columns. AWS DMS migrates data from the source to staging table, and the PL/pgSQL procedure further migrates data from the staging table to target table by capturing invalid JSON records. Once the initial load onto the staging table is finished and CDC is ongoing, schedule the procedure according to the delta data sizes.

To implement the solution, we complete the following steps:

Set up AWS DMS.

Generate invalid JSON data into the source database. (This step may be skipped if invalid JSON data is being generated through the application).

Create a PL/pgSQL procedure to capture invalid JSON records.

Use the PL/pgSQL procedure.

Validate the JSON data using a PL/pgSQL procedure.

Prerequisites

For a successful data migration from Oracle’s CLOB to Aurora PostgreSQL-Compatible Edition and RDS for PostgreSQL JSON or JSONB columns, you must meet the following prerequisites regarding setting up AWS DMS and having the right source and target database settings.

AWS DMS

Make sure you have AWS DMS set up to meet the following criteria to transfer data from the source table to the staging table:

Ensure that you’ve configured AWS DMS by following the best practices for identity and access management, network for replication instance, and security in AWS DMS. If your architecture uses public traffic, consider implementing VPC endpoints.

Prepare your AWS DMS tasks for the identified tables to handle JSON data migration stored from Oracle’s CLOB to Aurora PostgreSQL-Compatible Edition and RDS for PostgreSQL TEXT. This configuration captures any invalid JSON records from tables using the procedure mentioned in this post.

Secure your data transfers from on premises with AWS Direct Connect or AWS VPN.

Source database

The source database should have table objects that meet the following requirements for the data transfer process:

An Oracle database table with a CLOB column that stores JSON data

The source table should have a primary key

Target database

The target database should have table objects that meet the following requirements to receive data from AWS DMS and run a PL/pgPSQL procedure to capture invalid JSON records:

Create a table in the target database, whether that’s Amazon Aurora PostgreSQL-Compatible Edition or Amazon RDS for PostgreSQL, that matches the metadata of the source table. Although the table name may differ, it’s essential to replace the CLOB column in this table with the TEXT data type. This table serves as a staging area. Its design, using the TEXT data type, allows it to accommodate both valid and invalid JSON records when loaded by AWS DMS.

Create another table in the target database by matching the source table metadata. The table name might not be the same. Nevertheless, replace the CLOB column from that table with JSON or JSONB. This table is used as a final migrated targeted table on Amazon Aurora PostgreSQL-Compatible Edition by having only valid JSON records stored in the JSON or JSONB data type.

Create logging tables like the following example table for filtered invalid JSON records and the procedure to run metadata:

Limitations

This solution helps identify invalid JSON records stored in CLOB columns when migrating from an Oracle database to an Aurora PostgreSQL-Compatible Edition or RDS for PostgreSQL database. However, the procedure used here primarily focuses on copying data from a staging table to a target table based on a primary key. Keep in mind the following limitations:

This procedure can’t handle changes (updates and deletes) in JSON stored CLOB columns during CDC. Alternatively, ensure your application keeps validating real-time JSON data through your application until successful cutover.

This procedure can’t handle correcting invalid JSON to valid JSON.

This solution is helpful only when there is a primary key on the table.

Set up AWS DMS

In these steps, we work with Amazon Aurora PostgreSQL-Compatible Edition, but the same solution works with Amazon RDS for PostgreSQL. Complete the following steps to set up AWS DMS, create AWS DMS tasks for both source and target, create staging tables, run AWS DMS tasks, and migrate the JSON data:

Create an AWS DMS task with Oracle as the source endpoint and Amazon Aurora PostgreSQL-Compatible Edition as the target endpoint with only the table list having JSON data in CLOB columns in the Oracle with all best practices.

Create table mapping during AWS DMS task creation, replacing the staging table’s TEXT columns with CLOB columns from Oracle.

From the preceding snippets of AWS DMS tasks, we have three source tables: SOURCE_TABLE_1, SOURCE_TABLE_2, and SOURCE_TABLE_3. For each table, we configure rules in AWS DMS to replicate to staging tables.

Start the AWS DMS task to migrate the data from on-premises Oracle JSON data in CLOB columns to Amazon Aurora PostgreSQL-Compatible Edition JSON data in TEXT columns. For any issues with AWS DMS tasks, follow the AWS DMS troubleshooting guide.

Generate invalid JSON in the source database

In a real-time environment, the invalid JSON data is received through an application. For this post, we simulate the invalid JSON records using the following queries on the source Oracle database:

Sample 1 – Insert valid JSON records for columns json_column1 and json_column2:

Sample 2 – Insert invalid JSON records for columns json_column1 and json_column2:

Sample 3 – Insert valid and invalid JSON records for columns json_column1 and json_column2:

Create a PL/pgPSQL procedure to capture invalid JSON records

The following procedure json_validation_proc helps show how the data is copied from the staging table to the target table in Amazon Aurora PostgreSQL-Compatible Edition and logs the invalid or skipped JSON records in a separate table so that the application admin or database administrator can validate and fix the data:

This json_validation_proc procedure performs a data transfer from a staging table to a target table. While doing the transfer, it also finds invalid JSON records by isolating them using its primary key and logs entry into the discard_record_log table. This procedure tracks progress, manages data ranges, and handles transfer failure issues that come up during the transfer methods of initial load and delta inserts.

Details of the PL/pgSQL procedure

The PL/pgSQL procedure mentioned the previous section takes several input parameters:

source_table_name – The name of the source table

target_table_name – The name of the target table

range_interval – The number of rows to process at a time

json_columns – An array of JSON column names

primary_key_column – The name of the primary key column

load_type – The type of data load (il for initial load or di for delta inserts) and an optional force parameter

Depending on the load_type provided, the procedure performs different actions:

If the load_type is il (initial load), it creates or checks the existence of two tables: discard_record_log and exec_history_offset. These tables are used for tracking discarded records and maintaining run history offsets.

If the load_type is di (delta inserts), it assumes that a previous initial or delta load has already occurred and proceeds accordingly.

The procedure retrieves the maximum proc_exec_counter from the exec_history_offset table for the specified source_table_name and increments it for the current run. This counter is used for tracking the run history.

If the proc_exec_counter is greater than 1, it retrieves the maximum primary key value (max_rec_id_at_end_of_proc_exec_counter) from the exec_history_offset table for the specified source_table_name. This value represents the maximum primary key value processed in the previous run.

If the load_type is il and proc_exec_counter is greater than 1, the procedure performs checks based on the force parameter. If force is true, it deletes all data from the target_table_name, discard_record_log, and exec_history_offset tables and resets the pre_exec_max_pk_id and proc_exec_counter variables. Otherwise, it raises an exception indicating that an initial load has already occurred.

The procedure generates the necessary queries to fetch column names and JSON column casts for the target table.

It calculates the number of ranges based on the row count of the source table and the specified range_interval.

For each range, the procedure creates a temporary table (temp_pk_table) to store the primary key values within the range.

It loops through each record in the temp_pk_table and generates an INSERT command to copy the corresponding rows from the source table to the target table. The INSERT command includes cast expressions for the JSON columns.

If an INSERT command fails, the failed record is logged in the discard_record_log table.

After processing each range, the procedure commits the changes to see the records in the log table and target table.

Finally, the procedure inserts the run metadata (including tbname, proc_exec_counter, range_interval, num_ranges, and max_rec_id_at_end_of_proc_exec_counter) into the exec_history_offset table.

For the examples provided, each CALL statement invokes the copy_data_proc procedure with different input parameters, including different source table names, target table names, range intervals, JSON column names, primary key column names, and load types. The optional force parameter is also used in some cases.

Running this procedure at regular intervals using any scheduler like OS crontab/scheduler or PostgreSQL pg_cron extension until the scheduled cutover makes sure that the migration process moves forward smoothly with comprehensive visibility into the data quality.

Validate the JSON data using a PL/pgSQL procedure

Let’s dive into some examples to understand how it works.

Example 1

In this example, we initiate the data copying process by calling the copy_data_proc procedure. We specify the source table as staging_table and the destination table as target_table. The procedure is configured to process 100 rows at a time using the range_interval parameter. We select the columns json_column1 and json_column2 to be copied from the source table. The rows are ordered based on the id column, and the il option indicates ascending order. Additionally, the true parameter signifies that the target table should be truncated before copying the data.

See the following code:

Example 2

In this example, we perform a similar data copy operation from staging_table1 to target_table1. However, we omit the truncation step by excluding the last parameter. This allows us to append the copied data to the existing records in the target table.

See the following code:

Example 3

In this example, we again copy data from staging_table2 to target_table2. However, we modify the sorting order by specifying di for descending order based on the id column. This makes sure that the data is copied in reverse order compared to the previous examples.

See the following code:

Example 4

In this example, we initiate the data copying process by calling the copy_data_proc procedure. We specify the source table as staging_table and the destination table as target_table. The procedure is configured to process 100 rows at a time using the range_interval parameter. We select the columns json_column1 and json_column2 to be copied from the source table. The rows are ordered based on the id column, and the il option indicates ascending order. Consider a scenario where you have the data in the staging_table, which includes columns json_column1 and json_column2, which are expected to be valid JSON objects. However, data inconsistencies or unexpected values may cause some rows to contain invalid JSON objects, because these records keep replicating from the source Oracle database through AWS DMS tasks.

Here’s an example of how the system handles such cases and logs them in the discard_record_log table:

Suppose with the preceding batch an invalid JSON format is detected in one of the JSON columns. The procedure handles this situation as follows:

Details of the error, including the table name (staging_table), the range of records processed, a failure message, the discarded record’s primary key value, and the process run counter, are logged.

The invalid record gets discarded from the target_table, because target_table columns are of data type JSON or JSONB.

After processing the batch and logging the error, the procedure commits the changes to the tables.

The discard_record_log table is instrumental in tracking and auditing data integrity until cutover.

Clean up

With the solution mentioned in this post, we’ve successfully isolated invalid JSON records from the discard_record_log table. This process continues until the successful cutover. To clean up, we must remove AWS DMS resources, staging tables, and the PL/pgPSQL procedure from the target PostgreSQL along with its scheduled runs.

Conclusion

In this post, we showed how you can migrate JSON data from Oracle to Amazon Aurora PostgreSQL-Compatible Edition and Amazon RDS for PostgreSQL. We tackled the challenges associated with migrating JSON data stored in Oracle’s CLOB data type columns and offered a solution using AWS DMS in tandem with a PL/pgSQL procedure.

The solution used AWS DMS to capture and replicate changes, which ensures a seamless migration process. The PL/pgSQL procedure was used to identify and isolate invalid JSON records, maintaining data integrity during the migration.

The architecture diagram provided a visual overview of the solution, illustrating the migration from Oracle CLOB data type columns to Amazon Aurora PostgreSQL-Compatible Edition and Amazon RDS for PostgreSQL JSON or JSONB data type columns.

We showcased an effective approach to migrate JSON data while maintaining data quality and integrity. Organizations can achieve a seamless migration process by following the outlined steps. If you have any further questions, please leave a comment.

About the authors

Ramu Jagini is a distinguished Lead Database Consultant specializing in complex database migrations and scalable solutions within the secure AWS Cloud environment. With extensive experience and a focus on both heterogeneous and homogeneous migrations, he seamlessly transfers diverse database systems to AWS while prioritizing performance, reliability, and data security.

Sumana Yanamandra is a distinguished Lead Database Consultant with Amazon Web Services. She works with customers to build scalable, highly available, and secure solutions in the AWS Cloud. Her focus area is homogeneous and heterogeneous database migrations.

Shivam Rastogi is a distinguished Lead Database Consultant with a specialization in cloud-native database architectures with scalable solutions. He focuses on heterogeneous and homogeneous migrations for seamless transition to the AWS Cloud.

Read MoreAWS Database Blog