As microservice-based architectures evolve, organizations are increasingly adopting purpose-built databases. Businesses often need guidance on which cloud services and solutions best fit their needs, as well as a plan for the migration itself. In a heterogeneous database migration, a common challenge is mapping attributes from a traditional relational database management system (RDBMS) to a NoSQL data model. One example is restructuring the table and column attributes of a self-managed SQL Server database to fit Amazon DynamoDB access patterns.

The key motivation for migrating to DynamoDB is to reduce costs while simplifying operations and improving performance at scale. DynamoDB enables you to free up resources to contribute to growing top-line revenue and value for your customers. Its serverless architecture enables you to save costs with capabilities like pay-per-request, scale-to-zero, and no up-front costs. On the operational side, not having to deal with scaling resources, maintenance windows, or major version upgrades saves significant operational hours and removes undifferentiated heavy lifting. Overall, DynamoDB provides a cost-optimized approach to build innovative and disruptive solutions that provide differentiated customer experiences.

Every application has specific data access requirements that fulfill its business use case, whether it runs on NoSQL, a traditional SQL (RDBMS) database, or both. Application workloads against a database server are broadly read-heavy or write-heavy. In this post, we discuss access patterns for a write-heavy workload. We walk through the design of the access patterns. We then migrate a conventional SQL Server table (relational) to DynamoDB (non-relational) for a microservice application using AWS Database Migration Service (AWS DMS).

You can apply this solution to any microservice application that needs to move a monolithic RDBMS table, small or large, to DynamoDB (NoSQL).

Solution overview

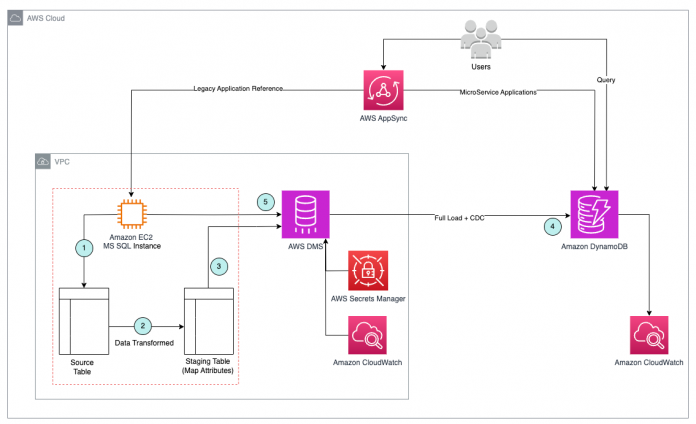

Our solution involves using a reference table created on a self-managed SQL Server on Amazon Elastic Compute Cloud (Amazon EC2). The reference table is built by analyzing the data access patterns and performing attribute mapping in DynamoDB. After the design pattern is established, we provision the DynamoDB table with the proper primary key and sort key to map the reference table. We then use AWS DMS to migrate data and set up continuous replication from the source endpoint mapped to this reference table in SQL Server to the target DynamoDB table.

The following diagram illustrates the architecture.

The solution workflow consists of the following steps:

Connect to your self-managed SQL Server instance and view the relational source tables that will be moved to a DynamoDB table.

The source table follows a traditional relational design and doesn't match the DynamoDB key attributes. We therefore use custom scripts to transform its data into a new staging table in SQL Server whose columns do match the DynamoDB design. The staging table is populated in one of two ways:

One-time load (full load) – You can run the ad hoc script to populate the staging table if you only want to do a one-time data transfer from the source table.

Change data capture (CDC) – To track real-time changes on the source table, a native SQL Server AFTER INSERT trigger on the source table inserts the changes into the staging table. This post covers only INSERT operations on the source table. If your use case also requires handling UPDATE or DELETE operations, create the corresponding triggers.

The staging table is mapped as the AWS DMS source table in SQL Server.

Create a table in DynamoDB with a partition key and sort key that correspond to the attributes on the SQL staging table.

Use AWS DMS to migrate data from the source SQL Server database to the target DynamoDB table. To migrate the data, map the SQL Server staging table as the source and the DynamoDB table as the target. In our scenario, we use both full load and CDC. The native SQL triggers keep the staging table current, and AWS DMS replicates those changes to DynamoDB in near real time.

We use AWS Secrets Manager for storing the SQL credentials in AWS DMS and use Amazon CloudWatch for monitoring the AWS DMS task.

The steps to implement the solution are as follows:

Configure an AWS Identity and Access Management (IAM) policy and roles.

Configure DynamoDB access patterns and a global secondary index (GSI).

Create a DynamoDB table.

Configure SQL Server table mapping.

Migrate data using AWS DMS.

Verify the migrated data in DynamoDB.

Monitor the AWS DMS migration task.

Prerequisites

To follow along with the post, you must have the following prerequisites:

A self-managed SQL Server instance on Amazon EC2 or on premises.

IAM role privileges to work with DynamoDB.

AWS DMS set up with a replication instance. Refer to Getting started with AWS Database Migration Service for more information.

The AWS Command Line Interface (AWS CLI). To get started, refer to Configure the AWS CLI.

Because this post explores access patterns and attribute mapping to DynamoDB (NoSQL) from SQL Server (RDBMS), a basic understanding of DynamoDB core concepts and its design patterns is needed. Additionally, knowledge of AWS DMS with reference to configuring the SQL Server source and DynamoDB target is recommended.

Configure IAM policy and roles

Complete the following steps to configure an IAM policy and roles for DynamoDB:

On the IAM console, choose Policies in the navigation pane.

Choose Create policy.

Select JSON and enter a policy that grants AWS DMS the DynamoDB permissions it needs on the target table. In the table ARN, replace the Region, account number, and DynamoDB table name with your own values.

In the navigation pane, choose Roles.

Choose Create role.

Select AWS DMS, then choose Next: Permissions.

Select the policy you created.

Assign a role name and then choose Create role.
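The following Python is a minimal sketch of the two policy documents used in the steps above. The first is a permissions policy modeled on the example in the AWS DMS documentation for a DynamoDB target. The second is the trust policy that lets AWS DMS assume the role. The Region, account ID, and table name are placeholders. Treat the action list as an assumption to check against the current AWS DMS documentation.

```python
import json

# Placeholder values: replace with your own Region, account ID, and table name.
REGION = "us-east-1"
ACCOUNT_ID = "111122223333"
TABLE_NAME = "DDB_StatusHistory_Logs"


def table_arn(name: str) -> str:
    """Build a DynamoDB table ARN for the configured Region and account."""
    return f"arn:aws:dynamodb:{REGION}:{ACCOUNT_ID}:table/{name}"


# Permissions policy for the AWS DMS service role. The two awsdms_* tables
# are exception tables that AWS DMS can create in the target account.
permissions_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "dynamodb:PutItem",
                "dynamodb:CreateTable",
                "dynamodb:DescribeTable",
                "dynamodb:DeleteTable",
                "dynamodb:DeleteItem",
                "dynamodb:UpdateItem",
            ],
            "Resource": [
                table_arn(TABLE_NAME),
                table_arn("awsdms_apply_exceptions"),
                table_arn("awsdms_full_load_exceptions"),
            ],
        },
        {"Effect": "Allow", "Action": ["dynamodb:ListTables"], "Resource": "*"},
    ],
}

# Trust policy letting AWS DMS assume the role; selecting AWS DMS as the
# trusted service in the console produces an equivalent document.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "dms.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(permissions_policy, indent=2))
    print(json.dumps(trust_policy, indent=2))
```

The printed permissions policy can be pasted into the JSON editor in step 3.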

Configure DynamoDB access patterns and a GSI

DynamoDB tables are typically designed around a unique primary key that satisfies the business or application access patterns. A composite primary key combines a partition key and a sort key.

Access patterns

In the current use case, we use the SQL table (tbl_StatusHistory_log) as the source to be moved to DynamoDB. Our workload is dominated by writes (INSERTs), so we base the partition key on the write access pattern rather than on read patterns. The following screenshot shows the source table columns with RowID as the primary key.

RowID is only a surrogate identifier and doesn't reflect how the microservice application looks up data on DynamoDB. We therefore need a key pattern that does. The partition key combines Usr_ID, account_id, and silo_id to give more meaningful access patterns on DynamoDB. This combination isn't necessarily unique, however, because tbl_StatusHistory_log is a history log and can hold many rows for the same user, account, and silo.

To make the DynamoDB primary key unique, we combine the partition key with a sort key. Because RowID is already unique in the source table, we use it as the sort key. The following table shows how we can extend the access patterns to match the application's query arguments while preserving uniqueness. We append additional columns (Status_Code, Reason_Code, and Status_Date) to the partition key so that the application can also search on those attributes.

| Input arguments | Returned attributes | Frequency | Operation | Table |
|---|---|---|---|---|
| Usr_ID, Account_ID, Silo_ID | All attributes | More | Insert/Query | Base |
| Usr_ID, Account_ID, Silo_ID, Status_Code | All attributes | More | Insert/Query | Base |
| Usr_ID, Account_ID, Silo_ID, Reason_Code | All attributes | More | Insert/Query | Base |
| Usr_ID, Account_ID, Silo_ID, Status_Date | All attributes | More | Insert/Query | Base |
| Usr_ID, Silo_ID, Status_Code | All attributes | Less | Query | GSI |
| Usr_ID, Silo_ID, Reason_Code | All attributes | Less | Query | GSI |
| Usr_ID, Silo_ID, Status_Date | All attributes | Less | Query | GSI |

The table summarizes the following information:

Input arguments – Ideal scenarios on how an application can provide input arguments to DynamoDB for querying

Returned attributes – Whether DynamoDB returns all attributes or only specific attributes (based on the application query)

Frequency – How often application requests come in for the arguments

DynamoDB operation – The most common use cases for application requests

Table – The table options (base or GSI)
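To make the key design concrete, here is a minimal Python sketch that expands one source row into one item per access pattern. It follows the PK and GSI-PK formats described in this post. The column types and the choice to store SK as a string are assumptions.

```python
from dataclasses import dataclass, asdict


@dataclass
class StatusHistoryRow:
    """One row of tbl_StatusHistory_log (column types are assumptions)."""

    RowID: int
    Usr_ID: str
    Account_ID: str
    Silo_ID: str
    Status_Code: int
    Reason_Code: str
    Status_Date: str  # ISO date, for example "2023-06-02"


def staging_items(row: StatusHistoryRow) -> list:
    """Expand one source row into one item per access pattern.

    PK includes Account_ID (base-table patterns); GSI-PK omits it (GSI
    patterns). SK is the source RowID, so each (PK, SK) pair stays unique.
    """
    base_pk = f"{row.Usr_ID}#{row.Account_ID}#{row.Silo_ID}"
    base_gsi_pk = f"{row.Usr_ID}#{row.Silo_ID}"
    # "" is the plain pattern; the others add one optional query argument.
    suffixes = [
        "",
        f"#SC-{row.Status_Code}",
        f"#RC-{row.Reason_Code}",
        f"#SD-{row.Status_Date}",
    ]
    attributes = asdict(row)  # non-key attributes are copied unchanged
    return [
        {"PK": base_pk + s, "SK": str(row.RowID), "GSI-PK": base_gsi_pk + s, **attributes}
        for s in suffixes
    ]


if __name__ == "__main__":
    sample = StatusHistoryRow(1, "U1", "A1", "S1", 200, "RC300", "2023-06-02")
    for item in staging_items(sample):
        print(item["PK"], item["SK"], item["GSI-PK"])
```

Each source row becomes four items that share the same SK. They differ in PK, so no two items collide even when one user has many rows for the same account and silo.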

The following screenshot provides a visual depiction of the partition key and sort key, which make up the primary key. Additionally, the Other attributes section lists all columns from the original source that will be part of the results output in DynamoDB.

Global secondary index

We add the GSI-PK attribute to the base table to serve the read access patterns that don't specify Account_ID. A GSI can span all of the data in the base table, across all partitions. It can therefore serve queries keyed on a different attribute than the base table's partition key. GSI-PK combines Usr_ID and Silo_ID; it resembles the base table's partition key but omits Account_ID. Another benefit of a GSI is that you can choose which attributes to project into it, so query results don't have to include every attribute. The design model for this table shows the GSI partition key and sort key, along with the projected attributes, as an example.
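On the read side, each row of the access pattern table maps to a single DynamoDB Query. The following Python sketch builds the low-level Query request parameters. These could be passed to `aws dynamodb query --cli-input-json` or to an SDK client. The index name GSI-PK-index is a hypothetical name that must match whatever name you give the GSI.

```python
import json

TABLE_NAME = "DDB_StatusHistory_Logs"
GSI_NAME = "GSI-PK-index"  # hypothetical index name


def build_query(usr_id, silo_id, account_id=None, suffix=""):
    """Return Query request parameters for one access pattern.

    With account_id, the base table is queried on PK; without it, the GSI is
    queried on GSI-PK. suffix is an optional "#SC-...", "#RC-...", or "#SD-..." part.
    """
    if account_id is not None:
        key_attr, key_value = "PK", f"{usr_id}#{account_id}#{silo_id}{suffix}"
    else:
        key_attr, key_value = "GSI-PK", f"{usr_id}#{silo_id}{suffix}"
    params = {
        "TableName": TABLE_NAME,
        # A name placeholder is required because "GSI-PK" contains a hyphen.
        "KeyConditionExpression": "#k = :v",
        "ExpressionAttributeNames": {"#k": key_attr},
        "ExpressionAttributeValues": {":v": {"S": key_value}},
    }
    if account_id is None:
        params["IndexName"] = GSI_NAME
    return params


if __name__ == "__main__":
    # Less frequent pattern: Usr_ID, Silo_ID, Status_Code served by the GSI.
    print(json.dumps(build_query("U1", "S1", suffix="#SC-200"), indent=2))
```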

Create a DynamoDB table

Create the DynamoDB table with the AWS CLI create-table command. We call the table DDB_StatusHistory_Logs and use PK and SK as the attribute names for the partition key and sort key, respectively.

After you run the command, wait for the table status to change to ACTIVE. You can verify that the table was created by running the AWS CLI describe-table command.
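As a minimal sketch, the following Python builds a create-table request body for use with `aws dynamodb create-table --cli-input-json`. It also prints the follow-up CLI commands. Several details are assumptions rather than confirmed settings from the post:

- All key attributes are strings.
- Billing is on demand.
- The GSI is named GSI-PK-index, reuses SK as its sort key, and projects all attributes.

```python
import json

TABLE_NAME = "DDB_StatusHistory_Logs"

# Request body for `aws dynamodb create-table --cli-input-json file://create_table.json`.
create_table_request = {
    "TableName": TABLE_NAME,
    "AttributeDefinitions": [
        {"AttributeName": "PK", "AttributeType": "S"},
        {"AttributeName": "SK", "AttributeType": "S"},
        {"AttributeName": "GSI-PK", "AttributeType": "S"},
    ],
    "KeySchema": [
        {"AttributeName": "PK", "KeyType": "HASH"},   # partition key
        {"AttributeName": "SK", "KeyType": "RANGE"},  # sort key
    ],
    "GlobalSecondaryIndexes": [
        {
            "IndexName": "GSI-PK-index",  # hypothetical index name
            "KeySchema": [
                {"AttributeName": "GSI-PK", "KeyType": "HASH"},
                {"AttributeName": "SK", "KeyType": "RANGE"},
            ],
            "Projection": {"ProjectionType": "ALL"},
        }
    ],
    "BillingMode": "PAY_PER_REQUEST",  # assumption: on-demand capacity
}

if __name__ == "__main__":
    # Save this output as create_table.json, then run the printed commands.
    print(json.dumps(create_table_request, indent=2))
    print("aws dynamodb create-table --cli-input-json file://create_table.json")
    print(f"aws dynamodb wait table-exists --table-name {TABLE_NAME}")
    print(f"aws dynamodb describe-table --table-name {TABLE_NAME} --query Table.TableStatus")
```

Every attribute used in a key schema must appear in AttributeDefinitions. Conversely, non-key attributes such as Status_Code are not declared here at all.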

Configure SQL Server table mapping

In this section, we walk through the steps to configure SQL Server table mapping. We create the source and staging SQL tables, populate the source table for testing, create a script for a one-time load into the staging table, and create a SQL table INSERT trigger.

Create the source and staging SQL tables

Our reference example uses two SQL Server tables. First, we create a new database called dmsload. In it, we create the source table tbl_StatusHistory_log, which holds the base data for the AWS DMS load. We also create the staging table DDB_StatusHistory_DMSLoad, which AWS DMS uses as its reference table to map the key attributes defined in DynamoDB.

Because the staging table is the data feed to DynamoDB, the non-key attributes are copied unchanged from the base table. Three additional key columns (PK, SK, and GSI-PK) form the access patterns on DynamoDB.
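The post's DDL targets SQL Server, and the creation of the dmsload database has no SQLite equivalent. To show the shape of the two tables in a form you can run locally, here is a SQLite stand-in using Python's built-in sqlite3 module. The column types are assumptions; T-SQL would use types such as INT and VARCHAR and would bracket the hyphenated name as [GSI-PK].

```python
import sqlite3

# SQLite stand-in for the SQL Server DDL; column types are assumptions.
SCHEMA = """
CREATE TABLE tbl_StatusHistory_log (
    RowID       INTEGER PRIMARY KEY,
    Usr_ID      TEXT NOT NULL,
    Account_ID  TEXT NOT NULL,
    Silo_ID     TEXT NOT NULL,
    Status_Code INTEGER,
    Reason_Code TEXT,
    Status_Date TEXT
);

CREATE TABLE DDB_StatusHistory_DMSLoad (
    PK          TEXT NOT NULL,   -- DynamoDB partition key
    SK          TEXT NOT NULL,   -- DynamoDB sort key (source RowID)
    "GSI-PK"    TEXT NOT NULL,   -- GSI partition key
    RowID       INTEGER,
    Usr_ID      TEXT,
    Account_ID  TEXT,
    Silo_ID     TEXT,
    Status_Code INTEGER,
    Reason_Code TEXT,
    Status_Date TEXT,
    PRIMARY KEY (PK, SK)         -- mirrors DynamoDB item uniqueness
);
"""


def create_tables(conn: sqlite3.Connection) -> None:
    """Create the source and staging tables in the given database."""
    conn.executescript(SCHEMA)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_tables(conn)
    print([r[1] for r in conn.execute("PRAGMA table_info(DDB_StatusHistory_DMSLoad)")])
```

The composite primary key on the staging table is a design choice. It makes the staging table reject the same (PK, SK) collisions that DynamoDB would treat as overwrites.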

Populate the source table for testing

Populate the source table (tbl_StatusHistory_log) on SQL Server with test data so that you can validate the transformation and the migration.
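A test-data load could look like the following sketch. It uses SQLite rather than SQL Server, and the value ranges (user, account, and silo IDs, status and reason codes, and dates) are hypothetical. A fixed random seed keeps the data repeatable between runs.

```python
import random
import sqlite3
from datetime import date, timedelta


def populate_source(conn: sqlite3.Connection, n_rows: int = 100, seed: int = 42) -> int:
    """Insert n_rows synthetic rows into tbl_StatusHistory_log (SQLite stand-in)."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS tbl_StatusHistory_log (
               RowID INTEGER PRIMARY KEY, Usr_ID TEXT, Account_ID TEXT,
               Silo_ID TEXT, Status_Code INTEGER, Reason_Code TEXT, Status_Date TEXT)"""
    )
    rng = random.Random(seed)  # fixed seed for repeatable test data
    start = date(2023, 6, 1)
    rows = [
        (
            i,                                   # unique RowID
            f"U{rng.randint(1, 10)}",            # hypothetical user IDs
            f"A{rng.randint(1, 3)}",             # hypothetical account IDs
            f"S{rng.randint(1, 2)}",             # hypothetical silo IDs
            rng.choice([200, 300, 400]),         # hypothetical status codes
            rng.choice(["RC100", "RC200", "RC300"]),
            (start + timedelta(days=rng.randint(0, 29))).isoformat(),
        )
        for i in range(1, n_rows + 1)
    ]
    conn.executemany("INSERT INTO tbl_StatusHistory_log VALUES (?, ?, ?, ?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(populate_source(conn, 20), "rows inserted")
```

The small ID ranges are deliberate. They produce repeated user, account, and silo combinations, which exercises the case where the partition key alone is not unique.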

Create a script for a one-time load into the staging table

We use an ad hoc script to populate the staging table (DDB_StatusHistory_DMSLoad) with data that matches the DynamoDB access patterns. The script selects from the source table (tbl_StatusHistory_log) once per access pattern and combines the results with a UNION clause.

The output from the staging table (DDB_StatusHistory_DMSLoad) shows the data transformed to match the access patterns on DynamoDB. Note how the PK and GSI-PK values are formed.

| PK | GSI-PK |
|---|---|
| Usr_ID#Account_ID#Silo_id | Usr_ID#Silo_id |
| Usr_ID#Account_ID#Silo_id#SC-200 | Usr_ID#Silo_id#SC-200 |
| Usr_ID#Account_ID#Silo_id#RC-RC300 | Usr_ID#Silo_id#RC-RC300 |
| Usr_ID#Account_ID#Silo_id#SD-2023-06-02 | Usr_ID#Silo_id#SD-2023-06-02 |
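The one-time load can be sketched as a single INSERT ... SELECT whose SELECTs, one per access pattern, are stacked with UNION ALL. This is a SQLite stand-in, not the post's T-SQL script, and the table definitions repeat the assumed types from earlier. The post's script uses UNION. UNION ALL gives the same rows here, because the four patterns never produce the same (PK, SK) pair. It also skips the duplicate-elimination step.

```python
import sqlite3

COLS = "RowID, Usr_ID, Account_ID, Silo_ID, Status_Code, Reason_Code, Status_Date"

# Minimal SQLite versions of the source and staging tables (types assumed).
DDL = """
CREATE TABLE tbl_StatusHistory_log (
    RowID INTEGER PRIMARY KEY, Usr_ID TEXT, Account_ID TEXT, Silo_ID TEXT,
    Status_Code INTEGER, Reason_Code TEXT, Status_Date TEXT);
CREATE TABLE DDB_StatusHistory_DMSLoad (
    PK TEXT NOT NULL, SK TEXT NOT NULL, "GSI-PK" TEXT NOT NULL,
    RowID INTEGER, Usr_ID TEXT, Account_ID TEXT, Silo_ID TEXT,
    Status_Code INTEGER, Reason_Code TEXT, Status_Date TEXT,
    PRIMARY KEY (PK, SK));
"""

# One suffix expression per access pattern ('' is the plain pattern).
SUFFIXES = ["''", "'#SC-' || Status_Code", "'#RC-' || Reason_Code", "'#SD-' || Status_Date"]


def full_load_sql() -> str:
    """Build INSERT ... SELECT ... UNION ALL SELECT ... covering all patterns."""
    selects = [
        f"SELECT Usr_ID || '#' || Account_ID || '#' || Silo_ID || {s}, "
        f"CAST(RowID AS TEXT), Usr_ID || '#' || Silo_ID || {s}, {COLS} "
        "FROM tbl_StatusHistory_log"
        for s in SUFFIXES
    ]
    return (
        f'INSERT INTO DDB_StatusHistory_DMSLoad (PK, SK, "GSI-PK", {COLS}) '
        + " UNION ALL ".join(selects)
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(DDL)
    conn.execute(
        "INSERT INTO tbl_StatusHistory_log VALUES (1, 'U1', 'A1', 'S1', 200, 'RC300', '2023-06-02')"
    )
    conn.execute(full_load_sql())
    for pk, sk, gsi_pk in conn.execute('SELECT PK, SK, "GSI-PK" FROM DDB_StatusHistory_DMSLoad'):
        print(pk, sk, gsi_pk)
```

For the sample row, the generated PK values follow the same four formats as the table above.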

Create a SQL table (INSERT) trigger

Your applications might not tolerate extended downtime, or you might need to track real-time changes on the source table. In either case, create a native SQL Server AFTER INSERT trigger on the source table (tbl_StatusHistory_log) that inserts the changes into the staging table (DDB_StatusHistory_DMSLoad). The trigger follows the same access-pattern approach as the full load (ad hoc) script. After the migration through AWS DMS is complete, you can drop the trigger from the source table in SQL Server.

To test the trigger, run a sample INSERT statement on the source table (tbl_StatusHistory_log). The trigger writes the corresponding access-pattern rows to the staging table (DDB_StatusHistory_DMSLoad).
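The trigger logic can be sketched with a SQLite AFTER INSERT trigger that writes one staging row per access pattern. The trigger name is hypothetical, and the table definitions repeat the assumed types used earlier. The mechanics also differ between the two engines:

- SQLite fires the trigger FOR EACH ROW and exposes the new row as NEW.
- A SQL Server trigger fires once per statement and reads the new rows from the inserted pseudo-table.

A T-SQL version would therefore select from inserted instead.

```python
import sqlite3

COLS = "RowID, Usr_ID, Account_ID, Silo_ID, Status_Code, Reason_Code, Status_Date"

DDL = """
CREATE TABLE tbl_StatusHistory_log (
    RowID INTEGER PRIMARY KEY, Usr_ID TEXT, Account_ID TEXT, Silo_ID TEXT,
    Status_Code INTEGER, Reason_Code TEXT, Status_Date TEXT);
CREATE TABLE DDB_StatusHistory_DMSLoad (
    PK TEXT NOT NULL, SK TEXT NOT NULL, "GSI-PK" TEXT NOT NULL,
    RowID INTEGER, Usr_ID TEXT, Account_ID TEXT, Silo_ID TEXT,
    Status_Code INTEGER, Reason_Code TEXT, Status_Date TEXT,
    PRIMARY KEY (PK, SK));
"""

# One suffix expression per access pattern, built from the inserted row.
SUFFIXES = ["''", "'#SC-' || NEW.Status_Code", "'#RC-' || NEW.Reason_Code", "'#SD-' || NEW.Status_Date"]


def trigger_sql() -> str:
    """Build an AFTER INSERT trigger that writes one staging row per pattern."""
    new_cols = ", ".join(f"NEW.{c.strip()}" for c in COLS.split(","))
    rows = ",\n        ".join(
        f"(NEW.Usr_ID || '#' || NEW.Account_ID || '#' || NEW.Silo_ID || {s}, "
        f"CAST(NEW.RowID AS TEXT), NEW.Usr_ID || '#' || NEW.Silo_ID || {s}, {new_cols})"
        for s in SUFFIXES
    )
    return f"""
CREATE TRIGGER trg_StatusHistory_log_AfterInsert
AFTER INSERT ON tbl_StatusHistory_log
FOR EACH ROW
BEGIN
    INSERT INTO DDB_StatusHistory_DMSLoad (PK, SK, "GSI-PK", {COLS})
    VALUES
        {rows};
END;
"""


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(DDL + trigger_sql())
    # Sample INSERT on the source table; the trigger fills the staging table.
    conn.execute(
        "INSERT INTO tbl_StatusHistory_log VALUES (7, 'U2', 'A9', 'S3', 300, 'RC100', '2023-06-05')"
    )
    for row in conn.execute('SELECT PK, SK, "GSI-PK" FROM DDB_StatusHistory_DMSLoad'):
        print(row)
```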

You can find the output on the staging table from the insert on the source table, as shown in the following screenshot.

Migrate the data using AWS DMS

Now that we have the SQL Server staging table set up, you can migrate the data from the source SQL Server staging table to the target DynamoDB table.

To start working with AWS DMS, create the AWS DMS replication instance. This instance must have enough memory and processing power to migrate data from the source database to the target database. For information on choosing an appropriate instance, refer to Working with an AWS DMS replication instance.

Create the AWS DMS endpoints

Next, create AWS DMS endpoints for the source and target database. AWS DMS endpoints provide the connection, data store type, and location information about your data store.

Source endpoint

Complete the following steps to create your source endpoint:

On the AWS DMS console, create a source endpoint.

For Endpoint identifier, enter a name.

For Source engine, choose Microsoft SQL Server.

For Access to endpoint, select Provide access information manually.

For Server name, enter the SQL Server hostname.

For Port, enter the port number being used.

Enter the appropriate user name and password.

Enter the appropriate database name.

Test the endpoint, then complete endpoint creation.

Target endpoint

Repeat the previous steps with the following parameters for the target endpoint:

For Endpoint identifier, enter a name.

For Target engine, choose Amazon DynamoDB.

For Amazon Resource Name (ARN) for service access role, enter the ARN of the IAM role you created earlier.

Test the endpoint, then complete endpoint creation.
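If you prefer scripting the endpoints instead of using the console, the following Python sketch builds parameters for the AWS DMS CreateEndpoint API. You can use them with `aws dms create-endpoint --cli-input-json` or an SDK. The endpoint identifiers and ARNs are hypothetical. The source endpoint reads its connection details from the Secrets Manager secret mentioned earlier, which is assumed to hold the host, port, user name, and password. Check the parameter names against the AWS DMS API reference before use.

```python
import json

# Hypothetical ARNs; replace with your own.
SECRET_ARN = "arn:aws:secretsmanager:us-east-1:111122223333:secret:sqlserver-dmsload-AbCdEf"
SECRETS_ROLE_ARN = "arn:aws:iam::111122223333:role/dms-secrets-access"
DYNAMODB_ROLE_ARN = "arn:aws:iam::111122223333:role/dms-dynamodb-access"

# Source: SQL Server, with credentials resolved from Secrets Manager.
source_endpoint = {
    "EndpointIdentifier": "sqlserver-dmsload-source",
    "EndpointType": "source",
    "EngineName": "sqlserver",
    "MicrosoftSQLServerSettings": {
        "DatabaseName": "dmsload",
        "SecretsManagerSecretId": SECRET_ARN,
        "SecretsManagerAccessRoleArn": SECRETS_ROLE_ARN,
    },
}

# Target: DynamoDB, using the service access role created earlier.
target_endpoint = {
    "EndpointIdentifier": "dynamodb-statushistory-target",
    "EndpointType": "target",
    "EngineName": "dynamodb",
    "DynamoDbSettings": {"ServiceAccessRoleArn": DYNAMODB_ROLE_ARN},
}

if __name__ == "__main__":
    for endpoint in (source_endpoint, target_endpoint):
        print(json.dumps(endpoint, indent=2))
```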

Create the AWS DMS migration task

An AWS DMS task is where all the work happens. This is where you configure what database objects to migrate, logging requirements, error handling, and so on. Complete the following steps to create your task:

On the AWS DMS console, create a new migration task.

For Task identifier, enter an identifiable name.

For Replication instance, choose the replication instance you created.

For Source database endpoint, choose the SQL Server endpoint you created.

For Target database endpoint, choose the DynamoDB endpoint you created.

For Migration type, choose Migrate existing data and replicate ongoing changes.

Enable CloudWatch Logs under the task settings so you can debug issues.

Under Table mappings, for Editing mode, select JSON editor.

Enter the table mappings as JSON. The mapping has two rules:

- A selection rule that selects the SQL staging table (DDB_StatusHistory_DMSLoad) as the source.
- An object-mapping rule that maps the staging table to the target DynamoDB table (DDB_StatusHistory_Logs), with PK (partition key) and SK (sort key) as the key mapping parameters.

In our scenario, the target key attributes take their values from the staging table's key columns through the ${PK} and ${SK} substitutions.

Leave everything else as default and choose Create task.
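The table mapping described in the task steps above can be sketched as follows. The rule layout follows the AWS DMS object-mapping format for DynamoDB targets. The schema name dbo is an assumption, as is storing both keys as strings. The sketch also prints an equivalent create-replication-task CLI command; its ARNs are placeholders to replace with your own.

```python
import json
import shlex

table_mappings = {
    "rules": [
        {   # Select the staging table as the only source object.
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "1",
            "object-locator": {"schema-name": "dbo", "table-name": "DDB_StatusHistory_DMSLoad"},
            "rule-action": "include",
        },
        {   # Map each staging row to one DynamoDB item.
            "rule-type": "object-mapping",
            "rule-id": "2",
            "rule-name": "2",
            "rule-action": "map-record-to-record",
            "object-locator": {"schema-name": "dbo", "table-name": "DDB_StatusHistory_DMSLoad"},
            "target-table-name": "DDB_StatusHistory_Logs",
            "mapping-parameters": {
                "partition-key-name": "PK",
                "sort-key-name": "SK",
                "attribute-mappings": [
                    {"target-attribute-name": "PK", "attribute-type": "scalar",
                     "attribute-sub-type": "string", "value": "${PK}"},
                    {"target-attribute-name": "SK", "attribute-type": "scalar",
                     "attribute-sub-type": "string", "value": "${SK}"},
                ],
            },
        },
    ]
}

# Placeholder ARNs; replace with the ARNs of your own resources.
create_task_cmd = [
    "aws", "dms", "create-replication-task",
    "--replication-task-identifier", "sqlserver-to-dynamodb-statushistory",
    "--source-endpoint-arn", "<source-endpoint-arn>",
    "--target-endpoint-arn", "<target-endpoint-arn>",
    "--replication-instance-arn", "<replication-instance-arn>",
    # Console equivalent: "Migrate existing data and replicate ongoing changes".
    "--migration-type", "full-load-and-cdc",
    "--table-mappings", "file://table-mappings.json",
]

if __name__ == "__main__":
    print(json.dumps(table_mappings, indent=2))
    print(shlex.join(create_task_cmd))
```

With map-record-to-record, AWS DMS also copies the remaining staging columns to the item as attributes. That is how the non-key attributes reach DynamoDB.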

As soon as the task is created, it’s in Creating status. After a few seconds, it changes to Ready status. The migration (and CDC if enabled) starts automatically.

Verify the migrated data in DynamoDB

After the load is complete, navigate to the Table statistics tab. The following screenshot shows the full load was completed successfully.

You can also verify CDC by inserting records into the base table dbo.tbl_StatusHistory_log on the SQL Server database.

In our test, the records were inserted successfully into both the base table tbl_StatusHistory_log and the staging table DDB_StatusHistory_DMSLoad.

Navigate to the Table statistics tab as shown in the following screenshot. Here, the Total rows and Inserts counts have increased.

Now go to DynamoDB and check if the records were inserted successfully and correctly.

In our test, querying the table with the AWS CLI confirmed that the new item was inserted into the DynamoDB table.
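To check items programmatically rather than by eye, you can save the JSON output of a query to a file. An example command is `aws dynamodb query --table-name DDB_StatusHistory_Logs --key-condition-expression "PK = :pk" --expression-attribute-values '{":pk":{"S":"<PK value>"}}'`. You can then compare the items with the source row. The following sketch converts DynamoDB's typed JSON into plain values. It handles only the scalar types this table is assumed to use (S, N, BOOL, NULL), and the expected values come from whatever source row you inserted.

```python
import json


def from_dynamodb_json(item: dict) -> dict:
    """Convert a low-level DynamoDB item ({"attr": {"S": "..."}}) to plain values."""
    plain = {}
    for name, typed in item.items():
        type_tag, value = next(iter(typed.items()))
        if type_tag == "S":
            plain[name] = value
        elif type_tag == "N":
            # Numbers arrive as strings; keep whole numbers as int.
            plain[name] = int(value) if value.lstrip("-").isdigit() else float(value)
        elif type_tag == "BOOL":
            plain[name] = value
        elif type_tag == "NULL":
            plain[name] = None
        else:
            raise ValueError(f"unsupported type {type_tag!r} for {name!r}")
    return plain


def matches_source(cli_output: str, expected: dict) -> bool:
    """True if the query returned items and every item has the expected values."""
    items = [from_dynamodb_json(i) for i in json.loads(cli_output)["Items"]]
    return bool(items) and all(
        all(item.get(k) == v for k, v in expected.items()) for item in items
    )


if __name__ == "__main__":
    sample = '{"Items": [{"PK": {"S": "U1#A1#S1"}, "SK": {"S": "1"}, "Status_Code": {"N": "200"}}], "Count": 1}'
    print(matches_source(sample, {"PK": "U1#A1#S1", "Status_Code": 200}))
```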

Monitor the AWS DMS replication tasks

Monitoring is crucial for maintaining the reliability, availability, and performance of AWS DMS, especially for large migrations.

AWS offers several tools to monitor your AWS DMS tasks. For example, you can use CloudWatch to collect, track, and monitor AWS resources using metrics. It's also important to verify that tasks are created with the correct mapping rules for the schemas and database objects. Refer to the following resources for further details on monitoring techniques and things to watch out for when monitoring AWS DMS tasks:

Monitoring AWS DMS tasks

Logging task settings

Troubleshooting migration tasks in AWS Database Migration Service

How do I use Table Statistics to Monitor my task in AWS Database Migration Service (DMS)?

Setting up Amazon CloudWatch alarms for AWS DMS resources using the AWS CLI

Logging Migration Hub API calls with AWS CloudTrail
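As one example of the CloudWatch approach, the following sketch builds a request for `aws cloudwatch put-metric-alarm --cli-input-json`. The alarm fires when the CDCLatencyTarget metric for the task stays high. The alarm name, dimension values, and the 600-second threshold are hypothetical. In particular, confirm in the CloudWatch console which identifier your task's metrics are published under before using it as the ReplicationTaskIdentifier value. To get notified, add an AlarmActions list with an Amazon SNS topic ARN.

```python
import json

# Hypothetical names and thresholds; adjust to your task and tolerance.
alarm = {
    "AlarmName": "dms-statushistory-cdc-latency-target",
    "Namespace": "AWS/DMS",
    "MetricName": "CDCLatencyTarget",  # seconds behind on applying changes to the target
    "Dimensions": [
        {"Name": "ReplicationInstanceIdentifier", "Value": "dms-replication-instance"},
        {"Name": "ReplicationTaskIdentifier", "Value": "<task-identifier-from-cloudwatch>"},
    ],
    "Statistic": "Average",
    "Period": 300,              # 5-minute evaluation windows
    "EvaluationPeriods": 3,     # alarm after 15 minutes above the threshold
    "Threshold": 600,
    "ComparisonOperator": "GreaterThanThreshold",
    "TreatMissingData": "notBreaching",
}

if __name__ == "__main__":
    print(json.dumps(alarm, indent=2))
    print("aws cloudwatch put-metric-alarm --cli-input-json file://alarm.json")
```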

Clean up

To avoid unnecessary charges, clean up any resources that you built as part of this architecture that are no longer in use. This includes stopping the EC2 instance, deleting the IAM policy, and deleting DynamoDB tables and AWS DMS resources like the replication instance you created as a part of this post. Additionally, after the migration is complete, if you don’t need to retain the source SQL Server database or tables, you can drop them.
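Cleanup order matters, because some AWS DMS and IAM resources can't be deleted while others still depend on them. The following sketch prints the AWS CLI commands in a dependency-safe order, using placeholder identifiers. It omits dropping the SQL Server tables, which you would do from SQL Server itself.

```python
# Placeholder identifiers; replace with your own resource ARNs and names.
RESOURCES = {
    "task_arn": "<replication-task-arn>",
    "source_endpoint_arn": "<source-endpoint-arn>",
    "target_endpoint_arn": "<target-endpoint-arn>",
    "instance_arn": "<replication-instance-arn>",
    "table_name": "DDB_StatusHistory_Logs",
    "role_name": "<dms-dynamodb-role-name>",
    "policy_arn": "<dms-dynamodb-policy-arn>",
    "instance_id": "<ec2-instance-id>",
}


def cleanup_commands(r: dict) -> list:
    """Return AWS CLI cleanup commands in dependency order.

    The task goes first (stop it and wait for the stopped status before
    deleting), then the endpoints and replication instance it used. The policy
    is detached before the role and the policy are deleted.
    """
    return [
        f"aws dms stop-replication-task --replication-task-arn {r['task_arn']}",
        f"aws dms delete-replication-task --replication-task-arn {r['task_arn']}",
        f"aws dms delete-endpoint --endpoint-arn {r['source_endpoint_arn']}",
        f"aws dms delete-endpoint --endpoint-arn {r['target_endpoint_arn']}",
        f"aws dms delete-replication-instance --replication-instance-arn {r['instance_arn']}",
        f"aws dynamodb delete-table --table-name {r['table_name']}",
        f"aws iam detach-role-policy --role-name {r['role_name']} --policy-arn {r['policy_arn']}",
        f"aws iam delete-role --role-name {r['role_name']}",
        f"aws iam delete-policy --policy-arn {r['policy_arn']}",
        f"aws ec2 stop-instances --instance-ids {r['instance_id']}",
    ]


if __name__ == "__main__":
    print("\n".join(cleanup_commands(RESOURCES)))
```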

Conclusion

In this post, you learned how to migrate data from SQL Server to DynamoDB using AWS DMS, transforming the data through a staging table. The approach we used to create the SQL Server staging table is specific to the data access patterns in our example. You can adapt it to stage any RDBMS table, with attributes defined according to your application's required data access patterns on DynamoDB. You can then use AWS DMS to migrate data from the source SQL Server staging table to the target DynamoDB table. Continuous replication keeps downtime during the migration to a minimum.

If you have any questions or suggestions, leave your feedback in the comments section.

About the Authors

Karthick Jayavelu is a Senior Database Consultant at Amazon Web Services. He works with AWS customers offering technical support and designing customer solutions on database projects, as well as helping them on their journey to migrate and modernize their database solutions from on premises to AWS.

Poulami Maity is a Database Specialist Solutions Architect at Amazon Web Services. She works with AWS customers to help them migrate and modernize their existing databases to the AWS Cloud.

Vanshika Nigam is a Solutions Architect with the Database Migration Accelerator team at Amazon Web Services and has over 5 years of Amazon RDS experience. She works as an Amazon DMA Advisor to help AWS customers accelerate migrations of their on-premises, commercial databases to AWS Cloud database solutions.
